I. Introduction

II. Hands-on Programming

1. Classifying the make_blobs dataset with Bernoulli naive Bayes

import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs
from sklearn.naive_bayes import BernoulliNB
from sklearn.model_selection import train_test_split

# Generate 500 two-feature samples in 5 clusters and split them into train and test sets
X, y = make_blobs(n_samples=500, centers=5, random_state=8)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=8)

# Fit the Bernoulli naive Bayes classifier
nb = BernoulliNB()
nb.fit(X_train, y_train)

# Build a grid that covers the data, with a 0.5 margin on each side
x_min, x_max = X[:, 0].min() - 0.5, X[:, 0].max() + 0.5
y_min, y_max = X[:, 1].min() - 0.5, X[:, 1].max() + 0.5
xx, yy = np.meshgrid(np.arange(x_min, x_max, .02), np.arange(y_min, y_max, .02))

# Predict the class of every grid point to colour the decision regions
z = nb.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)
plt.pcolormesh(xx, yy, z, cmap=plt.cm.Pastel1)

# Training points are drawn as circles, test points as stars
plt.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=plt.cm.cool, edgecolors='k')
plt.scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap=plt.cm.cool, marker='*')
plt.xlim(xx.min(), xx.max())
plt.ylim(yy.min(), yy.max())
plt.show()

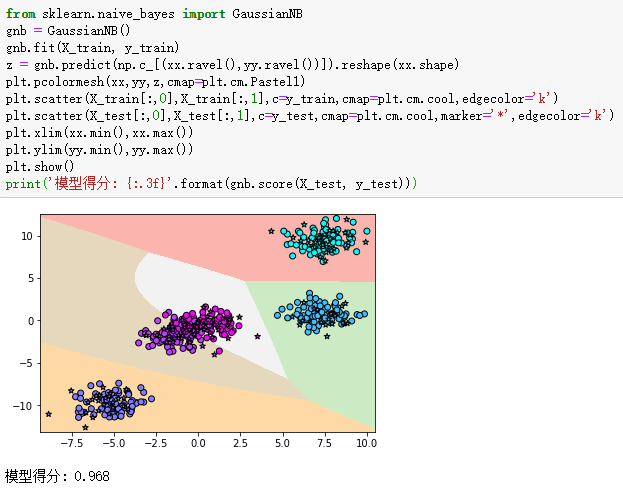
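A possible explanation for how the Bernoulli model treats this continuous data: in scikit-learn, `BernoulliNB` binarizes every feature before fitting, and its `binarize` parameter defaults to `0.0`. Each value is therefore reduced to "greater than zero or not", so a two-feature model can distinguish at most four different inputs. The following is a minimal sketch that checks this by thresholding the features by hand. It reuses the same `make_blobs` settings as this section; the names `nb_default`, `nb_manual`, `X_train_bin` and `X_test_bin` are illustrative and not part of the original code.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import BernoulliNB

# Same data and split as in the example of this section.
X, y = make_blobs(n_samples=500, centers=5, random_state=8)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=8)

# Default model: binarize=0.0 thresholds every feature at zero internally.
nb_default = BernoulliNB().fit(X_train, y_train)

# Manual equivalent: threshold by hand, then switch internal binarization off.
X_train_bin = (X_train > 0.0).astype(float)
X_test_bin = (X_test > 0.0).astype(float)
nb_manual = BernoulliNB(binarize=None).fit(X_train_bin, y_train)

pred_default = nb_default.predict(X_test)
pred_manual = nb_manual.predict(X_test_bin)
same_predictions = bool(np.array_equal(pred_default, pred_manual))

# Two binary features allow at most 2 ** 2 = 4 distinct inputs.
n_patterns = len(np.unique(X_test_bin, axis=0))

print("identical predictions:", same_predictions)
print("distinct binarized inputs:", n_patterns)
print("test accuracy: {:.3f}".format(nb_default.score(X_test, y_test)))
```

If the two models agree, the decision regions of the default model can only change where a feature crosses zero, which is worth keeping in mind when reading the plot.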

2. Classifying the make_blobs dataset with Gaussian naive Bayes

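from sklearn.naive_bayes import GaussianNB

# Fit Gaussian naive Bayes on the same training split as above
gnb = GaussianNB()
gnb.fit(X_train, y_train)

# Reuse the grid (xx, yy) built in the previous example to colour the decision regions
z = gnb.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)
plt.pcolormesh(xx, yy, z, cmap=plt.cm.Pastel1)

# Training points are drawn as circles, test points as stars
plt.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap=plt.cm.cool, edgecolors='k')
plt.scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap=plt.cm.cool, marker='*', edgecolors='k')
plt.xlim(xx.min(), xx.max())
plt.ylim(yy.min(), yy.max())
plt.show()

# Mean accuracy on the held-out test set
print('Model score: {:.3f}'.format(gnb.score(X_test, y_test)))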
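To make the Gaussian model less of a black box, the sketch below re-implements its decision rule with NumPy: estimate a prior, a mean and a variance per class and feature, then pick the class with the largest log prior plus summed log densities. The helper names `fit_gaussian_nb` and `predict_gaussian_nb` are hypothetical, and the sketch deliberately omits the small variance-smoothing term (`var_smoothing`) that scikit-learn adds, so it should be read as an approximation of `GaussianNB` rather than its actual implementation.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Same data and split as in the example of this section.
X, y = make_blobs(n_samples=500, centers=5, random_state=8)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=8)


def fit_gaussian_nb(X, y):
    """Estimate per-class priors, feature means and feature variances."""
    classes = np.unique(y)
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    variances = np.array([X[y == c].var(axis=0) for c in classes])
    return classes, priors, means, variances


def predict_gaussian_nb(model, X):
    """Pick the class with the largest log prior plus summed log densities."""
    classes, priors, means, variances = model
    # Broadcasting gives arrays of shape (n_samples, n_classes, n_features).
    diff = X[:, None, :] - means[None, :, :]
    log_density = -0.5 * (np.log(2.0 * np.pi * variances)[None, :, :]
                          + diff ** 2 / variances[None, :, :])
    joint = np.log(priors)[None, :] + log_density.sum(axis=2)
    return classes[np.argmax(joint, axis=1)]


manual_model = fit_gaussian_nb(X_train, y_train)
manual_pred = predict_gaussian_nb(manual_model, X_test)

# Compare against scikit-learn's classifier on the same test points.
gnb = GaussianNB().fit(X_train, y_train)
agreement = np.mean(manual_pred == gnb.predict(X_test))
print("agreement with GaussianNB: {:.3f}".format(agreement))
```

Because each feature is modelled by its own one-dimensional Gaussian, the per-feature log densities are simply summed; that sum is the "naive" independence assumption in code form.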

3. Learning curve for Gaussian naive Bayes

from sklearn.model_selection import learning_curve
from sklearn.model_selection import ShuffleSplit

def plot_learning_curve(estimator, title, X, y, ylim=None, cv=None,
                        n_jobs=1, train_sizes=np.linspace(.1, 1.0, 5)):
    """Plot mean training and cross-validation scores against training-set size."""
    plt.figure()
    plt.title(title)
    if ylim is not None:
        plt.ylim(*ylim)
    plt.xlabel("Training examples")
    plt.ylabel("Score")
    # Scores have one row per training-set size and one column per CV split
    train_sizes, train_scores, test_scores = learning_curve(
        estimator, X, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes)
    train_scores_mean = np.mean(train_scores, axis=1)
    test_scores_mean = np.mean(test_scores, axis=1)
    plt.grid()
    plt.plot(train_sizes, train_scores_mean, 'o-', color="r", label="Training score")
    plt.plot(train_sizes, test_scores_mean, 'o-', color="g", label="Cross-validation score")
    plt.legend(loc="lower right")
    return plt

title = "Learning Curves (Naive Bayes)"
# 100 random splits, each holding out 20% of the samples for validation
cv = ShuffleSplit(n_splits=100, test_size=0.2, random_state=0)
estimator = GaussianNB()
plot_learning_curve(estimator, title, X, y, ylim=(0.9, 1.01), cv=cv, n_jobs=4)
plt.show()
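The plot only shows averages, so it can help to look at what `ShuffleSplit` and `learning_curve` return. The sketch below is an illustration under stated assumptions: it uses 10 splits instead of 100 purely to run quickly, and it prints the spread across splits, which the plotting function in this section does not display.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import ShuffleSplit, learning_curve
from sklearn.naive_bayes import GaussianNB

# Same data as in the example of this section.
X, y = make_blobs(n_samples=500, centers=5, random_state=8)

# Fewer splits than the 100 used in the section, only to keep this sketch fast.
cv = ShuffleSplit(n_splits=10, test_size=0.2, random_state=0)

# Each split is a fresh random 80/20 partition of the 500 samples.
train_idx, test_idx = next(cv.split(X))
print("train/test sizes per split:", len(train_idx), len(test_idx))

train_sizes, train_scores, test_scores = learning_curve(
    GaussianNB(), X, y, cv=cv, train_sizes=np.linspace(0.1, 1.0, 5))

# One row per training-set size, one column per split.
print("score matrix shape:", train_scores.shape)

test_mean = test_scores.mean(axis=1)
test_std = test_scores.std(axis=1)
for n, mean, std in zip(train_sizes, test_mean, test_std):
    print("n={:3d}  cv score = {:.3f} +/- {:.3f}".format(int(n), mean, std))
```

The standard deviation per row could be drawn as a shaded band around each curve with `plt.fill_between` if a visual indication of the variability is wanted.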