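POST requests

To send a POST request from a Scrapy spider, override

    def start_requests(self):

build the form parameters there, and then

    yield scrapy.FormRequest(url=url,formdata=data,callback=self.parse)

to issue the request. FormRequest() sends formdata as a form-encoded POST body.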

    import scrapy


    class PostSpider(scrapy.Spider):
        name = 'post'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://fanyi.baidu.com/sug']

        def start_requests(self):
            data = {
                'kw': 'dog'
            }
            for url in self.start_urls:
                # scrapy.FormRequest() sends a POST request
                yield scrapy.FormRequest(url=url, formdata=data, callback=self.parse)

        def parse(self, response):
            print(response.text)
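To see roughly what a form POST like the one above puts on the wire, here is a minimal sketch that builds an equivalent request with the standard library only. It illustrates the encoding and is not Scrapy's internal code; `build_form_post` is a hypothetical helper, and nothing is sent over the network.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_form_post(url, formdata):
    """Build (but do not send) a form-encoded POST request."""
    # The dict is serialized as key=value pairs joined by '&'.
    body = urlencode(formdata).encode("utf-8")
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    # urllib treats any request that carries a body as a POST.
    return Request(url, data=body, headers=headers)


req = build_form_post("https://fanyi.baidu.com/sug", {"kw": "dog"})
print(req.get_method(), req.data)
```

The form data travels in the request body rather than in the URL, which is why the spider passes `formdata` instead of appending a query string.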

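Passing data between requests

The core of passing data between requests in Scrapy is

    meta={'item':item}

meta is a dict that carries the item (or any other value) along with a request.

The request for the next URL is yielded with a callback, and that callback reads the dict back from response.meta once the page has been fetched.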

    import scrapy
    from moviePro.items import MovieproItem


    class MovieSpider(scrapy.Spider):
        name = 'movie'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.4567tv.tv/frim/index1.html']

        # Parse the data on the detail page
        def parse_detail(self, response):
            # response.meta is the meta dict that was passed with the request
            item = response.meta['item']
            actor = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[3]/a/text()').extract_first()
            item['actor'] = actor
            yield item

        def parse(self, response):
            li_list = response.xpath('//li[@class="col-md-6 col-sm-4 col-xs-3"]')
            for li in li_list:
                item = MovieproItem()
                name = li.xpath('./div/a/@title').extract_first()
                detail_url = 'https://www.4567tv.tv' + li.xpath('./div/a/@href').extract_first()
                item['name'] = name
                # meta carries the item to the callback, which reads it from response.meta
                yield scrapy.Request(url=detail_url, callback=self.parse_detail, meta={'item': item})

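# Here the URLs defined in start_urls are requested directly

Note: the fields stored on the item must match the ones declared in items.py; items.py is the source of truth for field names.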
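The hand-off through meta can be modeled without Scrapy at all. The following is a toy sketch under stated assumptions: `FakeRequest`, `FakeResponse`, and `crawl` are hypothetical stand-ins written for this illustration, and page bodies come from a dict instead of the network. It only shows how an item started in one callback is finished in the next.

```python
class FakeRequest:
    """Hypothetical stand-in for scrapy.Request: a URL, a callback, and meta."""
    def __init__(self, url, callback, meta=None):
        self.url = url
        self.callback = callback
        self.meta = meta or {}


class FakeResponse:
    """Hypothetical stand-in for a response; it exposes the request's meta."""
    def __init__(self, request, text):
        self.url = request.url
        self.text = text
        self.meta = request.meta


def parse(response):
    # List page: start the item, then hand it to the detail callback via meta.
    item = {"name": response.text}
    yield FakeRequest(response.url + "/detail", parse_detail, meta={"item": item})


def parse_detail(response):
    # Detail page: pick the same item back up and finish it.
    item = response.meta["item"]
    item["actor"] = response.text
    yield item


def crawl(start_url, pages):
    """Drive the callbacks in order, looking page bodies up in a dict."""
    queue = [FakeRequest(start_url, parse)]
    items = []
    while queue:
        request = queue.pop(0)
        response = FakeResponse(request, pages[request.url])
        for result in request.callback(response):
            if isinstance(result, FakeRequest):
                queue.append(result)
            else:
                items.append(result)
    return items


pages = {"list": "Some Movie", "list/detail": "Some Actor"}
items = crawl("list", pages)
print(items)
```

Because the same dict object rides along in meta, the fields set in `parse` are still present when `parse_detail` yields the finished item.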

items.py

    import scrapy


    class MovieproItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        name = scrapy.Field()
        actor = scrapy.Field()

pipelines.py

    import pymysql


    class MovieproPipeline(object):
        conn = None
        cursor = None

        def open_spider(self, spider):
            print("Spider started")
            self.conn = pymysql.Connect(host='127.0.0.1', port=3306, user='root',
                                        password="", db='movie', charset='utf8')

        def process_item(self, item, spider):
            self.cursor = self.conn.cursor()
            try:
                # Parameterized query: the driver escapes the values
                self.cursor.execute('insert into av values(%s, %s)',
                                    (item['name'], item['actor']))
                self.conn.commit()
            except Exception as e:
                print(e)
                self.conn.rollback()
            return item  # pass the item on to any later pipeline

        def close_spider(self, spider):
            print('Spider finished')
            if self.cursor:
                self.cursor.close()
            self.conn.close()
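The pipeline lifecycle (open once, write per item, close once) can be tried without a MySQL server. This is a minimal sketch that swaps in the standard library's sqlite3 for pymysql; the class name `SqliteMoviePipeline` and the table name `movie` are assumptions made for the illustration. Note that sqlite3 uses `?` placeholders where pymysql uses `%s`.

```python
import sqlite3


class SqliteMoviePipeline:
    """Hypothetical pipeline with the same three hooks, backed by sqlite3."""

    def open_spider(self, spider):
        # One connection for the whole crawl.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE movie (name TEXT, actor TEXT)")

    def process_item(self, item, spider):
        try:
            # Placeholders let the driver handle quotes inside the values.
            self.conn.execute(
                "INSERT INTO movie VALUES (?, ?)", (item["name"], item["actor"])
            )
            self.conn.commit()
        except sqlite3.Error:
            self.conn.rollback()
        return item  # hand the item on to any later pipeline

    def close_spider(self, spider):
        self.conn.close()


pipeline = SqliteMoviePipeline()
pipeline.open_spider(spider=None)
pipeline.process_item({"name": 'The "Best" Movie', "actor": "O'Neil"}, spider=None)
rows = pipeline.conn.execute("SELECT name, actor FROM movie").fetchall()
print(rows)
pipeline.close_spider(spider=None)
```

Passing the values separately from the SQL text means a title containing quotation marks is stored intact instead of breaking the statement.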

When running the spider you can leave out --nolog and instead set LOG_LEVEL = "ERROR" in settings.py.

You can also write the log to a file by setting LOG_FILE = "./log.txt" in settings.py.

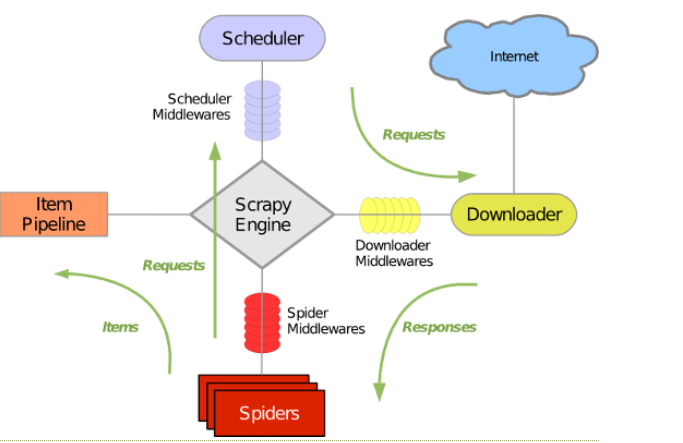

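The five core components

Of these, the downloader, through its downloader middleware, matters most here. The middleware has three key methods: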

(1)

    def process_request(self, request, spider):
        return None

It should return None, much like a Django middleware, and it runs before the request is sent.

Use it to swap the User-Agent on each request: request.headers['User-Agent'] = random.choice([ ...])
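Why returning None matters can be shown with a simplified model of a middleware chain. This sketch is an assumption-laden toy and not Scrapy's implementation: `SetUserAgent`, `ServeFromCache`, and `run_process_request` are hypothetical names, requests are plain dicts, and only two of the possible return values (None and a response) are modeled.

```python
class SetUserAgent:
    """Hypothetical middleware: edits the request and returns None."""
    def process_request(self, request, spider):
        request["headers"]["User-Agent"] = "example-agent/1.0"
        return None


class ServeFromCache:
    """Hypothetical middleware: returns a response, which stops the chain."""
    def process_request(self, request, spider):
        return {"url": request["url"], "body": "cached page"}


def run_process_request(middlewares, request, spider=None):
    """Call each middleware in order until one returns something other than None."""
    for middleware in middlewares:
        result = middleware.process_request(request, spider)
        if result is not None:
            return result  # short-circuit: the download is skipped
    return None  # every middleware passed; the request goes on to be downloaded


request = {"url": "http://example.com", "headers": {}}
passed = run_process_request([SetUserAgent()], request)
short_circuited = run_process_request([SetUserAgent(), ServeFromCache()], request)
print(passed, short_circuited)
```

A middleware that only tweaks headers should therefore return None, so the modified request continues on to the downloader.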

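(2)

    def process_response(self, request, response, spider):
        return response

It must return a response, which is handed to the spider for parsing.

This is the place to drive Selenium. If the browser were driven from the spider, every page would need its own bro (browser object); here a single shared instance is enough.

It wraps the page source it obtained in

    return HtmlResponse(url=spider.bro.current_url,body=page_text,encoding='utf-8',request=request)

and returns that to the spider for parsing.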

(3)

    def process_exception(self, request, exception, spider):
        pass

It is called when a request raises an exception.

Use it with a proxy pool to set a proxy IP on the failed request:

    request.meta['proxy'] = random.choice([ ...])
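Picking a proxy that matches the request's scheme can be factored into a small helper. The sketch below is one possible shape, not part of Scrapy: `choose_proxy` is a hypothetical function, the addresses are the placeholder proxies used in this post, and prefixing the scheme to the address is an assumption about the format the proxy value should take.

```python
import random
from urllib.parse import urlsplit

# Placeholder pools; these addresses are examples only and may not be live.
PROXY_HTTP = ["153.180.102.104:80", "195.208.131.189:56055"]
PROXY_HTTPS = ["120.83.49.90:9000", "95.189.112.214:35508"]


def choose_proxy(url, http_pool=PROXY_HTTP, https_pool=PROXY_HTTPS):
    """Return a proxy URL from the pool that matches the scheme of `url`."""
    # urlsplit is sturdier than url.split(':')[0] for reading the scheme.
    if urlsplit(url).scheme == "http":
        return "http://" + random.choice(http_pool)
    return "https://" + random.choice(https_pool)


print(choose_proxy("http://war.163.com/"))
print(choose_proxy("https://fanyi.baidu.com/sug"))
```

Inside process_exception the result would be assigned to request.meta['proxy'] before the request is retried.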

Usage

    import random


    class MiddleproDownloaderMiddleware(object):
        user_agent_list = [
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
            "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
            "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
            "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
            "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
            "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
            "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
            "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
            "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
            "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
            "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
            "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
        ]
        # Proxy IPs available for selection
        PROXY_http = [
            '153.180.102.104:80',
            '195.208.131.189:56055',
        ]
        PROXY_https = [
            '120.83.49.90:9000',
            '95.189.112.214:35508',
        ]

        # Intercepts every request that has not raised an exception
        def process_request(self, request, spider):
            # Disguise the request with a User-Agent picked from the UA pool
            print('this is process_request')
            request.headers['User-Agent'] = random.choice(self.user_agent_list)
            print(request.headers['User-Agent'])
            return None

        # Intercepts every response
        def process_response(self, request, response, spider):
            return response

        # Intercepts requests that raised an exception
        def process_exception(self, request, exception, spider):
            print('this is process_exception!')
            # The proxy value should be a full URL, so the scheme is prefixed
            if request.url.split(':')[0] == 'http':
                request.meta['proxy'] = 'http://' + random.choice(self.PROXY_http)
            else:
                request.meta['proxy'] = 'https://' + random.choice(self.PROXY_https)
            # Return the request so it is rescheduled with the new proxy
            return request

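Using Selenium in a middleware

Note: a middleware only takes effect once it is enabled, so uncomment the DOWNLOADER_MIDDLEWARES setting in settings.py (p56-58)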

    DOWNLOADER_MIDDLEWARES = {
        'wangyiPro.middlewares.WangyiproDownloaderMiddleware': 543,
    }

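Instantiate a bro (browser) object on the spider. After each request completes, the downloader middleware's process_response(self, request, response, spider) is called.

It takes the page source obtained from the browser and uses

    return HtmlResponse(url=spider.bro.current_url,body=page_text,encoding='utf-8',request=request)

to return it to the spider.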

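    import scrapy
    from selenium import webdriver

    '''
    Workflow for using selenium in scrapy:
    1. Create a browser object in the spider's constructor (as an attribute of the spider)
    2. Override the spider's closed(self, spider) method and quit the browser there
    3. In the downloader middleware's process_response, get the browser object through the spider argument
    4. In process_response, write the browser-automation code (to get the dynamically loaded page source)
    5. Instantiate a response object that wraps the page source returned by page_source
    6. Return that new response object
    '''


    class WangyiSpider(scrapy.Spider):
        name = 'wangyi'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://war.163.com/']

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)
            # The path separators were lost in this post; use your own chromedriver path.
            # executable_path is the Selenium 3 style; Selenium 4 uses a Service object.
            self.bro = webdriver.Chrome(executable_path=r'C:UsersAdministratorDesktop爬虫+数据day_03_爬虫chromedriver.exe')

        def parse(self, response):
            div_list = response.xpath('//div[@class="data_row news_article clearfix "]')
            for div in div_list:
                title = div.xpath('.//div[@class="news_title"]/h3/a/text()').extract_first()
                print(title)

        def closed(self, spider):
            print('Closing the browser object!')
            self.bro.quit()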

The process_response method of the downloader middleware:

    from time import sleep

    from scrapy.http import HtmlResponse


    class WangyiproDownloaderMiddleware(object):

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Must either;
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            print('About to return a new response object!')
            # Load the page in the browser to get the dynamically loaded data
            bro = spider.bro
            bro.get(url=request.url)
            sleep(3)
            # page_source now includes the dynamically loaded news data
            page_text = bro.page_source
            sleep(3)
            return HtmlResponse(url=spider.bro.current_url, body=page_text, encoding='utf-8', request=request)
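The flow in that method (reuse the spider's browser, load the URL, read back the rendered source) can be exercised without Chrome or Scrapy installed. This is a sketch with stand-ins only: `StubBrowser`, `StubSpider`, and `render_with_browser` are hypothetical names, and the "rendered" HTML comes from a dict instead of a real page.

```python
class StubBrowser:
    """Hypothetical stand-in for the Selenium driver stored on the spider."""
    def __init__(self, rendered_pages):
        self.rendered_pages = rendered_pages
        self.current_url = None
        self.page_source = ""
        self.get_calls = 0

    def get(self, url):
        # A real driver would load the page and run its JavaScript here.
        self.get_calls += 1
        self.current_url = url
        self.page_source = self.rendered_pages[url]


class StubSpider:
    """Hypothetical spider that owns one browser for the whole crawl."""
    def __init__(self, browser):
        self.bro = browser


def render_with_browser(request_url, spider):
    """Load the URL in the spider's browser and return (url, rendered html)."""
    bro = spider.bro      # reuse the single shared browser
    bro.get(request_url)  # load the page in that browser
    return bro.current_url, bro.page_source


spider = StubSpider(StubBrowser({"http://war.163.com/": "<h3>news</h3>"}))
url, html = render_with_browser("http://war.163.com/", spider)
print(url, html, spider.bro.get_calls)
```

In the real middleware the returned pair would be wrapped in an HtmlResponse, and the browser created once on the spider is shared by every response that passes through.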