A method for generating short, 8-character UUIDs circulates online. The code comes from:

Generating short 8-character UUIDs in Java

    import java.util.UUID;

    public class ShortUuid { // enclosing class name is illustrative

        // 62 symbols: a-z (indices 0-25), 0-9 (26-35), A-Z (36-61)
        public static String[] chars = new String[] {
            "a", "b", "c", "d", "e", "f", "g", "h", "i", "j", "k", "l", "m",
            "n", "o", "p", "q", "r", "s", "t", "u", "v", "w", "x", "y", "z",
            "0", "1", "2", "3", "4", "5", "6", "7", "8", "9",
            "A", "B", "C", "D", "E", "F", "G", "H", "I", "J", "K", "L", "M",
            "N", "O", "P", "Q", "R", "S", "T", "U", "V", "W", "X", "Y", "Z" };

        public static String generateShortUuid() {
            StringBuilder shortBuffer = new StringBuilder();
            // 32 hex digits of a random UUID with the dashes removed
            String uuid = UUID.randomUUID().toString().replace("-", "");
            for (int i = 0; i < 8; i++) {
                // Each output character consumes 4 hex digits (a 16-bit value)
                String str = uuid.substring(i * 4, i * 4 + 4);
                int x = Integer.parseInt(str, 16);
                // 0x3E = 62: reduce the value to an index into chars
                shortBuffer.append(chars[x % 0x3E]);
            }
            return shortBuffer.toString();
        }
    }
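The sketch below traces the mapping step by step without randomness. It applies the same chunk-and-modulo logic to a fixed 32-digit hex string in place of a fresh UUID. `ShortIdTrace` and `fromHex` are hypothetical names made up for this illustration. The alphabet order matches the `chars` array above.

```java
final class ShortIdTrace {
    // Same 62-symbol alphabet and order as the chars array: a-z, 0-9, A-Z.
    private static final String ALPHABET =
        "abcdefghijklmnopqrstuvwxyz0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ";

    // Hypothetical helper: maps 32 hex digits to 8 characters the same way
    // generateShortUuid() maps the dash-free UUID string.
    static String fromHex(String hex32) {
        if (hex32.length() != 32) {
            throw new IllegalArgumentException("expected 32 hex digits");
        }
        StringBuilder out = new StringBuilder(8);
        for (int i = 0; i < 8; i++) {
            // Each 4-digit chunk is a 16-bit value in [0, 65535].
            int x = Integer.parseInt(hex32.substring(i * 4, i * 4 + 4), 16);
            // Reduce modulo 62 (0x3E) to pick one alphabet symbol.
            out.append(ALPHABET.charAt(x % 62));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // 0x0123 = 291   -> 291 % 62 = 43 -> 'H'
        // 0x4567 = 17767 -> 35 -> '9'
        // 0x89ab = 35243 -> 27 -> '1'
        // 0xcdef = 52719 -> 19 -> 't'
        System.out.println(fromHex("0123456789abcdef0123456789abcdef")); // prints H91tH91t
    }
}
```

Since 65536 = 62 × 1057 + 2, the remainders 0 and 1 (`a` and `b`) each occur 1058 times out of 65536, while every other symbol occurs 1057 times. The output is therefore very slightly non-uniform.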

To find out how many IDs can be generated before a duplicate appears, I wrote a fairly simple test:

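The test uses a thread pool and a database connection pool. Each thread generates one million IDs and inserts them in batches of 70,000 rows. The ID column is the table's primary key, so a failed insert means a duplicate ID was generated.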
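A primary-key violation is not the only way to detect collisions. A `HashSet` can catch duplicates in memory, because `Set.add` returns `false` when the element is already present. The sketch below is an alternative for illustration only; it is not the method used in this test. `DuplicateCounter` and `countDuplicates` are hypothetical names. Memory use grows linearly with the number of IDs, so tens of millions of entries need a large heap.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.UUID;
import java.util.function.Supplier;

final class DuplicateCounter {
    private static final String ALPHABET =
        "abcdefghijklmnopqrstuvwxyz0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ";

    // Same algorithm as generateShortUuid(), repeated so this file compiles on its own.
    static String generateShortUuid() {
        String uuid = UUID.randomUUID().toString().replace("-", "");
        StringBuilder sb = new StringBuilder(8);
        for (int i = 0; i < 8; i++) {
            int x = Integer.parseInt(uuid.substring(i * 4, i * 4 + 4), 16);
            sb.append(ALPHABET.charAt(x % 62));
        }
        return sb.toString();
    }

    // Draws n IDs from the supplier and counts how many had already been seen.
    // String.equals is case-sensitive, unlike MySQL's default "_ci" collations.
    static long countDuplicates(Supplier<String> ids, long n) {
        Set<String> seen = new HashSet<>();
        long duplicates = 0;
        for (long i = 0; i < n; i++) {
            if (!seen.add(ids.get())) {
                duplicates++;
            }
        }
        return duplicates;
    }

    public static void main(String[] args) {
        long n = 1_000_000;
        long dups = countDuplicates(DuplicateCounter::generateShortUuid, n);
        System.out.println(n + " IDs, duplicates: " + dups);
    }
}
```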

If you need to insert more rows per statement, or the insert fails with the following error:

Packet for query is too large (4,800,048 > 4,194,304)

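Add max_allowed_packet = 67108864 under the [mysqld] section of my.ini. That is 67108864 bytes = 64 MB; the default here is 4194304 bytes, i.e. 4 MB. A change in my.ini takes effect only after the MySQL service is restarted.

Changing the value from the command line needs no restart. However, it only applies to connections opened afterwards and is lost when the server restarts. For example, to set it to 500 MB:

    mysql> set global max_allowed_packet = 500*1024*1024;

To check the current value of max_allowed_packet, run:

    show VARIABLES like '%max_allowed_packet%';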
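Raising max_allowed_packet is one option. The other is to keep each multi-row INSERT small by splitting the list into smaller batches before passing it to the mapper. The sketch below assumes that packet size grows roughly in proportion to the number of rows. The `Batches.partition` helper is hypothetical; Guava's `Lists.partition` offers similar behavior if that library is already on the classpath.

```java
import java.util.ArrayList;
import java.util.List;

final class Batches {
    // Splits items into consecutive sublists of at most `size` elements.
    // The sublists are views of the original list, so don't modify it while they are in use.
    static <T> List<List<T>> partition(List<T> items, int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int from = 0; from < items.size(); from += size) {
            int to = from + Math.min(size, items.size() - from);
            batches.add(items.subList(from, to));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> rows = new ArrayList<>();
        for (int i = 0; i < 10; i++) rows.add(i);
        // In the test, each batch would go to one mapper call, e.g. testMapper.saveTest(batch).
        for (List<Integer> batch : partition(rows, 4)) {
            System.out.println(batch); // [0, 1, 2, 3], then [4, 5, 6, 7], then [8, 9]
        }
    }
}
```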

Here is the insert code used for the test:

    // Needs java.util.*, java.util.concurrent.* and Spring's DefaultManagedAwareThreadFactory
    // (org.springframework.scheduling.concurrent); testMapper is the injected MyBatis mapper.
    private static ThreadPoolExecutor threadPoolExecutor = new ThreadPoolExecutor(
            50, 100, 0L, TimeUnit.MILLISECONDS,
            new LinkedBlockingQueue<Runnable>(1024),
            new DefaultManagedAwareThreadFactory(),
            new ThreadPoolExecutor.AbortPolicy());

    @Override
    public void saveTest() {
        threadPoolExecutor.execute(new saveUUID());
    }

    /**
     * Task that generates IDs and saves them
     */
    class saveUUID implements Runnable {
        public saveUUID() {
        }

        @Override
        public void run() {
            System.out.println("===================>>>>>" + Thread.currentThread().getName());
            List<Map<String, Object>> userList = new ArrayList<>();
            int index = 0;
            for (int i = 0; i < 1000000; i++) {
                // Reset every iteration, so the printed time is essentially the insert duration
                long startTime = System.currentTimeMillis();
                Map<String, Object> map = new HashMap<>();
                map.put("id", getUUID8()); // author's wrapper, presumably around generateShortUuid()
                userList.add(map);
                index++;
                if (index == 70000) {
                    // One multi-row INSERT; a duplicate primary key makes it throw
                    testMapper.saveTest(userList);
                    // Always reset the batch so index never runs past 70000
                    userList.clear();
                    index = 0;
                    long time = System.currentTimeMillis() - startTime;
                    System.out.println(Thread.currentThread().getName() + "======>>>>" + time);
                }
            }
            if (!userList.isEmpty()) {
                testMapper.saveTest(userList); // insert the remaining rows
            }
        }
    }

MyBatis mapper XML:

    <insert id="saveTest" parameterType="java.util.List">
        <!-- the generated ID is written to both id and user_name -->
        insert into tb_user(id, user_name) values
        <foreach item="item" index="index" collection="list" separator=",">
            (#{item.id}, #{item.id})
        </foreach>
    </insert>

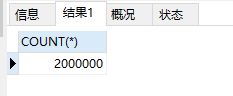

The first duplicate appeared at 2,129,580 rows, i.e. just over two million. Querying the table showed the cause: MySQL was comparing the IDs case-insensitively.

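With the usual default collations, MySQL compares primary keys (and strings in other queries) case-insensitively. The error reported was:

Duplicate entry 'AOVbrXXF' for key 'PRIMARY'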
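A rough birthday-problem estimate shows why a collision around two million rows is unsurprising once case is ignored. Under a case-insensitive collation, `a` and `A` (and so on) compare equal. Each position then has 26 letter classes with probability 2/62 each and 10 digits with probability 1/62 each. The sketch assumes all 62 symbols are equally likely and independent, which the modulo step makes only approximately true. `CaseFoldEstimate` is a hypothetical name.

```java
import java.util.Locale;

final class CaseFoldEstimate {
    static final int LENGTH = 8;

    // Probability that two random IDs compare equal, given the per-position
    // probability q that two random symbols compare equal.
    static double pairMatch(double q) {
        return Math.pow(q, LENGTH);
    }

    // Birthday approximation: expected number of equal pairs among n IDs.
    static double expectedPairs(long n, double pairMatch) {
        return (double) n * (n - 1) / 2.0 * pairMatch;
    }

    // Case-sensitive comparison: 62 equally likely symbols, q = 1/62.
    static double qCaseSensitive() {
        return 1.0 / 62;
    }

    // Case-insensitive comparison: 26 letter classes with p = 2/62, 10 digits with p = 1/62.
    static double qCaseInsensitive() {
        double letter = 2.0 / 62, digit = 1.0 / 62;
        return 26 * letter * letter + 10 * digit * digit; // = 114 / 3844
    }

    public static void main(String[] args) {
        long n = 2_129_580; // row count at the first duplicate in the test
        double ci = expectedPairs(n, pairMatch(qCaseInsensitive()));
        double cs = expectedPairs(n, pairMatch(qCaseSensitive()));
        // P(at least one collision) is approximately 1 - exp(-expected pairs).
        System.out.printf(Locale.ROOT, "case-insensitive: %.2f pairs, P = %.2f%n", ci, 1 - Math.exp(-ci));
        System.out.printf(Locale.ROOT, "case-sensitive:   %.4f pairs, P = %.4f%n", cs, 1 - Math.exp(-cs));
    }
}
```

Under these assumptions, 2,129,580 IDs give about 1.36 expected colliding pairs when case is ignored, roughly a 74% chance of at least one duplicate. With case-sensitive comparison the figure is about 0.01 pairs, roughly a 1% chance.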

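I made the ID column case-sensitive (for example, by giving it a binary collation such as utf8mb4_bin) and ran the test again.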

At 4 million rows there were still no duplicates, but inserts had become slow.

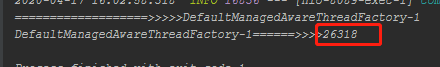

Starting from an empty table, inserting 1 million rows took:

26.318 seconds

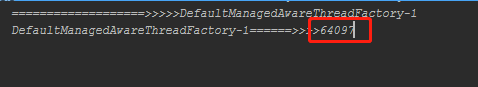

Starting from an empty table, inserting 2 million rows took:

64.097 seconds

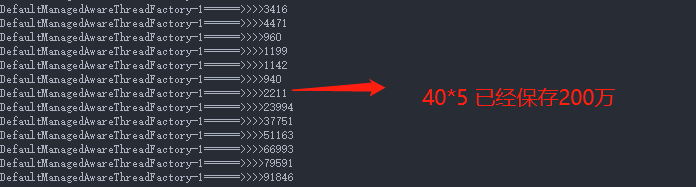

Starting from an empty table, thread DefaultManagedAwareThreadFactory-1 printed the following times, in milliseconds, one value per 70,000-row batch, in order:

    3209 1033 1089 952 921 1119 825 911 919 850
    949 983 720 1097 862 1173 942 808 1159 845
    998 1478 1420 974 1398 4005 2922 2126 1198 931
    2197 1014 1231 3416 4471 960 1199 1142 940 2211
    23994 37751 51163 66993 79591 91846

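For the first 40 batches, each insert takes roughly 0.7 to 4.5 seconds. The time then jumps to about 24 seconds, and each later batch takes roughly 12 to 16 seconds longer than the one before.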
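The logged numbers can show what kind of growth this is. Exponential growth would give a roughly constant ratio between consecutive batches; linear growth would give a roughly constant difference. The sketch below uses the last six per-batch times (ms) from the log. `GrowthCheck` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.Locale;

final class GrowthCheck {
    // Last six per-batch insert times in milliseconds, copied from the log.
    static final long[] TAIL_MS = {23994, 37751, 51163, 66993, 79591, 91846};

    // Difference between each batch and the previous one (ms).
    static long[] differences(long[] t) {
        long[] d = new long[t.length - 1];
        for (int i = 1; i < t.length; i++) d[i - 1] = t[i] - t[i - 1];
        return d;
    }

    // Ratio of each batch to the previous one.
    static double[] ratios(long[] t) {
        double[] r = new double[t.length - 1];
        for (int i = 1; i < t.length; i++) r[i - 1] = (double) t[i] / t[i - 1];
        return r;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(differences(TAIL_MS)));
        // prints [13757, 13412, 15830, 12598, 12255]
        for (double r : ratios(TAIL_MS)) System.out.printf(Locale.ROOT, "%.2f ", r);
        System.out.println(); // ratios: 1.57 1.36 1.31 1.19 1.15
    }
}
```

The differences stay between about 12 and 16 seconds while the ratios shrink. In this run, that looks more like a sudden step followed by roughly linear growth than an exponential curve.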

Getting past this slowdown will probably require further optimization.

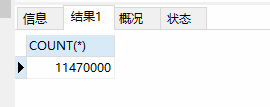

Generated IDs at the ten-million scale

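So far the table holds 11.47 million rows with no duplicates, consistent with the original author's claim that the IDs stay unique up to ten million.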
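The same birthday approximation puts 11.47 million case-sensitive IDs in context. The sketch treats the 8-character IDs as uniformly random over 62^8 values, which the modulo step only approximates. `BirthdayEstimate` is a hypothetical name.

```java
import java.util.Locale;

final class BirthdayEstimate {
    // Number of distinct 8-character IDs over a 62-symbol alphabet: 62^8.
    static long space() {
        long n = 1;
        for (int i = 0; i < 8; i++) n *= 62;
        return n; // 218,340,105,584,896
    }

    // P(at least one duplicate among n IDs) is approximately 1 - exp(-n(n-1) / (2N)).
    static double collisionProbability(long n, long space) {
        double expectedPairs = (double) n * (n - 1) / (2.0 * space);
        return 1 - Math.exp(-expectedPairs);
    }

    // Number of IDs at which that probability reaches about 50%: n = sqrt(2 N ln 2).
    static double halfChancePoint(long space) {
        return Math.sqrt(2.0 * space * Math.log(2));
    }

    public static void main(String[] args) {
        long space = space();
        System.out.printf(Locale.ROOT, "P(duplicate) at 11,470,000 IDs: %.2f%n",
                collisionProbability(11_470_000L, space));
        System.out.printf(Locale.ROOT, "50%% point: about %.1f million IDs%n",
                halfChancePoint(space) / 1e6);
    }
}
```

Under these assumptions, the chance of seeing no duplicate among 11.47 million IDs is about 74%. The chance of a duplicate reaches about 50% near 17.4 million IDs. The result so far is therefore consistent with the estimate, but ten million IDs are not guaranteed to be unique.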