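Deploying Prometheus monitoring for canal on k8s

1. Grafana Docker deployment: https://grafana.com/grafana/download?platform=docker

2. Prometheus Docker deployment: https://github.com/prometheus/prometheus

With ready-made Docker images available, both can be deployed directly.

Deploying Prometheus in k8s

The YAML manifest is as follows: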

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: '16'
    k8s.kuboard.cn/displayName: canal-prometheus
    k8s.kuboard.cn/ingress: 'false'
    k8s.kuboard.cn/service: NodePort
    k8s.kuboard.cn/workload: svc-canal-prometheus
  creationTimestamp: '2020-11-06T03:09:55Z'
  generation: 16
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: svc-canal-prometheus
  name: svc-canal-prometheus
  namespace: canal-ns
  resourceVersion: '22246892'
  selfLink: /apis/apps/v1/namespaces/canal-ns/deployments/svc-canal-prometheus
  uid: 4ad37eec-3b36-4107-8ed9-07456abba5ba
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: svc
      k8s.kuboard.cn/name: svc-canal-prometheus
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/restartedAt: '2020-11-06T14:07:33+08:00'
      labels:
        k8s.kuboard.cn/layer: svc
        k8s.kuboard.cn/name: svc-canal-prometheus
    spec:
      containers:
        - image: prom/prometheus
          imagePullPolicy: Always
          name: canal-prometheus
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /etc/prometheus/prometheus.yml
              name: canal-prometheus-volume
              subPath: etc/prometheus/prometheus.yml
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            items:
              - key: prometheus.yml
                path: etc/prometheus/prometheus.yml
            name: canal-prometheus
          name: canal-prometheus-volume
status:
  availableReplicas: 1
  conditions:
    - lastTransitionTime: '2020-11-06T05:30:16Z'
      lastUpdateTime: '2020-11-06T05:30:16Z'
      message: Deployment has minimum availability.
      reason: MinimumReplicasAvailable
      status: 'True'
      type: Available
    - lastTransitionTime: '2020-11-06T05:55:49Z'
      lastUpdateTime: '2020-11-06T06:07:43Z'
      message: >-
        ReplicaSet "svc-canal-prometheus-6f7d7b66c5" has successfully
        progressed.
      reason: NewReplicaSetAvailable
      status: 'True'
      type: Progressing
  observedGeneration: 16
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1

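One interesting point here: when a ConfigMap is mounted as a volume onto a container directory in k8s, the mount normally hides whatever that directory already contained. For the Prometheus config file, the ConfigMap is instead mounted onto a single file. The key setting is subPath: etc/prometheus/prometheus.yml; with subPath, the volume can be mounted directly onto one specific file. Note that a file mounted through subPath is not refreshed when the ConfigMap changes, so the pod has to be restarted to pick up a new config.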
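To make the difference concrete, here is a minimal sketch of the two mount styles as a pod spec fragment. It reuses the volume and ConfigMap names from the Deployment above, but it is a simplification and not the author's exact manifest: it assumes no `items` mapping, so the ConfigMap key `prometheus.yml` appears at the root of the volume and `subPath` can reference it by that name.

```yaml
# Pod spec fragment (sketch). Variant A is commented out and shown only for contrast.
containers:
  - name: canal-prometheus
    image: prom/prometheus
    volumeMounts:
      # Variant A (directory mount): the ConfigMap volume is mounted over
      # /etc/prometheus, so the files shipped in the image under that
      # directory are hidden and only the ConfigMap keys are visible.
      # - name: canal-prometheus-volume
      #   mountPath: /etc/prometheus
      #
      # Variant B (subPath, the approach used in this article): only the
      # single file prometheus.yml is replaced; everything else in
      # /etc/prometheus stays as the image shipped it.
      - name: canal-prometheus-volume
        mountPath: /etc/prometheus/prometheus.yml
        subPath: prometheus.yml   # assumes the key sits at the volume root
volumes:
  - name: canal-prometheus-volume
    configMap:
      name: canal-prometheus
```

The article's own manifest gets the same result with an `items` entry that maps the key to `etc/prometheus/prometheus.yml` inside the volume and a `subPath` of the same value.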

Original work. Author: Arnold.zhao. Cnblogs: https://www.cnblogs.com/zh94

The content of the mounted config file is as follows:

# my global config test
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'canal'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['svc-canal-deployer:11112']

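The main thing is to configure the address of canal-deployer:

- targets: ['svc-canal-deployer:11112']

By default the canal-deployer metrics port is 11112; if you have changed that port, use your own value.

svc-canal-deployer is the Service name of canal-deployer. Since canal-deployer is also deployed in k8s here, Prometheus reaches it by Service name and the k8s Service forwards the traffic. If canal-deployer is not running in k8s, configure its IP address instead; everything else stays the same.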
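For the non-k8s case mentioned above, the scrape job might look like the following sketch. The address `192.0.2.10` is a placeholder from the documentation IP range, not a value from this article, and `metrics_path` and `scheme` are written out even though they only restate the Prometheus defaults.

```yaml
scrape_configs:
  - job_name: 'canal'
    metrics_path: /metrics   # Prometheus default, shown for clarity
    scheme: http             # Prometheus default, shown for clarity
    static_configs:
      # Placeholder address: replace with the real canal-deployer host.
      # 11112 is the default canal-deployer metrics port.
      - targets: ['192.0.2.10:11112']
```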

The YAML that creates the corresponding ConfigMap is as follows:

---
apiVersion: v1
data:
  prometheus.yml: |-
    # my global config test
    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
              # - alertmanager:9093

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:
    # Here it's Prometheus itself.
    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'canal'
        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.
        static_configs:
          - targets: ['svc-canal-deployer:11112']
kind: ConfigMap
metadata:
  creationTimestamp: '2020-11-06T03:15:04Z'
  name: canal-prometheus
  namespace: canal-ns
  resourceVersion: '22246778'
  selfLink: /api/v1/namespaces/canal-ns/configmaps/canal-prometheus
  uid: 2918cb4e-acd6-4c82-9a2e-19e575ba6cea

Create a dedicated Service for port mapping. The Service port here is 9090, exposed on the nodes as NodePort 30018.

---
apiVersion: v1
kind: Service
metadata:
  annotations:
    k8s.kuboard.cn/displayName: canal-prometheus
    k8s.kuboard.cn/workload: svc-canal-prometheus
  creationTimestamp: '2020-11-06T03:11:34Z'
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: svc-canal-prometheus
  name: svc-canal-prometheus
  namespace: canal-ns
  resourceVersion: '22204370'
  selfLink: /api/v1/namespaces/canal-ns/services/svc-canal-prometheus
  uid: 6246dafe-f8fd-42ec-8e27-caf38539d35c
spec:
  clusterIP: 10.204.71.228
  externalTrafficPolicy: Cluster
  ports:
    - name: prometheus-9090
      nodePort: 30018
      port: 9090
      protocol: TCP
      targetPort: 9090
  selector:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: svc-canal-prometheus
  sessionAffinity: None
  type: NodePort
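The Service above is an export from a running cluster, so it carries fields that the API server fills in itself (uid, resourceVersion, creationTimestamp, selfLink, clusterIP). When creating the Service from scratch in another cluster, a trimmed version along these lines should be enough. This is a sketch derived from the export, not a manifest the author published; the Kuboard annotations are dropped on the assumption that Kuboard is not required.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-canal-prometheus
  namespace: canal-ns
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: svc-canal-prometheus
spec:
  type: NodePort
  # Must match the pod labels set in the Deployment template.
  selector:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: svc-canal-prometheus
  ports:
    - name: prometheus-9090
      protocol: TCP
      port: 9090        # Service port inside the cluster
      targetPort: 9090  # Port Prometheus listens on in the container
      nodePort: 30018   # Port opened on every node
  # clusterIP is omitted so the cluster assigns one automatically.
```

The same trimming applies to the other exported manifests in this article: the status block and the server-populated metadata fields can be left out when applying them elsewhere.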

The deployment is now complete. Accessing the port gives the following result:

Deploying Grafana

The Deployment YAML is as follows:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: '1'
    k8s.kuboard.cn/ingress: 'false'
    k8s.kuboard.cn/service: NodePort
    k8s.kuboard.cn/workload: web-canal-grafana
  creationTimestamp: '2020-11-06T06:10:27Z'
  generation: 1
  labels:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: web-canal-grafana
  name: web-canal-grafana
  namespace: canal-ns
  resourceVersion: '22247823'
  selfLink: /apis/apps/v1/namespaces/canal-ns/deployments/web-canal-grafana
  uid: 484350e4-b408-4361-ac27-633f8d815468
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: web
      k8s.kuboard.cn/name: web-canal-grafana
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        k8s.kuboard.cn/layer: web
        k8s.kuboard.cn/name: web-canal-grafana
    spec:
      containers:
        - image: grafana/grafana
          imagePullPolicy: Always
          name: canal-grafana
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsConfig: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        seLinuxOptions: {}
      terminationGracePeriodSeconds: 30

Next, expose it through a Service. The YAML is as follows; the mapped port is 3000.

---
apiVersion: v1
kind: Service
metadata:
  annotations:
    k8s.kuboard.cn/workload: web-canal-grafana
  creationTimestamp: '2020-11-06T06:10:27Z'
  labels:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: web-canal-grafana
  name: web-canal-grafana
  namespace: canal-ns
  resourceVersion: '22247699'
  selfLink: /api/v1/namespaces/canal-ns/services/web-canal-grafana
  uid: 8cbde138-6855-4eaf-b9cd-8dae72a2efeb
spec:
  clusterIP: 10.204.195.124
  externalTrafficPolicy: Cluster
  ports:
    - name: canal-grafana-3000
      nodePort: 31010
      port: 3000
      protocol: TCP
      targetPort: 3000
  selector:
    k8s.kuboard.cn/layer: web
    k8s.kuboard.cn/name: web-canal-grafana
  sessionAffinity: None
  type: NodePort

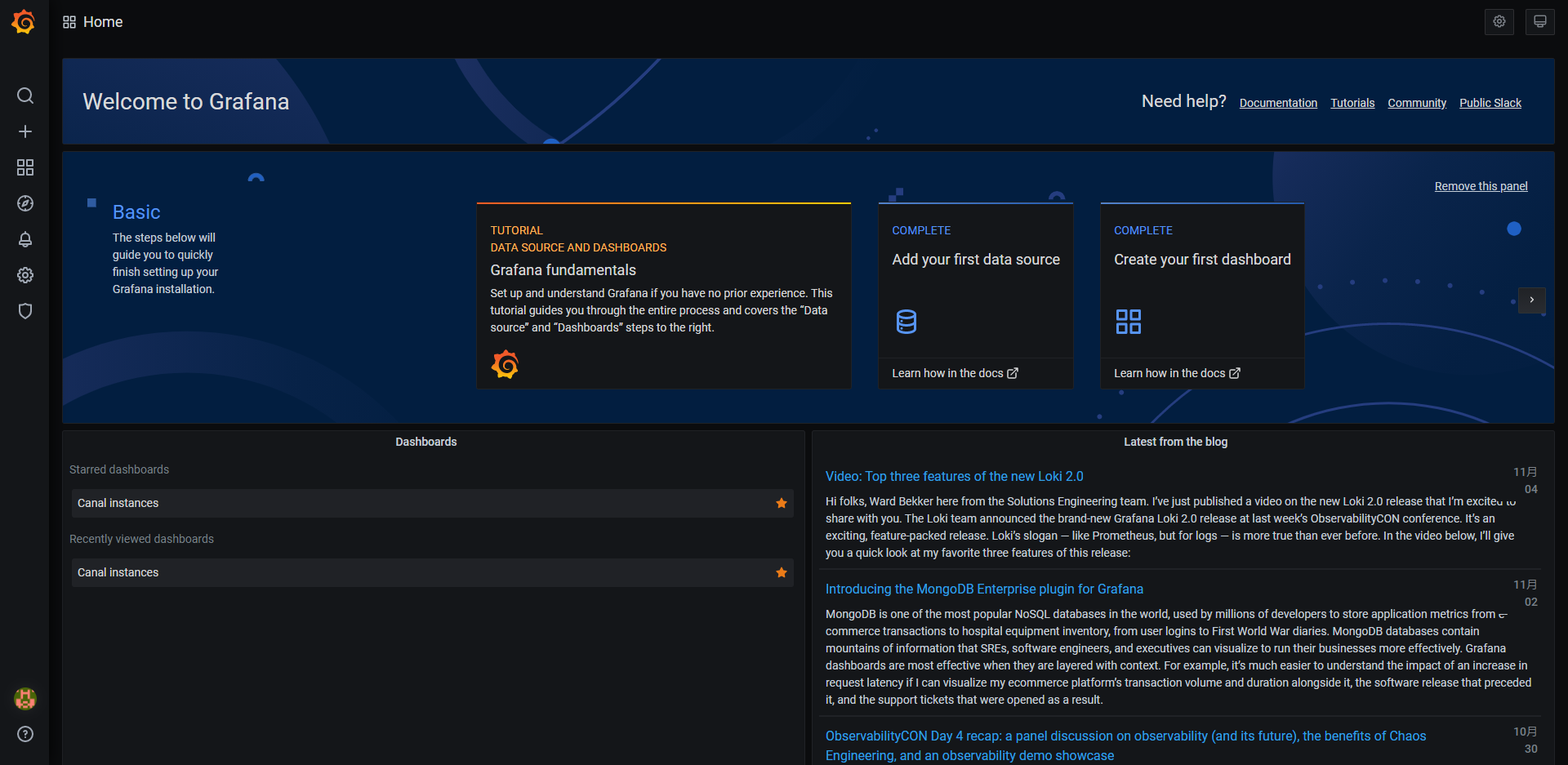

OK,启动后,访问对应的应用后,效果如图所示:

此时还没有进行grefana的配置,所以无法获取canal的监控信息

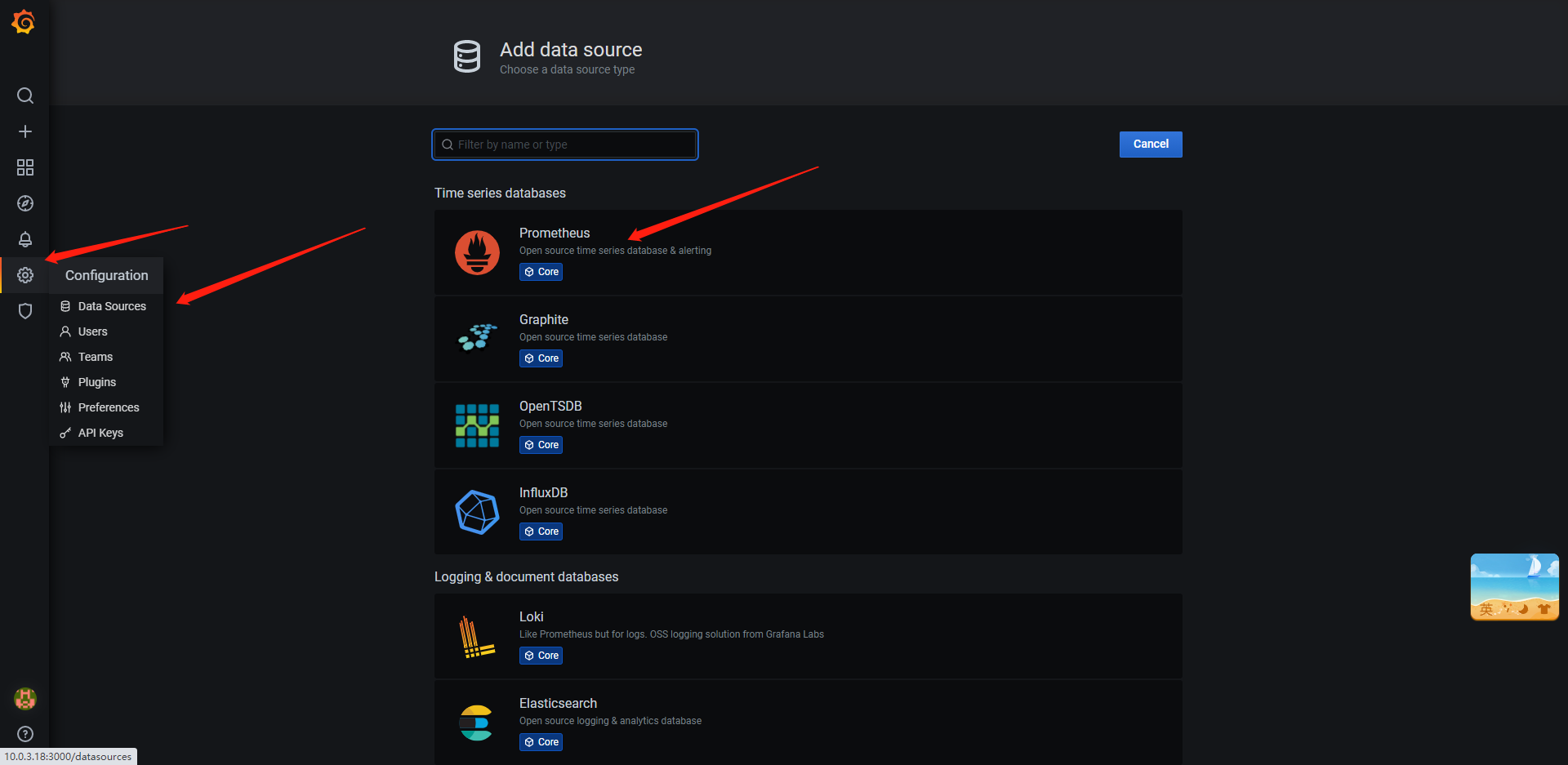

Greafana配置canal监控信息

很简单,基本按照官方的说明即可,

新建一个dataSource,选择prometheus,然后填写对应的prometheus的url地址即可。

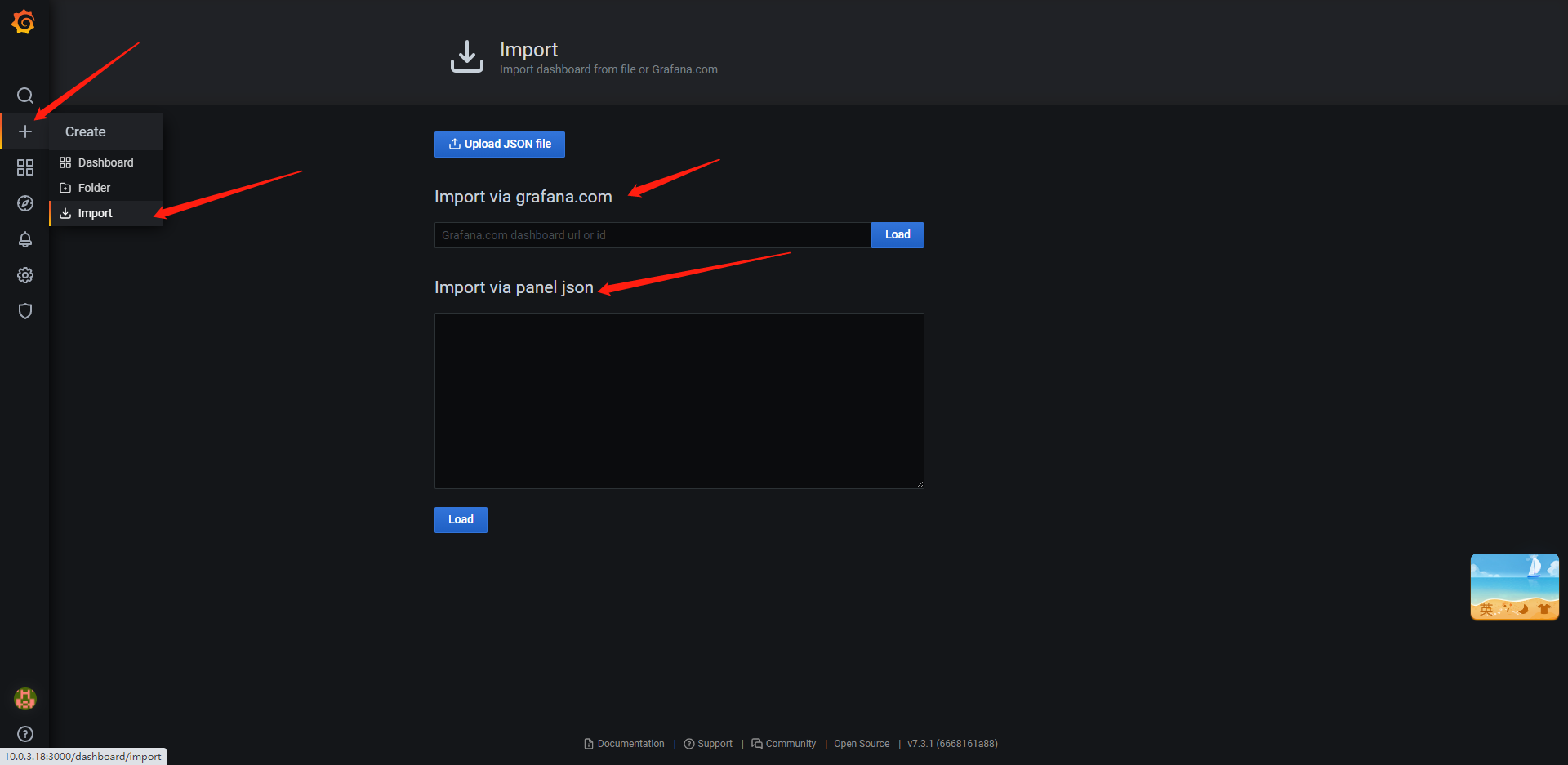
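As an alternative to clicking through the UI, Grafana can also load data sources from provisioning files at startup. The sketch below is not part of the original setup: the file path is hypothetical, and the URL assumes Grafana runs in the same canal-ns namespace as the svc-canal-prometheus Service so that the short Service name resolves.

```yaml
# Hypothetical file inside the Grafana container:
#   /etc/grafana/provisioning/datasources/prometheus.yaml
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy                          # Grafana's backend issues the queries
    url: http://svc-canal-prometheus:9090  # in-cluster Service name and port
    isDefault: true
```

In k8s this file could be delivered the same way as prometheus.yml earlier in the article, through a ConfigMap mounted into the container.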

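Once that is filled in, the last step is to import the canal dashboard into Grafana.

Import it by URL: https://raw.githubusercontent.com/alibaba/canal/master/deployer/src/main/resources/metrics/Canal_instances_tmpl.json

Importing that JSON file is all that is needed.

When I did this, however, importing by URL did not work. If it fails for you as well, you can run

wget https://raw.githubusercontent.com/alibaba/canal/master/deployer/src/main/resources/metrics/Canal_instances_tmpl.json

to fetch the file, and then paste its contents into the second box.

The final monitoring dashboard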

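For the meaning of each metric, see the canal documentation on GitHub.

My canal client does not talk to canal-deployer directly over TCP; it consumes the Kafka data that canal-deployer emits. The client metrics shown here are therefore empty.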
