下面简单介绍两种部署的方式,crontab定时任务+日志,第二种则是scrapyd+spiderkeeper,更推荐后者,图形界面的方式,管理方便,清晰。

scrapy 开发调试

1、在spiders同目录下新建一个run.py文件,内容如下(列表里面最后可以加上参数,如--nolog)

2、下面命令只限于,快速调试的作用或一个项目下单个spider的爬行任务。

from scrapy.cmdline import execute execute(['scrapy','crawl','app1'])

多爬虫并发:

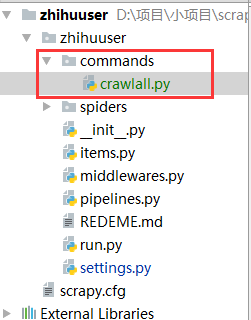

1、在spiders同级创建任意目录,如:commands

2、在其中创建 crawlall.py 文件 (此处文件名就是自定义的命令)

crawlall.py

from scrapy.commands import ScrapyCommand

from scrapy.crawler import CrawlerRunner

from scrapy.exceptions import UsageError

from scrapy.utils.conf import arglist_to_dict

class Command(ScrapyCommand):

requires_project = True

def syntax(self):

return '[options]'

def short_desc(self):

return 'Runs all of the spiders'

def add_options(self, parser):

ScrapyCommand.add_options(self, parser)

parser.add_option("-a", dest="spargs", action="append", default=[], metavar="NAME=VALUE",

help="set spider argument (may be repeated)")

parser.add_option("-o", "--output", metavar="FILE",

help="dump scraped items into FILE (use - for stdout)")

parser.add_option("-t", "--output-format", metavar="FORMAT",

help="format to use for dumping items with -o")

def process_options(self, args, opts):

ScrapyCommand.process_options(self, args, opts)

try:

opts.spargs = arglist_to_dict(opts.spargs)

except ValueError:

raise UsageError("Invalid -a value, use -a NAME=VALUE", print_help=False)

def run(self, args, opts):

# settings = get_project_settings()

spider_loader = self.crawler_process.spider_loader

for spidername in args or spider_loader.list():

print("*********cralall NewsSpider************")

self.crawler_process.crawl(spidername, **opts.spargs)

self.crawler_process.start()

3、到这里还没完,settings.py配置文件还需要加一条。

COMMANDS_MODULE = ‘项目名称.目录名称’

COMMANDS_MODULE = 'NewSpider.commands'

4、执行命令

$ scrapy crawlall

5、 日志输出,

# 保存log信息的文件名 LOG_FILE = "myspider.log" LOG_LEVEL = "INFO"

scrapyd+spiderkeeper

1.安装

$ pip install scrapyd $ pip install scrapyd-client $ pip install spiderkeeper

2.配置

配置scrapy.cfg文件,取消注释url

[settings] default = project.settings [deploy:project_deploy] url = http://localhost:6800/ project = project username = root password = password

scrapyd-deploy在linux和mac下可运行,windows下需在python/scripts路径下新建scrapyd-deploy.bat,注意了,下面python路径以及scrapyd-deploy路径需要修改

@echo off "C:UsersCZNscrapyVirScriptspython.exe" "C:UsersCZNscrapyVirScriptsscrapyd-deploy" %1 %2 %3 %4 %5 %6 %7 %8 %9

cmd下进入scrapy项目根目录,

1)敲入scrapyd-deploy -l

project http://localhost:6800/

2)敲入scrapy list 显示 spider 列表

LOG_STDOUT = True # 大坑,导致scrapy list 失效

3)scrapyd #在scrapy.cfg同路径下启动scrapyd服务器 端口6800

4)spiderkeeper --server=http://localhost:6800 --username=root --password=password #启动spiderkeep 端口5000

5)scrapyd-deploy project_deploy -p project #发布工程到scrapyd

成功返回json数据

6)scrapyd-deploy --build-egg output.egg #生成output.egg文件

7)spiderkeep图形界面上传output.egg即可

部署完成,设置定时爬取任务或启动单个spider

settings.py 几个具有普适性配置

# Obey robots.txt rules ROBOTSTXT_OBEY = False CONCURRENT_REQUESTS = 32 DOWNLOAD_DELAY = 0.1 DOWNLOAD_TIMEOUT = 10 RETRY_TIMES = 5 # The download delay setting will honor only one of: #CONCURRENT_REQUESTS_PER_DOMAIN = 16 #CONCURRENT_REQUESTS_PER_IP = 16 COOKIES_ENABLED = False