ClickHouse Cluster Installation and Deployment Guide

I. Environment Preparation

Three CentOS test machines

Hosts: 192.168.1.211, 192.168.1.212, 192.168.1.213

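II. Installing from RPM Packages

# Prerequisite: a ZooKeeper environment. Install one if it is not already available. (One already existed here, so this guide only covers setting up the ClickHouse cluster.)

# On each of the three machines, install clickhouse-server and clickhouse-client as the root user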

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://packages.clickhouse.com/rpm/clickhouse.repo

sudo yum install -y clickhouse-server clickhouse-client
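After the packages are installed, it can help to confirm that both binaries are on the PATH of every machine before any configuration is edited. The sketch below is one way to do that. `check_binaries` is a hypothetical helper name and is not part of ClickHouse.

```shell
# check_binaries: hypothetical helper, not part of ClickHouse.
# Prints one line per command name and returns non-zero if any is missing.
check_binaries() {
  missing=0
  for bin in "$@"; do
    if command -v "$bin" >/dev/null 2>&1; then
      echo "found: $bin"
    else
      echo "missing: $bin"
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_binaries clickhouse-server clickhouse-client` on each of the three machines gives a quick yes/no answer before moving on to the configuration step.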

III. Configuration

clickhouse-server and clickhouse-client version 21.12.2.17

Single-Node Setup

1. Edit the clickhouse-server configuration file, config.xml

[clickhouse@node2 clickhouse-server]$ vim /etc/clickhouse-server/config.xml

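<!-- Port 9000 was already in use by another service, so the TCP port is changed to 1200. To simplify later maintenance, all related ports are moved into one contiguous range. -->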

<!-- Port for HTTP API. See also 'https_port' for secure connections.

This interface is also used by ODBC and JDBC drivers (DataGrip, Dbeaver, ...)

and by most of web interfaces (embedded UI, Grafana, Redash, ...).

-->

<!-- Port for HTTP (URL) access -->

<http_port>1199</http_port>

<!-- Port for interaction by native protocol with:

- clickhouse-client and other native ClickHouse tools (clickhouse-benchmark, clickhouse-copier);

- clickhouse-server with other clickhouse-servers for distributed query processing;

- ClickHouse drivers and applications supporting native protocol

(this protocol is also informally called as "the TCP protocol");

See also 'tcp_port_secure' for secure connections.

-->

<!-- Port the server listens on for clickhouse-client connections -->

<tcp_port>1200</tcp_port>

<!-- Compatibility with MySQL protocol.

ClickHouse will pretend to be MySQL for applications connecting to this port.

-->

<!-- Port for the MySQL-compatible protocol -->

<mysql_port>1201</mysql_port>

<!-- Compatibility with PostgreSQL protocol.

ClickHouse will pretend to be PostgreSQL for applications connecting to this port.

-->

<postgresql_port>1202</postgresql_port>

<!-- Port for communication between replicas. Used for data exchange.

It provides low-level data access between servers.

This port should not be accessible from untrusted networks.

See also 'interserver_http_credentials'.

Data transferred over connections to this port should not go through untrusted networks.

See also 'interserver_https_port'.

-->

<interserver_http_port>1203</interserver_http_port>

...

...

...

<!-- Uncomment this line so that the service can be reached from remote hosts -->

<listen_host>::</listen_host>

...

...

...

<!-- Comment out the entire original block -->

<!-- <remote_servers> -->

<!-- Test only shard config for testing distributed storage -->

<!-- <test_shard_localhost> -->

<!-- Inter-server per-cluster secret for Distributed queries

default: no secret (no authentication will be performed)

If set, then Distributed queries will be validated on shards, so at least:

- such cluster should exist on the shard,

- such cluster should have the same secret.

And also (and which is more important), the initial_user will

be used as current user for the query.

Right now the protocol is pretty simple and it only takes into account:

- cluster name

- query

Also it will be nice if the following will be implemented:

- source hostname (see interserver_http_host), but then it will depends from DNS,

it can use IP address instead, but then the you need to get correct on the initiator node.

- target hostname / ip address (same notes as for source hostname)

- time-based security tokens

-->

<!-- <secret></secret> -->

<!-- <shard> -->

...

...

<!--</remote_servers>-->

With these changes, the single-node ClickHouse installation is complete. You can now test it by starting clickhouse-server and clickhouse-client.

Starting clickhouse-server
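Before starting the server, the edited port values can be read back from the file as a sanity check. The following is a minimal sketch that assumes each setting sits on a single line in the form `<tag>value</tag>`. `get_setting` and `print_ports` are hypothetical helper names.

```shell
# get_setting: hypothetical helper that prints the value of a simple
# single-line <tag>value</tag> element from a ClickHouse config file.
# Limitation: this is a plain text match, so a commented-out element with the
# same tag would match too. Treat the output as a quick sanity check only.
get_setting() {
  # $1 = tag name, $2 = path to the config file
  sed -n "s:.*<$1>\(.*\)</$1>.*:\1:p" "$2" | head -n 1
}

# print_ports: list the five port settings changed in this guide.
print_ports() {
  # $1 = path to the config file
  for tag in http_port tcp_port mysql_port postgresql_port interserver_http_port; do
    echo "$tag=$(get_setting "$tag" "$1")"
  done
}
```

If the file was edited as shown above, `print_ports /etc/clickhouse-server/config.xml` should list the values 1199 through 1203.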

[root@master ~]# systemctl start clickhouse-server

[root@master ~]# systemctl status clickhouse-server

● clickhouse-server.service - ClickHouse Server (analytic DBMS for big data)

Loaded: loaded (/etc/systemd/system/clickhouse-server.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2022-03-10 17:13:21 CST; 6min ago

Main PID: 12290 (clckhouse-watch)

CGroup: /system.slice/clickhouse-server.service

├─12290 clickhouse-watchdog --config=/etc/clickhouse-server/config.xml --pid-file=/run/clickhouse-server/clickhouse-server.pid

└─12291 /usr/bin/clickhouse-server --config=/etc/clickhouse-server/config.xml --pid-file=/run/clickhouse-server/clickhouse-server.pid

Mar 10 17:13:21 master systemd[1]: Started ClickHouse Server (analytic DBMS for big data).

Mar 10 17:13:21 master systemd[1]: Starting ClickHouse Server (analytic DBMS for big data)...

Mar 10 17:13:21 master clickhouse-server[12290]: Processing configuration file '/etc/clickhouse-server/config.xml'.

Mar 10 17:13:21 master clickhouse-server[12290]: Logging trace to /var/log/clickhouse-server/clickhouse-server.log

Mar 10 17:13:21 master clickhouse-server[12290]: Logging errors to /var/log/clickhouse-server/clickhouse-server.err.log

Mar 10 17:13:22 master clickhouse-server[12290]: Processing configuration file '/etc/clickhouse-server/config.xml'.

Mar 10 17:13:22 master clickhouse-server[12290]: Saved preprocessed configuration to '/var/lib/clickhouse/preprocessed_configs/config.xml'.

Mar 10 17:13:22 master clickhouse-server[12290]: Processing configuration file '/etc/clickhouse-server/users.xml'.

Mar 10 17:13:22 master clickhouse-server[12290]: Saved preprocessed configuration to '/var/lib/clickhouse/preprocessed_configs/users.xml'.

# Seeing active (running) confirms that the server has started

systemctl start clickhouse-server # start

systemctl status clickhouse-server # status

systemctl stop clickhouse-server # stop
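When the start step is scripted rather than typed by hand, reading the status output is less convenient than polling until the service reports ready. The sketch below shows a generic retry loop. `wait_until` is a hypothetical helper name, and the attempt count and delay are arbitrary choices.

```shell
# wait_until: hypothetical retry helper.
# $1 = maximum attempts, $2 = seconds to sleep between attempts,
# remaining arguments = the command to run until it succeeds.
wait_until() {
  tries=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$tries" ]; do
    if "$@" >/dev/null 2>&1; then
      echo "ready after $attempt attempt(s)"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  echo "not ready after $tries attempt(s)"
  return 1
}
```

For example, `wait_until 30 1 systemctl is-active --quiet clickhouse-server` polls the unit state once per second. With the HTTP port set to 1199 as in this guide, `wait_until 30 1 curl -fsS http://localhost:1199/ping` would instead wait for the HTTP interface to answer.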

Starting clickhouse-client

# Next, start the client

# Because <tcp_port> was changed in config.xml, the new port must be specified when starting the client

[root@master ~]# clickhouse-client --port 1200

ClickHouse client version 21.11.7.9 (official build).

Connecting to localhost:1200 as user default.

Connected to ClickHouse server version 21.11.7 revision 54450.

master :)

# At this point the client has also started successfully
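The interactive prompt is not required for a quick check: clickhouse-client also accepts a statement through `--query`. The wrapper below is a sketch under stated assumptions. `ch_query`, `CH_CLIENT` and `CH_PORT` are hypothetical names introduced for illustration, and the default port is the 1200 chosen in this guide.

```shell
# ch_query: hypothetical wrapper around clickhouse-client.
# CH_CLIENT and CH_PORT are illustrative environment variables, not
# ClickHouse settings. They default to the binary name and to the tcp_port
# chosen in this guide.
ch_query() {
  # $1 = host, $2 = SQL text
  "${CH_CLIENT:-clickhouse-client}" --host "$1" --port "${CH_PORT:-1200}" --query "$2"
}
```

A call such as `ch_query localhost "SELECT version()"` prints the server version and exits, which makes it easy to use from scripts.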

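Cluster Setup

Add the following configuration on top of the single-node setup

1. Edit the clickhouse-server configuration file, config.xml

<!-- Added -->

<!-- ClickHouse cluster nodes -->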

<remote_servers>

<!-- Cluster name; can be changed -->

<test_ck_cluster>

<!-- Three shards, one per machine, each with a single replica -->

<!-- Shard 1 -->

<shard>

<!-- Weight: the relative share of newly inserted data that is written to this shard; default: 1 -->

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.1.211</host>

<port>1200</port>

<user>default</user>

<password></password>

<compression>true</compression>

</replica>

</shard>

<!-- Shard 2 -->

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.1.212</host>

<port>1200</port>

<user>default</user>

<password></password>

<compression>true</compression>

</replica>

</shard>

<!-- Shard 3 -->

<shard>

<weight>1</weight>

<internal_replication>true</internal_replication>

<replica>

<host>192.168.1.213</host>

<port>1200</port>

<user>default</user>

<password></password>

<compression>true</compression>

</replica>

</shard>

</test_ck_cluster>

</remote_servers>

<!-- ZooKeeper settings -->

<zookeeper>

<node index="1">

<host>192.168.1.211</host>

<port>2181</port>

</node>

<node index="2">

<host>192.168.1.212</host>

<port>2181</port>

</node>

<node index="3">

<host>192.168.1.213</host>

<port>2181</port>

</node>

</zookeeper>

<macros>

<replica>192.168.1.211</replica> <!-- Name of the current node (its IP address is used here) -->

</macros>

<networks>

<ip>::/0</ip>

</networks>

<!-- Compression settings -->

<clickhouse_compression>

<case>

<min_part_size>10000000000</min_part_size>

<min_part_size_ratio>0.01</min_part_size_ratio>

<method>lz4</method> <!-- lz4 compresses faster than zstd but uses more disk space -->

</case>

</clickhouse_compression>
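The three `<shard>` entries in the `<remote_servers>` section above differ only in the host address, so the section can be generated from a host list instead of being copied by hand. The following is a minimal sketch. `render_shard` and `render_cluster` are hypothetical helper names, and the sketch assumes `internal_replication` is `true` and the weight is 1 for every shard.

```shell
# render_shard: hypothetical helper that prints one <shard> element with a
# single replica. Assumes weight 1 and internal_replication true.
# $1 = host, $2 = native TCP port
render_shard() {
  cat <<EOF
<shard>
  <weight>1</weight>
  <internal_replication>true</internal_replication>
  <replica>
    <host>$1</host>
    <port>$2</port>
    <user>default</user>
    <password></password>
    <compression>true</compression>
  </replica>
</shard>
EOF
}

# render_cluster: wrap one shard per host in a named cluster element.
# $1 = cluster name, $2 = port, remaining arguments = hosts
render_cluster() {
  name=$1
  port=$2
  shift 2
  echo "<remote_servers>"
  echo "<$name>"
  for host in "$@"; do
    render_shard "$host" "$port"
  done
  echo "</$name>"
  echo "</remote_servers>"
}
```

For the machines in this guide the call would be `render_cluster test_ck_cluster 1200 192.168.1.211 192.168.1.212 192.168.1.213`. The output is meant to be reviewed and pasted into config.xml, not written there automatically.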

Differences between the configuration files of the three nodes

<!-- Each machine uses its own host name -->

<macros>

<replica>192.168.1.211</replica> <!-- Name of the current node (its IP address is used here) -->

</macros>
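Since the `<macros>` block is the only part that changes from node to node, it is easy to leave the same value on all three machines by mistake. The sketch below prints the block each node would need so the three can be compared side by side. `render_macros` and `print_all_macros` are hypothetical helper names.

```shell
# render_macros: hypothetical helper that prints the <macros> element for one node.
# $1 = value to use as this node's replica name (this guide uses the node's IP address)
render_macros() {
  printf '<macros>\n  <replica>%s</replica>\n</macros>\n' "$1"
}

# print_all_macros: print the block for every host given, labelled by host.
print_all_macros() {
  for host in "$@"; do
    echo "# $host"
    render_macros "$host"
  done
}
```

Calling `print_all_macros 192.168.1.211 192.168.1.212 192.168.1.213` prints three blocks, one per machine, each to be placed in that machine's own config.xml.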

Once everything is configured, start clickhouse-server on each node.

Then start the client on any one of the machines to test.

Test statement

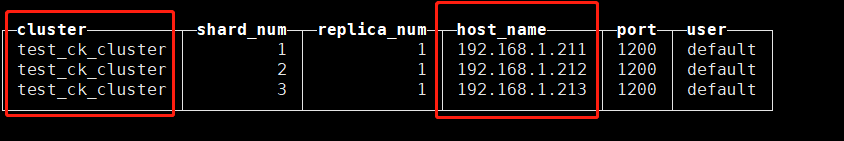

select cluster,shard_num,replica_num,host_name,port,user from system.clusters;

Result

[root@master ~]# clickhouse-client --port

Bad arguments: the required argument for option '--port' is missing

[root@master ~]# clickhouse-client --port 1200

ClickHouse client version 21.11.7.9 (official build).

Connecting to localhost:1200 as user default.

Connected to ClickHouse server version 21.11.7 revision 54450.

master :) select cluster,shard_num,replica_num,host_name,port,user from system.clusters;

SELECT

cluster,

shard_num,

replica_num,

host_name,

port,

user

FROM system.clusters

Query id: 32df1583-7cf0-4e16-b1f4-13506797925c

┌─cluster─────────┬─shard_num─┬─replica_num─┬─host_name─────┬─port─┬─user────┐
│ test_ck_cluster │         1 │           1 │ 192.168.1.211 │ 1200 │ default │
│ test_ck_cluster │         2 │           1 │ 192.168.1.212 │ 1200 │ default │
│ test_ck_cluster │         3 │           1 │ 192.168.1.213 │ 1200 │ default │
└─────────────────┴───────────┴─────────────┴───────────────┴──────┴─────────┘

3 rows in set. Elapsed: 0.001 sec.

master :)

If the cluster information is displayed as above, the cluster is working.
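The same check can be made without reading the table by eye: ask the client for tab-separated output and count the distinct shards reported for the cluster. The following is a sketch. `count_shards` is a hypothetical helper name, and it assumes the first two columns of its input are `cluster` and `shard_num`.

```shell
# count_shards: hypothetical helper. Reads tab-separated rows whose first two
# columns are cluster and shard_num, and prints the number of distinct shards
# seen for the cluster named in $1.
count_shards() {
  awk -F '\t' -v c="$1" '$1 == c && !seen[$2]++ { n++ } END { print n + 0 }'
}
```

A possible invocation is `clickhouse-client --port 1200 --format TSV --query "SELECT cluster, shard_num, host_name FROM system.clusters" | count_shards test_ck_cluster`. For the configuration in this guide the expected count is 3.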