1. settings.py: the main configuration, including USER_AGENT and related settings

    # -*- coding: utf-8 -*-

    # Scrapy settings for renren project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'renren'

    SPIDER_MODULES = ['renren.spiders']
    NEWSPIDER_MODULE = 'renren.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'renren.middlewares.RenrenSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'renren.middlewares.RenrenDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    #ITEM_PIPELINES = {
    #    'renren.pipelines.RenrenPipeline': 300,
    #}

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
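The spider in this post relies on the session cookie set by the login response, so cookie handling has to stay enabled (it is by default). As a rough sketch, and not part of the original project, the fragment below shows a few standard Scrapy settings that could be added to settings.py while debugging a login flow. The specific values are assumptions chosen for illustration.

```python
# Hypothetical additions to settings.py for debugging a login crawl.
# The values here are illustrative assumptions, not taken from the original project.

# Keep the cookie middleware on so the login session carries over
# to the follow-up profile request (this is already Scrapy's default).
COOKIES_ENABLED = True

# Log the Cookie / Set-Cookie headers of each request and response,
# which helps confirm that the login actually produced a session.
COOKIES_DEBUG = True

# Wait one second between requests to the same site to keep the load low.
DOWNLOAD_DELAY = 1
```

Once the login works, COOKIES_DEBUG can be switched back off to keep the log readable.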

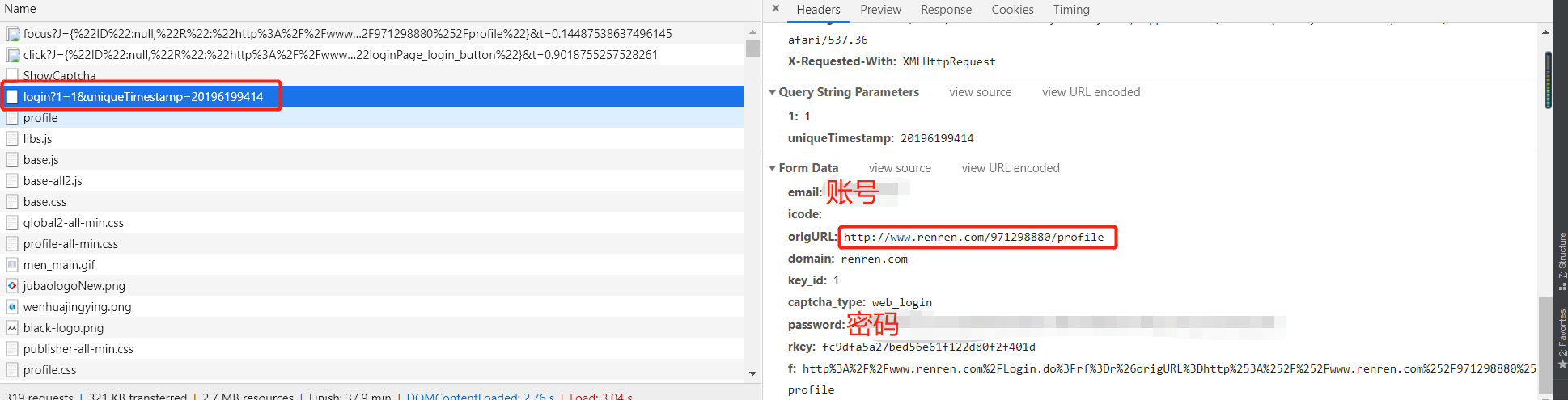

2. rr.py: the spider, which simulates the login

    # -*- coding: utf-8 -*-
    import scrapy
    import time
    import re


    class RrSpider(scrapy.Spider):
        name = 'rr'
        allowed_domains = ['renren.com']
        # Not used for the first request, because start_requests() is overridden below.
        start_urls = ['http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=201961933877']

        def start_requests(self):
            # Build the login URL by appending a timestamp-like suffix.
            base_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp='
            s = time.strftime("%S")
            # Milliseconds within the current second.
            ms = int(time.time() % 1 * 1000)
            date_time = '20188010' + str(s) + str(ms)
            login_url = base_url + date_time
            # Form fields for the login request.
            data = {
                'email': '18620028487',
                'icode': '',
                'origURL': 'http://www.renren.com/home',
                'domain': 'renren.com',
                'key_id': '1',
                'captcha_type': 'web_login',
                'password': '41980c8f91e2c872910598a9e0a147d05934506893ef022c5b42357a67e0a3be',
                'rkey': 'fc9dfa5a27bed56e61f122d80f2f401d',
                'f': 'http%3A%2F%2Fwww.renren.com%2FLogin.do%3Frf%3Dr%26origURL%3Dhttp%253A%252F%252Fwww.renren.com%252F971298880%252Fprofile',
            }
            # POST the form; dont_filter=True bypasses the duplicate filter.
            yield scrapy.FormRequest(url=login_url, formdata=data,
                                     callback=self.parse_login, dont_filter=True)

        def parse_login(self, response):
            # After logging in, request the profile page with the same session.
            yield scrapy.Request(url='http://www.renren.com/971298880/profile',
                                 callback=self.parse_text, dont_filter=True)

        def parse_text(self, response):
            # Print the profile page HTML to check that the login succeeded.
            print(response.body.decode())
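The timestamp suffix that start_requests appends to the login URL can be pulled out into a small helper, which makes it easier to inspect on its own. The sketch below is an assumption-laden illustration: the helper name build_login_url and its parameters are hypothetical, it mirrors the spider's fixed '20188010' prefix and unpadded milliseconds, and it uses UTC for the seconds field. Whether the server actually validates this value is not something the post establishes.

```python
import time

BASE_URL = "http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp="


def build_login_url(now=None, prefix="20188010"):
    """Return the login URL with a timestamp-like suffix.

    `now` is a Unix timestamp in seconds (hypothetical parameter, added so the
    result can be reproduced); when omitted, the current time is used.
    """
    if now is None:
        now = time.time()
    # Two-digit seconds field of the given moment (UTC).
    seconds = time.strftime("%S", time.gmtime(now))
    # Milliseconds within the current second, not zero-padded, as in the spider.
    millis = int((now % 1) * 1000)
    return f"{BASE_URL}{prefix}{seconds}{millis}"


if __name__ == "__main__":
    print(build_login_url())
```

Passing a fixed `now` value gives a repeatable URL, which is convenient when comparing the request against one captured in the browser's developer tools.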

3. Login information