学习数据分析,然后没有合适的数据源,从国家统计局的网页上抓取一页数据来玩玩(没有发现robots协议,也仅仅发出一次连接请求,不对网站造成任何负荷)

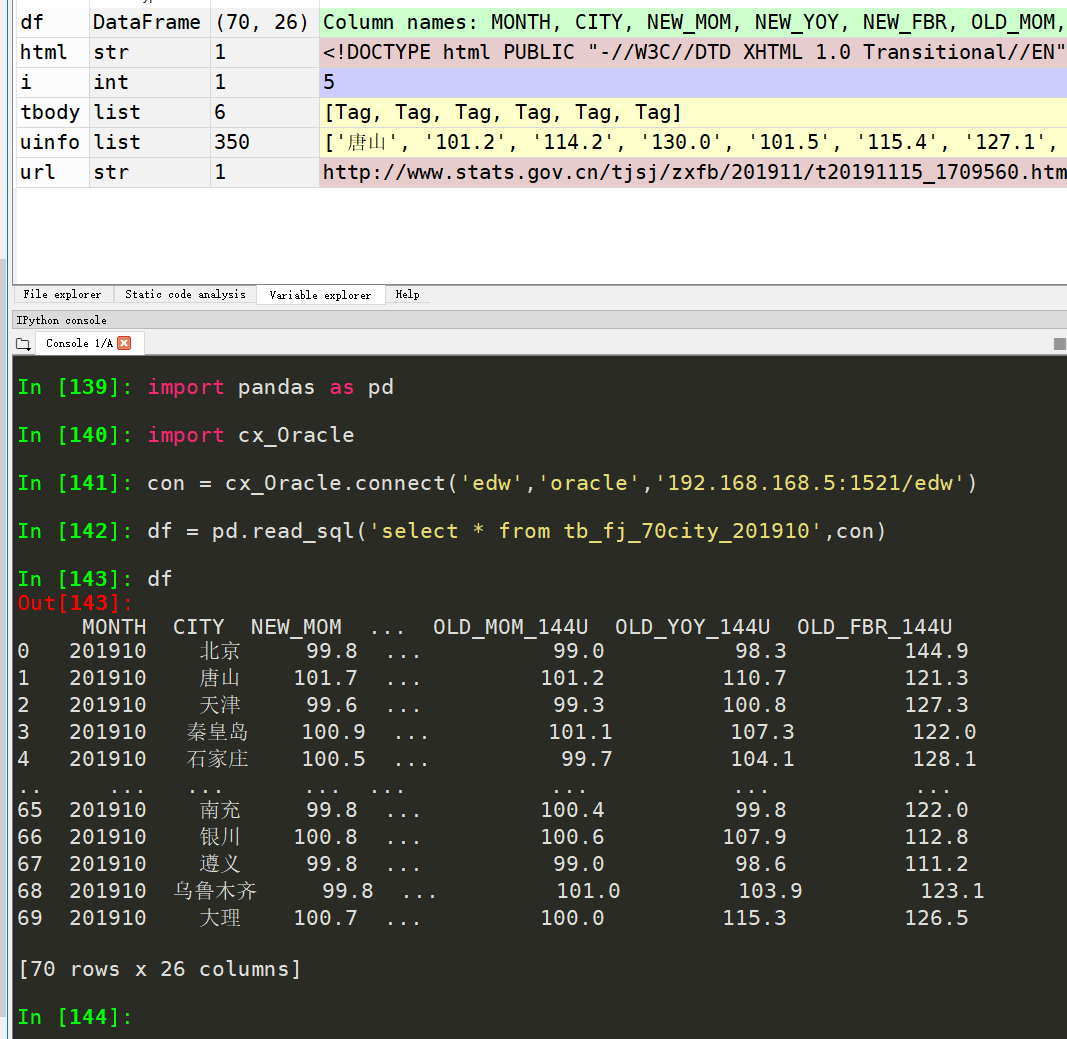

运行效果

源码

python代码

'''

本脚本旨在爬取70城房价进入oracle数据库以供学习

code by 九命猫幺

网页中有6个表格

最终爬取到数据库中形成6合1报表

'''

import requests

from bs4 import BeautifulSoup

import numpy as np

import pandas as pd

from sqlalchemy import create_engine

#爬取网页

def getHTMLText(url):

try:

headers={'User-Agent':'Baiduspider'}

r = requests.get(url,headers=headers,timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return '产生异常'

#解析出列表

def getTrText(tbody,tnum):

uinfo1 = []

uinfo2 = []

for i in tbody.strings:

if i != ' ':

uinfo1.append(str(i.string).replace('u3000','').replace(' ',''))

for i in uinfo1:

if i not in ['皇','岛', '家','庄','丹','江','尔','滨','顶','山']:

uinfo2.append(i.replace('秦','秦皇岛').replace('石','石家庄').replace('牡','牡丹江').replace('哈','哈尔滨').replace('平','平顶山'))

uinfo2 = uinfo2[{1:-280,2:-280,3:-350,4:-350,5:-350,6:-350}[tnum]::]

return uinfo2

#将解析出的列表加工转换传入oracle库

def toSql(uinfo,tnum):

if tnum in [1,2]:

df = pd.DataFrame(np.array(uinfo).reshape(70,4),columns=['city','mom','yoy','fbr'])

else:

df = pd.DataFrame(np.array(uinfo).reshape(35,10),columns=['city','mom_90l','yoy_90l','fbr_90l','mom_90t144','yoy_90t144','fbr_90t144','mom_144u','yoy_144u','fbr_144u'])

con = create_engine('oracle+cx_oracle://edw:oracle@192.168.168.5:1521/?service_name=edw')

df.to_sql('tb_fj_70city_t'+str(tnum),con,if_exists='replace',index=False)

if __name__ == "__main__":

uinfo = []

url = 'http://www.stats.gov.cn/tjsj/zxfb/201911/t20191115_1709560.html'

#爬网页

html = getHTMLText(url)

soup = BeautifulSoup(html,'html.parser')

tbody = soup.select('table.MsoNormalTable tbody')

#解析存储

for i in range(6):

#解析表

uinfo = getTrText(tbody[i],i+1)

#存表入数据库

toSql(uinfo,i+1)

数据库代码

--70个大中城市商品住宅销售价格变动情况

CREATE TABLE tb_fj_70city_201910 AS

WITH tmp1 AS(

SELECT to_char(a.city) city,to_number(a.mom) new_mom,to_number(a.yoy) new_yoy,to_number(a.fbr) new_fbr

FROM tb_fj_70city_t1 a),

tmp2 AS(

SELECT to_char(a.city) city,to_number(a.mom) old_mom,to_number(a.yoy) old_yoy,to_number(a.fbr) old_fbr

FROM tb_fj_70city_t2 a),

tmp3 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t3 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t4 a),

tmp4 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t5 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t6 a)

SELECT 201910 month,aa.city,aa.new_mom,aa.new_yoy,aa.new_fbr,bb. old_mom,bb.old_yoy,bb.old_fbr,

cc.new_mom_90l,cc.new_yoy_90l,cc.new_fbr_90l,

cc.new_mom_90t144,cc.new_yoy_90t144,cc.new_fbr_90t144,

cc.new_mom_144u,cc.new_yoy_144u,cc.new_fbr_144u,

dd.old_mom_90l,dd.old_yoy_90l,dd.old_fbr_90l,

dd.old_mom_90t144,dd.old_yoy_90t144,dd.old_fbr_90t144,

dd.old_mom_144u,dd.old_yoy_144u,dd.old_fbr_144u

FROM tmp1 aa

JOIN tmp2 bb ON aa.city=bb.city

JOIN tmp3 cc ON aa.city=cc.city

JOIN tmp4 dd ON aa.city=dd.city;

CALL p_drop_table_if_exist('tb_fj_70city_t1');

CALL p_drop_table_if_exist('tb_fj_70city_t2');

CALL p_drop_table_if_exist('tb_fj_70city_t3');

CALL p_drop_table_if_exist('tb_fj_70city_t4');

CALL p_drop_table_if_exist('tb_fj_70city_t5');

CALL p_drop_table_if_exist('tb_fj_70city_t6');

SELECT * FROM tb_fj_70city_201910;

就这样,表名中列名,取英文首字母:

mom:month on month ,环比

yoy:year on year,同比

fbr:fixed base ratio,定基比

90l:90 lower,90平米以下

144u:144 upper,144平米以上

90t144:90 to 144,90到144平米之间

优化后

上述脚本只能爬取一个月的,并且6表合1操作在数据库中执行,现在优化为批量爬取多个月份的数据

'''

本脚本旨在爬取70城房价进入oracle数据库以供学习

code by 九命猫幺

网页中有6个表格

最终爬取到数据库中形成6合1报表

网址:

'''

import requests

from bs4 import BeautifulSoup

import numpy as np

import pandas as pd

from sqlalchemy import create_engine

import cx_Oracle

#爬取网页

def getHTMLText(url):

try:

headers={'User-Agent':'Baiduspider'}

r = requests.get(url,headers=headers,timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return '产生异常'

#解析出列表

def getTrText(tbody,tnum):

uinfo1 = []

uinfo2 = []

for i in tbody.strings:

if i != ' ':

uinfo1.append(str(i.string).replace('u3000','').replace(' ',''))

for i in uinfo1:

if i not in ['皇','岛', '家','庄','丹','江','尔','滨','顶','山']:

uinfo2.append(i.replace('秦','秦皇岛').replace('石','石家庄').replace('牡','牡丹江').replace('哈','哈尔滨').replace('平','平顶山'))

uinfo2 = uinfo2[{1:-280,2:-280,3:-350,4:-350,5:-350,6:-350}[tnum]::]

return uinfo2

#将解析出的列表加工转换传入oracle库

def toSql(uinfo,tnum):

if tnum in [1,2]:

df = pd.DataFrame(np.array(uinfo).reshape(70,4),columns=['city','mom','yoy','fbr'])

else:

df = pd.DataFrame(np.array(uinfo).reshape(35,10),columns=['city','mom_90l','yoy_90l','fbr_90l','mom_90t144','yoy_90t144','fbr_90t144','mom_144u','yoy_144u','fbr_144u'])

con = create_engine('oracle+cx_oracle://edw:oracle@192.168.168.5:1521/?service_name=edw')

df.to_sql('tb_fj_70city_t'+str(tnum),con,if_exists='replace',index=False)

#6合1 并插入历史宽表

def intoWideTable(month):

con = cx_Oracle.connect('edw','oracle','192.168.168.5:1521/edw')

cur = con.cursor()

cur.execute("CALL p_drop_table_if_exist('tb_fj_70city_"+str(month)+"')")

cur.execute('''CREATE TABLE tb_fj_70city_'''+str(month)+''' AS

WITH tmp1 AS(

SELECT to_char(a.city) city,to_number(a.mom) new_mom,to_number(a.yoy) new_yoy,to_number(a.fbr) new_fbr

FROM tb_fj_70city_t1 a),

tmp2 AS(

SELECT to_char(a.city) city,to_number(a.mom) old_mom,to_number(a.yoy) old_yoy,to_number(a.fbr) old_fbr

FROM tb_fj_70city_t2 a),

tmp3 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t3 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t4 a),

tmp4 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t5 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t6 a)

SELECT '''+str(month)+''' month,aa.city,aa.new_mom,aa.new_yoy,aa.new_fbr,bb. old_mom,bb.old_yoy,bb.old_fbr,

cc.new_mom_90l,cc.new_yoy_90l,cc.new_fbr_90l,

cc.new_mom_90t144,cc.new_yoy_90t144,cc.new_fbr_90t144,

cc.new_mom_144u,cc.new_yoy_144u,cc.new_fbr_144u,

dd.old_mom_90l,dd.old_yoy_90l,dd.old_fbr_90l,

dd.old_mom_90t144,dd.old_yoy_90t144,dd.old_fbr_90t144,

dd.old_mom_144u,dd.old_yoy_144u,dd.old_fbr_144u

FROM tmp1 aa

JOIN tmp2 bb ON aa.city=bb.city

JOIN tmp3 cc ON aa.city=cc.city

JOIN tmp4 dd ON aa.city=dd.city''')

cur.close()

con.close()

if __name__ == "__main__":

uinfo = []

urls = {201910:'http://www.stats.gov.cn/tjsj/zxfb/201911/t20191115_1709560.html',

201909:'http://www.stats.gov.cn/tjsj/zxfb/201910/t20191021_1704063.html',

201908:'http://www.stats.gov.cn/tjsj/zxfb/201909/t20190917_1697943.html',

201907:'http://www.stats.gov.cn/statsinfo/auto2074/201908/t20190815_1691536.html',

201906:'http://www.stats.gov.cn/tjsj/zxfb/201907/t20190715_1676000.html',

201905:'http://www.stats.gov.cn/tjsj/zxfb/201906/t20190618_1670960.html',

201904:'http://www.stats.gov.cn/tjsj/zxfb/201905/t20190516_1665286.html',

201903:'http://www.stats.gov.cn/tjsj/zxfb/201904/t20190416_1659682.html'

}

for key in urls:

#爬网页

html = getHTMLText(urls[key])

soup = BeautifulSoup(html,'html.parser')

tbody = soup.select('table.MsoNormalTable tbody')

#解析存储

for i in range(6):

#解析表

uinfo = getTrText(tbody[i],i+1)

#存表入数据库

toSql(uinfo,i+1)

#存入宽表

intoWideTable(key)

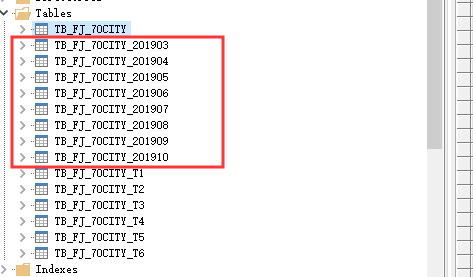

数据库中同时得到了多个月份的

数据库中同时得到了多个月份的

再优化单一月份爬取的代码

import requests

from bs4 import BeautifulSoup

import numpy as np

import pandas as pd

from sqlalchemy import create_engine

import cx_Oracle

#爬取网页

def getHTMLText(url):

try:

headers={'User-Agent':'Baiduspider'}

r = requests.get(url,headers=headers,timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return '产生异常'

#解析出列表

def getTrText(tbody,tnum):

uinfo1 = []

uinfo2 = ['...']

for i in tbody.strings:

if i not in [' ',' ']:

uinfo1.append(str(i.string).replace(' ',''))

for i in uinfo1:

if 'u4e00' <= i <= 'u9fa5' and 'u4e00' <= uinfo2[-1] <= 'u9fa5':

uinfo2[-1]=uinfo2[-1]+i

else:

uinfo2.append(i)

uinfo2 = uinfo2[{1:-280,2:-280}.get(tnum,-350)::]

return uinfo2

#将解析出的列表加工转换传入oracle库

def toSql(uinfo,tnum):

if tnum in [1,2]:

df = pd.DataFrame(np.array(uinfo).reshape(70,4),columns=['city','mom','yoy','fbr'])

else:

df = pd.DataFrame(np.array(uinfo).reshape(35,10),columns=['city','mom_90l','yoy_90l','fbr_90l','mom_90t144','yoy_90t144','fbr_90t144','mom_144u','yoy_144u','fbr_144u'])

con = create_engine('oracle+cx_oracle://edw:oracle@192.168.168.5:1521/?service_name=edw')

df.to_sql('tb_fj_70city_t'+str(tnum),con,if_exists='replace',index=False)

#6合1 并插入历史宽表

def intoWideTable(month):

con = cx_Oracle.connect('edw','oracle','192.168.168.5:1521/edw')

cur = con.cursor()

cur.execute("CALL p_drop_table_if_exist('tb_fj_70city_"+str(month)+"')")

cur.execute('''CREATE TABLE tb_fj_70city_'''+str(month)+''' AS

WITH tmp1 AS(

SELECT to_char(a.city) city,to_number(a.mom) new_mom,to_number(a.yoy) new_yoy,to_number(a.fbr) new_fbr

FROM tb_fj_70city_t1 a),

tmp2 AS(

SELECT to_char(a.city) city,to_number(a.mom) old_mom,to_number(a.yoy) old_yoy,to_number(a.fbr) old_fbr

FROM tb_fj_70city_t2 a),

tmp3 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t3 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) new_mom_90l,to_number(a.yoy_90l) new_yoy_90l,to_number(a.fbr_90l) new_fbr_90l,

to_number(a.mom_90t144) new_mom_90t144,to_number(a.yoy_90t144) new_yoy_90t144,to_number(a.fbr_90t144) new_fbr_90t144,

to_number(a.mom_144u) new_mom_144u,to_number(a.yoy_144u) new_yoy_144u,to_number(a.fbr_144u) new_fbr_144u

FROM tb_fj_70city_t4 a),

tmp4 AS(

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t5 a

UNION

SELECT to_char(a.city) city,to_number(a.mom_90l) old_mom_90l,to_number(a.yoy_90l) old_yoy_90l,to_number(a.fbr_90l) old_fbr_90l,

to_number(a.mom_90t144) old_mom_90t144,to_number(a.yoy_90t144) old_yoy_90t144,to_number(a.fbr_90t144) old_fbr_90t144,

to_number(a.mom_144u) old_mom_144u,to_number(a.yoy_144u) old_yoy_144u,to_number(a.fbr_144u) old_fbr_144u

FROM tb_fj_70city_t6 a)

SELECT '''+str(month)+''' month,aa.city,aa.new_mom,aa.new_yoy,aa.new_fbr,bb. old_mom,bb.old_yoy,bb.old_fbr,

cc.new_mom_90l,cc.new_yoy_90l,cc.new_fbr_90l,

cc.new_mom_90t144,cc.new_yoy_90t144,cc.new_fbr_90t144,

cc.new_mom_144u,cc.new_yoy_144u,cc.new_fbr_144u,

dd.old_mom_90l,dd.old_yoy_90l,dd.old_fbr_90l,

dd.old_mom_90t144,dd.old_yoy_90t144,dd.old_fbr_90t144,

dd.old_mom_144u,dd.old_yoy_144u,dd.old_fbr_144u

FROM tmp1 aa

JOIN tmp2 bb ON aa.city=bb.city

JOIN tmp3 cc ON aa.city=cc.city

JOIN tmp4 dd ON aa.city=dd.city''')

cur.execute('delete from tb_fj_70city where month='+str(month))

cur.execute('insert into tb_fj_70city select * from tb_fj_70city_'+str(month))

con.commit()

cur.close()

con.close()

if __name__ == "__main__":

uinfo = []

month = 201910

url = 'http://www.stats.gov.cn/tjsj/zxfb/201911/t20191115_1709560.html'

#爬网页

html = getHTMLText(url)

soup = BeautifulSoup(html,'html.parser')

tbody = soup.select('table.MsoNormalTable tbody')

#解析存储

for i in range(6):

#解析表

uinfo = getTrText(tbody[i],i+1)

#存表入数据库

toSql(uinfo,i+1)

intoWideTable(month)

ok了