1. Start the hiveserver2 service on the current server and connect to it from a remote client with beeline

The error output is as follows:

root@master:~# beeline -u jdbc:hive2//master:10000
ls: cannot access /data1/hadoop/hive/lib/hive-jdbc-*-standalone.jar: No such file or directory
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data1/hadoop/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data1/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
scan complete in 1ms
19/06/17 06:29:13 [main]: ERROR beeline.ClassNameCompleter: Fail to parse the class name from the Jar file due to the exception:java.io.FileNotFoundException: org/ehcache/sizeof/impl/sizeof-agent.jar (No such file or directory)
scan complete in 762ms
# the output above complains that a file or directory cannot be found
No known driver to handle "jdbc:hive2//master:10000"
Beeline version 2.1.0 by Apache Hive
beeline>

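This problem is actually caused by a mistyped JDBC URL: the ":" after hive2 is missing (jdbc:hive2// instead of jdbc:hive2://), which is why beeline reports "No known driver to handle".

Change it to the following form:

# beeline -u jdbc:hive2://master:10000 (entered directly on the command line)

Or

start beeline first

and then enter: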

!connect jdbc:hive2://master:10000
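Because the mistake is a single missing character, a quick prefix check before calling beeline can catch it early. The following is a minimal sketch; `check_hive_jdbc_url` is a hypothetical helper (not part of Hive or beeline), and it only checks the scheme prefix, not the host or port.

```shell
# Hypothetical helper: verifies only that the URL starts with "jdbc:hive2://".
check_hive_jdbc_url() {
  case "$1" in
    jdbc:hive2://*) echo "ok" ;;
    *) echo "bad: expected URL to start with jdbc:hive2://"; return 1 ;;
  esac
}

check_hive_jdbc_url "jdbc:hive2://master:10000"          # correct form
check_hive_jdbc_url "jdbc:hive2//master:10000" || true   # the mistyped form from the error above
```

The function returns a non-zero status for a bad URL, so it can be used as a guard, for example `check_hive_jdbc_url "$url" && beeline -u "$url"`.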

2. User is not allowed (impersonation error)

# beeline -u jdbc:hive2://master:10000 -n root
ls: cannot access /data1/hadoop/hive/lib/hive-jdbc-*-standalone.jar: No such file or directory
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data1/hadoop/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data1/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Connecting to jdbc:hive2://master:10000
Error: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root (state=,code=0)
Beeline version 2.1.0 by Apache Hive
beeline>

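(1) Edit core-site.xml and add the following properties:

<property>
  <!-- Setting this to * means the proxy user root may access the HDFS cluster from any host -->
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>

<property>
  <!-- The groups whose members the proxy user root may impersonate; * means any group -->
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>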

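The root that follows proxyuser above is the user named after "User:" in the error message, e.g. User: root is not allowed to

If the error instead reads User: yjt is not allowed to, use these property names: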

hadoop.proxyuser.yjt.hosts

hadoop.proxyuser.yjt.groups
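The mapping from the error message to the property names can be sketched as a small shell function. `proxyuser_keys` is a hypothetical helper introduced here for illustration; it assumes the message contains the phrase "User: NAME is not allowed to" exactly as in the output above.

```shell
# Hypothetical helper: extracts the user from the error text and prints
# the two core-site.xml property names that need to be configured for it.
proxyuser_keys() {
  user=$(printf '%s\n' "$1" | sed -n 's/.*User: \([^ ]*\) is not allowed to.*/\1/p')
  [ -n "$user" ] || return 1   # no recognizable user in the message
  echo "hadoop.proxyuser.$user.hosts"
  echo "hadoop.proxyuser.$user.groups"
}

proxyuser_keys "User: yjt is not allowed to impersonate root"
```

For the yjt message this prints the two property names listed above.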

Why this change is needed:

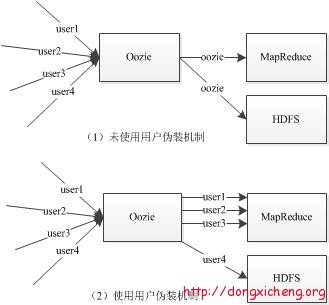

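The main reason is that Hadoop has a security impersonation mechanism: an upper-layer system is not allowed to pass the real user straight through to the Hadoop layer. Instead, the real user is handed to a super proxy, and that proxy performs the operations on Hadoop on the user's behalf. This prevents arbitrary clients from operating on Hadoop at will, as shown in the figure above.

In the figure the super proxy is "Oozie"; your own super proxy is the "xxx" that follows proxyuser in the settings above.

Internally, Hadoop still relies on the corresponding Linux users and permissions. In other words, whichever Linux user you start Hadoop with becomes Hadoop's internal user.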

(2) Add the following to hdfs-site.xml:

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

After making the changes above, restart the Hadoop cluster; restarting HDFS alone is actually enough.

Then run the following command:
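Before restarting, it can be worth confirming that both proxyuser entries really made it into core-site.xml. The sketch below is a plain-text check with grep, not a real XML parse, so it would not notice a property that is commented out. `has_proxyuser_config` is a hypothetical helper, and the demo uses a temporary file because the real location of core-site.xml depends on the installation.

```shell
# Hypothetical helper: checks that both proxyuser property names for a user
# appear in the given file. Text match only; XML comments are not detected.
has_proxyuser_config() {
  file=$1
  user=$2
  for suffix in hosts groups; do
    grep -q "<name>hadoop\.proxyuser\.$user\.$suffix</name>" "$file" || {
      echo "missing: hadoop.proxyuser.$user.$suffix"
      return 1
    }
  done
  echo "ok"
}

# Demo on a temporary file that only contains the hosts entry.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
<configuration>
  <property><name>hadoop.proxyuser.root.hosts</name><value>*</value></property>
</configuration>
EOF
has_proxyuser_config "$tmp" root || true   # reports the missing groups entry
rm -f "$tmp"
```

On a real node the first argument would be the path to that node's core-site.xml.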

/# beeline -u jdbc:hive2://master:10000 -n root
ls: cannot access /data1/hadoop/hive/lib/hive-jdbc-*-standalone.jar: No such file or directory
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data1/hadoop/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data1/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Connecting to jdbc:hive2://master:10000
Error: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root (state=,code=0)
Beeline version 2.1.0 by Apache Hive
beeline>

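What??? Still the same error???

If the configuration above is correct and the cluster has been restarted but the error persists, check whether the machine running hiveserver2 also runs a NameNode.

(1) If it does not run a NameNode, check whether the configured user (root here) has permission on the HDFS directories it needs to operate on (permission control can cause this error).

(2) If it does run a NameNode, use hdfs haadmin -getAllServiceState to check whether this node's NameNode is in standby state. If it is, kill the currently active NameNode on the other node (slave1 in the output below) so that this node's NameNode becomes active.

The problem I ran into was case (2) above: the NameNode on this node was standby.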
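The standby check can be sketched as a small filter over the output of hdfs haadmin -getAllServiceState. `namenode_state` is a hypothetical helper; it assumes each relevant output line has the form "host:port state", as in the transcript in this post, and prints whatever follows the address (so an unreachable NameNode shows the first word of its error text rather than a state).

```shell
# Hypothetical helper: reads "host:port state" lines on stdin and prints the
# state reported for the given host; returns non-zero if the host is absent.
namenode_state() {
  awk -v host="$1" '
    { split($1, addr, ":") }
    addr[1] == host { print $2; found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# Demo with the two lines seen in this post before the failover.
printf '%s\n' 'master:8020 standby' 'slave1:8020 active' | namenode_state master
```

On a live cluster the input would come from the real command, for example `hdfs haadmin -getAllServiceState 2>/dev/null | namenode_state master`.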

After killing it, try again:

root@master:/# hdfs haadmin -getAllServiceState
master:8020 standby
slave1:8020 active
root@master:/# hdfs haadmin -getAllServiceState
master:8020 active
19/06/17 08:49:08 INFO ipc.Client: Retrying connect to server: slave1/172.17.0.3:8020. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)
slave1:8020 Failed to connect: Call From master/172.17.0.2 to slave1:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
root@master:/# beeline -u jdbc:hive2://master:10000 -n root
ls: cannot access /data1/hadoop/hive/lib/hive-jdbc-*-standalone.jar: No such file or directory
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data1/hadoop/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data1/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Connecting to jdbc:hive2://master:10000
Connected to: Apache Hive (version 2.1.0)
Driver: Hive JDBC (version 2.1.0)
19/06/17 08:49:16 [main]: WARN jdbc.HiveConnection: Request to set autoCommit to false; Hive does not support autoCommit=false.
Transaction isolation: TRANSACTION_REPEATABLE_READ
Beeline version 2.1.0 by Apache Hive
0: jdbc:hive2://master:10000>

0: jdbc:hive2://master:10000> show databases;
OK
+----------------+--+
| database_name  |
+----------------+--+
| default        |
| myhive         |
+----------------+--+

The connection now works normally.

Reference: https://blog.csdn.net/qq_16633405/article/details/82190440