Hadoop Ecosystem: A Detailed Guide to the ZooKeeper API

Author: Yin Zhengjie

Copyright notice: This is an original work. Reproduction is not permitted; violators will be held legally liable.

I. Preparation Before Testing

1>. Start the cluster

    [yinzhengjie@s101 ~]$ more `which xzk.sh`
    #!/bin/bash
    #@author :yinzhengjie
    #blog:http://www.cnblogs.com/yinzhengjie
    #EMAIL:y1053419035@qq.com

    # Check that the user passed exactly one argument
    if [ $# -ne 1 ];then
        echo "Invalid argument. Usage: $0 {start|stop|restart|status}"
        exit
    fi

    # Get the command entered by the user
    cmd=$1

    # Dispatch on the command
    function zookeeperManger(){
        case $cmd in
        start)
            echo "Starting service"
            remoteExecution start
            ;;
        stop)
            echo "Stopping service"
            remoteExecution stop
            ;;
        restart)
            echo "Restarting service"
            remoteExecution restart
            ;;
        status)
            echo "Checking status"
            remoteExecution status
            ;;
        *)
            echo "Invalid argument. Usage: $0 {start|stop|restart|status}"
            ;;
        esac
    }

    # Run zkServer.sh with the given command on s102 through s104 over ssh
    function remoteExecution(){
        for (( i=102 ; i<=104 ; i++ )) ; do
            tput setaf 2
            echo ========== s$i zkServer.sh $1 ================
            tput setaf 9
            ssh s$i "source /etc/profile ; zkServer.sh $1"
        done
    }

    # Call the function
    zookeeperManger
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ xzk.sh start
    Starting service
    ========== s102 zkServer.sh start ================
    ZooKeeper JMX enabled by default
    Using config: /soft/zk/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ========== s103 zkServer.sh start ================
    ZooKeeper JMX enabled by default
    Using config: /soft/zk/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ========== s104 zkServer.sh start ================
    ZooKeeper JMX enabled by default
    Using config: /soft/zk/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [yinzhengjie@s101 ~]$ xcall.sh jps
    ============= s101 jps ============
    4505 Jps
    Command executed successfully
    ============= s102 jps ============
    3672 Jps
    3643 QuorumPeerMain
    Command executed successfully
    ============= s103 jps ============
    3376 Jps
    3341 QuorumPeerMain
    Command executed successfully
    ============= s104 jps ============
    3419 Jps
    3390 QuorumPeerMain
    Command executed successfully
    ============= s105 jps ============
    3802 Jps
    Command executed successfully
    [yinzhengjie@s101 ~]$

    [yinzhengjie@s101 ~]$ start-dfs.sh
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    Starting namenodes on [s101 s105]
    s101: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s101.out
    s105: starting namenode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-namenode-s105.out
    s103: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s103.out
    s102: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s102.out
    s105: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s105.out
    s104: starting datanode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-datanode-s104.out
    Starting journal nodes [s102 s103 s104]
    s102: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s102.out
    s103: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s103.out
    s104: starting journalnode, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-journalnode-s104.out
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/soft/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    Starting ZK Failover Controllers on NN hosts [s101 s105]
    s101: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s101.out
    s105: starting zkfc, logging to /soft/hadoop-2.7.3/logs/hadoop-yinzhengjie-zkfc-s105.out
    [yinzhengjie@s101 ~]$
    [yinzhengjie@s101 ~]$ xcall.sh jps
    ============= s101 jps ============
    Jps
    DFSZKFailoverController
    Command executed successfully
    ============= s102 jps ============
    Jps
    DataNode
    QuorumPeerMain
    JournalNode
    Command executed successfully
    ============= s103 jps ============
    DataNode
    JournalNode
    Jps
    QuorumPeerMain
    Command executed successfully
    ============= s104 jps ============
    JournalNode
    Jps
    DataNode
    QuorumPeerMain
    Command executed successfully
    ============= s105 jps ============
    DFSZKFailoverController
    DataNode
    Jps
    Command executed successfully
    [yinzhengjie@s101 ~]$

2>. Add the Maven dependencies

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>cn.org.yinzhengjie</groupId>
        <artifactId>MyZookeep</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.12</version>
            </dependency>

            <dependency>
                <groupId>org.apache.zookeeper</groupId>
                <artifactId>zookeeper</artifactId>
                <version>3.4.6</version>
            </dependency>
        </dependencies>
    </project>

II. Common ZooKeeper API Methods

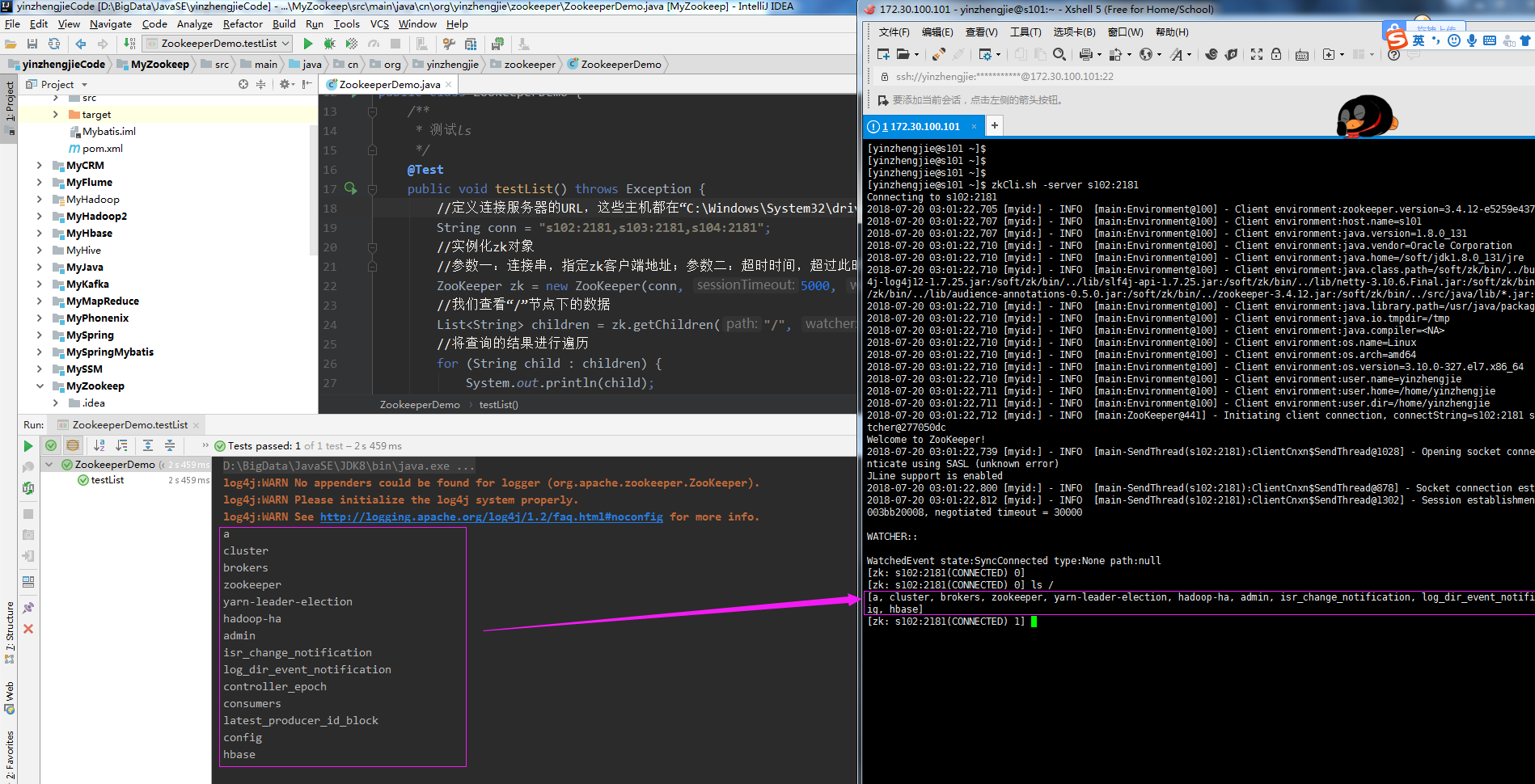

1>. Test the ls command

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;
    import java.util.List;

    public class ZookeeperDemo {
        /**
         * Test ls
         */
        @Test
        public void testList() throws Exception {
            // Connection string for the servers; these hosts are all mapped in "C:\Windows\System32\drivers\etc\HOSTS".
            String conn = "s102:2181,s103:2181,s104:2181";
            // Instantiate the zk object.
            // Arg 1: connection string listing the ZooKeeper servers; arg 2: session timeout in milliseconds; arg 3: the Watcher object, for which null is enough here.
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // List the children of the "/" node
            List<String> children = zk.getChildren("/", null);
            // Iterate over the result
            for (String child : children) {
                System.out.println(child);
            }
            // Release the session, as the other tests in this article do
            zk.close();
        }
    }

The code above produces the following output:

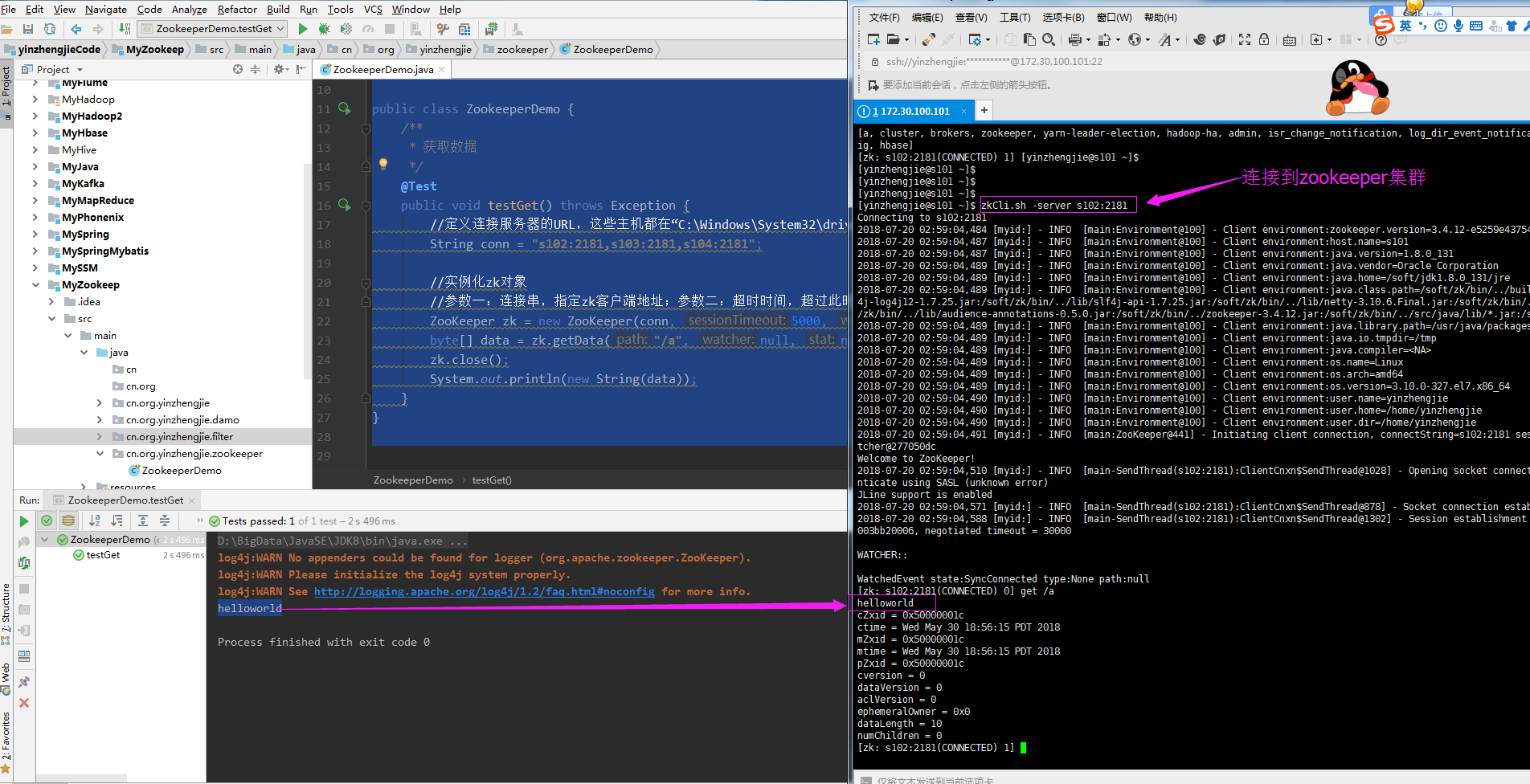
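
The `conn` string used above is a comma-separated list of `host:port` pairs. As a rough illustration of that format only, here is a minimal sketch that splits such a string into addresses. `ConnStringSketch` is a hypothetical helper written for this article, not part of the ZooKeeper client, and falling back to port 2181 when no port is given is an assumption made for the sketch.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not the ZooKeeper client's own parser.
class ConnStringSketch {
    // Assumption for this sketch: use 2181 when an entry has no explicit port.
    static final int DEFAULT_PORT = 2181;

    // Split "host1:port1,host2:port2,..." into unresolved socket addresses.
    static List<InetSocketAddress> parse(String conn) {
        List<InetSocketAddress> servers = new ArrayList<>();
        for (String entry : conn.split(",")) {
            String hostPort = entry.trim();
            if (hostPort.isEmpty()) {
                continue; // skip empty entries such as a trailing comma
            }
            int colon = hostPort.lastIndexOf(':');
            String host = colon < 0 ? hostPort : hostPort.substring(0, colon);
            int port = colon < 0
                    ? DEFAULT_PORT
                    : Integer.parseInt(hostPort.substring(colon + 1));
            // createUnresolved avoids any DNS lookup; the sketch only parses text.
            servers.add(InetSocketAddress.createUnresolved(host, port));
        }
        return servers;
    }

    public static void main(String[] args) {
        for (InetSocketAddress server : parse("s102:2181,s103:2181,s104:2181")) {
            System.out.println(server.getHostString() + " -> " + server.getPort());
        }
    }
}
```

Listing several servers gives the client alternatives to connect to if one host is unavailable, which is why the tests in this article always pass all three hosts.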

2>. Get data

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Get data
         */
        @Test
        public void testGet() throws Exception {
            // Connection string for the servers; these hosts are all mapped in "C:\Windows\System32\drivers\etc\HOSTS".
            String conn = "s102:2181,s103:2181,s104:2181";

            // Instantiate the zk object.
            // Arg 1: connection string listing the ZooKeeper servers; arg 2: session timeout in milliseconds; arg 3: the Watcher object, for which null is enough here.
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Read the data stored in the "/a" node (no watcher, no Stat)
            byte[] data = zk.getData("/a", null, null);
            zk.close();
            System.out.println(new String(data));
        }
    }

The code above produces the following output:

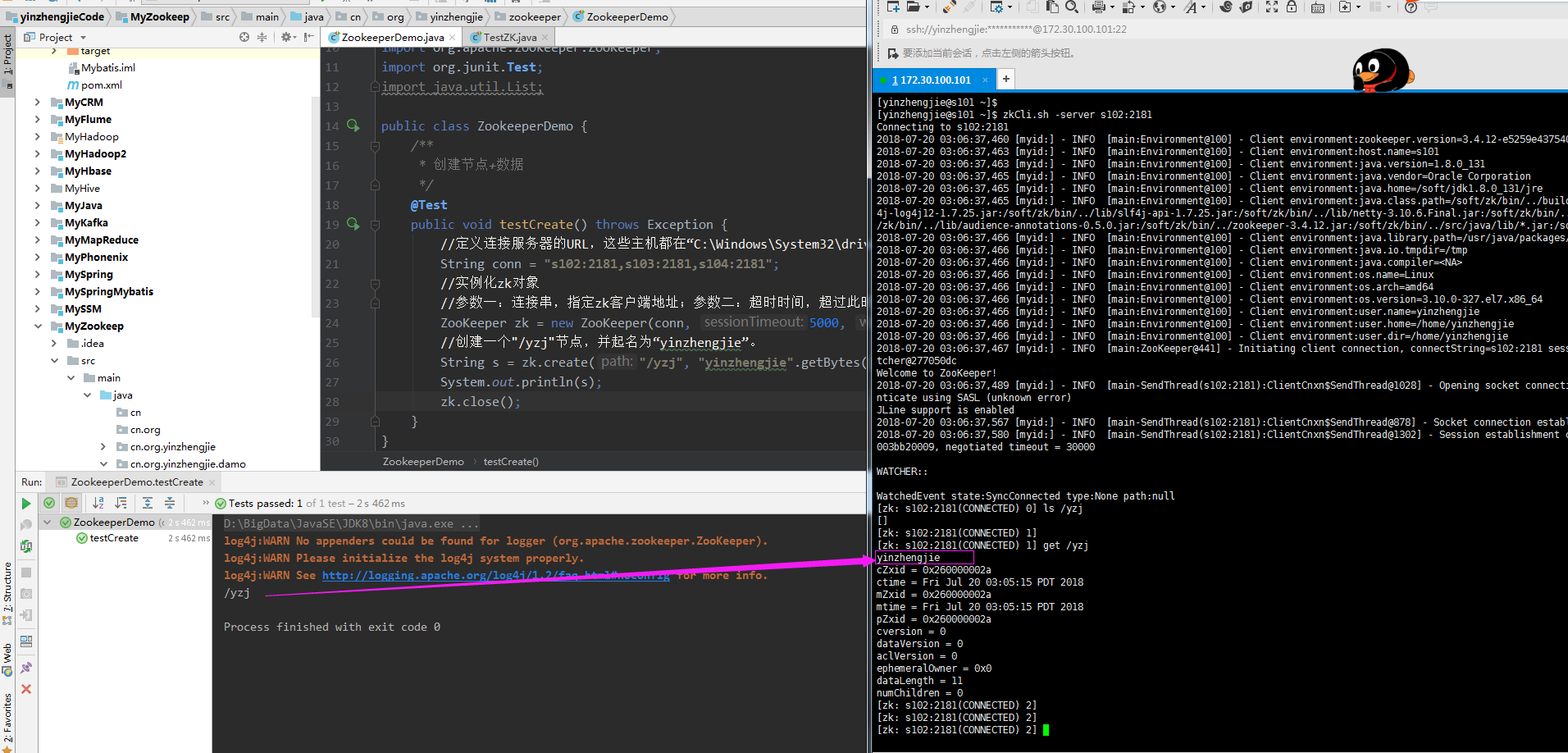
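
`getData` returns the znode's raw bytes, and `new String(data)` decodes them with the platform default charset, which may differ between the machine that wrote the data and the one reading it. Below is a minimal sketch of pinning the charset on both sides, assuming the data is UTF-8 text. `ZnodeText` is a hypothetical helper name, and treating `null` data as an empty string is a choice made for this sketch.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration; assumes znode data is UTF-8 text.
class ZnodeText {
    // Encode text with an explicit charset instead of the platform default.
    static byte[] encode(String text) {
        return text.getBytes(StandardCharsets.UTF_8);
    }

    // Decode with the same charset; a node created without data yields null,
    // which this sketch maps to the empty string.
    static String decode(byte[] data) {
        return data == null ? "" : new String(data, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] stored = encode("yinzhengjie");
        System.out.println(decode(stored));
    }
}
```

With such a helper, the read in `testGet` could become `ZnodeText.decode(zk.getData("/a", null, null))`, and writers would pass `ZnodeText.encode(...)` instead of `getBytes()`.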

3>. Create a node with data

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.CreateMode;
    import org.apache.zookeeper.ZooDefs;
    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Create a node with data
         */
        @Test
        public void testCreate() throws Exception {
            // Connection string for the servers; these hosts are all mapped in "C:\Windows\System32\drivers\etc\HOSTS".
            String conn = "s102:2181,s103:2181,s104:2181";
            // Instantiate the zk object.
            // Arg 1: connection string listing the ZooKeeper servers; arg 2: session timeout in milliseconds; arg 3: the Watcher object, for which null is enough here.
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Create a persistent "/yzj" node and write "yinzhengjie" as its data.
            // The node must not already exist in the ZooKeeper cluster, otherwise an exception is thrown.
            String s = zk.create("/yzj", "yinzhengjie".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
            System.out.println(s);
            zk.close();
        }
    }

The code above produces the following output:

4>. Delete a node

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Delete a node
         */
        @Test
        public void testDelete() throws Exception {
            String conn = "s102:2181,s103:2181,s104:2181";
            // Instantiate the zk object.
            // param1: connection string listing the ZooKeeper servers; param2: session timeout in milliseconds
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Delete the node from the ZooKeeper cluster. The node must exist,
            // otherwise an exception is thrown (KeeperException$NoNodeException).
            // A version of -1 matches any version of the node.
            zk.delete("/yzj",-1);
            zk.close();
        }
    }

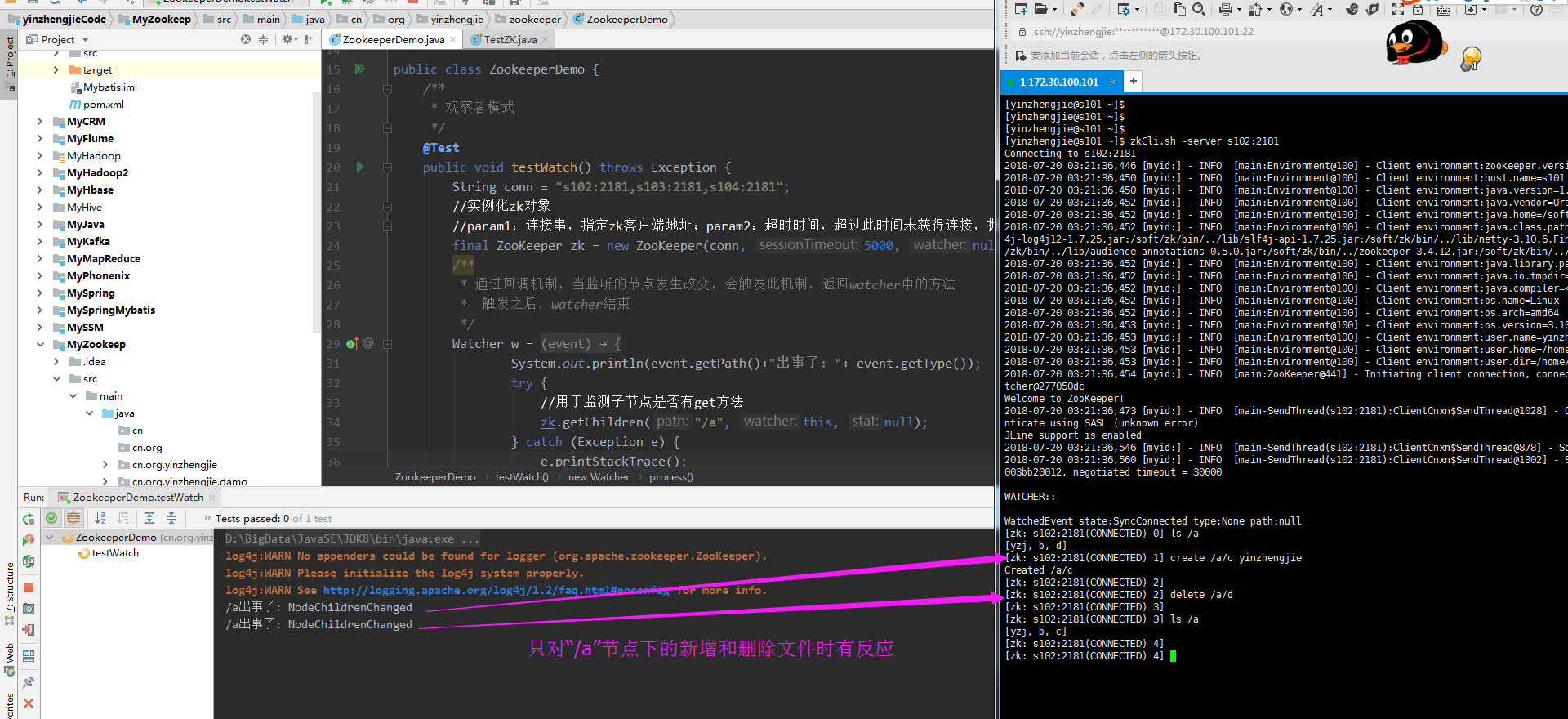
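
The second argument to `delete` is the expected data version of the node: `-1` matches any version, while any other value must equal the node's current version or the call is rejected. The toy in-memory model below sketches that check. It is not the ZooKeeper client: `VersionCheckSketch` and its methods are hypothetical, and it throws `IllegalStateException` where the real client would raise a `KeeperException` subclass.

```java
import java.util.HashMap;
import java.util.Map;

// Toy in-memory model of versioned deletes; hypothetical, not the ZooKeeper API.
class VersionCheckSketch {
    // path -> current data version (stand-in for the version kept per znode)
    private final Map<String, Integer> versions = new HashMap<>();

    // A new node starts at version 0; creating an existing node fails.
    void create(String path) {
        if (versions.putIfAbsent(path, 0) != null) {
            throw new IllegalStateException("node exists: " + path);
        }
    }

    // Every data update bumps the version by one.
    void setData(String path) {
        if (versions.computeIfPresent(path, (p, v) -> v + 1) == null) {
            throw new IllegalStateException("no node: " + path);
        }
    }

    // Delete only if the caller's expected version is -1 or matches.
    void delete(String path, int expectedVersion) {
        Integer current = versions.get(path);
        if (current == null) {
            throw new IllegalStateException("no node: " + path);
        }
        if (expectedVersion != -1 && expectedVersion != current) {
            throw new IllegalStateException(
                    "bad version: expected " + expectedVersion + ", was " + current);
        }
        versions.remove(path);
    }

    boolean exists(String path) {
        return versions.containsKey(path);
    }

    public static void main(String[] args) {
        VersionCheckSketch model = new VersionCheckSketch();
        model.create("/yzj");
        model.setData("/yzj");      // version is now 1
        try {
            model.delete("/yzj", 0); // stale version, rejected
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        model.delete("/yzj", -1);    // -1 skips the version check
        System.out.println("exists after delete: " + model.exists("/yzj"));
    }
}
```

Passing `-1`, as `testDelete` does, is the simplest choice for a demo; passing a specific version guards against deleting a node that someone else has modified in the meantime.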

5>. Observer pattern: a small example that watches a node's children
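
A watch set through `getChildren` is one-shot: once it fires it is removed, so a watcher that wants to keep receiving events has to register itself again. The toy in-memory model below sketches that behavior in plain Java. `OneShotWatchSketch`, `watchChildren`, and `childrenChanged` are hypothetical names for illustration, not ZooKeeper APIs. The ZooKeeper version of the same idea follows it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Toy in-memory model of one-shot watches; hypothetical, not the ZooKeeper API.
class OneShotWatchSketch {
    private final Map<String, List<Consumer<String>>> watches = new HashMap<>();

    // Register a watch for the next child change under the given path.
    void watchChildren(String path, Consumer<String> watcher) {
        watches.computeIfAbsent(path, p -> new ArrayList<>()).add(watcher);
    }

    // Simulate a child change: each registered watch fires once and is removed.
    void childrenChanged(String path) {
        List<Consumer<String>> fired = watches.remove(path);
        if (fired == null) {
            return; // nobody is watching this path any more
        }
        for (Consumer<String> watcher : fired) {
            watcher.accept(path);
        }
    }

    public static void main(String[] args) {
        OneShotWatchSketch model = new OneShotWatchSketch();

        // A watcher that does not re-register sees only the first change.
        int[] once = {0};
        model.watchChildren("/a", p -> once[0]++);
        model.childrenChanged("/a");
        model.childrenChanged("/a");
        System.out.println("without re-registering: " + once[0]);

        // A watcher that re-registers itself sees every change.
        int[] rearmed = {0};
        model.watchChildren("/a", new Consumer<String>() {
            @Override
            public void accept(String p) {
                rearmed[0]++;
                model.watchChildren(p, this);
            }
        });
        model.childrenChanged("/a");
        model.childrenChanged("/a");
        System.out.println("with re-registering: " + rearmed[0]);
    }
}
```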

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.WatchedEvent;
    import org.apache.zookeeper.Watcher;
    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Observer pattern
         */
        @Test
        public void testWatch() throws Exception {
            String conn = "s102:2181,s103:2181,s104:2181";
            // Instantiate the zk object.
            // param1: connection string listing the ZooKeeper servers; param2: session timeout in milliseconds
            final ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            /**
             * Callback mechanism: when the watched node changes, ZooKeeper calls
             * the watcher's process() method. A watch fires only once, so it is
             * finished after being triggered and must be registered again.
             */
            Watcher w = new Watcher() {
                public void process(WatchedEvent event) {
                    System.out.println(event.getPath() + " changed: " + event.getType());
                    try {
                        // Re-register this watcher to keep watching the children of "/a"
                        zk.getChildren("/a", this, null);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            };
            // Set the initial watch on the children of "/a"
            zk.getChildren("/a", w, null);
            // Keep the test thread alive so the callbacks can keep arriving
            for ( ; ;){
                Thread.sleep(1000);
            }
        }
    }

The code above produces the following result:

6>.