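How NameNode and SecondaryNameNode Work

Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted; violations will be pursued legally.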

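I. Where is the NameNode's metadata stored?

1>. First, suppose the metadata were stored on the NameNode's disk. Because it is accessed randomly and has to answer client requests, this would be far too slow, so the metadata has to be kept in memory. But if it lived only in memory, a power failure would wipe it out and the whole cluster would stop working. Hence the FsImage, a backup of the metadata on disk.

2>. This raises a new problem. If the FsImage were rewritten every time the in-memory metadata changed, performance would suffer; if it were not, the two would diverge and a power failure on the NameNode would lose data. Hence the Edits file, which is append-only and therefore cheap to write. Whenever metadata is added or changed, the change is applied to the in-memory metadata and appended to Edits. After a power failure on the NameNode, the metadata can be rebuilt by merging FsImage and Edits.

3>. However, if entries keep being appended to Edits for a long time, the file grows too large, which hurts efficiency and makes recovery after a power failure take too long. FsImage and Edits therefore have to be merged periodically. Doing the merge on the NameNode would again slow it down, so a separate node, the SecondaryNameNode, is introduced specifically to merge FsImage and Edits.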
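The idea in the three points above can be tried out with a toy model. The sketch below is not HDFS code: `ToyNameNode`, `apply_op` and `recover` are made-up names, and the "metadata" is just a dict of paths. It only shows why an in-memory copy plus an append-only log is enough to rebuild the state after a crash.

```python
def apply_op(namespace, op):
    """Apply one logged operation to a namespace dict (path -> kind)."""
    kind, path = op
    if kind == "mkdir":
        namespace[path] = "dir"
    elif kind == "create":
        namespace[path] = "file"
    elif kind == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown operation: {kind}")


class ToyNameNode:
    """Hypothetical stand-in for a NameNode: memory + append-only edits."""

    def __init__(self, fsimage):
        self.memory = dict(fsimage)  # fast, but lost on power failure
        self.edits = []              # append-only, survives on disk

    def update(self, kind, path):
        op = (kind, path)
        self.edits.append(op)        # cheap append, no FsImage rewrite
        apply_op(self.memory, op)


def recover(fsimage, edits):
    """Rebuild the metadata after a crash: FsImage + replayed Edits."""
    memory = dict(fsimage)
    for op in edits:
        apply_op(memory, op)
    return memory


fsimage = {"/": "dir"}
nn = ToyNameNode(fsimage)
nn.update("mkdir", "/yinzhengjie")
nn.update("create", "/yinzhengjie/a.txt")
nn.update("delete", "/yinzhengjie/a.txt")
rebuilt = recover(fsimage, nn.edits)
print(rebuilt == nn.memory)
```

The longer `nn.edits` gets, the longer `recover` takes, which is exactly the motivation for merging the two files periodically.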

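II. How NameNode and SecondaryNameNode work

1>. Overview of the NameNode and SecondaryNameNode mechanism

Phase 1: NameNode startup
1>. When the NameNode is started for the first time after formatting, it creates the Fsimage and Edits files. If it is not the first start, it loads the edit log and the image file straight into memory.
2>. Clients send requests that add, delete, or modify metadata.
3>. The NameNode records the operation in the edit log, rolling the log as needed.
4>. The NameNode applies the add, delete, or modify operation in memory.

Phase 2: Secondary NameNode at work
1>. The Secondary NameNode asks the NameNode whether a CheckPoint is needed, and the NameNode's answer is returned directly.
2>. The Secondary NameNode requests a CheckPoint.
3>. The NameNode rolls the Edits log that is currently being written.
4>. The edit logs from before the roll and the image file are copied to the Secondary NameNode.
5>. The Secondary NameNode loads the edit logs and the image file into memory and merges them.
6>. A new image file, fsimage.chkpoint, is generated.
7>. fsimage.chkpoint is copied to the NameNode.
8>. The NameNode renames fsimage.chkpoint to fsimage.

2>. The NameNode and SecondaryNameNode mechanism in detail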

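Fsimage:

The file produced by serializing the metadata held in NameNode memory.

Edits:

Records every operation by which clients update the metadata (the metadata can be computed from Edits).

If the figure above already makes sense to you, you can skip this part; if not, read the following carefully:

1>. When the NameNode starts, it first rolls Edits and creates an empty edits.inprogress, then loads Edits and Fsimage into memory. At that point NameNode memory holds the latest metadata.

2>. Clients start sending the NameNode requests that add, delete, or modify metadata. Each of these operations is first recorded in edits.inprogress (queries are not recorded in Edits, because they do not change the metadata), so if the NameNode goes down at this point, it can read the operations back from Edits after a restart. The NameNode then applies the add, delete, or modify operation in memory.

3>. Because more and more operations are recorded in Edits, the Edits files keep growing and loading them at NameNode startup becomes slow, so Edits and Fsimage need to be merged. (Merging means loading Edits and Fsimage into memory and replaying the operations in Edits one by one, which produces a new Fsimage.)

4>. The SecondaryNameNode's job is to help the NameNode merge Edits and Fsimage.

5>. The SecondaryNameNode first asks the NameNode whether a CheckPoint is needed, and the NameNode's answer is returned directly. A CheckPoint is triggered when either of two conditions holds: the checkpoint interval has elapsed, or the number of transactions recorded in Edits has reached the configured limit.

6>. To perform the CheckPoint, the SecondaryNameNode first has the NameNode roll Edits and create an empty edits.inprogress. The roll marks a boundary in Edits: from then on all new operations are written to edits.inprogress, while the Edits that have not yet been merged and the Fsimage are copied to the SecondaryNameNode's local disk. The SecondaryNameNode loads the copied Edits and Fsimage into memory, merges them, and generates fsimage.chkpoint. It then copies fsimage.chkpoint to the NameNode, where it is renamed to Fsimage and replaces the old Fsimage.

7>. At startup the NameNode then only needs to load the Fsimage and the Edits that have not been merged yet, because the metadata from the merged Edits is already recorded in the Fsimage.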
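To make steps 5 to 7 concrete, here is a small simulation of one CheckPoint. It is only a sketch under simplifying assumptions: `ToyNameNode`, `replay` and `secondary_checkpoint` are hypothetical names, files are modelled as Python lists and dicts, and merged segments are simply dropped (a real NameNode keeps old edits files on disk for a while).

```python
def replay(image, ops):
    """Replay logged operations on top of an image and return the result."""
    merged = dict(image)
    for kind, path in ops:
        if kind == "mkdir":
            merged[path] = "dir"
        elif kind == "delete":
            merged.pop(path, None)
    return merged


class ToyNameNode:
    """Hypothetical NameNode holding an image and its edit segments."""

    def __init__(self):
        self.fsimage = {"/": "dir"}  # last checkpoint
        self.finalized = []          # rolled segments not merged yet
        self.inprogress = []         # plays the role of edits.inprogress

    def log(self, kind, path):
        self.inprogress.append((kind, path))

    def roll_edits(self):
        # Close the current segment and open a new, empty one.
        self.finalized.append(self.inprogress)
        self.inprogress = []


def secondary_checkpoint(nn):
    """One CheckPoint as described in step 6, on the toy model."""
    nn.roll_edits()                                  # NameNode rolls Edits
    image_copy = dict(nn.fsimage)                    # copy Fsimage
    segments_copy = [list(s) for s in nn.finalized]  # copy unmerged Edits
    merged = image_copy
    for segment in segments_copy:                    # merge in memory
        merged = replay(merged, segment)
    fsimage_chkpoint = merged                        # fsimage.chkpoint
    nn.fsimage = fsimage_chkpoint                    # copy back and rename
    nn.finalized = []                                # already in the image


nn = ToyNameNode()
nn.log("mkdir", "/a")
nn.log("mkdir", "/b")
nn.log("delete", "/a")
secondary_checkpoint(nn)
nn.log("mkdir", "/c")                                # arrives after the roll

# Step 7: a restart only needs the new image plus the unmerged edits.
state_after_restart = replay(nn.fsimage, nn.inprogress)
print(sorted(state_after_restart))
```

Operations logged after the roll land in the new in-progress segment, so the merge never has to stop client writes.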

For a fully distributed Hadoop deployment, see: Apache Hadoop 2.9.2 fully distributed deployment (HDFS).

3>. Setting the checkpoint timing parameters

[hdfs-default.xml]
<configuration>
  .....
  <property>
    <name>dfs.namenode.checkpoint.period</name>
    <value>3600</value>
  </property>
  .....
</configuration>

<property>
  <name>dfs.namenode.checkpoint.txns</name>
  <value>1000000</value>
  <description>Number of operations (transactions)</description>
</property>
<property>
  <name>dfs.namenode.checkpoint.check.period</name>
  <value>60</value>
  <description>Check the number of operations once a minute</description>
</property>
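How the three values interact can be written down in a few lines. This is only an illustration of the rule "period elapsed OR transaction limit reached", using the values shown above as defaults; the function name `should_checkpoint` and its arguments are assumptions, not Hadoop API.

```python
CHECKPOINT_PERIOD_S = 3600     # dfs.namenode.checkpoint.period
CHECKPOINT_TXNS = 1_000_000    # dfs.namenode.checkpoint.txns
CHECK_PERIOD_S = 60            # dfs.namenode.checkpoint.check.period


def should_checkpoint(seconds_since_last, uncheckpointed_txns,
                      period=CHECKPOINT_PERIOD_S, txns=CHECKPOINT_TXNS):
    """Return True when either trigger condition is met."""
    return seconds_since_last >= period or uncheckpointed_txns >= txns


# The condition is evaluated once per CHECK_PERIOD_S seconds.
print(should_checkpoint(3600, 12))        # an hour has passed
print(should_checkpoint(120, 1_000_000))  # busy cluster, limit reached
print(should_checkpoint(120, 12))         # neither condition holds
```

With these values, a quiet cluster checkpoints once an hour, while a very busy one can checkpoint earlier, at most one minute after it crosses one million transactions.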

III. Fsimage and Edits explained

1>. The Fsimage and Edits concepts

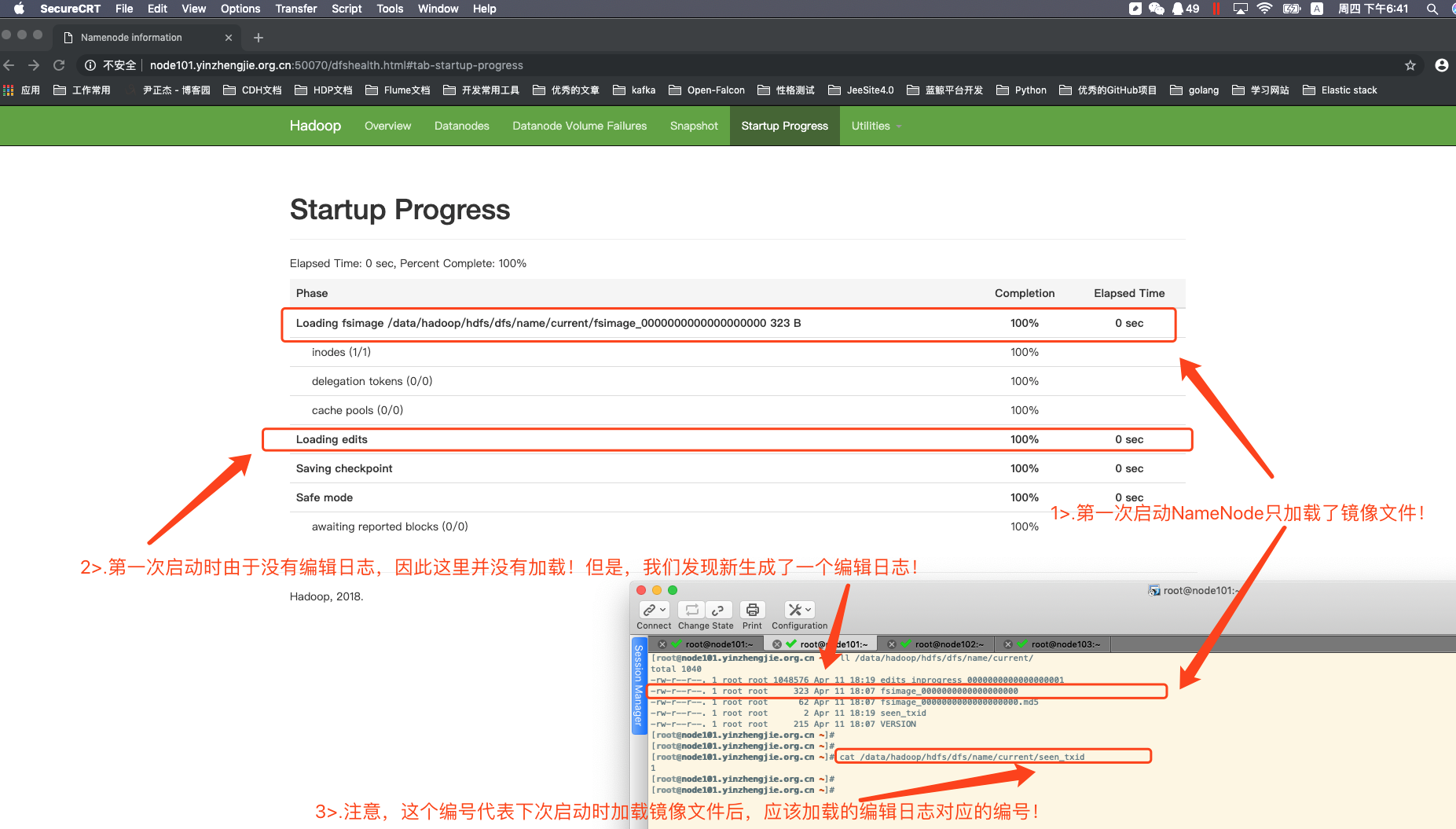

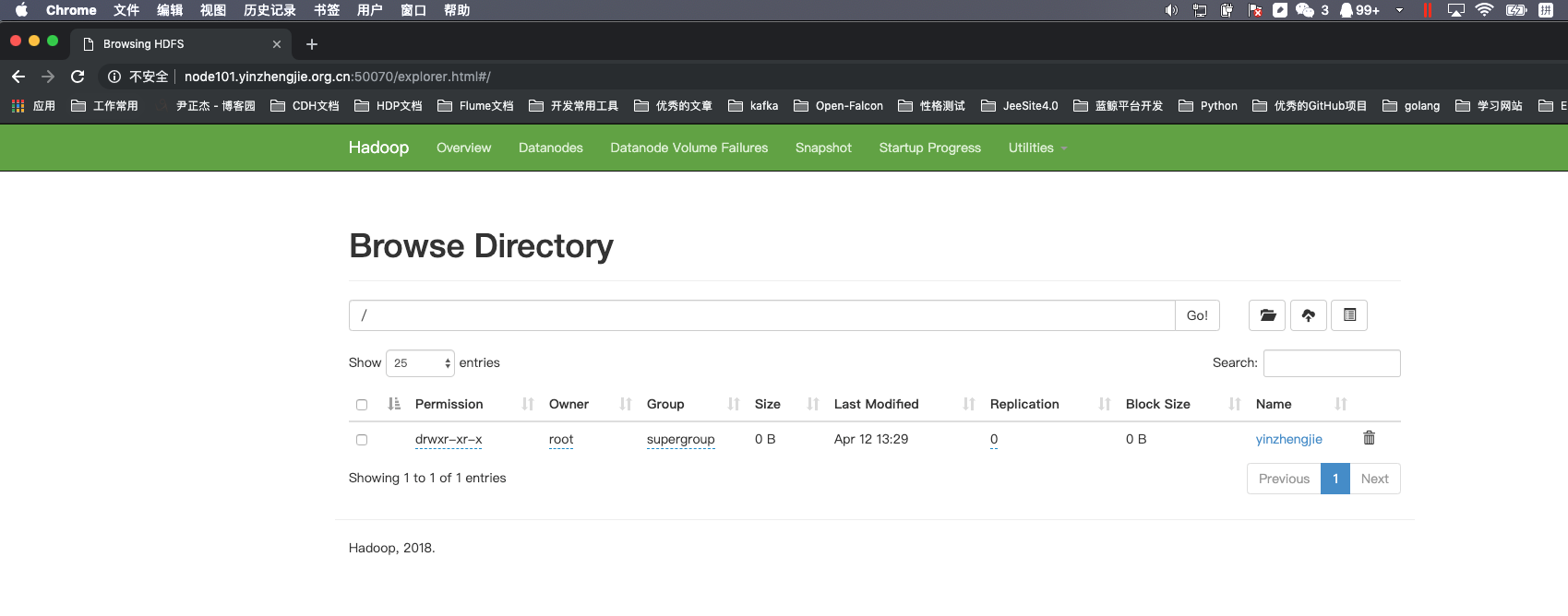

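After the NameNode is formatted, the following files are created in the data directory we configured ("${hadoop.tmp.dir}/dfs/name/current/"), as shown in the figure:

I. Fsimage file

A persistent checkpoint of the HDFS file system metadata. It contains the serialized information of all directory and file inodes in the HDFS file system.

II. Edits file

Stores every update operation on the HDFS file system. All write operations performed by file system clients are first recorded in the Edits file.

III. seen_txid file

The file holds a single number, which is the number of the last edits_ file.

IV. VERSION

Records the version information of the cluster, including the namespace ID, cluster ID, cTime property, storage type, block pool ID, and layout version. No need to hurry: the section on the NameNode version number below explains the contents of this file in detail.

Tips:

Every time the NameNode starts, it reads the Fsimage file into memory and replays the update operations in Edits, so that the metadata in memory is up to date and in sync. You can think of this as the NameNode merging the Fsimage and Edits files at startup.

However, if you format the NameNode when data already exists, then sorry: all of the previous data is at risk of being lost. The first start after formatting does not load any edit log, and the corresponding record can be found in the NameNode Web UI.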
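Which files does a start actually need? The sketch below works it out from file names alone. It assumes the naming pattern visible in the directory listings of this post (`fsimage_<txid>`, `edits_<first>-<last>`, `edits_inprogress_<first>`); the function `startup_plan` is a made-up helper, not part of Hadoop.

```python
import re

FSIMAGE_RE = re.compile(r"^fsimage_(\d+)$")
EDITS_RE = re.compile(r"^edits_(\d+)-(\d+)$")
INPROGRESS_RE = re.compile(r"^edits_inprogress_(\d+)$")


def startup_plan(names):
    """Return (txid of newest fsimage, edits files still to be replayed)."""
    image_txids = []
    for name in names:
        match = FSIMAGE_RE.match(name)
        if match:
            image_txids.append(int(match.group(1)))
    latest = max(image_txids)

    to_replay = []
    for name in sorted(names):
        finalized = EDITS_RE.match(name)
        if finalized and int(finalized.group(1)) > latest:
            to_replay.append(name)      # segment newer than the image
        elif INPROGRESS_RE.match(name):
            to_replay.append(name)      # the segment being written
    return latest, to_replay


inprogress = "edits_inprogress_0000000000000000037"
names = [
    "edits_0000000000000000033-0000000000000000034",
    "edits_0000000000000000035-0000000000000000036",
    inprogress,
    "fsimage_0000000000000000034",
    "fsimage_0000000000000000034.md5",
    "fsimage_0000000000000000036",
    "fsimage_0000000000000000036.md5",
    "seen_txid",
    "VERSION",
]
latest, to_replay = startup_plan(names)
print(latest, to_replay)
```

For this sample the newest image already covers transactions up to 36, so only the in-progress segment is left to replay.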

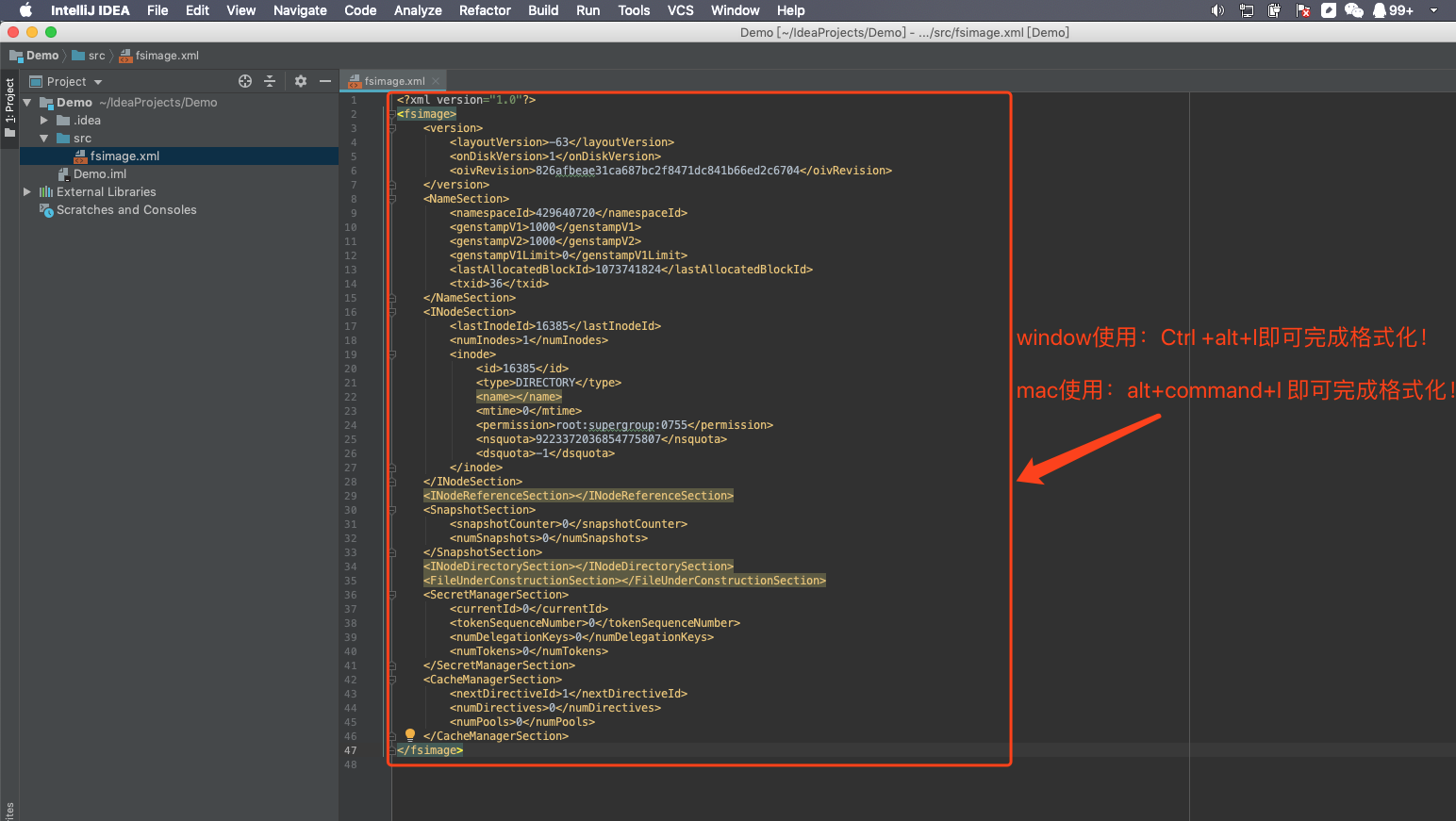

2>.使用oiv命令查看镜像(fsimage)文件

[root@node101.yinzhengjie.org.cn ~]# hdfs oiv Usage: bin/hdfs oiv [OPTIONS] -i INPUTFILE -o OUTPUTFILE Offline Image Viewer View a Hadoop fsimage INPUTFILE using the specified PROCESSOR, saving the results in OUTPUTFILE. The oiv utility will attempt to parse correctly formed image files and will abort fail with mal-formed image files. The tool works offline and does not require a running cluster in order to process an image file. The following image processors are available: * XML: This processor creates an XML document with all elements of the fsimage enumerated, suitable for further analysis by XML tools. * ReverseXML: This processor takes an XML file and creates a binary fsimage containing the same elements. * FileDistribution: This processor analyzes the file size distribution in the image. -maxSize specifies the range [0, maxSize] of file sizes to be analyzed (128GB by default). -step defines the granularity of the distribution. (2MB by default) -format formats the output result in a human-readable fashion rather than a number of bytes. (false by default) * Web: Run a viewer to expose read-only WebHDFS API. -addr specifies the address to listen. (localhost:5978 by default) * Delimited (experimental): Generate a text file with all of the elements common to both inodes and inodes-under-construction, separated by a delimiter. The default delimiter is , though this may be changed via the -delimiter argument. Required command line arguments: -i,--inputFile <arg> FSImage or XML file to process. Optional command line arguments: -o,--outputFile <arg> Name of output file. If the specified file exists, it will be overwritten. (output to stdout by default) If the input file was an XML file, we will also create an <outputFile>.md5 file. -p,--processor <arg> Select which type of processor to apply against image file. (XML|FileDistribution| ReverseXML|Web|Delimited) The default is Web. -delimiter <arg> Delimiting string to use with Delimited processor. 
-t,--temp <arg> Use temporary dir to cache intermediate result to generate Delimited outputs. If not set, Delimited processor constructs the namespace in memory before outputting text. -h,--help Display usage information and exit [root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/ total 1120 -rw-r--r--. 1 root root 42 Apr 11 18:54 edits_0000000000000000001-0000000000000000002 -rw-r--r--. 1 root root 42 Apr 11 19:54 edits_0000000000000000003-0000000000000000004 -rw-r--r--. 1 root root 42 Apr 11 20:54 edits_0000000000000000005-0000000000000000006 -rw-r--r--. 1 root root 42 Apr 11 21:54 edits_0000000000000000007-0000000000000000008 -rw-r--r--. 1 root root 42 Apr 11 22:54 edits_0000000000000000009-0000000000000000010 -rw-r--r--. 1 root root 42 Apr 11 23:54 edits_0000000000000000011-0000000000000000012 -rw-r--r--. 1 root root 42 Apr 12 00:54 edits_0000000000000000013-0000000000000000014 -rw-r--r--. 1 root root 42 Apr 12 01:54 edits_0000000000000000015-0000000000000000016 -rw-r--r--. 1 root root 42 Apr 12 02:54 edits_0000000000000000017-0000000000000000018 -rw-r--r--. 1 root root 42 Apr 12 03:54 edits_0000000000000000019-0000000000000000020 -rw-r--r--. 1 root root 42 Apr 12 04:54 edits_0000000000000000021-0000000000000000022 -rw-r--r--. 1 root root 42 Apr 12 05:54 edits_0000000000000000023-0000000000000000024 -rw-r--r--. 1 root root 42 Apr 12 06:54 edits_0000000000000000025-0000000000000000026 -rw-r--r--. 1 root root 42 Apr 12 07:54 edits_0000000000000000027-0000000000000000028 -rw-r--r--. 1 root root 42 Apr 12 08:54 edits_0000000000000000029-0000000000000000030 -rw-r--r--. 1 root root 42 Apr 12 09:54 edits_0000000000000000031-0000000000000000032 -rw-r--r--. 1 root root 42 Apr 12 10:54 edits_0000000000000000033-0000000000000000034 -rw-r--r--. 1 root root 42 Apr 12 11:54 edits_0000000000000000035-0000000000000000036 -rw-r--r--. 1 root root 1048576 Apr 12 11:54 edits_inprogress_0000000000000000037 -rw-r--r--. 1 root root 323 Apr 12 10:54 fsimage_0000000000000000034 -rw-r--r--. 1 root root 62 Apr 12 10:54 fsimage_0000000000000000034.md5 -rw-r--r--. 1 root root 323 Apr 12 11:54 fsimage_0000000000000000036 -rw-r--r--. 
1 root root 62 Apr 12 11:54 fsimage_0000000000000000036.md5 -rw-r--r--. 1 root root 3 Apr 12 11:54 seen_txid -rw-r--r--. 1 root root 215 Apr 11 18:07 VERSION [root@node101.yinzhengjie.org.cn ~]#

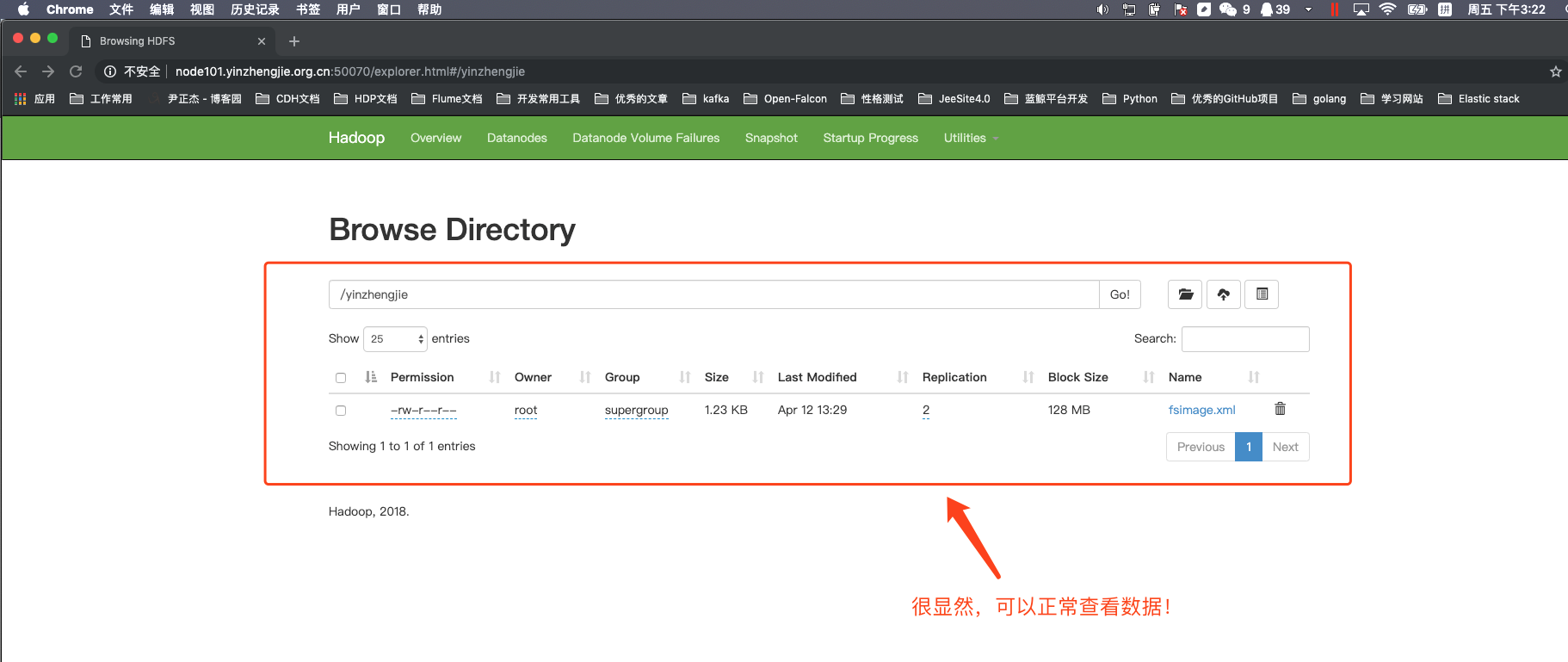

[root@node101.yinzhengjie.org.cn ~]# ll
total 0
[root@node101.yinzhengjie.org.cn ~]# hdfs oiv -p XML -i /data/hadoop/hdfs/dfs/name/current/fsimage_0000000000000000036 -o ./fsimage.xml
19/04/12 12:49:09 INFO offlineImageViewer.FSImageHandler: Loading 2 strings
[root@node101.yinzhengjie.org.cn ~]# ll
total 4
-rw-r--r--. 1 root root 1264 Apr 12 12:49 fsimage.xml
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# cat fsimage.xml
<?xml version="1.0"?>
<fsimage><version><layoutVersion>-63</layoutVersion><onDiskVersion>1</onDiskVersion><oivRevision>826afbeae31ca687bc2f8471dc841b66ed2c6704</oivRevision></version>
<NameSection><namespaceId>429640720</namespaceId><genstampV1>1000</genstampV1><genstampV2>1000</genstampV2><genstampV1Limit>0</genstampV1Limit><lastAllocatedBlockId>1073741824</lastAllocatedBlockId><txid>36</txid></NameSection>
<INodeSection><lastInodeId>16385</lastInodeId><numInodes>1</numInodes><inode><id>16385</id><type>DIRECTORY</type><name></name><mtime>0</mtime><permission>root:supergroup:0755</permission><nsquota>9223372036854775807</nsquota><dsquota>-1</dsquota></inode>
</INodeSection>
<INodeReferenceSection></INodeReferenceSection><SnapshotSection><snapshotCounter>0</snapshotCounter><numSnapshots>0</numSnapshots></SnapshotSection>
<INodeDirectorySection></INodeDirectorySection>
<FileUnderConstructionSection></FileUnderConstructionSection>
<SecretManagerSection><currentId>0</currentId><tokenSequenceNumber>0</tokenSequenceNumber><numDelegationKeys>0</numDelegationKeys><numTokens>0</numTokens></SecretManagerSection><CacheManagerSection><nextDirectiveId>1</nextDirectiveId><numDirectives>0</numDirectives><numPools>0</numPools></CacheManagerSection>
</fsimage>
[root@node101.yinzhengjie.org.cn ~]# sz fsimage.xml #Read directly on Linux, the file is hard to follow. Download it, open it in a development tool such as Eclipse or IDEA, and reformat it there.
rz
Starting zmodem transfer. Press Ctrl+C to cancel.
Transferring fsimage.xml...
100% 1 KB 1 KB/sec 00:00:01 0 Errors
[root@node101.yinzhengjie.org.cn ~]#
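If you would rather not download the file, the XML can also be summarized with a few lines of Python. This is a minimal sketch using only the standard library; `SAMPLE_FSIMAGE` is a trimmed copy of the output above, and `summarize_fsimage` is a helper name invented for this example.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the fsimage.xml shown above (only the parts used here).
SAMPLE_FSIMAGE = """<fsimage>
<NameSection><namespaceId>429640720</namespaceId><txid>36</txid></NameSection>
<INodeSection><lastInodeId>16385</lastInodeId><numInodes>1</numInodes>
<inode><id>16385</id><type>DIRECTORY</type><name></name><mtime>0</mtime>
<permission>root:supergroup:0755</permission></inode>
</INodeSection>
</fsimage>"""


def summarize_fsimage(xml_text):
    """Return the image's txid and a list of (inode id, type, name)."""
    root = ET.fromstring(xml_text)
    txid = int(root.findtext("NameSection/txid"))
    inodes = []
    for inode in root.iter("inode"):
        # The root directory has an empty <name>, so show it as "/".
        name = inode.findtext("name") or "/"
        inodes.append((int(inode.findtext("id")), inode.findtext("type"), name))
    return txid, inodes


txid, inodes = summarize_fsimage(SAMPLE_FSIMAGE)
print(txid)
for inode_id, inode_type, name in inodes:
    print(inode_id, inode_type, name)
```

To run it against a real export, read the file produced by `hdfs oiv -p XML` and pass its text to `summarize_fsimage`. The `txid` matches the number in the image's file name, fsimage_0000000000000000036.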

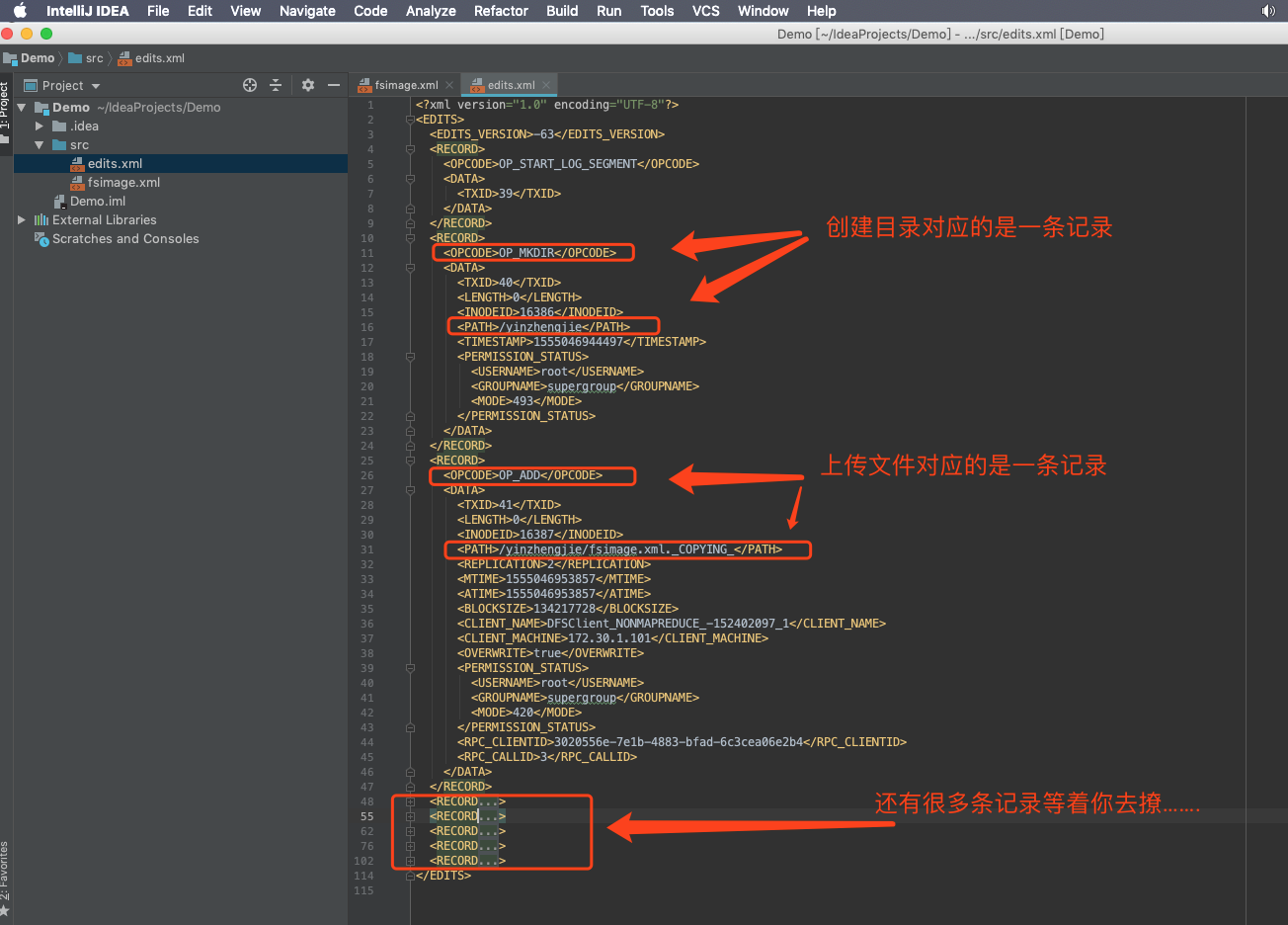

3>.使用oev查看edits文件

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/ total 1124 -rw-r--r--. 1 root root 42 Apr 11 18:54 edits_0000000000000000001-0000000000000000002 -rw-r--r--. 1 root root 42 Apr 11 19:54 edits_0000000000000000003-0000000000000000004 -rw-r--r--. 1 root root 42 Apr 11 20:54 edits_0000000000000000005-0000000000000000006 -rw-r--r--. 1 root root 42 Apr 11 21:54 edits_0000000000000000007-0000000000000000008 -rw-r--r--. 1 root root 42 Apr 11 22:54 edits_0000000000000000009-0000000000000000010 -rw-r--r--. 1 root root 42 Apr 11 23:54 edits_0000000000000000011-0000000000000000012 -rw-r--r--. 1 root root 42 Apr 12 00:54 edits_0000000000000000013-0000000000000000014 -rw-r--r--. 1 root root 42 Apr 12 01:54 edits_0000000000000000015-0000000000000000016 -rw-r--r--. 1 root root 42 Apr 12 02:54 edits_0000000000000000017-0000000000000000018 -rw-r--r--. 1 root root 42 Apr 12 03:54 edits_0000000000000000019-0000000000000000020 -rw-r--r--. 1 root root 42 Apr 12 04:54 edits_0000000000000000021-0000000000000000022 -rw-r--r--. 1 root root 42 Apr 12 05:54 edits_0000000000000000023-0000000000000000024 -rw-r--r--. 1 root root 42 Apr 12 06:54 edits_0000000000000000025-0000000000000000026 -rw-r--r--. 1 root root 42 Apr 12 07:54 edits_0000000000000000027-0000000000000000028 -rw-r--r--. 1 root root 42 Apr 12 08:54 edits_0000000000000000029-0000000000000000030 -rw-r--r--. 1 root root 42 Apr 12 09:54 edits_0000000000000000031-0000000000000000032 -rw-r--r--. 1 root root 42 Apr 12 10:54 edits_0000000000000000033-0000000000000000034 -rw-r--r--. 1 root root 42 Apr 12 11:54 edits_0000000000000000035-0000000000000000036 -rw-r--r--. 1 root root 42 Apr 12 12:54 edits_0000000000000000037-0000000000000000038 -rw-r--r--. 1 root root 1048576 Apr 12 12:54 edits_inprogress_0000000000000000039 -rw-r--r--. 1 root root 323 Apr 12 11:54 fsimage_0000000000000000036 -rw-r--r--. 1 root root 62 Apr 12 11:54 fsimage_0000000000000000036.md5 -rw-r--r--. 
1 root root 323 Apr 12 12:54 fsimage_0000000000000000038 -rw-r--r--. 1 root root 62 Apr 12 12:54 fsimage_0000000000000000038.md5 -rw-r--r--. 1 root root 3 Apr 12 12:54 seen_txid -rw-r--r--. 1 root root 215 Apr 11 18:07 VERSION [root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs oev Usage: bin/hdfs oev [OPTIONS] -i INPUT_FILE -o OUTPUT_FILE Offline edits viewer Parse a Hadoop edits log file INPUT_FILE and save results in OUTPUT_FILE. Required command line arguments: -i,--inputFile <arg> edits file to process, xml (case insensitive) extension means XML format, any other filename means binary format. XML/Binary format input file is not allowed to be processed by the same type processor. -o,--outputFile <arg> Name of output file. If the specified file exists, it will be overwritten, format of the file is determined by -p option Optional command line arguments: -p,--processor <arg> Select which type of processor to apply against image file, currently supported processors are: binary (native binary format that Hadoop uses), xml (default, XML format), stats (prints statistics about edits file) -h,--help Display usage information and exit -f,--fix-txids Renumber the transaction IDs in the input, so that there are no gaps or invalid transaction IDs. -r,--recover When reading binary edit logs, use recovery mode. This will give you the chance to skip corrupt parts of the edit log. -v,--verbose More verbose output, prints the input and output filenames, for processors that write to a file, also output to screen. On large image files this will dramatically increase processing time (default is false). Generic options supported are: -conf <configuration file> specify an application configuration file -D <property=value> define a value for a given property -fs <file:///|hdfs://namenode:port> specify default filesystem URL to use, overrides 'fs.defaultFS' property from configurations. 
-jt <local|resourcemanager:port> specify a ResourceManager -files <file1,...> specify a comma-separated list of files to be copied to the map reduce cluster -libjars <jar1,...> specify a comma-separated list of jar files to be included in the classpath -archives <archive1,...> specify a comma-separated list of archives to be unarchived on the compute machines The general command line syntax is: command [genericOptions] [commandOptions] [root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll
total 4
-rw-r--r--. 1 root root 1264 Apr 12 12:49 fsimage.xml
[root@node101.yinzhengjie.org.cn ~]# hdfs oev -p XML -i /data/hadoop/hdfs/dfs/name/current/edits_inprogress_0000000000000000039 -o ./edits.xml
[root@node101.yinzhengjie.org.cn ~]# ll
total 8
-rw-r--r--. 1 root root 3124 Apr 12 13:31 edits.xml
-rw-r--r--. 1 root root 1264 Apr 12 12:49 fsimage.xml
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# cat edits.xml <?xml version="1.0" encoding="UTF-8"?> <EDITS> <EDITS_VERSION>-63</EDITS_VERSION> <RECORD> <OPCODE>OP_START_LOG_SEGMENT</OPCODE> <DATA> <TXID>39</TXID> </DATA> </RECORD> <RECORD> <OPCODE>OP_MKDIR</OPCODE> <DATA> <TXID>40</TXID> <LENGTH>0</LENGTH> <INODEID>16386</INODEID> <PATH>/yinzhengjie</PATH> <TIMESTAMP>1555046944497</TIMESTAMP> <PERMISSION_STATUS> <USERNAME>root</USERNAME> <GROUPNAME>supergroup</GROUPNAME> <MODE>493</MODE> </PERMISSION_STATUS> </DATA> </RECORD> <RECORD> <OPCODE>OP_ADD</OPCODE> <DATA> <TXID>41</TXID> <LENGTH>0</LENGTH> <INODEID>16387</INODEID> <PATH>/yinzhengjie/fsimage.xml._COPYING_</PATH> <REPLICATION>2</REPLICATION> <MTIME>1555046953857</MTIME> <ATIME>1555046953857</ATIME> <BLOCKSIZE>134217728</BLOCKSIZE> <CLIENT_NAME>DFSClient_NONMAPREDUCE_-152402097_1</CLIENT_NAME> <CLIENT_MACHINE>172.30.1.101</CLIENT_MACHINE> <OVERWRITE>true</OVERWRITE> <PERMISSION_STATUS> <USERNAME>root</USERNAME> <GROUPNAME>supergroup</GROUPNAME> <MODE>420</MODE> </PERMISSION_STATUS> <RPC_CLIENTID>3020556e-7e1b-4883-bfad-6c3cea06e2b4</RPC_CLIENTID> <RPC_CALLID>3</RPC_CALLID> </DATA> </RECORD> <RECORD> <OPCODE>OP_ALLOCATE_BLOCK_ID</OPCODE> <DATA> <TXID>42</TXID> <BLOCK_ID>1073741825</BLOCK_ID> </DATA> </RECORD> <RECORD> <OPCODE>OP_SET_GENSTAMP_V2</OPCODE> <DATA> <TXID>43</TXID> <GENSTAMPV2>1001</GENSTAMPV2> </DATA> </RECORD> <RECORD> <OPCODE>OP_ADD_BLOCK</OPCODE> <DATA> <TXID>44</TXID> <PATH>/yinzhengjie/fsimage.xml._COPYING_</PATH> <BLOCK> <BLOCK_ID>1073741825</BLOCK_ID> <NUM_BYTES>0</NUM_BYTES> <GENSTAMP>1001</GENSTAMP> </BLOCK> <RPC_CLIENTID></RPC_CLIENTID> <RPC_CALLID>-2</RPC_CALLID> </DATA> </RECORD> <RECORD> <OPCODE>OP_CLOSE</OPCODE> <DATA> <TXID>45</TXID> <LENGTH>0</LENGTH> <INODEID>0</INODEID> <PATH>/yinzhengjie/fsimage.xml._COPYING_</PATH> <REPLICATION>2</REPLICATION> <MTIME>1555046954585</MTIME> <ATIME>1555046953857</ATIME> <BLOCKSIZE>134217728</BLOCKSIZE> <CLIENT_NAME></CLIENT_NAME> 
<CLIENT_MACHINE></CLIENT_MACHINE> <OVERWRITE>false</OVERWRITE> <BLOCK> <BLOCK_ID>1073741825</BLOCK_ID> <NUM_BYTES>1264</NUM_BYTES> <GENSTAMP>1001</GENSTAMP> </BLOCK> <PERMISSION_STATUS> <USERNAME>root</USERNAME> <GROUPNAME>supergroup</GROUPNAME> <MODE>420</MODE> </PERMISSION_STATUS> </DATA> </RECORD> <RECORD> <OPCODE>OP_RENAME_OLD</OPCODE> <DATA> <TXID>46</TXID> <LENGTH>0</LENGTH> <SRC>/yinzhengjie/fsimage.xml._COPYING_</SRC> <DST>/yinzhengjie/fsimage.xml</DST> <TIMESTAMP>1555046954593</TIMESTAMP> <RPC_CLIENTID>3020556e-7e1b-4883-bfad-6c3cea06e2b4</RPC_CLIENTID> <RPC_CALLID>9</RPC_CALLID> </DATA> </RECORD> </EDITS> [root@node101.yinzhengjie.org.cn ~]#
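The records in edits.xml are easier to scan as a table of transaction ID, opcode, and path. The sketch below does that with the standard library; `SAMPLE_EDITS` is a trimmed copy of three records from the output above and `list_ops` is a made-up helper. It also converts the decimal `MODE` value to the familiar octal form.

```python
import xml.etree.ElementTree as ET

# Three records trimmed from the edits.xml shown above.
SAMPLE_EDITS = """<EDITS>
<EDITS_VERSION>-63</EDITS_VERSION>
<RECORD><OPCODE>OP_START_LOG_SEGMENT</OPCODE><DATA><TXID>39</TXID></DATA></RECORD>
<RECORD><OPCODE>OP_MKDIR</OPCODE><DATA><TXID>40</TXID><PATH>/yinzhengjie</PATH>
<PERMISSION_STATUS><USERNAME>root</USERNAME><GROUPNAME>supergroup</GROUPNAME>
<MODE>493</MODE></PERMISSION_STATUS></DATA></RECORD>
<RECORD><OPCODE>OP_RENAME_OLD</OPCODE><DATA><TXID>46</TXID>
<SRC>/yinzhengjie/fsimage.xml._COPYING_</SRC><DST>/yinzhengjie/fsimage.xml</DST>
</DATA></RECORD>
</EDITS>"""


def list_ops(xml_text):
    """Return (txid, opcode, path, octal mode) for every RECORD."""
    rows = []
    for record in ET.fromstring(xml_text).iter("RECORD"):
        data = record.find("DATA")
        mode = data.findtext("PERMISSION_STATUS/MODE")
        rows.append((
            int(data.findtext("TXID")),
            record.findtext("OPCODE"),
            data.findtext("PATH"),                      # None if absent
            oct(int(mode)) if mode is not None else None,
        ))
    return rows


ops = list_ops(SAMPLE_EDITS)
for row in ops:
    print(row)
```

`MODE` 493 is simply 0755 written in decimal, and the 420 seen in the `OP_ADD` record is 0644.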

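4>. Rolling the edit log (edits)

Apart from the roll performed as part of every CheckPoint, there are only two ways to trigger an edit log roll yourself: restart the HDFS cluster, or roll the edit log manually. Rolling it manually is simple and takes a single command: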

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/
total 3144
-rw-r--r--. 1 root root 42 Apr 12 18:53 edits_0000000000000000001-0000000000000000002
-rw-r--r--. 1 root root 42 Apr 12 18:53 edits_0000000000000000003-0000000000000000004
-rw-r--r--. 1 root root 1048576 Apr 12 18:53 edits_0000000000000000005-0000000000000000005
-rw-r--r--. 1 root root 42 Apr 12 18:56 edits_0000000000000000006-0000000000000000007
-rw-r--r--. 1 root root 42 Apr 12 18:56 edits_0000000000000000008-0000000000000000009
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000010-0000000000000000011
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000012-0000000000000000013
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000014-0000000000000000015
-rw-r--r--. 1 root root 42 Apr 12 18:58 edits_0000000000000000016-0000000000000000017
-rw-r--r--. 1 root root 42 Apr 12 18:58 edits_0000000000000000018-0000000000000000019
-rw-r--r--. 1 root root 42 Apr 12 18:59 edits_0000000000000000020-0000000000000000021
-rw-r--r--. 1 root root 42 Apr 12 18:59 edits_0000000000000000022-0000000000000000023
-rw-r--r--. 1 root root 42 Apr 12 19:00 edits_0000000000000000024-0000000000000000025
-rw-r--r--. 1 root root 1048576 Apr 12 19:00 edits_0000000000000000026-0000000000000000026
-rw-r--r--. 1 root root 1048576 Apr 12 19:00 edits_inprogress_0000000000000000027 ------->this is the edit log currently being written.
-rw-r--r--. 1 root root 323 Apr 12 19:00 fsimage_0000000000000000025
-rw-r--r--. 1 root root 62 Apr 12 19:00 fsimage_0000000000000000025.md5
-rw-r--r--. 1 root root 323 Apr 12 19:00 fsimage_0000000000000000026
-rw-r--r--. 1 root root 62 Apr 12 19:00 fsimage_0000000000000000026.md5
-rw-r--r--. 1 root root 3 Apr 12 19:00 seen_txid
-rw-r--r--. 1 root root 217 Apr 12 19:00 VERSION
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -rollEdits
Successfully rolled edit logs.
New segment starts at txid 31 -------->note: this tells us the number of the newly created edit log!
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/
total 3152
-rw-r--r--. 1 root root 42 Apr 12 18:53 edits_0000000000000000001-0000000000000000002
-rw-r--r--. 1 root root 42 Apr 12 18:53 edits_0000000000000000003-0000000000000000004
-rw-r--r--. 1 root root 1048576 Apr 12 18:53 edits_0000000000000000005-0000000000000000005
-rw-r--r--. 1 root root 42 Apr 12 18:56 edits_0000000000000000006-0000000000000000007
-rw-r--r--. 1 root root 42 Apr 12 18:56 edits_0000000000000000008-0000000000000000009
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000010-0000000000000000011
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000012-0000000000000000013
-rw-r--r--. 1 root root 42 Apr 12 18:57 edits_0000000000000000014-0000000000000000015
-rw-r--r--. 1 root root 42 Apr 12 18:58 edits_0000000000000000016-0000000000000000017
-rw-r--r--. 1 root root 42 Apr 12 18:58 edits_0000000000000000018-0000000000000000019
-rw-r--r--. 1 root root 42 Apr 12 18:59 edits_0000000000000000020-0000000000000000021
-rw-r--r--. 1 root root 42 Apr 12 18:59 edits_0000000000000000022-0000000000000000023
-rw-r--r--. 1 root root 42 Apr 12 19:00 edits_0000000000000000024-0000000000000000025
-rw-r--r--. 1 root root 1048576 Apr 12 19:00 edits_0000000000000000026-0000000000000000026
-rw-r--r--. 1 root root 42 Apr 12 19:01 edits_0000000000000000027-0000000000000000028
-rw-r--r--. 1 root root 42 Apr 12 19:01 edits_0000000000000000029-0000000000000000030
-rw-r--r--. 1 root root 1048576 Apr 12 19:01 edits_inprogress_0000000000000000031 ------>after the roll: remember the number of the newly created edit log?
-rw-r--r--. 1 root root 323 Apr 12 19:00 fsimage_0000000000000000026
-rw-r--r--. 1 root root 62 Apr 12 19:00 fsimage_0000000000000000026.md5
-rw-r--r--. 1 root root 323 Apr 12 19:01 fsimage_0000000000000000028
-rw-r--r--. 1 root root 62 Apr 12 19:01 fsimage_0000000000000000028.md5
-rw-r--r--. 1 root root 3 Apr 12 19:01 seen_txid
-rw-r--r--. 1 root root 217 Apr 12 19:00 VERSION
[root@node101.yinzhengjie.org.cn ~]#

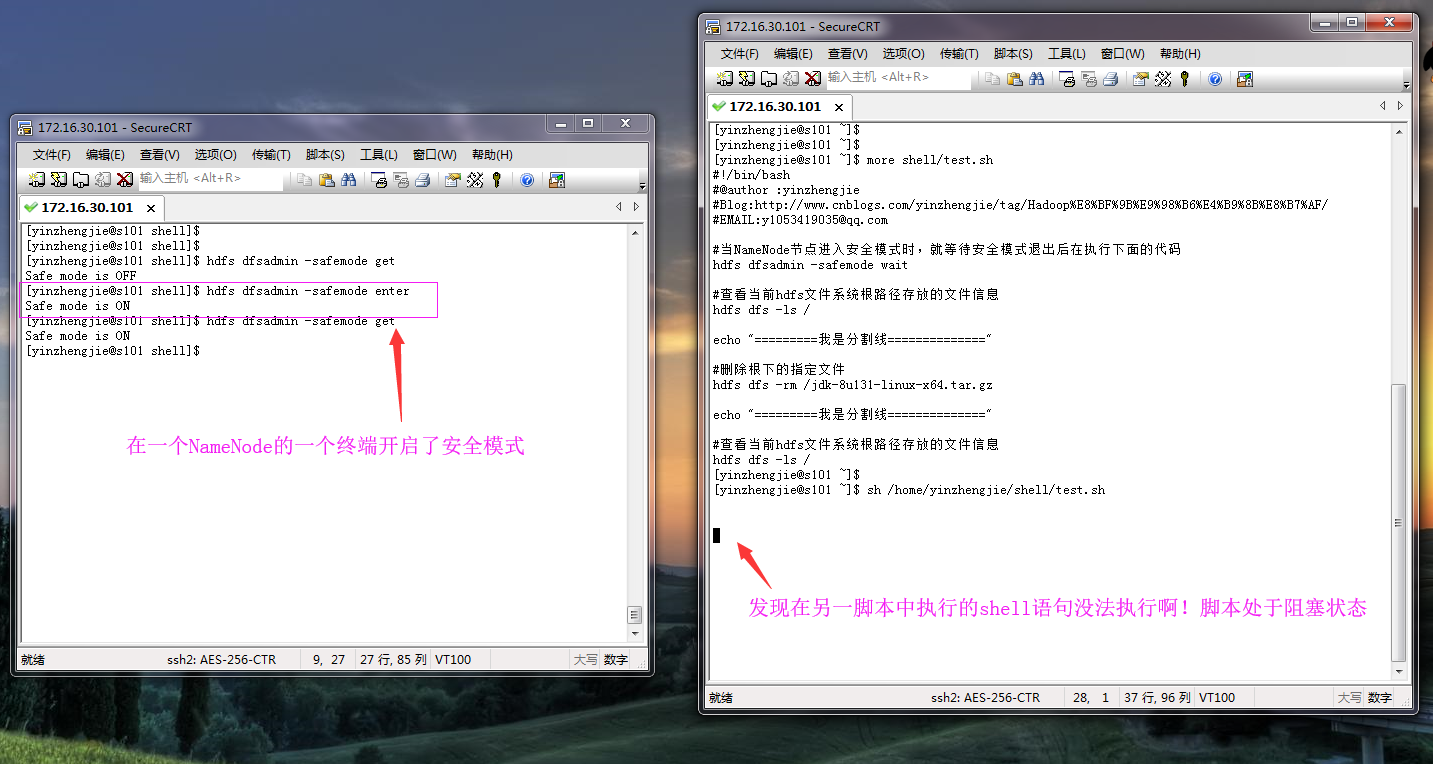

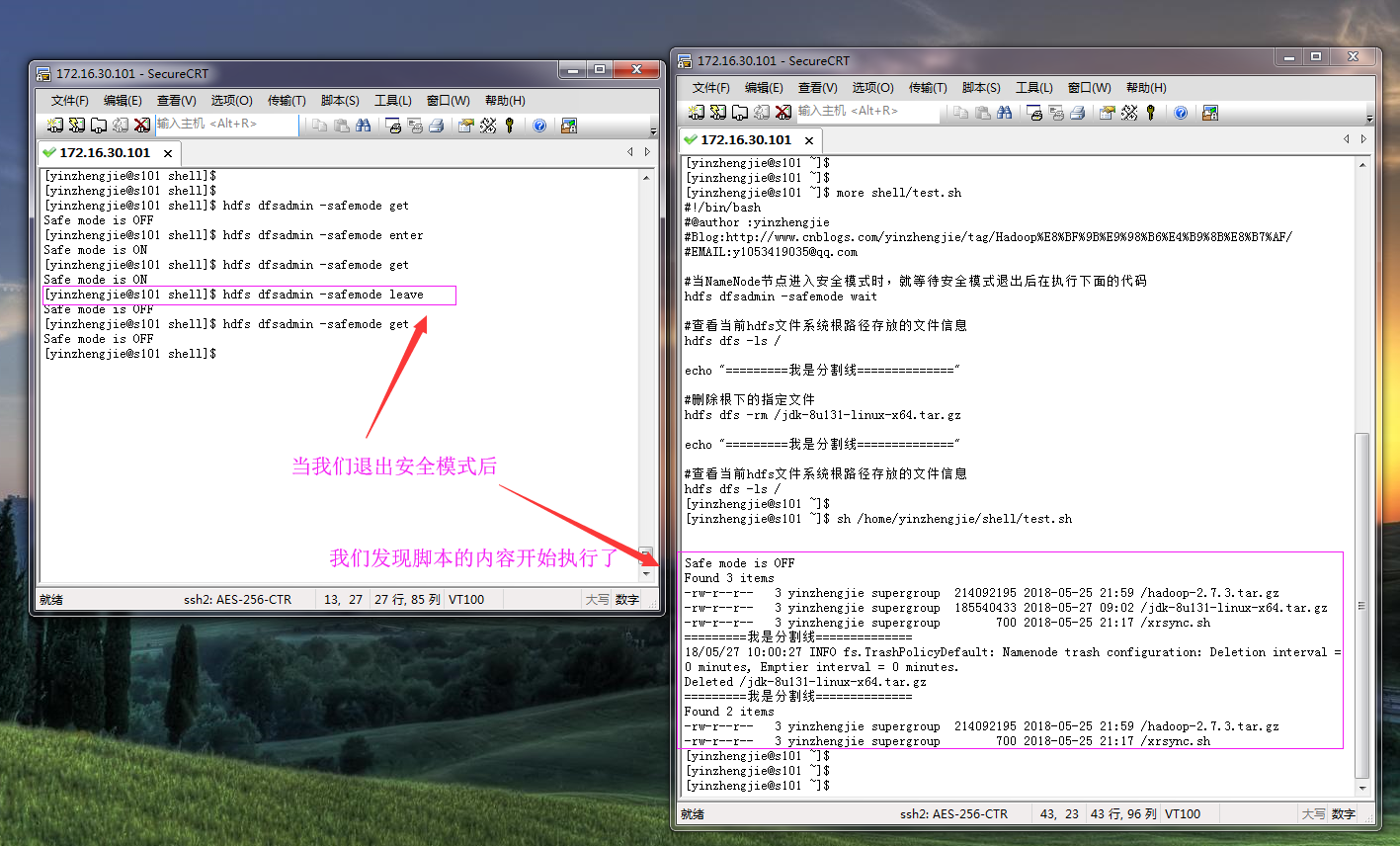

5>.滚动镜像文件(fsimage)

[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get
Safe mode is OFF
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get
Safe mode is OFF
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode enter
Safe mode is ON
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get
Safe mode is ON
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll -h /data/hadoop/hdfs/dfs/name/current/ | grep fsimage
-rw-r--r--. 1 root root 323 Apr 12 19:08 fsimage_0000000000000000056
-rw-r--r--. 1 root root 62 Apr 12 19:08 fsimage_0000000000000000056.md5
-rw-r--r--. 1 root root 323 Apr 12 19:08 fsimage_0000000000000000058 ------->the newest image file before the roll
-rw-r--r--. 1 root root 62 Apr 12 19:08 fsimage_0000000000000000058.md5
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -saveNamespace #roll the image file
Save namespace successful
[root@node101.yinzhengjie.org.cn ~]# ll -h /data/hadoop/hdfs/dfs/name/current/ | grep fsimage
-rw-r--r--. 1 root root 323 Apr 12 19:08 fsimage_0000000000000000058
-rw-r--r--. 1 root root 62 Apr 12 19:08 fsimage_0000000000000000058.md5
-rw-r--r--. 1 root root 323 Apr 12 19:11 fsimage_0000000000000000060 ------->the newest image file after the roll
-rw-r--r--. 1 root root 62 Apr 12 19:11 fsimage_0000000000000000060.md5
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get
Safe mode is ON
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode leave #leave safe mode
Safe mode is OFF
[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get
Safe mode is OFF
[root@node101.yinzhengjie.org.cn ~]#

Recommended reading: Hadoop's default web UI ports.

IV. NameNode version number

1>. Viewing the namenode version number

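[root@node101.yinzhengjie.org.cn ~]# cat /data/hadoop/hdfs/dfs/name/current/VERSION
#Thu Apr 11 18:07:23 CST 2019
namespaceID=429640720
clusterID=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c
cTime=1554977243283
storageType=NAME_NODE
blockpoolID=BP-681013498-172.30.1.101-1554977243283
layoutVersion=-63
[root@node101.yinzhengjie.org.cn ~]#

2>. The namenode version fields explained

I. namespaceID

With HDFS Federation there can be several NameNodes. Each NameNode has its own namespaceID, different from the others, and each manages its own set of blockpoolIDs.

II. clusterID

The cluster ID, globally unique.

III. cTime

Marks the creation time of the namenode's storage. It is often described as 0 for freshly formatted storage; in the output above, taken from a freshly formatted Hadoop 2.9.2 NameNode, it holds the format time in milliseconds instead. After a file system upgrade the value is updated to the new timestamp.

IV. storageType

Indicates that this storage directory contains the namenode's data structures.

V. blockpoolID

A block pool ID identifies one block pool and is globally unique across clusters. A unique ID is created and persisted when a new namespace is created (as part of the format process). Building a globally unique BlockPoolID during creation is more reliable than configuring one by hand. The NameNode persists the BlockPoolID to disk and loads and reuses it on later starts.

VI. layoutVersion

The layout version, a negative integer. It usually changes only when HDFS adds new features.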
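The VERSION file is a plain key=value file, so it is easy to read programmatically. The sketch below parses the sample shown earlier and converts `cTime` to a readable UTC time; `parse_version` is a hypothetical helper, and the text is a copy of the file contents from this post.

```python
from datetime import datetime, timezone

# Copy of the VERSION file shown above.
SAMPLE_VERSION = """#Thu Apr 11 18:07:23 CST 2019
namespaceID=429640720
clusterID=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c
cTime=1554977243283
storageType=NAME_NODE
blockpoolID=BP-681013498-172.30.1.101-1554977243283
layoutVersion=-63
"""


def parse_version(text):
    """Parse key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key] = value
    return props


props = parse_version(SAMPLE_VERSION)

# cTime is in milliseconds since the epoch.
created = datetime.fromtimestamp(int(props["cTime"]) // 1000, tz=timezone.utc)
created_text = created.strftime("%Y-%m-%d %H:%M:%S")
print(created_text)

# In this sample the block pool ID ends with the same timestamp.
bp_suffix = props["blockpoolID"].split("-")[-1]
print(bp_suffix == props["cTime"])
```

The UTC time printed here is eight hours behind the `CST` timestamp in the file's comment line, which is the same instant in China Standard Time.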

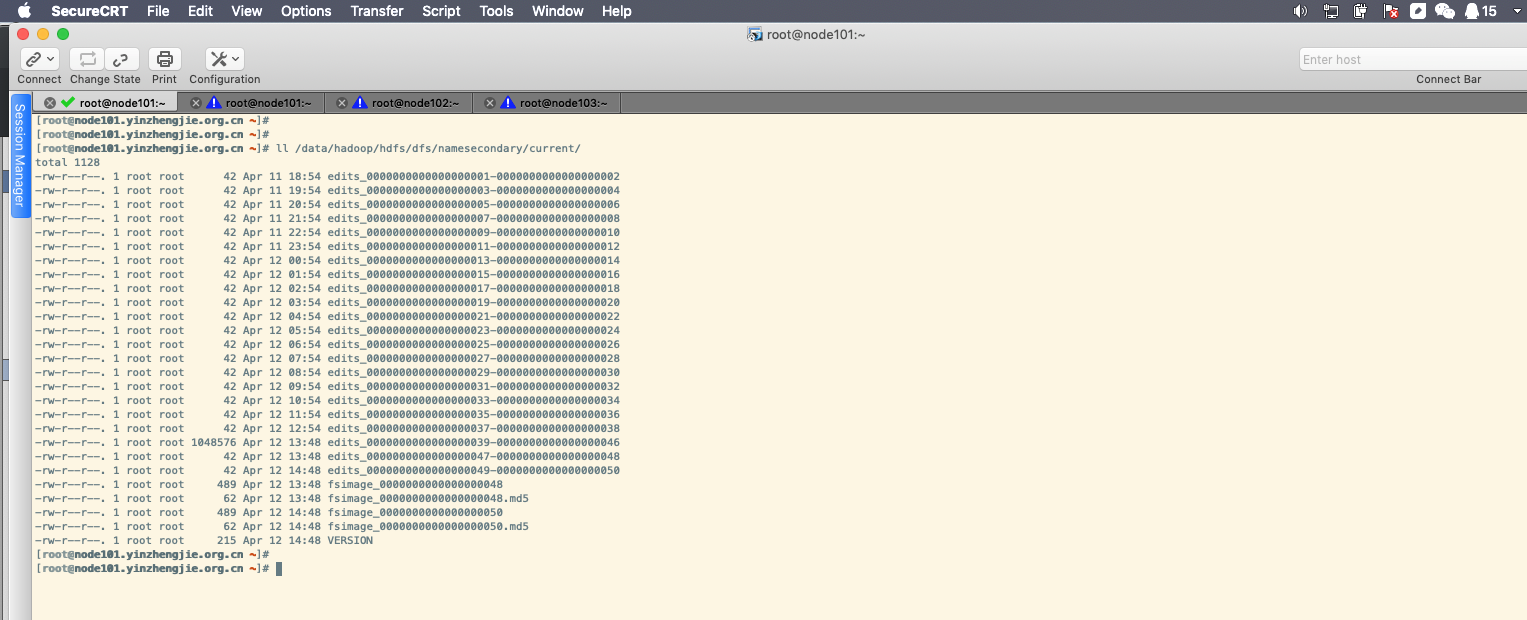

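V. SecondaryNameNode directory structure

The SecondaryNameNode is an auxiliary background daemon that monitors the state of HDFS and takes a snapshot of the HDFS metadata at regular intervals. Its directory structure can be seen in the data directory we configured ("${hadoop.tmp.dir}/dfs/namesecondary/current/"), as shown below: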

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/namesecondary/current/ total 1128 -rw-r--r--. 1 root root 42 Apr 11 18:54 edits_0000000000000000001-0000000000000000002 -rw-r--r--. 1 root root 42 Apr 11 19:54 edits_0000000000000000003-0000000000000000004 -rw-r--r--. 1 root root 42 Apr 11 20:54 edits_0000000000000000005-0000000000000000006 -rw-r--r--. 1 root root 42 Apr 11 21:54 edits_0000000000000000007-0000000000000000008 -rw-r--r--. 1 root root 42 Apr 11 22:54 edits_0000000000000000009-0000000000000000010 -rw-r--r--. 1 root root 42 Apr 11 23:54 edits_0000000000000000011-0000000000000000012 -rw-r--r--. 1 root root 42 Apr 12 00:54 edits_0000000000000000013-0000000000000000014 -rw-r--r--. 1 root root 42 Apr 12 01:54 edits_0000000000000000015-0000000000000000016 -rw-r--r--. 1 root root 42 Apr 12 02:54 edits_0000000000000000017-0000000000000000018 -rw-r--r--. 1 root root 42 Apr 12 03:54 edits_0000000000000000019-0000000000000000020 -rw-r--r--. 1 root root 42 Apr 12 04:54 edits_0000000000000000021-0000000000000000022 -rw-r--r--. 1 root root 42 Apr 12 05:54 edits_0000000000000000023-0000000000000000024 -rw-r--r--. 1 root root 42 Apr 12 06:54 edits_0000000000000000025-0000000000000000026 -rw-r--r--. 1 root root 42 Apr 12 07:54 edits_0000000000000000027-0000000000000000028 -rw-r--r--. 1 root root 42 Apr 12 08:54 edits_0000000000000000029-0000000000000000030 -rw-r--r--. 1 root root 42 Apr 12 09:54 edits_0000000000000000031-0000000000000000032 -rw-r--r--. 1 root root 42 Apr 12 10:54 edits_0000000000000000033-0000000000000000034 -rw-r--r--. 1 root root 42 Apr 12 11:54 edits_0000000000000000035-0000000000000000036 -rw-r--r--. 1 root root 42 Apr 12 12:54 edits_0000000000000000037-0000000000000000038 -rw-r--r--. 1 root root 1048576 Apr 12 13:48 edits_0000000000000000039-0000000000000000046 -rw-r--r--. 1 root root 42 Apr 12 13:48 edits_0000000000000000047-0000000000000000048 -rw-r--r--. 
1 root root 42 Apr 12 14:48 edits_0000000000000000049-0000000000000000050 -rw-r--r--. 1 root root 489 Apr 12 13:48 fsimage_0000000000000000048 -rw-r--r--. 1 root root 62 Apr 12 13:48 fsimage_0000000000000000048.md5 -rw-r--r--. 1 root root 489 Apr 12 14:48 fsimage_0000000000000000050 -rw-r--r--. 1 root root 62 Apr 12 14:48 fsimage_0000000000000000050.md5 -rw-r--r--. 1 root root 215 Apr 12 14:48 VERSION [root@node101.yinzhengjie.org.cn ~]# [root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/ total 2156 -rw-r--r--. 1 root root 42 Apr 11 18:54 edits_0000000000000000001-0000000000000000002 -rw-r--r--. 1 root root 42 Apr 11 19:54 edits_0000000000000000003-0000000000000000004 -rw-r--r--. 1 root root 42 Apr 11 20:54 edits_0000000000000000005-0000000000000000006 -rw-r--r--. 1 root root 42 Apr 11 21:54 edits_0000000000000000007-0000000000000000008 -rw-r--r--. 1 root root 42 Apr 11 22:54 edits_0000000000000000009-0000000000000000010 -rw-r--r--. 1 root root 42 Apr 11 23:54 edits_0000000000000000011-0000000000000000012 -rw-r--r--. 1 root root 42 Apr 12 00:54 edits_0000000000000000013-0000000000000000014 -rw-r--r--. 1 root root 42 Apr 12 01:54 edits_0000000000000000015-0000000000000000016 -rw-r--r--. 1 root root 42 Apr 12 02:54 edits_0000000000000000017-0000000000000000018 -rw-r--r--. 1 root root 42 Apr 12 03:54 edits_0000000000000000019-0000000000000000020 -rw-r--r--. 1 root root 42 Apr 12 04:54 edits_0000000000000000021-0000000000000000022 -rw-r--r--. 1 root root 42 Apr 12 05:54 edits_0000000000000000023-0000000000000000024 -rw-r--r--. 1 root root 42 Apr 12 06:54 edits_0000000000000000025-0000000000000000026 -rw-r--r--. 1 root root 42 Apr 12 07:54 edits_0000000000000000027-0000000000000000028 -rw-r--r--. 1 root root 42 Apr 12 08:54 edits_0000000000000000029-0000000000000000030 -rw-r--r--. 1 root root 42 Apr 12 09:54 edits_0000000000000000031-0000000000000000032 -rw-r--r--. 1 root root 42 Apr 12 10:54 edits_0000000000000000033-0000000000000000034 -rw-r--r--. 1 root root 42 Apr 12 11:54 edits_0000000000000000035-0000000000000000036 -rw-r--r--. 1 root root 42 Apr 12 12:54 edits_0000000000000000037-0000000000000000038 -rw-r--r--. 1 root root 1048576 Apr 12 13:29 edits_0000000000000000039-0000000000000000046 -rw-r--r--. 1 root root 42 Apr 12 13:48 edits_0000000000000000047-0000000000000000048 -rw-r--r--. 1 root root 42 Apr 12 14:48 edits_0000000000000000049-0000000000000000050 -rw-r--r--. 
1 root root 1048576 Apr 12 14:48 edits_inprogress_0000000000000000051 -rw-r--r--. 1 root root 489 Apr 12 13:48 fsimage_0000000000000000048 -rw-r--r--. 1 root root 62 Apr 12 13:48 fsimage_0000000000000000048.md5 -rw-r--r--. 1 root root 489 Apr 12 14:48 fsimage_0000000000000000050 -rw-r--r--. 1 root root 62 Apr 12 14:48 fsimage_0000000000000000050.md5 -rw-r--r--. 1 root root 3 Apr 12 14:48 seen_txid -rw-r--r--. 1 root root 215 Apr 11 18:07 VERSION [root@node101.yinzhengjie.org.cn ~]# [root@node101.yinzhengjie.org.cn ~]#

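The SecondaryNameNode's data directory has the same layout as the NameNode's, except that the SecondaryNameNode has no seen_txid file. The benefit of the SecondaryNameNode is that when the NameNode fails, data can be recovered from the SecondaryNameNode's data directory, up to its most recent checkpoint. There are two ways to recover:

Method 1: copy the data from the SecondaryNameNode into the directory where the NameNode stores its data.

Method 2: start the namenode daemon with the -importCheckpoint option, which copies the data from the SecondaryNameNode into the NameNode directory.

1>. Simulate a NameNode failure and recover the NameNode data with method 1 (recommended).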

[root@node101.yinzhengjie.org.cn ~]# jps
19750 Jps
1978 NameNode
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]# kill -9 1978
[root@node101.yinzhengjie.org.cn ~]# jps
19819 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# rm -rf /data/hadoop/hdfs/dfs/name/*
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 0
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 0
[root@node101.yinzhengjie.org.cn ~]# cp -r /data/hadoop/hdfs/dfs/namesecondary/* /data/hadoop/hdfs/dfs/name/
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 8
drwxr-xr-x. 2 root root 4096 Apr 12 15:19 current
-rw-r--r--. 1 root root 31 Apr 12 15:19 in_use.lock
[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# jps
21137 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]# hadoop-daemon.sh start namenode
starting namenode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-namenode-node101.yinzhengjie.org.cn.out
[root@node101.yinzhengjie.org.cn ~]# jps
21217 NameNode
21316 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]#

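Tip:

We restored the data above, but note that the SecondaryNameNode's data does not include a seen_txid file. After we copied the data to the NameNode and started it, the NameNode generated that file automatically. Neat, isn't it?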

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name/current/
total 2156
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000001-0000000000000000002
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000003-0000000000000000004
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000005-0000000000000000006
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000007-0000000000000000008
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000009-0000000000000000010
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000011-0000000000000000012
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000013-0000000000000000014
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000015-0000000000000000016
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000017-0000000000000000018
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000019-0000000000000000020
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000021-0000000000000000022
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000023-0000000000000000024
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000025-0000000000000000026
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000027-0000000000000000028
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000029-0000000000000000030
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000031-0000000000000000032
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000033-0000000000000000034
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000035-0000000000000000036
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000037-0000000000000000038
-rw-r--r--. 1 root root 1048576 Apr 12 15:19 edits_0000000000000000039-0000000000000000046
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000047-0000000000000000048
-rw-r--r--. 1 root root      42 Apr 12 15:19 edits_0000000000000000049-0000000000000000050
-rw-r--r--. 1 root root 1048576 Apr 12 15:21 edits_inprogress_0000000000000000051
-rw-r--r--. 1 root root     489 Apr 12 15:19 fsimage_0000000000000000048
-rw-r--r--. 1 root root      62 Apr 12 15:19 fsimage_0000000000000000048.md5
-rw-r--r--. 1 root root     489 Apr 12 15:19 fsimage_0000000000000000050
-rw-r--r--. 1 root root      62 Apr 12 15:19 fsimage_0000000000000000050.md5
-rw-r--r--. 1 root root       3 Apr 12 15:20 seen_txid
-rw-r--r--. 1 root root     215 Apr 12 15:19 VERSION
[root@node101.yinzhengjie.org.cn ~]# cat /data/hadoop/hdfs/dfs/name/current/seen_txid
51
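In the listing above, the value stored in seen_txid (51) matches the numeric suffix of edits_inprogress_0000000000000000051. The following sketch checks that observation for a given directory. The function name check_seen_txid is hypothetical, and the assumption that the two values agree is taken from this one listing, not from a documented guarantee.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): compare seen_txid with the
# transaction ID embedded in the edits_inprogress_<txid> file name.
# Usage: check_seen_txid <current_dir>
check_seen_txid() {
    dir=$1

    # Take the in-progress segment (assumed to be exactly one, as in the listing).
    inprogress=$(ls "$dir" | grep '^edits_inprogress_' | head -n 1)
    if [ -z "$inprogress" ] || [ ! -f "$dir/seen_txid" ]; then
        echo "missing edits_inprogress_* or seen_txid in $dir" >&2
        return 1
    fi

    # Strip the prefix and the zero padding:
    # edits_inprogress_0000000000000000051 -> 51
    start_txid=$(printf '%s\n' "${inprogress#edits_inprogress_}" | sed 's/^0*//')
    [ -n "$start_txid" ] || start_txid=0

    seen=$(tr -d '[:space:]' < "$dir/seen_txid")
    echo "edits_inprogress starts at $start_txid, seen_txid is $seen"

    # Succeed only when both values agree.
    [ "$start_txid" = "$seen" ]
}
```

On the node used in this article it would be called as check_seen_txid /data/hadoop/hdfs/dfs/name/current.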

2>. Simulate a NameNode failure and recover the NameNode data using method two.

[root@node101.yinzhengjie.org.cn ~]# cat /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/hdfs-site.xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>dfs.namenode.checkpoint.period</name>
        <value>30</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>/data/hadoop/hdfs/dfs/name</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
</configuration>
<!--
Purpose of the hdfs-site.xml configuration file:
    # HDFS-related settings such as the number of file replicas, the block size, and whether permissions are enforced. Parameters defined here override the defaults in hdfs-default.xml.
Purpose of the dfs.namenode.checkpoint.period parameter:
    # The number of seconds between two periodic checkpoints. The default is 3600, i.e. 1 hour.
Purpose of the dfs.namenode.name.dir parameter:
    # The working directory of the namenode. The default is file://${hadoop.tmp.dir}/dfs/name
Purpose of the dfs.replication parameter:
    # For data availability and redundancy, HDFS stores multiple replicas of the same data block on multiple nodes; the default is 3. In a pseudo-distributed environment with only one node, a single replica is enough, which can be set through the dfs.replication property. It is a software-level backup.
-->
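To confirm which value a property has in a file such as the one above, the file can be queried from the shell. The function below is a minimal sketch: get_site_property is a hypothetical name, and the sed-based extraction assumes the simple name/value layout shown here, not arbitrary XML.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): print the <value> of one
# property from a Hadoop *-site.xml file.
# Usage: get_site_property <xml_file> <property_name>
get_site_property() {
    file=$1
    key=$2

    # Join all lines so <name> and <value> may sit on different lines, then
    # capture the text of the <value> element that follows the matching <name>.
    # Note: dots in the property name are treated as regex wildcards by sed.
    { tr -d '\n' < "$file"; echo; } |
        sed -n "s:.*<name>$key</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p"
}
```

For the file shown above, get_site_property /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop/hdfs-site.xml dfs.namenode.checkpoint.period would be expected to print 30. On a host with Hadoop installed, hdfs getconf -confKey dfs.namenode.checkpoint.period is the more robust choice, since it also resolves defaults.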

[root@node101.yinzhengjie.org.cn ~]# jps
21217 NameNode
26332 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]# kill -9 21217
[root@node101.yinzhengjie.org.cn ~]# jps
26377 Jps
2333 SecondaryNameNode
2141 DataNode

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 8
drwxr-xr-x. 2 root root 4096 Apr 12 15:20 current
-rw-r--r--. 1 root root   32 Apr 12 15:20 in_use.lock
[root@node101.yinzhengjie.org.cn ~]# rm -rf /data/hadoop/hdfs/dfs/name/*
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 0

[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/name
total 0
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/
total 12
drwx------. 3 root root 4096 Apr 12 13:47 data
drwxr-xr-x. 2 root root 4096 Apr 12 15:51 name
drwxr-xr-x. 3 root root 4096 Apr 12 13:47 namesecondary
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/namesecondary/
total 8
drwxr-xr-x. 2 root root 4096 Apr 12 14:48 current
-rw-r--r--. 1 root root   31 Apr 12 13:47 in_use.lock
[root@node101.yinzhengjie.org.cn ~]# rm -f /data/hadoop/hdfs/dfs/namesecondary/in_use.lock
[root@node101.yinzhengjie.org.cn ~]# ll /data/hadoop/hdfs/dfs/namesecondary/
total 4
drwxr-xr-x. 2 root root 4096 Apr 12 14:48 current
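The state prepared above (an empty NameNode directory, a checkpoint containing an fsimage, and no in_use.lock left in the checkpoint directory) can be verified before running the import. The function below is a sketch under those assumptions. check_import_ready is a hypothetical name, and the three conditions simply mirror this walkthrough, so they are not an official list of requirements.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not from the original post) to run before
# "hdfs namenode -importCheckpoint".
# Usage: check_import_ready <name_dir> <checkpoint_dir>
check_import_ready() {
    name_dir=$1
    ckpt_dir=$2
    ok=0

    # The NameNode directory must exist and be empty.
    if [ ! -d "$name_dir" ] || [ -n "$(ls -A "$name_dir")" ]; then
        echo "name dir $name_dir must exist and be empty" >&2
        ok=1
    fi

    # The checkpoint must hold at least one fsimage_* file under current/.
    if ! ls "$ckpt_dir"/current/fsimage_* >/dev/null 2>&1; then
        echo "no fsimage_* found in $ckpt_dir/current" >&2
        ok=1
    fi

    # The walkthrough deletes the checkpoint's in_use.lock before importing.
    if [ -e "$ckpt_dir/in_use.lock" ]; then
        echo "lock file still present: $ckpt_dir/in_use.lock" >&2
        ok=1
    fi

    return "$ok"
}
```

With this article's paths, the call would be check_import_ready /data/hadoop/hdfs/dfs/name /data/hadoop/hdfs/dfs/namesecondary. A zero exit status means all three conditions hold.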

[root@node101.yinzhengjie.org.cn ~]# hdfs namenode -importCheckpoint 19/04/12 15:53:16 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = node101.yinzhengjie.org.cn/172.30.1.101 STARTUP_MSG: args = [-importCheckpoint] STARTUP_MSG: version = 2.9.2 STARTUP_MSG: classpath = /yinzhengjie/softwares/hadoop-2.9.2/etc/hadoop:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/woodstox-core-5.0.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-collections-3.2.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/paranamer-2.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/asm-3.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/zookeeper-3.4.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/xz-1.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jettison-1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/junit-4.11.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/snappy-java-1.0.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-configuration-1.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/httpclient-4.5.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/hadoop-annotations-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-lang-2.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/xmlenc-0.52.jar:/yinzhengjie/s
oftwares/hadoop-2.9.2/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-compress-1.4.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/avro-1.7.7.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/curator-framework-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-codec-1.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jetty-sslengine-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/json-smart-1.3.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jsch-0.1.54.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/stax2-api-3.1.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/mockito-all-1.8.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jersey-server-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-cli-1.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-net-3.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/guava-11.0.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jsr305-3.0.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/hadoop-auth-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/stax-api-1.0-2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/
hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/servlet-api-2.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jetty-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jets3t-0.9.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-digester-1.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/httpcore-4.4.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jsp-api-2.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/log4j-1.2.17.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jersey-core-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-io-2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/curator-client-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jersey-json-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-lang3-3.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/hamcrest-core-1.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-logging-1.1.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/gson-2.2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/activation-1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/netty-3.6.2.Final.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/jetty-uti
l-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/lib/commons-math3-3.1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/hadoop-nfs-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/hadoop-common-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/common/hadoop-common-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/asm-3.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jackson-annotations-2.7.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/okio-1.6.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/guava-11.0.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/yinzhengjie/softwares
/hadoop-2.9.2/share/hadoop/hdfs/lib/jackson-core-2.7.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jackson-databind-2.7.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-io-2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/hadoop-hdfs-client-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-native-client-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-nfs-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-client-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-native-client-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-rbf-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-rbf-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-client-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/hdfs/hadoop-hdfs-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/woodstox-core-5.0.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/guice-3.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/paraname
r-2.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/asm-3.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/api-util-1.0.0-M20.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/xz-1.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jettison-1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jcip-annotations-1.0-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/snappy-java-1.0.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-configuration-1.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-beanutils-1.7.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/curator-recipes-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/httpclient-4.5.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-lang-2.6.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/xmlenc-0.52.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/java-xmlbuilder-0.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/javax.inject-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/avro-1.7.7.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/curator-framework-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-codec-1.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jetty-sslengine-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/gui
ce-servlet-3.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/json-smart-1.3.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jsch-0.1.54.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/nimbus-jose-jwt-4.41.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/java-util-1.9.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/stax2-api-3.1.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jersey-server-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-cli-1.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/apacheds-i18n-2.0.0-M15.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/metrics-core-3.0.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/htrace-core4-4.1.0-incubating.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-net-3.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/guava-11.0.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/api-asn1-api-1.0.0-M20.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/servlet-api-2.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jetty-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jets3t-0.9.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-d
igester-1.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/httpcore-4.4.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jsp-api-2.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/log4j-1.2.17.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jersey-client-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jersey-core-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-io-2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/curator-client-2.7.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jersey-json-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/aopalliance-1.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-lang3-3.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/gson-2.2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-beanutils-core-1.8.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/activation-1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/fst-2.50.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/commons-math3-3.1.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/lib/json-io-2.5.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.9.2.jar:/yinzhengjie/softwares/hado
op-2.9.2/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-api-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-router-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-tests-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-client-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-registry-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-common-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-common-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/guice-3.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/asm-3.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/xz-1.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/junit-4.11.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/snappy-java-1.0.5.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/hadoop-annotations-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/c
ommons-compress-1.4.1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/javax.inject-1.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/avro-1.7.7.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.9.2-tests.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-client-job
client-2.9.2.jar:/yinzhengjie/softwares/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar:/contrib/capacity-scheduler/*.jar STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 826afbeae31ca687bc2f8471dc841b66ed2c6704; compiled by 'ajisaka' on 2018-11-13T12:42Z STARTUP_MSG: java = 1.8.0_201 ************************************************************/ 19/04/12 15:53:16 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT] 19/04/12 15:53:16 INFO namenode.NameNode: createNameNode [-importCheckpoint] 19/04/12 15:53:16 INFO impl.MetricsConfig: loaded properties from hadoop-metrics2.properties 19/04/12 15:53:16 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s). 19/04/12 15:53:16 INFO impl.MetricsSystemImpl: NameNode metrics system started 19/04/12 15:53:16 INFO namenode.NameNode: fs.defaultFS is hdfs://node101.yinzhengjie.org.cn:8020 19/04/12 15:53:16 INFO namenode.NameNode: Clients are to use node101.yinzhengjie.org.cn:8020 to access this namenode/service. 19/04/12 15:53:17 INFO util.JvmPauseMonitor: Starting JVM pause monitor 19/04/12 15:53:17 INFO hdfs.DFSUtil: Starting Web-server for hdfs at: http://account.jetbrains.com:50070 19/04/12 15:53:17 INFO mortbay.log: Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog 19/04/12 15:53:17 INFO server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets. 
19/04/12 15:53:17 INFO http.HttpRequestLog: Http request log for http.requests.namenode is not defined
19/04/12 15:53:17 INFO http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)
19/04/12 15:53:17 INFO http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context hdfs
19/04/12 15:53:17 INFO http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs
19/04/12 15:53:17 INFO http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static
19/04/12 15:53:17 INFO http.HttpServer2: Added filter 'org.apache.hadoop.hdfs.web.AuthFilter' (class=org.apache.hadoop.hdfs.web.AuthFilter)
19/04/12 15:53:17 INFO http.HttpServer2: addJerseyResourcePackage: packageName=org.apache.hadoop.hdfs.server.namenode.web.resources;org.apache.hadoop.hdfs.web.resources, pathSpec=/webhdfs/v1/*
19/04/12 15:53:17 INFO http.HttpServer2: Jetty bound to port 50070
19/04/12 15:53:17 INFO mortbay.log: jetty-6.1.26
19/04/12 15:53:17 INFO mortbay.log: Started HttpServer2$SelectChannelConnectorWithSafeStartup@account.jetbrains.com:50070
19/04/12 15:53:17 WARN common.Util: Path /data/hadoop/hdfs/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/04/12 15:53:17 WARN common.Util: Path /data/hadoop/hdfs/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/04/12 15:53:17 WARN namenode.FSNamesystem: !!! WARNING !!! The NameNode currently runs without persistent storage. Any changes to the file system meta-data may be lost. Recommended actions: - shutdown and restart NameNode with configured "dfs.namenode.edits.dir.required" in hdfs-site.xml; - use Backup Node as a persistent and up-to-date storage of the file system meta-data.
19/04/12 15:53:17 WARN namenode.FSNamesystem: Only one image storage directory (dfs.namenode.name.dir) configured. Beware of data loss due to lack of redundant storage directories!
19/04/12 15:53:17 WARN namenode.FSNamesystem: Only one namespace edits storage directory (dfs.namenode.edits.dir) configured. Beware of data loss due to lack of redundant storage directories!
19/04/12 15:53:17 WARN common.Util: Path /data/hadoop/hdfs/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/04/12 15:53:17 WARN common.Util: Path /data/hadoop/hdfs/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/04/12 15:53:17 INFO namenode.FSEditLog: Edit logging is async:true
19/04/12 15:53:17 INFO namenode.FSNamesystem: KeyProvider: null
19/04/12 15:53:17 INFO namenode.FSNamesystem: fsLock is fair: true
19/04/12 15:53:17 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
19/04/12 15:53:17 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
19/04/12 15:53:17 INFO namenode.FSNamesystem: supergroup = supergroup
19/04/12 15:53:17 INFO namenode.FSNamesystem: isPermissionEnabled = true
19/04/12 15:53:17 INFO namenode.FSNamesystem: HA Enabled: false
19/04/12 15:53:17 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
19/04/12 15:53:17 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000
19/04/12 15:53:17 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
19/04/12 15:53:17 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
19/04/12 15:53:17 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Apr 12 15:53:17
19/04/12 15:53:17 INFO util.GSet: Computing capacity for map BlocksMap
19/04/12 15:53:17 INFO util.GSet: VM type = 64-bit
19/04/12 15:53:17 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
19/04/12 15:53:17 INFO util.GSet: capacity = 2^21 = 2097152 entries
19/04/12 15:53:17 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
19/04/12 15:53:17 WARN conf.Configuration: No unit for dfs.heartbeat.interval(3) assuming SECONDS
19/04/12 15:53:17 WARN conf.Configuration: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS
19/04/12 15:53:17 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
19/04/12 15:53:17 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0
19/04/12 15:53:17 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000
19/04/12 15:53:17 INFO blockmanagement.BlockManager: defaultReplication = 2
19/04/12 15:53:17 INFO blockmanagement.BlockManager: maxReplication = 512
19/04/12 15:53:17 INFO blockmanagement.BlockManager: minReplication = 1
19/04/12 15:53:17 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
19/04/12 15:53:17 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
19/04/12 15:53:17 INFO blockmanagement.BlockManager: encryptDataTransfer = false
19/04/12 15:53:17 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
19/04/12 15:53:17 INFO namenode.FSNamesystem: Append Enabled: true
19/04/12 15:53:17 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215
19/04/12 15:53:17 INFO util.GSet: Computing capacity for map INodeMap
19/04/12 15:53:17 INFO util.GSet: VM type = 64-bit
19/04/12 15:53:17 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
19/04/12 15:53:17 INFO util.GSet: capacity = 2^20 = 1048576 entries
19/04/12 15:53:17 INFO namenode.FSDirectory: ACLs enabled? false
19/04/12 15:53:17 INFO namenode.FSDirectory: XAttrs enabled? true
19/04/12 15:53:17 INFO namenode.NameNode: Caching file names occurring more than 10 times
19/04/12 15:53:17 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: falseskipCaptureAccessTimeOnlyChange: false
19/04/12 15:53:17 INFO util.GSet: Computing capacity for map cachedBlocks
19/04/12 15:53:17 INFO util.GSet: VM type = 64-bit
19/04/12 15:53:17 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
19/04/12 15:53:17 INFO util.GSet: capacity = 2^18 = 262144 entries
19/04/12 15:53:17 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
19/04/12 15:53:17 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
19/04/12 15:53:17 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
19/04/12 15:53:17 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
19/04/12 15:53:17 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
19/04/12 15:53:17 INFO util.GSet: Computing capacity for map NameNodeRetryCache
19/04/12 15:53:17 INFO util.GSet: VM type = 64-bit
19/04/12 15:53:17 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
19/04/12 15:53:17 INFO util.GSet: capacity = 2^15 = 32768 entries
19/04/12 15:53:17 INFO common.Storage: Lock on /data/hadoop/hdfs/dfs/name/in_use.lock acquired by nodename 28133@node101.yinzhengjie.org.cn
19/04/12 15:53:17 INFO namenode.FSImage: Storage directory /data/hadoop/hdfs/dfs/name is not formatted.
19/04/12 15:53:17 INFO namenode.FSImage: Formatting ...
19/04/12 15:53:17 INFO namenode.FSEditLog: Edit logging is async:true
19/04/12 15:53:17 INFO common.Storage: Lock on /data/hadoop/hdfs/dfs/namesecondary/in_use.lock acquired by nodename 28133@node101.yinzhengjie.org.cn
19/04/12 15:53:17 WARN namenode.FSNamesystem: !!! WARNING !!! The NameNode currently runs without persistent storage. Any changes to the file system meta-data may be lost. Recommended actions: - shutdown and restart NameNode with configured "dfs.namenode.edits.dir.required" in hdfs-site.xml; - use Backup Node as a persistent and up-to-date storage of the file system meta-data.
19/04/12 15:53:17 INFO namenode.FileJournalManager: Recovering unfinalized segments in /data/hadoop/hdfs/dfs/namesecondary/current
19/04/12 15:53:17 INFO namenode.FSImage: No edit log streams selected.
19/04/12 15:53:17 INFO namenode.FSImage: Planning to load image: FSImageFile(file=/data/hadoop/hdfs/dfs/namesecondary/current/fsimage_0000000000000000050, cpktTxId=0000000000000000050)
19/04/12 15:53:18 INFO namenode.FSImageFormatPBINode: Loading 3 INodes.
19/04/12 15:53:18 INFO namenode.FSImageFormatProtobuf: Loaded FSImage in 0 seconds.
19/04/12 15:53:18 INFO namenode.FSImage: Loaded image for txid 50 from /data/hadoop/hdfs/dfs/namesecondary/current/fsimage_0000000000000000050
19/04/12 15:53:18 WARN namenode.FSNamesystem: !!! WARNING !!! The NameNode currently runs without persistent storage. Any changes to the file system meta-data may be lost. Recommended actions: - shutdown and restart NameNode with configured "dfs.namenode.edits.dir.required" in hdfs-site.xml; - use Backup Node as a persistent and up-to-date storage of the file system meta-data.
19/04/12 15:53:18 INFO namenode.FileJournalManager: Recovering unfinalized segments in /data/hadoop/hdfs/dfs/name/current
19/04/12 15:53:18 INFO namenode.FSImage: Save namespace ...
19/04/12 15:53:18 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop/hdfs/dfs/name/current/fsimage.ckpt_0000000000000000050 using no compression
19/04/12 15:53:18 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop/hdfs/dfs/name/current/fsimage.ckpt_0000000000000000050 of size 489 bytes saved in 0 seconds .
19/04/12 15:53:18 INFO namenode.FSImageTransactionalStorageInspector: No version file in /data/hadoop/hdfs/dfs/name
19/04/12 15:53:18 INFO namenode.FSImageTransactionalStorageInspector: No version file in /data/hadoop/hdfs/dfs/name
19/04/12 15:53:18 INFO namenode.FSNamesystem: Need to save fs image? false (staleImage=false, haEnabled=false, isRollingUpgrade=false)
19/04/12 15:53:18 INFO namenode.FSEditLog: Starting log segment at 51
19/04/12 15:53:18 INFO namenode.NameCache: initialized with 0 entries 0 lookups
19/04/12 15:53:18 INFO namenode.FSNamesystem: Finished loading FSImage in 335 msecs
19/04/12 15:53:18 INFO namenode.NameNode: RPC server is binding to node101.yinzhengjie.org.cn:8020
19/04/12 15:53:18 INFO ipc.CallQueueManager: Using callQueue: class java.util.concurrent.LinkedBlockingQueue queueCapacity: 1000 scheduler: class org.apache.hadoop.ipc.DefaultRpcScheduler
19/04/12 15:53:18 INFO ipc.Server: Starting Socket Reader #1 for port 8020
19/04/12 15:53:18 INFO namenode.FSNamesystem: Registered FSNamesystemState MBean
19/04/12 15:53:18 WARN common.Util: Path /data/hadoop/hdfs/dfs/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/04/12 15:53:18 WARN namenode.FSNamesystem: !!! WARNING !!! The NameNode currently runs without persistent storage. Any changes to the file system meta-data may be lost. Recommended actions: - shutdown and restart NameNode with configured "dfs.namenode.edits.dir.required" in hdfs-site.xml; - use Backup Node as a persistent and up-to-date storage of the file system meta-data.
19/04/12 15:53:18 INFO namenode.LeaseManager: Number of blocks under construction: 0
19/04/12 15:53:18 INFO blockmanagement.BlockManager: initializing replication queues
19/04/12 15:53:18 INFO hdfs.StateChange: STATE* Leaving safe mode after 0 secs
19/04/12 15:53:18 INFO hdfs.StateChange: STATE* Network topology has 0 racks and 0 datanodes
19/04/12 15:53:18 INFO hdfs.StateChange: STATE* UnderReplicatedBlocks has 0 blocks
19/04/12 15:53:18 INFO blockmanagement.BlockManager: Total number of blocks = 1
19/04/12 15:53:18 INFO blockmanagement.BlockManager: Number of invalid blocks = 0
19/04/12 15:53:18 INFO blockmanagement.BlockManager: Number of under-replicated blocks = 1
19/04/12 15:53:18 INFO blockmanagement.BlockManager: Number of over-replicated blocks = 0
19/04/12 15:53:18 INFO blockmanagement.BlockManager: Number of blocks being written = 0
19/04/12 15:53:18 INFO hdfs.StateChange: STATE* Replication Queue initialization scan for invalid, over- and under-replicated blocks completed in 6 msec
19/04/12 15:53:18 INFO ipc.Server: IPC Server Responder: starting
19/04/12 15:53:18 INFO ipc.Server: IPC Server listener on 8020: starting
19/04/12 15:53:18 INFO namenode.NameNode: NameNode RPC up at: node101.yinzhengjie.org.cn/172.30.1.101:8020
19/04/12 15:53:18 INFO namenode.FSNamesystem: Starting services required for active state
19/04/12 15:53:18 INFO namenode.FSDirectory: Initializing quota with 4 thread(s)
19/04/12 15:53:18 INFO namenode.FSDirectory: Quota initialization completed in 4 milliseconds name space=3 storage space=2528 storage types=RAM_DISK=0, SSD=0, DISK=0, ARCHIVE=0
19/04/12 15:53:18 INFO blockmanagement.CacheReplicationMonitor: Starting CacheReplicationMonitor with interval 30000 milliseconds
19/04/12 15:53:19 INFO hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(172.30.1.101:50010, datanodeUuid=07a8ce7e-9ee2-4f39-aa4c-06fc06175ac7, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283) storage 07a8ce7e-9ee2-4f39-aa4c-06fc06175ac7
19/04/12 15:53:19 INFO net.NetworkTopology: Adding a new node: /default-rack/172.30.1.101:50010
19/04/12 15:53:19 INFO blockmanagement.BlockReportLeaseManager: Registered DN 07a8ce7e-9ee2-4f39-aa4c-06fc06175ac7 (172.30.1.101:50010).
19/04/12 15:53:19 INFO blockmanagement.DatanodeDescriptor: Adding new storage ID DS-2be780fb-d455-426c-ae0a-f04329f7f6af for DN 172.30.1.101:50010
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0x8fbe58a5c4ff1ffc: Processing first storage report for DS-2be780fb-d455-426c-ae0a-f04329f7f6af from datanode 07a8ce7e-9ee2-4f39-aa4c-06fc06175ac7
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0x8fbe58a5c4ff1ffc: from storage DS-2be780fb-d455-426c-ae0a-f04329f7f6af node DatanodeRegistration(172.30.1.101:50010, datanodeUuid=07a8ce7e-9ee2-4f39-aa4c-06fc06175ac7, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283), blocks: 1, hasStaleStorage: false, processing time: 3 msecs, invalidatedBlocks: 0
19/04/12 15:53:19 INFO hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(172.30.1.102:50010, datanodeUuid=8810056a-5a58-4d85-8a00-e0ceb5d1ac8b, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283) storage 8810056a-5a58-4d85-8a00-e0ceb5d1ac8b
19/04/12 15:53:19 INFO net.NetworkTopology: Adding a new node: /default-rack/172.30.1.102:50010
19/04/12 15:53:19 INFO blockmanagement.BlockReportLeaseManager: Registered DN 8810056a-5a58-4d85-8a00-e0ceb5d1ac8b (172.30.1.102:50010).
19/04/12 15:53:19 INFO hdfs.StateChange: BLOCK* registerDatanode: from DatanodeRegistration(172.30.1.103:50010, datanodeUuid=6625a3aa-8e60-4614-922c-4e3f2821cb9d, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283) storage 6625a3aa-8e60-4614-922c-4e3f2821cb9d
19/04/12 15:53:19 INFO net.NetworkTopology: Adding a new node: /default-rack/172.30.1.103:50010
19/04/12 15:53:19 INFO blockmanagement.BlockReportLeaseManager: Registered DN 6625a3aa-8e60-4614-922c-4e3f2821cb9d (172.30.1.103:50010).
19/04/12 15:53:19 INFO blockmanagement.DatanodeDescriptor: Adding new storage ID DS-58b626df-edca-4f58-baae-051a10700a6c for DN 172.30.1.102:50010
19/04/12 15:53:19 INFO blockmanagement.DatanodeDescriptor: Adding new storage ID DS-b1a5ab0c-365e-4c54-8947-5686377fc317 for DN 172.30.1.103:50010
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0x894eeec5f232acb8: Processing first storage report for DS-58b626df-edca-4f58-baae-051a10700a6c from datanode 8810056a-5a58-4d85-8a00-e0ceb5d1ac8b
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0x894eeec5f232acb8: from storage DS-58b626df-edca-4f58-baae-051a10700a6c node DatanodeRegistration(172.30.1.102:50010, datanodeUuid=8810056a-5a58-4d85-8a00-e0ceb5d1ac8b, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283), blocks: 0, hasStaleStorage: false, processing time: 1 msecs, invalidatedBlocks: 0
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0xe5052da591a1fb0a: Processing first storage report for DS-b1a5ab0c-365e-4c54-8947-5686377fc317 from datanode 6625a3aa-8e60-4614-922c-4e3f2821cb9d
19/04/12 15:53:19 INFO BlockStateChange: BLOCK* processReport 0xe5052da591a1fb0a: from storage DS-b1a5ab0c-365e-4c54-8947-5686377fc317 node DatanodeRegistration(172.30.1.103:50010, datanodeUuid=6625a3aa-8e60-4614-922c-4e3f2821cb9d, infoPort=50075, infoSecurePort=0, ipcPort=50020, storageInfo=lv=-57;cid=CID-5e6a5eca-6d94-4087-9ff8-7decc325338c;nsid=429640720;c=1554977243283), blocks: 1, hasStaleStorage: false, processing time: 1 msecs, invalidatedBlocks: 0
19/04/12 15:54:05 INFO namenode.FSNamesystem: Roll Edit Log from 172.30.1.101
19/04/12 15:54:05 INFO namenode.FSEditLog: Rolling edit logs
19/04/12 15:54:05 INFO namenode.FSEditLog: Ending log segment 51, 51
19/04/12 15:54:05 INFO namenode.FSEditLog: Number of transactions: 2 Total time for transactions(ms): 0 Number of transactions batched in Syncs: 50 Number of syncs: 3 SyncTimes(ms): 5
19/04/12 15:54:05 INFO namenode.FileJournalManager: Finalizing edits file /data/hadoop/hdfs/dfs/name/current/edits_inprogress_0000000000000000051 -> /data/hadoop/hdfs/dfs/name/current/edits_0000000000000000051-0000000000000000052
19/04/12 15:54:05 INFO namenode.FSEditLog: Starting log segment at 53
19/04/12 15:54:05 INFO namenode.TransferFsImage: Sending fileName: /data/hadoop/hdfs/dfs/name/current/edits_0000000000000000051-0000000000000000052, fileSize: 42. Sent total: 42 bytes. Size of last segment intended to send: -1 bytes.
19/04/12 15:54:05 INFO namenode.TransferFsImage: Combined time for fsimage download and fsync to all disks took 0.00s. The fsimage download took 0.00s at 0.00 KB/s. Synchronous (fsync) write to disk of /data/hadoop/hdfs/dfs/name/current/fsimage.ckpt_0000000000000000052 took 0.00s.
19/04/12 15:54:05 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000052 size 489 bytes.
19/04/12 15:54:05 INFO namenode.NNStorageRetentionManager: Going to retain 2 images with txid >= 50
^C19/04/12 16:03:13 ERROR namenode.NameNode: RECEIVED SIGNAL 2: SIGINT
19/04/12 16:03:13 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at node101.yinzhengjie.org.cn/172.30.1.101
************************************************************/
[root@node101.yinzhengjie.org.cn ~]#

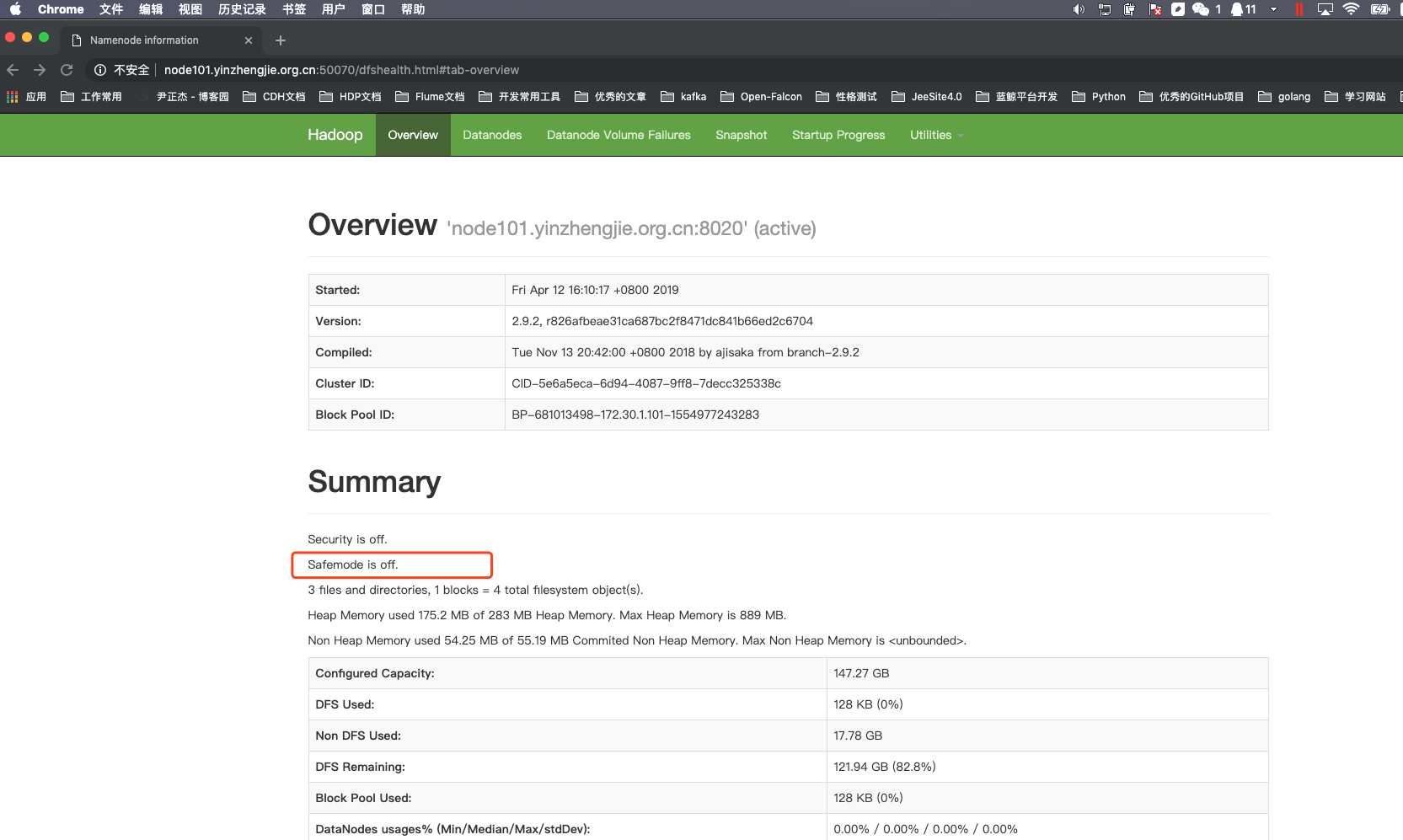

[root@node101.yinzhengjie.org.cn ~]# jps
31621 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]# hadoop-daemon.sh start namenode
starting namenode, logging to /yinzhengjie/softwares/hadoop-2.9.2/logs/hadoop-root-namenode-node101.yinzhengjie.org.cn.out
[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]# jps
31690 NameNode
31804 Jps
2333 SecondaryNameNode
2141 DataNode
[root@node101.yinzhengjie.org.cn ~]#
[root@node101.yinzhengjie.org.cn ~]#

Safe mode is a Hadoop protection mechanism that guards the integrity of the data blocks in the cluster.

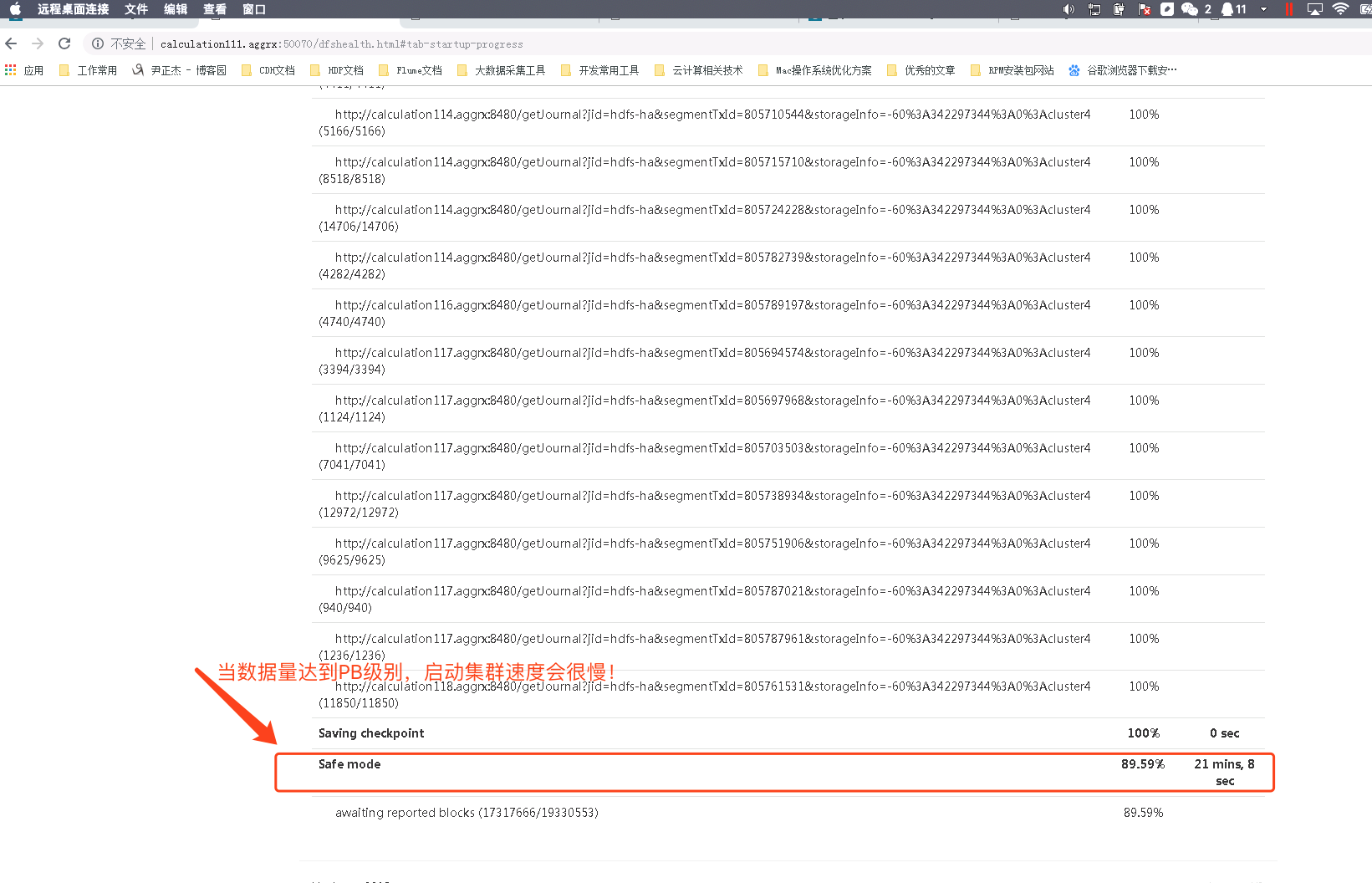

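When the NameNode starts, it first loads the image file (fsimage) into memory and then replays the operations recorded in the edit log (edits). Once it has built the in-memory image of the file system metadata, it writes a new fsimage file and an empty edit log. Only then does the NameNode begin listening for DataNode requests. At this point it is running in safe mode, which means the file system is read-only for clients.

Block locations are not persisted by the NameNode; each DataNode stores them as a list of the blocks it holds. During normal operation the NameNode keeps a map of all block locations in memory. While in safe mode, the DataNodes send their latest block lists to the NameNode, and once the NameNode has learned enough block locations it can run the file system efficiently.

If the "minimal replication condition" is met, the NameNode leaves safe mode 30 seconds later. The condition is that 99.9% of the blocks in the whole file system reach the minimum replication level (default: dfs.replication.min=1; Hadoop 2.x names this property dfs.namenode.replication.min). The 99.9% threshold and the 30-second delay correspond to the dfs.namenode.safemode.threshold-pct and dfs.namenode.safemode.extension values printed in the startup log above. When a freshly formatted HDFS cluster starts, the NameNode does not enter safe mode because the system does not yet contain any blocks.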
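The minimal replication condition can be sketched in a few lines of shell. This is only an illustration of the rule as stated above, not the NameNode's actual code: the function name `threshold_reached` and its input format (one reported replica count per block) are hypothetical, the real NameNode's rounding and bookkeeping are not modelled, and the 30-second extension that follows is left out.

```shell
# Sketch of the "minimal replication condition" (assumption: simplified model).
# The two values mirror dfs.namenode.safemode.threshold-pct and minReplication
# from the startup log; everything else here is hypothetical.
THRESHOLD_PCT="0.999"
MIN_REPLICATION=1

# Usage: threshold_reached <replicas of block 1> <replicas of block 2> ...
# Prints "yes" when at least THRESHOLD_PCT of the blocks have
# MIN_REPLICATION or more reported replicas, otherwise "no".
threshold_reached() {
  local total=$# safe=0 count
  for count in "$@"; do
    if [ "$count" -ge "$MIN_REPLICATION" ]; then
      safe=$((safe + 1))
    fi
  done
  # A namespace without blocks (freshly formatted cluster) trivially qualifies.
  if [ "$total" -eq 0 ]; then
    echo yes
    return
  fi
  # Shell arithmetic is integer-only, so the fractional comparison is done in awk.
  awk -v s="$safe" -v t="$total" -v p="$THRESHOLD_PCT" \
    'BEGIN { if (s >= t * p) print "yes"; else print "no" }'
}
```

For example, `threshold_reached 1 2 0` prints `no`, because only two of the three blocks have a reported replica, while `threshold_reached 1 1` prints `yes`.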

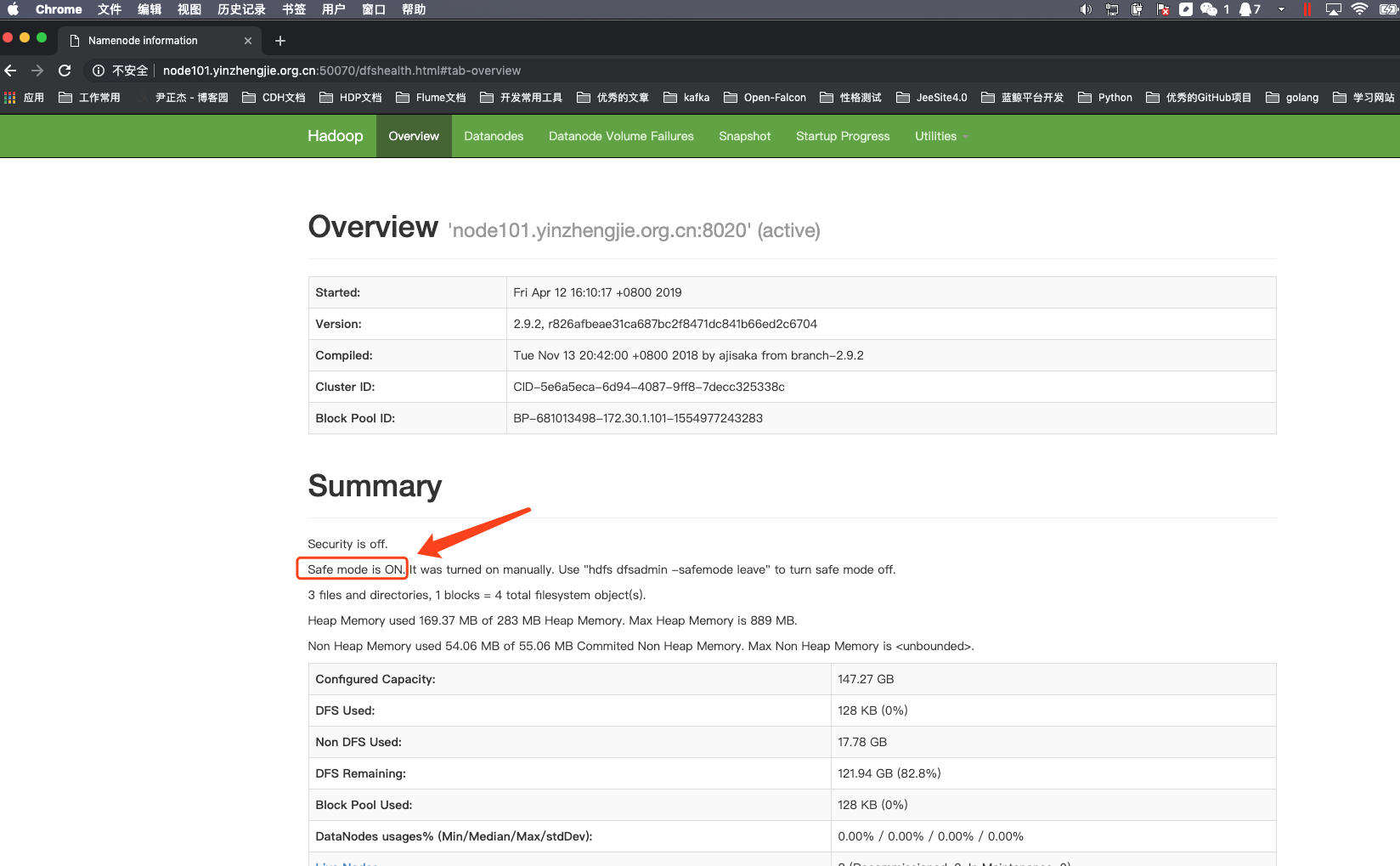

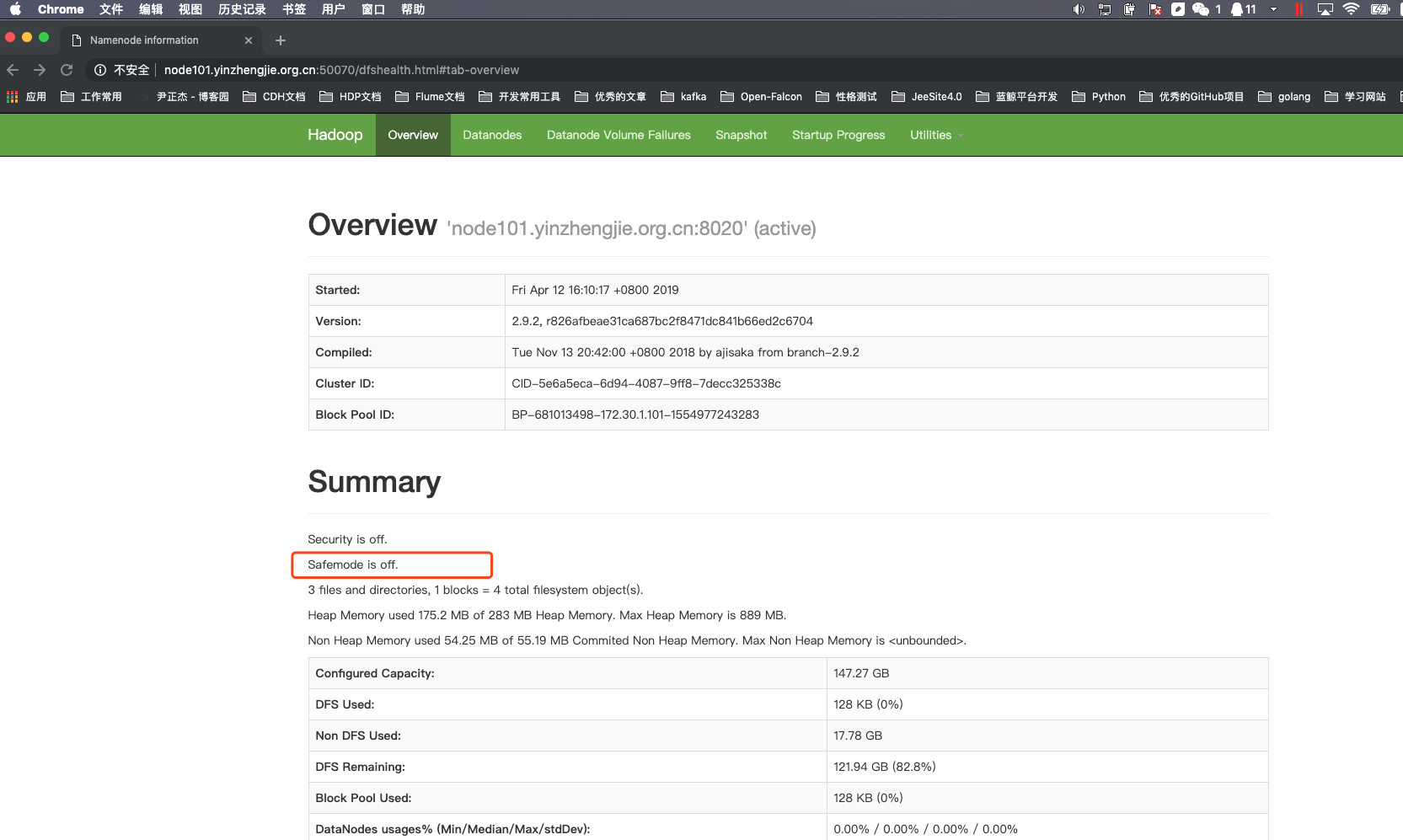

As shown in the figure below, the cluster is in safe mode:

Of course, some DataNodes have not yet joined the cluster, so it remains in safe mode. This can be seen in the NameNode web UI, as shown below:

[root@node101.yinzhengjie.org.cn ~]# hdfs dfsadmin -safemode get

Safe mode is OFF

[root@node101.yinzhengjie.org.cn ~]#
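When the safe mode state has to be checked from a script rather than by eye, the one-line report printed by `hdfs dfsadmin -safemode get` can be mapped to a simple token. The helper below is a minimal sketch; the function name `safemode_state` is hypothetical, and it assumes the report starts with the same "Safe mode is ON/OFF" wording shown above.

```shell
# Maps the report line of `hdfs dfsadmin -safemode get` to ON, OFF or UNKNOWN.
# Prefix matching is used so that any text after the state is ignored.
safemode_state() {
  case "$1" in
    "Safe mode is ON"*)  echo ON ;;
    "Safe mode is OFF"*) echo OFF ;;
    *)                   echo UNKNOWN ;;
  esac
}

# On a cluster node the report would be passed in like this (not run here):
#   safemode_state "$(hdfs dfsadmin -safemode get)"
```

The same `-safemode` option also accepts `enter`, `leave` and `wait`; `wait` blocks until the NameNode has left safe mode, which is convenient at the start of a job script.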