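I have recently been building a website-monitoring tool for 房途网 (Fangtu), and log analysis is one of its main jobs. I first used AWStats, but once the data volume grew it was far too slow, and it was hard to get detailed statistics out of it into a database. In the end I switched to LogParser for the Nginx logs: summary statistics go into the database, and the detail records go into Lucene so they are easy to query and aggregate. Each city's Nginx log is about 5 million records per day. Timings for one sample batch:

Extracted 1,000 of 5,234,229 records. Analysis: 8667.606 ms; Lucene indexing: 3630.249 ms; database insert: 848.597 ms; extraction: 2280.481 ms.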

1. Approach and tools

1.1. Nginx runs on Linux, so the logs have to be split and backed up every day. The rotation script (RHEL 5):

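LogBackup.sh: compresses the logs from two days ago, moves yesterday's logs into a dated backup directory, and sends nginx a HUP signal so it reopens its log files. I run it from cron every day at 00:04.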

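```sh
#!/bin/sh
logs_path="/var/www/nginxLog/"
# Yesterday's backup directory: /var/www/nginxLog/YYYY/MM/DD/
date_dir=${logs_path}$(date -d "-1 day" +"%Y")/$(date -d "-1 day" +"%m")/$(date -d "-1 day" +"%d")/
# Backup directory from two days ago, whose logs get compressed
gzip_date_dir=${logs_path}$(date -d "-2 day" +"%Y")/$(date -d "-2 day" +"%m")/$(date -d "-2 day" +"%d")/

mkdir -p ${date_dir}
# Move the current logs (written during yesterday) into yesterday's directory
mv ${logs_path}*.log ${date_dir}
# Tell nginx to reopen its log files: $(...) runs cat, a quoted 'cat ...' would not
kill -HUP $(cat /var/www/nginxLog/nginx.pid)
/usr/bin/gzip ${gzip_date_dir}*.log
```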
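Before putting the script into cron, the rotation steps can be dry-run against a scratch directory. The sketch below wraps the same commands in a function. The `rotate_logs` name and its two parameters are hypothetical and not part of LogBackup.sh. It assumes GNU `date` (for `-d "-1 day"`) and `gzip` are on the PATH. It only signals nginx when the given pid file exists, so it is safe to run in a test directory:

```sh
#!/bin/sh
# Sketch: the LogBackup.sh steps wrapped in a function so they can be
# dry-run against a scratch directory. rotate_logs and its parameters are
# hypothetical; GNU date (for -d "-1 day") and gzip are assumed.

rotate_logs() {
    logs_path=$1   # base log directory, with a trailing "/"
    pid_file=$2    # nginx pid file; nginx is only signalled if it exists

    # Same directories as the original, built with one date call each:
    # yesterday's logs go to .../YYYY/MM/DD/, the day before gets gzipped.
    date_dir=${logs_path}$(date -d "-1 day" +%Y/%m/%d)/
    gzip_date_dir=${logs_path}$(date -d "-2 day" +%Y/%m/%d)/

    mkdir -p "$date_dir"

    # Move the current logs (skip silently when there are none).
    for f in "$logs_path"*.log; do
        if [ -e "$f" ]; then mv "$f" "$date_dir"; fi
    done

    # Ask nginx to reopen its log files.
    if [ -f "$pid_file" ]; then
        kill -HUP "$(cat "$pid_file")"
    fi

    # Compress the logs from two days ago.
    for f in "$gzip_date_dir"*.log; do
        if [ -e "$f" ]; then gzip "$f"; fi
    done
}
```

On the server, the original script could then be scheduled for 00:04 with a crontab entry such as `4 0 * * * /bin/sh /path/to/LogBackup.sh`, where the path is a placeholder.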

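1.2. Configure BCompare.exe (Beyond Compare) and create a .bat file that runs on a daily schedule. See the Beyond Compare help for details on its usage.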

AutoParser.bat: syncs the logs, then analyzes them. It runs every day at 6:00 as a Windows scheduled task.

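```bat
rem Sync the logs from the server with Beyond Compare (script below)
"C:\Program Files\Beyond Compare\BCompare.exe" "@F:\Logs\scripts\nginx.txt"
rem Analyze the synced logs
"C:\tools\app\LogParserTool.exe" 2
```

F:\Logs\scripts\nginx.txt, the Beyond Compare script. It loads the saved session "Sync NginxLog" and copies new and updated files from the left side to the right:

```
load "Sync NginxLog"
sync update:left->right
```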

2. Analyzing the logs with the LogParser API

2.1. Register the COM DLL

```bat
regsvr32 "C:\tools\app\LogParser.dll"
```

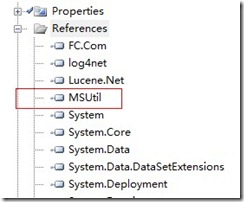

2.2. Reference the COM component

Once the registered COM component is referenced in the project, the API can be used.

2.3. Core code

Add the `using` aliases:

```csharp
using LogQuery = MSUtil.LogQueryClassClass;
using NCSALogInputFormat = MSUtil.COMIISNCSAInputContextClassClass;
using LogRecordSet = MSUtil.ILogRecordset;
```

Query and read the log:

```csharp
LogQuery oLogQuery = new LogQuery();
NCSALogInputFormat oInputFormat = new NCSALogInputFormat();

// {0} is replaced with path, the full path of the log file to analyze
string query = @"SELECT RemoteHostName AS clientIp,User-Agent,DateTime as Time,StatusCode AS sc-status,BytesSent AS sc-bytes,Referer,Request FROM {0}";

LogRecordSet oRecordSet = oLogQuery.Execute(string.Format(query, path), oInputFormat);

for (; !oRecordSet.atEnd(); oRecordSet.moveNext())
{
    MSUtil.ILogRecord o = oRecordSet.getRecord();

    // LogParser decodes the line with the system ANSI code page (GB2312 here);
    // turn it back into bytes and decode as UTF-8 to recover Chinese URLs.
    string req = System.Text.Encoding.UTF8.GetString(System.Text.Encoding.GetEncoding("gb2312").GetBytes(o.getValue(6).ToString()));

    // The request looks like "GET /path/file.html?a=1 HTTP/1.1"
    int firstSpace = req.IndexOf(' '), firstUrlchar = req.IndexOf('/');
    if (firstSpace == -1 || firstUrlchar == -1)
        continue; // skip malformed request lines

    int end = req.LastIndexOf("HTTP/");
    if (end < firstUrlchar)
        end = req.Length; // no "HTTP/x.y" suffix

    string method = req.Substring(0, firstSpace);
    // Trim() drops the space before "HTTP/"; otherwise ".html " would have
    // length 6 and be discarded as an extension below.
    string url = req.Substring(firstUrlchar, end - firstUrlchar).Trim();

    string ext = string.Empty;
    string sFile = url;
    int idx = url.IndexOf("?");
    if (idx != -1)
    {
        sFile = url.Substring(0, idx); // path without the query string
        ext = System.IO.Path.GetExtension(sFile);
    }
    else
    {
        ext = System.IO.Path.GetExtension(url);
    }

    // More than 5 characters is not treated as a file extension
    if (ext.Length > 5)
    {
        ext = "";
        sFile = url;
    }

    DateTime dtNow1 = DateTime.Now;

    DetailObject item = new DetailObject()
    {
        IP = (string)((o.getValue(0) is System.DBNull) ? "" : o.getValue(0)),
        Agent = (string)((o.getValue(1) is System.DBNull) ? "" : o.getValue(1)),
        Time = (DateTime)o.getValue(2),
        Status = (int)((o.getValue(3) is System.DBNull) ? 0 : o.getValue(3)),
        Size = (int)((o.getValue(4) is System.DBNull) ? 0 : o.getValue(4)),
        Refer = (string)((o.getValue(5) is System.DBNull) ? "" : o.getValue(5)),
        Url = url,
        Method = method,
        Ext = ext,
        File = sFile
    };
}
```

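Note the parts that were highlighted in red in the original post: that processing is done in plain C#. My first version did it directly in LogParser and was about 10 times slower.

Everything after that is straightforward.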
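The request-line split in the loop is the part most worth checking against real samples. Below is a minimal sketch of the same rules in POSIX shell, for trying sample requests by hand. `parse_request` is a hypothetical helper, not part of the tool described here. It approximates `Path.GetExtension` by taking the text after the last dot of the last path segment:

```sh
#!/bin/sh
# Sketch of the request-line split from the C# loop, in POSIX shell.
# parse_request is a hypothetical helper; it prints method, url, file
# and extension separated by tabs.
parse_request() {
    req=$1                        # e.g. "GET /a/b.html?x=1 HTTP/1.1"
    method=${req%% *}             # text before the first space
    url=/${req#*/}                # from the first "/" onwards
    url=${url% HTTP/*}            # drop the trailing " HTTP/x.y", if any
    file=${url%%\?*}              # path without the query string
    base=${file##*/}              # last path segment

    # Rough stand-in for Path.GetExtension: text after the last dot.
    ext=""
    case $base in
        *.*) ext=.${base##*.} ;;
    esac
    if [ "$ext" = "." ]; then ext=""; fi

    # Same rule as the C# code: more than 5 characters is not an extension.
    if [ ${#ext} -gt 5 ]; then
        ext=""
        file=$url
    fi
    printf '%s\t%s\t%s\t%s\n' "$method" "$url" "$file" "$ext"
}
```

For example, `parse_request "GET /house/list.html?page=2 HTTP/1.1"` prints `GET`, `/house/list.html?page=2`, `/house/list.html` and `.html`, separated by tabs.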

Here is a snippet of the IP-monitoring code, which shows when a single IP exceeds its limit. `item` is the `DetailObject` built above:

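```csharp
#region Record IP (this block must run first)
// dailyIpItem is assumed to be a Dictionary<string, UserViewObject>,
// with one entry per IP + User-Agent + day
UserViewObject sdiIP;
string keyIp = string.Format("ip_{0}_{1}_{2}", item.IP, item.Agent, item.Time.ToString("yyyyMMdd"));
if (dailyIpItem.ContainsKey(keyIp))
{
    sdiIP = dailyIpItem[keyIp];
}
else
{
    sdiIP = new UserViewObject()
    {
        ItemName = "IP",
        Ip = item.IP,
        Agent = item.Agent,
        Day = item.Time.Date
    };
    dailyIpItem.Add(keyIp, sdiIP);
}
sdiIP.PV++;               // one more page view for this IP/agent today
sdiIP.Size += item.Size;  // total bytes sent to this IP/agent today
#endregion
```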

![screenshot](https://images.cnblogs.com/cnblogs_com/yinpengxiang/WindowsLiveWriter/AWStatsJAWStats_87E6/G%5DTPNP%5B5%60$HSVIS%60IP0B%60EJ_thumb.jpg)
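The same per-IP, per-User-Agent, per-day counting can be approximated on the Linux side with awk. This is handy for a quick check before the Windows job runs. The sketch assumes nginx's default `combined` log format. `ip_over_limit` and its threshold argument are hypothetical. Unlike the C# snippet, it prints the offending keys directly and also totals the bytes sent per key:

```sh
#!/bin/sh
# Sketch: count page views and bytes per (IP, User-Agent, day) in an nginx
# "combined" log and print the keys whose page views exceed a limit.
# ip_over_limit is a hypothetical helper. Usage: ip_over_limit LOGFILE LIMIT
ip_over_limit() {
    awk -F'"' -v limit="$2" '
    {
        # Splitting on double quotes: $1 = "ip - user [time] ",
        # $3 = " status bytes ", $6 = user agent.
        split($1, head, " ")
        ip = head[1]
        day = substr($1, index($1, "[") + 1, 11)   # e.g. 10/Oct/2011
        split($3, sb, " ")
        key = ip "\t" $6 "\t" day
        pv[key]++
        bytes[key] += sb[2]
    }
    END {
        for (key in pv)
            if (pv[key] > limit + 0)
                print key "\t" pv[key] "\t" bytes[key]
    }' "$1"
}
```

For example, `ip_over_limit access.log 1000` would list every IP/agent/day combination with more than 1,000 requests. The threshold here is only illustrative.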