Overview of cluster concepts

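HA (High Availability) refers to a high-availability cluster, often built as a two-node (active/standby) cluster system. It is an effective way to ensure business continuity. Such a cluster generally has two or more nodes, divided into active nodes and standby nodes.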

1. Cluster types:

LB: load-balancing clusters

LVS load balancing

nginx reverse proxy

HAProxy

HA: high-availability clusters

heartbeat

keepalived

RedHat 5: cman + rgmanager, conga (Web GUI) --> RHCS (Red Hat Cluster Suite)

RedHat 6: cman + rgmanager, corosync + pacemaker

RedHat 7: corosync + pacemaker

HP: high-performance clusters

2. System availability formula

A = MTBF / (MTBF + MTTR)

A: availability; typical targets are 95%, 99%, 99.5%, ..., 99.999%, 99.9999%, and so on

MTBF: mean time between failures

MTTR: mean time to repair
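The formula is easy to evaluate by hand, but a small Bash helper makes the link between an availability target and the downtime it allows concrete. This is a sketch that assumes MTBF and MTTR are given in the same unit (for example hours) and that a year has 365 days; the function names are made up for this example.

```bash
#!/usr/bin/env bash
# availability MTBF MTTR -> prints A = MTBF / (MTBF + MTTR) as a percentage.
# Both arguments must use the same time unit (for example hours).
availability() {
  awk -v f="$1" -v r="$2" 'BEGIN { printf "%.4f%%\n", f / (f + r) * 100 }'
}

# allowed_downtime PERCENT -> minutes of downtime per 365-day year permitted by that availability.
allowed_downtime() {
  awk -v a="$1" 'BEGIN { printf "%.1f\n", (1 - a / 100) * 365 * 24 * 60 }'
}

availability 999 1        # 999 h up, 1 h to repair -> 99.9000%
allowed_downtime 99.9     # -> 525.6 minutes (about 8.8 hours per year)
allowed_downtime 99.999   # -> 5.3 minutes ("five nines")
```

Each extra "9" cuts the allowed downtime by a factor of ten, which is why the higher targets require automatic failover rather than manual repair.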

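Keepalived concepts

Keepalived official website: http://www.keepalived.org

Keepalived was originally designed for the LVS load balancer, to manage and monitor the state of each service node in an LVS cluster. VRRP support was added later to provide high availability. As a result, besides managing LVS, Keepalived can also serve as a high-availability solution for other services (for example Nginx, HAProxy, MySQL).

Keepalived implements high availability mainly through VRRP, short for Virtual Router Redundancy Protocol. VRRP was created to eliminate the single point of failure of static routing: it keeps the network running without interruption when the single master node goes down.

In summary, Keepalived can configure and manage LVS and health-check the nodes behind LVS on the one hand, and provide high availability for system network services on the other.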

Keepalived features

Managing the LVS load balancer

Health-checking the nodes of an LVS cluster

Providing high availability (failover) for system network services

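How Keepalived failover works

Failover between a pair of Keepalived HA nodes is implemented with VRRP (Virtual Router Redundancy Protocol).

While Keepalived is working normally, the Master node continuously sends heartbeat (advertisement) messages to the Backup node via multicast to tell it that the Master is still alive. When the Master fails, it can no longer send these messages. The Backup stops receiving them, runs its takeover procedure, and takes over the Master's IP resources and services. When the Master recovers (in the default preemptive mode), the Backup releases the IP resources and services it took over and returns to its standby role.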

VRRP:

VRRP, the Virtual Router Redundancy Protocol, was created to solve the single point of failure of static routing. Through an election mechanism it hands the routing task to one of the VRRP routers.
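The election can be sketched in a few lines of Bash. This is a simplified model, not keepalived's code: it assumes the RFC 5798 rule (the highest priority wins; on a tie, the higher primary IPv4 address wins) and ignores preemption, nopreempt, timers and the FAULT state. The node names and addresses are illustrative.

```bash
#!/usr/bin/env bash
# Simplified VRRP master election:
# the highest priority wins; on a tie the higher primary IPv4 address wins.

# ip_to_int A.B.C.D -> prints the address as a 32-bit integer so addresses can be compared.
ip_to_int() {
  local -a o
  IFS=. read -r -a o <<< "$1"
  echo $(( (o[0] << 24) + (o[1] << 16) + (o[2] << 8) + o[3] ))
}

# elect_master "name:priority:ip" ... -> prints the name of the winning node.
elect_master() {
  local best_name="" best_prio=-1 best_ip=-1 entry name prio ip ipnum
  for entry in "$@"; do
    IFS=: read -r name prio ip <<< "$entry"
    ipnum=$(ip_to_int "$ip")
    if (( prio > best_prio || (prio == best_prio && ipnum > best_ip) )); then
      best_name=$name best_prio=$prio best_ip=$ipnum
    fi
  done
  echo "$best_name"
}

elect_master node1:100:192.168.130.7 node2:95:192.168.130.8    # -> node1
elect_master node1:100:192.168.130.7 node2:100:192.168.130.8   # -> node2 (tie, higher IP)
```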

Keepalived components

Package name: keepalived

Program environment:

Main configuration file: /etc/keepalived/keepalived.conf

Main program: /usr/sbin/keepalived

Environment file used by the unit file: /etc/sysconfig/keepalived

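Configuration file walkthrough

Global section:

#vim /etc/keepalived/keepalived.conf          #meaning of each section
global_defs {                                  #global defaults; most can be left as is
    notification_email {                       #recipients notified by email when the master fails
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc   #sender address; of little practical use
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL                        #identifier of this router; not critical
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.44.44              #custom multicast group, so other hosts on the same segment are not affected by the advertisements
    vrrp_iptables                              #can be added to the global defaults to stop keepalived from generating iptables rules after a restart, which could otherwise keep the master from working again
}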

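Virtual router instance section

vrrp_instance VI_1 {                #VIP settings
    state MASTER                     #initial state of this node: MASTER or BACKUP
    interface eth0                   #physical interface the instance runs on
    virtual_router_id 51             #virtual router ID, 1-255; must be the same on all nodes of this instance
    priority 100                     #priority; a larger value means higher priority (VRRP reserves 255 for the address owner)
    advert_int 1                     #advertisement (heartbeat) interval in seconds
    authentication {                 #authentication
        auth_type PASS               #simple password authentication
        auth_pass 1111               #password: letters and digits, up to 8 characters
    }
    virtual_ipaddress {              #VIP configuration
        #syntax: <IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL>
        192.168.200.17/24 dev eth1
        192.168.200.18/24 dev eth2 label eth2:1
    }
    track_interface {                #interfaces to monitor; if one fails, the instance enters the FAULT state
        eth0
        eth1
        ...
    }
    #optional settings:
    #nopreempt: use non-preemptive mode
    #preempt_delay 300: in preemptive mode, delay before a node that comes online triggers a new election
    #notify_master <STRING>|<QUOTED-STRING>: script run when this node becomes MASTER
    #notify_backup <STRING>|<QUOTED-STRING>: script run when this node becomes BACKUP
    #notify_fault <STRING>|<QUOTED-STRING>: script run when this node enters the FAULT state
    #notify <STRING>|<QUOTED-STRING>: generic notification; one script handles all three transitions above
}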

Virtual server section:

virtual_server IP port | virtual_server fwmark int {
    delay_loop <INT>                      #interval between health-check polls
    lb_algo rr|wrr|lc|wlc|lblc|sh|dh      #scheduling algorithm
    lb_kind NAT|DR|TUN                    #LVS forwarding type
    persistence_timeout <INT>             #persistent connection timeout
    protocol TCP                          #service protocol; only TCP is supported here
    sorry_server <IPADDR> <PORT>          #fallback (sorry) server used when all real servers are down
    real_server <IPADDR> <PORT> {
        weight <INT>
        notify_up <STRING>|<QUOTED-STRING>
        notify_down <STRING>|<QUOTED-STRING>
        HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK { ... }   #health-check method for this host
    }
}

HTTP_GET|SSL_GET: application-layer checks
HTTP_GET|SSL_GET {
    url {
        path <URL_PATH>               #URL to monitor
        status_code <INT>             #response code that counts as healthy
        digest <STRING>               #checksum of the response body that counts as healthy
    }
    nb_get_retry <INT>                #number of retries
    delay_before_retry <INT>          #delay before each retry
    connect_ip <IP ADDRESS>           #RS IP address the health check is sent to
    connect_port <PORT>               #RS port the health check is sent to
    bindto <IP ADDRESS>               #source address used for the health check
    bind_port <PORT>                  #source port used for the health check
    connect_timeout <INTEGER>         #connection timeout
}
TCP_CHECK {
    connect_ip <IP ADDRESS>           #RS IP address the health check is sent to
    connect_port <PORT>               #RS port the health check is sent to
    bindto <IP ADDRESS>               #source address used for the health check
    bind_port <PORT>                  #source port used for the health check
    connect_timeout <INTEGER>         #connection timeout
}

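Script definition:

vrrp_script <SCRIPT_NAME> {
    script ""         #script to run
    interval INT      #how often (in seconds) to run the check
    weight -INT       #if the script fails, lower the priority by INT
    rise 2            #2 consecutive successes mark the check as up
    fall 3            #3 consecutive failures mark the check as down
}
vrrp_instance VI_1 {
    track_script {    #reference the scripts inside the virtual router instance
        SCRIPT_NAME_1
        SCRIPT_NAME_2
        ...
    }
}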
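To see how weight interacts with priority, here is a Bash sketch. It assumes the semantics described in the keepalived.conf man page: a negative weight is applied while the script is failing, a positive weight while it is succeeding, and the result is assumed to stay within 1-254. weight 0 (which puts the instance into FAULT instead) is not modeled. The function name is illustrative.

```bash
#!/usr/bin/env bash
# effective_priority BASE WEIGHT STATUS -> prints the priority the node would advertise.
# STATUS is the check script's exit status: 0 = success, anything else = failure.
# Assumed semantics: negative weight applies on failure, positive weight on success,
# and the result is kept within 1..254. weight 0 (FAULT state) is not modeled.
effective_priority() {
  local base=$1 weight=$2 status=$3 prio=$1
  if (( weight < 0 && status != 0 )) || (( weight > 0 && status == 0 )); then
    prio=$(( base + weight ))
  fi
  (( prio > 254 )) && prio=254
  (( prio < 1 )) && prio=1
  echo "$prio"
}

# nginx example from this article: master 100 with weight -5, backup 98.
effective_priority 100 -5 0   # nginx running -> 100, the master keeps the VIP
effective_priority 100 -5 1   # nginx stopped -> 95, lower than 98, so the backup takes over
```

The weight must be larger than the priority gap between the nodes; otherwise a failing check lowers the priority without ever moving the VIP.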

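HTTP_GET|SSL_GET: application-layer (layer 7) checks

HTTP_GET|SSL_GET {
    url {
        path <URL_PATH>: the URL to monitor;
        status_code <INT>: response code that counts as healthy;
        digest <STRING>: checksum of the response body that counts as healthy;
    }
    nb_get_retry <INT>: number of retries;
    delay_before_retry <INT>: delay before each retry;
    connect_ip <IP ADDRESS>: RS IP address the health check is sent to;
    connect_port <PORT>: RS port the health check is sent to;
    bindto <IP ADDRESS>: source address used for the health check;
    bind_port <PORT>: source port used for the health check;
    connect_timeout <INTEGER>: connection timeout, i.e. how long to wait when a request sent to the RS gets no response;
}

TCP_CHECK: transport-layer (layer 4) TCP checks

TCP_CHECK {
    connect_ip <IP ADDRESS>: RS IP address the health check is sent to;
    connect_port <PORT>: RS port the health check is sent to;
    bindto <IP ADDRESS>: source address used for the health check;
    bind_port <PORT>: source port used for the health check;
    connect_timeout <INTEGER>: connection timeout;
}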
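TCP_CHECK passes when a TCP connection to the real server can be opened within connect_timeout. A rough Bash equivalent, useful for testing a real server by hand, might look like this. It assumes Bash's /dev/tcp redirection support and the coreutils timeout command, and it is not how keepalived implements the check internally.

```bash
#!/usr/bin/env bash
# tcp_check HOST PORT [TIMEOUT] -> exit status 0 if a TCP connection to HOST:PORT
# can be opened within TIMEOUT seconds (default 2), non-zero otherwise.
tcp_check() {
  local host=$1 port=$2 timeout_s=${3:-2}
  # The child bash opens fd 3 on /dev/tcp/HOST/PORT; the redirection fails if the connection does.
  timeout "$timeout_s" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage against a real server from this article:
#   tcp_check 192.168.130.7 80 2 && echo "RS up" || echo "RS down"
```

Like TCP_CHECK, this only proves that something accepts connections on the port; HTTP_GET is needed to verify that the application actually returns a healthy response.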

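Lab: single-master configuration (one VRRP VIP, with one node as MASTER and the other as BACKUP)

Setup: both nodes are on the same network.

Lab environment: check that SELinux and iptables are disabled and that the nodes' clocks are synchronized. If the time zones differ, set them with timedatectl set-timezone Asia/Shanghai (this command is available on CentOS 7).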

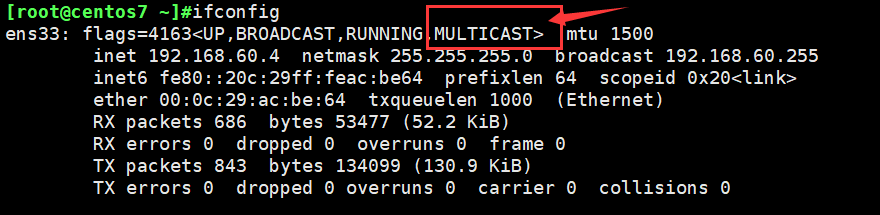

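Check that the NIC information includes the MULTICAST flag (present by default).

If it is missing, enable multicast manually:

ip link set dev ens33 multicast on     #enable multicast
ip link set dev ens33 multicast off    #disable multicast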
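To check the flag from a script rather than by eye, the flag list in angle brackets printed by ip link can be tested for MULTICAST. A small sketch; the helper name is illustrative and the interface name ens33 is the one used in this lab.

```bash
#!/usr/bin/env bash
# has_multicast FLAGS -> exit status 0 if the "<...>" flag list printed by `ip link`
# contains the MULTICAST flag as a whole word.
has_multicast() {
  local flags=${1#<}
  flags=${flags%>}
  [[ ",$flags," == *",MULTICAST,"* ]]
}

# Usage on a live host (interface name assumed to be ens33):
#   has_multicast "$(ip -o link show dev ens33 | grep -o '<[^>]*>')" \
#     && echo "multicast on" || echo "run: ip link set dev ens33 multicast on"
```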

1) On the master:

#yum install keepalived                    #install the package
#vim /etc/keepalived/keepalived.conf       #edit the configuration file
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100                 #must be higher than the backup's priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.130.200          #user-defined virtual IP address
    }
}

2) On the backup:

#yum install keepalived                    #install the package
#vim /etc/keepalived/keepalived.conf       #edit the configuration file
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_instance VI_1 {
    state BACKUP                 #set the state to backup
    interface ens33
    virtual_router_id 51
    priority 95                  #lower than the master's priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.130.200
    }
}
#systemctl start keepalived
#ip a l
ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:fd:b3:24 brd ff:ff:ff:ff:ff:ff
    inet 192.168.130.11/24 brd 192.168.130.255 scope global ens33
       valid_lft forever preferred_lft forever
    inet 192.168.130.200/32 scope global ens33    #the VIP from the configuration is bound to the backup, since the master is not running yet
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fefd:b324/64 scope link

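Now start keepalived on the master (systemctl start keepalived): the configured VIP is bound to the master's interface again.

Because this is preemptive mode, when the master comes back up it finds that its priority is higher than the backup's, so it takes the VIP back.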

Dual-master configuration: two VRRP VIPs jointly provide one service; each node is MASTER for one instance and BACKUP for the other.
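Because the two nodes' configurations differ only in the state and priority of each instance, they can be generated from one template. The Bash sketch below prints the vrrp_instance blocks for either node. The function names and the a/b role labels are made up for illustration; the interface, VRIDs, VIPs and passwords are the lab values used in the dual-master assignment later in this article.

```bash
#!/usr/bin/env bash
# gen_dual_master ROLE -> prints the two vrrp_instance blocks for one node of a dual-master pair.
# ROLE "a": MASTER for VI_1 (VIP .100) and BACKUP for VI_2 (VIP .200); ROLE "b": the reverse.
gen_dual_master() {
  local s1 p1 s2 p2
  case $1 in
    a) s1=MASTER p1=100 s2=BACKUP p2=98 ;;
    b) s1=BACKUP p1=98 s2=MASTER p2=100 ;;
    *) echo "Usage: gen_dual_master a|b" >&2; return 1 ;;
  esac
  instance VI_1 "$s1" 51 "$p1" fd57721a 192.168.130.100/24
  instance VI_2 "$s2" 52 "$p2" 4a9a407a 192.168.130.200/24
}

# instance NAME STATE VRID PRIORITY PASS VIP -> prints one vrrp_instance block.
instance() {
  cat <<EOF
vrrp_instance $1 {
    state $2
    interface ens33
    virtual_router_id $3
    priority $4
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $5
    }
    virtual_ipaddress {
        $6 dev ens33
    }
}
EOF
}

# Usage: gen_dual_master a > node1-instances.conf; gen_dual_master b > node2-instances.conf
```

Generating both files from one function keeps the shared values (VRIDs, passwords, VIPs) identical on both nodes, which VRRP requires, while the priorities are always swapped.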

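Custom notification scripts (send an email telling which state this node is currently in)

Built on top of the single-master keepalived setup above.

notify_master <STRING>|<QUOTED-STRING>: script run when this node becomes MASTER;

notify_backup <STRING>|<QUOTED-STRING>: script run when this node becomes BACKUP;

notify_fault <STRING>|<QUOTED-STRING>: script run when this node enters the FAULT state;

notify <STRING>|<QUOTED-STRING>: generic notification mechanism; a single script can handle notifications for all three transitions above;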

#cd /etc/keepalived
#vim notify.sh
#!/bin/bash
#
contact='root@localhost'
notify() {
    local mailsubject="$(hostname) to be $1, vip floating"
    local mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"
    echo "$mailbody" | mail -s "$mailsubject" "$contact"
}
case $1 in
master)
    notify master
    ;;
backup)
    notify backup
    ;;
fault)
    notify fault
    ;;
*)
    echo "Usage: $(basename "$0") {master|backup|fault}"
    exit 1
    ;;
esac
#yum install mailx -y     #provides the mail command
#chmod +x notify.sh       #make the script executable

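How to call the scripts

#vim /etc/keepalived/keepalived.conf
vrrp_instance VI_1 {
    ...                          #settings from the single-master example
    virtual_ipaddress {
        192.168.130.200
    }
    track_interface {
        ens33
    }
    notify_master "/etc/keepalived/notify.sh master"
    notify_backup "/etc/keepalived/notify.sh backup"
    notify_fault "/etc/keepalived/notify.sh fault"
}
#systemctl start keepalived
#mail          #read the messages: they show the master/backup transitions and the current state of each node

Purpose: the experiment above shows that a custom script can perform any task you define, so you can write other scripts to run whatever actions you need on a state change.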

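Lab: high availability for LVS with keepalived

Environment:

The clocks of all nodes must be synchronized;

timedatectl set-timezone Asia/Shanghai    #sets the time zone; available on CentOS 7

Make sure iptables and SELinux are configured correctly;

Nodes should be able to reach each other by hostname (not required by keepalived); using the /etc/hosts file is recommended;

Make sure the interfaces used for cluster services support MULTICAST (class D addresses: 224-239);

ip link set dev eth0 multicast off | on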

1) On the master LVS node

~]# yum install keepalived
~]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost                     #recipient
    }
    notification_email_from keepalived@localhost   #sender
    smtp_server 127.0.0.1                  #mail server IP
    smtp_connect_timeout 30                #connection timeout
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111        #multicast group
    vrrp_iptables                          #stop keepalived from adding iptables rules
}
vrrp_instance VI_1 {                       #virtual router instance
    state MASTER                           #start as the master node
    interface ens33                        #interface the instance uses
    virtual_router_id 51                   #virtual router ID (same on both nodes)
    priority 100                           #priority
    advert_int 1                           #send an advertisement every second
    authentication {                       #authentication
        auth_type PASS                     #simple password authentication
        auth_pass 1111                     #password
    }
    virtual_ipaddress {                    #VIP and the interface it is bound to
        192.168.130.100/24 dev ens33
    }
}
virtual_server 192.168.130.100 80 {       #IPVS rule definition
    delay_loop 2                           #health-check interval: 2 seconds
    lb_algo rr                             #scheduling algorithm: round robin
    lb_kind DR                             #LVS type: DR
    protocol TCP                           #TCP
    real_server 192.168.130.7 80 {         #real server
        weight 1                           #weight 1
        HTTP_GET {                         #HTTP check
            url {
                path /                     #check the home page
                status_code 200            #200 means healthy
            }
            connect_timeout 2              #timeout
            nb_get_retry 3                 #number of retries
            delay_before_retry 1           #interval between retries
        }
    }
    real_server 192.168.130.10 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

~]# yum install ipvsadm                                #package for managing LVS
~]# ip a a 192.168.130.100/24 dev ens33 label ens33:1  #optional: bind the VIP on the director (keepalived also adds it while this node is MASTER)
~]# ip a l                                             #check that the VIP is bound to the interface
~]# systemctl start keepalived                         #start the service
~]# ipvsadm -Ln                                        #check that the IPVS rules were generated
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.130.100:80 rr persistent 50
  -> 192.168.130.7:80             Route   1      0          0
  -> 192.168.130.10:80            Route   1      0          0

2) On the backup LVS node

~]# yum install keepalived
~]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost                     #recipient
    }
    notification_email_from keepalived@localhost   #sender
    smtp_server 127.0.0.1                  #mail server IP
    smtp_connect_timeout 30                #connection timeout
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111        #multicast group
    vrrp_iptables                          #stop keepalived from adding iptables rules
}
vrrp_instance VI_1 {                       #virtual router instance
    state BACKUP                           #start as the backup node
    interface ens33                        #interface the instance uses
    virtual_router_id 51                   #virtual router ID (same on both nodes)
    priority 95                            #priority, lower than the master's
    advert_int 1                           #send an advertisement every second
    authentication {                       #authentication
        auth_type PASS                     #simple password authentication
        auth_pass 1111                     #password
    }
    virtual_ipaddress {                    #VIP and the interface it is bound to
        192.168.130.100/24 dev ens33
    }
}
virtual_server 192.168.130.100 80 {       #IPVS rule definition
    delay_loop 2                           #health-check interval: 2 seconds
    lb_algo rr                             #scheduling algorithm: round robin
    lb_kind DR                             #LVS type: DR
    protocol TCP                           #TCP
    real_server 192.168.130.7 80 {         #real server
        weight 1                           #weight 1
        HTTP_GET {                         #HTTP check
            url {
                path /                     #check the home page
                status_code 200            #200 means healthy
            }
            connect_timeout 2              #timeout
            nb_get_retry 3                 #number of retries
            delay_before_retry 1           #interval between retries
        }
    }
    real_server 192.168.130.10 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}

~]# yum install ipvsadm                  #package for managing LVS
~]# systemctl start keepalived           #start the service; this node starts as BACKUP
~]# ip a l                               #do not add the VIP manually here: keepalived binds it only while this node is MASTER
~]# ipvsadm -Ln                          #check that the IPVS rules were generated
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.130.100:80 rr persistent 50
  -> 192.168.130.7:80             Route   1      0          0
  -> 192.168.130.10:80            Route   1      0          0

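3) Configure the back-end web server RS1

#yum install httpd                             #install the package
#echo test1 > /var/www/html/index.html         #create a test page
#systemctl start httpd
Adjust the kernel ARP parameters so the real server does not answer ARP requests for the VIP (this avoids address conflicts):
#echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
#echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
#echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
#echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
#cat /proc/sys/net/ipv4/conf/all/arp_ignore     #verify the kernel parameter
1
#cat /proc/sys/net/ipv4/conf/all/arp_announce   #verify the kernel parameter
2
Bind the VIP to the loopback interface:
#ip a a 192.168.130.100/32 dev lo
This binding is temporary and is lost after a reboot.

4) Configure the back-end web server RS2

#yum install httpd                             #install the package
#echo test2 > /var/www/html/index.html         #create a test page
#systemctl start httpd
Adjust the kernel ARP parameters so the real server does not answer ARP requests for the VIP (this avoids address conflicts):
#echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
#echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
#echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
#echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
#cat /proc/sys/net/ipv4/conf/all/arp_ignore     #verify the kernel parameter
1
#cat /proc/sys/net/ipv4/conf/all/arp_announce   #verify the kernel parameter
2
Bind the VIP to the loopback interface:
#ip a a 192.168.130.100/32 dev lo
This binding is temporary and is lost after a reboot.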

5) Access from the client

#curl 192.168.130.100      #192.168.130.100 is the VIP
test2
#curl 192.168.130.100
test1
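With only two requests it is hard to tell whether rr really alternates. One way to check is to send a batch of requests and count the distinct responses. The helper below is a sketch with an illustrative name; it only counts the lines it reads on standard input, so it can be tried without the cluster.

```bash
#!/usr/bin/env bash
# tally_responses -> reads one response body per line on stdin and prints
# "COUNT BODY" for each distinct body, sorted by body.
tally_responses() {
  sort | uniq -c | awk '{ sub(/^ +/, ""); print }'
}

# Usage from the client (VIP and curl as in this lab):
#   for i in {1..10}; do curl -s 192.168.130.100; done | tally_responses
```

With rr scheduling and two healthy real servers, the two counts should be equal or differ by one; if one server's page never appears, its health check has probably removed it from the IPVS table.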

On the back-end web servers web1/web2, the settings can be reapplied with a script (for example at boot):

#!/bin/bash
#
vip="192.168.130.100/32"
iface="lo"
case $1 in
start)
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ip addr add $vip label $iface:0 broadcast ${vip%/*} dev $iface
    ip route add $vip dev $iface
    ;;
stop)
    ip route del $vip dev $iface        #delete only the VIP; flushing lo would also remove 127.0.0.1
    ip addr del $vip dev $iface
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ;;
*)
    echo "Usage: $(basename "$0") start | stop" 1>&2
    ;;
esac
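The ARP settings can also be persisted with a sysctl drop-in file so they are reapplied at boot (the VIP on lo still has to be added by the script above or another boot mechanism). A sketch, assuming a distribution that reads /etc/sysctl.d/*.conf, such as CentOS 7; the function name and the file name 90-lvs-dr.conf are arbitrary choices.

```bash
#!/usr/bin/env bash
# write_arp_sysctl FILE -> writes the LVS-DR real-server ARP settings used in this lab to FILE.
write_arp_sysctl() {
  cat > "$1" <<'EOF'
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
EOF
}

# Usage on web1/web2 (as root):
#   write_arp_sysctl /etc/sysctl.d/90-lvs-dr.conf
#   sysctl -p /etc/sysctl.d/90-lvs-dr.conf    # apply now; the file is also read at boot
```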

Lab: high availability for nginx

1. Based on the keepalived master/backup (preemptive mode) setup

1) On the master

#vim /etc/keepalived/keepalived.conf
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111
    vrrp_iptables
}
vrrp_script chk_nginx {            #check script: tests whether nginx is running on this host
    script "killall -0 nginx"      #signal 0 only checks that the process exists; exit status 0 = running (killall comes from the psmisc package)
    interval 1
    weight -5                      #when nginx is down, the priority drops by 5 (100 -> 95), below the backup's 98, so the backup takes over the VIP
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.130.200/24         #VIP
    }
    track_script {                 #reference the check script
        chk_nginx
    }
}
#yum install nginx                          #install the package
#vim /etc/nginx/conf.d/test.conf            #create an nginx configuration file
server {
    listen 80 default_server;
    server_name www.a.com;
    root /usr/share/nginx/html;
    location / {
        proxy_pass http://www;
    }
}
#vim /etc/nginx/nginx.conf
http {                                       #add the upstream inside the existing http block
    upstream www {
        server 192.168.130.7;
        server 192.168.130.10;
    }
#nginx                                      #start nginx

2) On the backup

#vim /etc/keepalived/keepalived.conf
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111
    vrrp_iptables
}
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 98
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.130.200/24         #VIP
    }
}
#yum install nginx                          #install the package
#vim /etc/nginx/conf.d/test.conf            #create an nginx configuration file
server {
    listen 80 default_server;
    server_name www.a.com;
    root /usr/share/nginx/html;
    location / {
        proxy_pass http://www;
    }
}
#vim /etc/nginx/nginx.conf
http {                                       #add the upstream inside the existing http block
    upstream www {
        server 192.168.130.7;
        server 192.168.130.10;
    }
#nginx                                      #start nginx

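2. On each of the back-end servers web1 and web2

#yum install httpd                                  #install the package
#echo test1 > /var/www/html/index.html             #test page: test1 on web1, test2 on web2

#systemctl start httpd

3. Client test

#curl 192.168.130.200
test2
#curl 192.168.130.200
test1

If nginx on the master is now stopped manually (nginx -s stop), the VIP moves to the backup node, which provides the high availability. When nginx is started on the master again, the VIP returns to the master.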

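Blog assignments

1. Dual-master model with LVS + keepalived

Environment:

The clocks of all nodes must be synchronized;

timedatectl set-timezone Asia/Shanghai    #sets the time zone; available on CentOS 7

Make sure iptables and SELinux are configured correctly;

Nodes should be able to reach each other by hostname (not required by keepalived); using the /etc/hosts file is recommended;

Make sure the interfaces used for cluster services support MULTICAST (class D addresses: 224-239);

ip link set dev eth0 multicast off | on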

1) LVS1 configuration

#yum install keepalived
#vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111
    vrrp_iptables
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100                  #MASTER for VI_1: higher than LVS2's 98
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass fd57721a
    }
    virtual_ipaddress {
        192.168.130.100/24 dev ens33
    }
}
vrrp_instance VI_2 {
    state BACKUP
    interface ens33
    virtual_router_id 52
    priority 98                   #BACKUP for VI_2: lower than LVS2's 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 4a9a407a
    }
    virtual_ipaddress {
        192.168.130.200/24 dev ens33
    }
}
virtual_server 192.168.130.100 80 {
    delay_loop 2
    lb_algo rr
    lb_kind DR
    protocol TCP
    real_server 192.168.130.7 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
    real_server 192.168.130.10 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}
virtual_server 192.168.130.200 80 {
    delay_loop 2
    lb_algo rr
    lb_kind DR
    protocol TCP
    real_server 192.168.130.7 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
    real_server 192.168.130.10 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}
#systemctl start keepalived

#ip a l

2) LVS2 configuration

# yum install keepalived

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.111.111

vrrp_iptables

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 98

advert_int 1

authentication {

auth_type PASS

auth_pass fd57721a

}

virtual_ipaddress {

192.168.130.100/24 dev ens33

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 4a9a407a

}

virtual_ipaddress {

192.168.130.200/24 dev ens33

}

}

virtual_server 192.168.130.100 80 {

delay_loop 2

lb_algo rr

lb_kind DR

protocol TCP

real_server 192.168.130.7 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.130.10 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

}

virtual_server 192.168.130.200 80 {

delay_loop 2

lb_algo rr

lb_kind DR

protocol TCP

real_server 192.168.130.7 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.130.10 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}


}

#systemctl start keepalived

#ip a l

After keepalived is started on both machines, each VIP is bound to the interface of the node that is MASTER for that instance: 192.168.130.100 on LVS1 and 192.168.130.200 on LVS2.

3) Script on the back-end web servers web1/web2

#vim /etc/web.sh
#!/bin/bash
#
vip="192.168.130.100/32"
vip2="192.168.130.200/32"
iface="lo"
case $1 in
start)
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ip addr add $vip label $iface:0 broadcast ${vip%/*} dev $iface
    ip addr add $vip2 label $iface:1 broadcast ${vip2%/*} dev $iface
    ip route add $vip dev $iface
    ip route add $vip2 dev $iface
    ;;
stop)
    ip route del $vip dev $iface        #delete only the VIPs; flushing lo would also remove 127.0.0.1
    ip route del $vip2 dev $iface
    ip addr del $vip dev $iface
    ip addr del $vip2 dev $iface
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ;;
*)
    echo "Usage: $(basename "$0") start | stop" 1>&2
    ;;
esac
#bash /etc/web.sh start

4) Access the VIPs from the client

#curl 192.168.130.200
test2
#curl 192.168.130.200
test1
#curl 192.168.130.100
test2
#curl 192.168.130.100
test1

2. keepalived + haproxy: high availability for the scheduler

On the master node

1) Configure haproxy for load balancing

#yum install haproxy -y                   #install the package
#vim /etc/haproxy/haproxy.cfg             #edit the configuration file
frontend web *:80
    default_backend websrvs
backend websrvs
    balance roundrobin
    server srv1 192.168.130.7:80 check
    server srv2 192.168.130.10:80 check

#systemctl start haproxy

2) Configure keepalived for high availability

#vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111
    vrrp_iptables
}
vrrp_script chk_haproxy {
    script "killall -0 haproxy"        #check that the haproxy process exists
    interval 1
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass fd57721a
    }
    virtual_ipaddress {
        192.168.130.100/24 dev ens33
    }
    track_script {                     #reference the check script
        chk_haproxy
    }
    notify_master "/etc/keepalived/notify.sh master"
    notify_backup "/etc/keepalived/notify.sh backup"
    notify_fault "/etc/keepalived/notify.sh fault"
}

On the backup node

1) Configure haproxy for load balancing

#yum install haproxy -y #install the package

# vim /etc/haproxy/haproxy.cfg #edit the configuration file

frontend web *:80

default_backend websrvs

backend websrvs

balance roundrobin

server srv1 192.168.130.7:80 check

server srv2 192.168.130.10:80 check

#systemctl start haproxy

2) Configure keepalived for high availability

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id node1

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 224.0.111.111

vrrp_iptables

}

vrrp_script chk_haproxy {

script "killall -0 haproxy" #check that the haproxy process exists

interval 1

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 98

advert_int 1

authentication {

auth_type PASS

auth_pass fd57721a

}

virtual_ipaddress {

192.168.130.100/24 dev ens33

}

track_script { #reference the check script

chk_haproxy

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

On the web servers: configure the script (carried over from the LVS-DR lab; HAProxy connects to the real servers' own addresses, so the VIP on lo and the ARP settings are not strictly required here)

#vim /etc/web.sh
#!/bin/bash
#
vip="192.168.130.100/32"
vip2="192.168.130.200/32"
iface="lo"
case $1 in
start)
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ip addr add $vip label $iface:0 broadcast ${vip%/*} dev $iface
    ip addr add $vip2 label $iface:1 broadcast ${vip2%/*} dev $iface
    ip route add $vip dev $iface
    ip route add $vip2 dev $iface
    ;;
stop)
    ip route del $vip dev $iface        #delete only the VIPs; flushing lo would also remove 127.0.0.1
    ip route del $vip2 dev $iface
    ip addr del $vip dev $iface
    ip addr del $vip2 dev $iface
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ;;
*)
    echo "Usage: $(basename "$0") start | stop" 1>&2
    ;;
esac
#bash /etc/web.sh start

Access from the client

#curl 192.168.130.100:80
test2
#curl 192.168.130.100:80
test1

3. Maintenance mode for keepalived using a check script

! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 224.0.111.111
    vrrp_iptables
}
vrrp_script chk_down {
    script "/bin/bash -c '[[ -f /etc/keepalived/down ]] && exit 1 || exit 0'"   #in keepalived the test must explicitly be run as an argument to bash
    interval 1
    weight -10
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass fd57721a
    }
    virtual_ipaddress {
        192.168.130.100/24 dev ens33
    }
    track_script {
        chk_down                       #reference the script
    }
    notify_master "/etc/keepalived/notify.sh master"
    notify_backup "/etc/keepalived/notify.sh backup"
    notify_fault "/etc/keepalived/notify.sh fault"
}

Test: creating the down file lowers the priority by 10, so the VIP floats to node2 and node1 enters maintenance mode:
[root@node1 ~]# touch /etc/keepalived/down
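Entering and leaving maintenance mode then comes down to creating and removing the flag file. A small helper sketch: the function names and the KA_DOWN_FILE override are hypothetical additions (the override makes it possible to try the helper without touching /etc/keepalived), while the default path is the one checked by chk_down above.

```bash
#!/usr/bin/env bash
# down_file -> path of the maintenance flag file (override with KA_DOWN_FILE for testing).
down_file() { echo "${KA_DOWN_FILE:-/etc/keepalived/down}"; }

# ka_maint on|off|status -> create/remove the flag file, or report the current mode.
ka_maint() {
  case $1 in
    on)     touch "$(down_file)" ;;
    off)    rm -f "$(down_file)" ;;
    status) if [[ -f $(down_file) ]]; then echo maintenance; else echo normal; fi ;;
    *)      echo "Usage: ka_maint on|off|status" >&2; return 1 ;;
  esac
}

# chk_down_status -> same result as the chk_down script: exit 1 while the flag file exists, else 0.
chk_down_status() {
  [[ -f $(down_file) ]] && return 1
  return 0
}

# Usage on node1 (as root): ka_maint on; ...maintenance work...; ka_maint off
```

Remember that removing the file only restores the priority; in preemptive mode node1 then takes the VIP back automatically.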

4. Write Ansible roles to deploy keepalived + nginx in batch: high availability for nginx reverse proxies in a dual-master model

All of the following is done on the ansible host.

1) Key-based SSH authentication:

[root@ansible ~]# vim cpkey.sh
#!/bin/bash
rpm -q expect &>/dev/null || yum -q -y install expect
[ ! -e ~/.ssh/id_rsa ] && ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa &>/dev/null
read -p "Host_ip_list: " ip_list_file
read -p "Username: " username
read -s -p "Password: " password
[ -z "$ip_list_file" -o -z "$username" -o -z "$password" ] && echo "input error!" && exit 1
[ ! -e "$ip_list_file" ] && echo "$ip_list_file not exist." && exit 1
localhost_ip=$(hostname -I | cut -d' ' -f1)
#authorize the key on this host first, so the copied ~/.ssh contains authorized_keys
expect <<EOF
set timeout 10
spawn ssh-copy-id -i /root/.ssh/id_rsa.pub $localhost_ip
expect {
    "yes/no" { send "yes\n"; exp_continue }
    "password" { send "$password\n" }
}
expect eof
EOF
#log in to every host once (accepts the host keys)
while read ipaddr1; do
expect <<EOF
set timeout 10
spawn ssh ${username}@${ipaddr1} ':'
expect {
    "yes/no" { send "yes\n"; exp_continue }
    "password" { send "$password\n" }
}
expect eof
EOF
done < "$ip_list_file"
#copy ~/.ssh (keys, authorized_keys, known_hosts) to every host
while read ipaddr2; do
expect <<EOF
set timeout 10
spawn scp -pr .ssh/ ${username}@${ipaddr2}:
expect {
    "yes/no" { send "yes\n"; exp_continue }
    "password" { send "$password\n" }
}
expect eof
EOF
done < "$ip_list_file"
[root@ansible ~]# vim iplist.txt
192.168.130.6
192.168.130.7
192.168.130.8
192.168.130.9
192.168.130.11
192.168.130.12
[root@ansible ~]# chmod +x cpkey.sh
[root@ansible ~]# ./cpkey.sh
Host_ip_list: iplist.txt     #file with the list of IP addresses
Username: root
Password: centos             #the same password on all hosts

2) Let the internal hosts communicate by hostname:

[root@ansible ~]# vim /etc/hosts
192.168.130.7 keep1
192.168.130.8 keep2
192.168.130.10 web1
192.168.130.11 web2
192.168.130.6 DNS
192.168.130.9 ansible
[root@ansible ~]# yum install ansible -y        #from the EPEL repository
[root@ansible ~]# vim /etc/ansible/hosts
[keep]
192.168.130.7
192.168.130.8
[web]
192.168.130.10
192.168.130.11
[dns]
192.168.130.6
[root@ansible ~]# ansible all -m copy -a 'src=/etc/hosts dest=/etc/hosts backup=yes'

3) Write a role that deploys the web service

[root@ansible ~]# mkdir -p ansible/roles/web/{tasks,templates,files,handlers}
[root@ansible ~]# cd ansible/
[root@ansible ansible]# vim roles/web/tasks/install.yml
- name: install httpd
  yum: name=httpd state=present
[root@ansible ansible]# vim roles/web/tasks/copy.yml
- name: copy config file
  template: src=httpd.conf.j2 dest=/etc/httpd/conf/httpd.conf
  notify: restart service
- name: copy index.html
  template: src=index.html.j2 dest=/var/www/html/index.html owner=apache
  notify: restart service
[root@ansible ansible]# vim roles/web/tasks/start.yml
- name: start httpd
  service: name=httpd state=started
[root@ansible ansible]# vim roles/web/tasks/main.yml
- import_tasks: install.yml
- import_tasks: copy.yml
- import_tasks: start.yml
[root@ansible ansible]# yum install httpd -y
[root@ansible ansible]# cp /etc/httpd/conf/httpd.conf roles/web/templates/httpd.conf.j2
[root@ansible ansible]# vim roles/web/templates/httpd.conf.j2     #change the ServerName line to:
ServerName {{ ansible_fqdn }}
[root@ansible ansible]# vim roles/web/templates/index.html.j2
{{ ansible_fqdn }} test page.
[root@ansible ansible]# vim roles/web/handlers/main.yml
- name: restart service
  service: name=httpd state=restarted
[root@ansible ansible]# vim web.yml
---
- hosts: web
  remote_user: root
  roles:
    - web
...
[root@ansible ansible]# ansible-playbook web.yml

4) Write a role that deploys the nginx reverse proxy

[root@ansible ansible]# mkdir -p roles/nginx_proxy/{files,handlers,tasks,templates}
[root@ansible ansible]# vim roles/nginx_proxy/tasks/install.yml
- name: install nginx
  yum: name=nginx state=present
[root@ansible ansible]# vim roles/nginx_proxy/tasks/copy.yml
- name: copy config file
  template: src=nginx.conf.j2 dest=/etc/nginx/nginx.conf
  notify: restart service
[root@ansible ansible]# vim roles/nginx_proxy/tasks/start.yml
- name: start nginx
  service: name=nginx state=started
[root@ansible ansible]# vim roles/nginx_proxy/tasks/main.yml
- import_tasks: install.yml
- import_tasks: copy.yml
- import_tasks: start.yml
[root@ansible ansible]# yum install nginx -y
[root@ansible ansible]# cp /etc/nginx/nginx.conf roles/nginx_proxy/templates/nginx.conf.j2
[root@ansible ansible]# vim roles/nginx_proxy/templates/nginx.conf.j2     #edit the http block as follows
http {
    upstream websrvs {              #IP addresses of the back-end web servers
        server 192.168.130.10;
        server 192.168.130.11;
    }
    server {
        listen 80 default_server;
        server_name _;
        root /usr/share/nginx/html;
        location / {
            proxy_pass http://websrvs;
        }
    }
}
[root@ansible ansible]# vim roles/nginx_proxy/handlers/main.yml
- name: restart service
  service: name=nginx state=restarted
[root@ansible ansible]# vim nginx_proxy.yml
---
- hosts: keep
  remote_user: root
  roles:
    - nginx_proxy
...
[root@ansible ansible]# ansible-playbook nginx_proxy.yml

5) Write a role that uses keepalived to make the nginx reverse proxies highly available

[root@ansible ansible]# ansible 192.168.130.7 -m hostname -a 'name=node1'
[root@ansible ansible]# ansible 192.168.130.8 -m hostname -a 'name=node2'
[root@ansible ansible]# mkdir -p roles/keepalived/{files,handlers,tasks,templates,vars}
[root@ansible ansible]# vim roles/keepalived/tasks/install.yml      #installation tasks
- name: install keepalived
  yum: name=keepalived state=present
[root@ansible ansible]# vim roles/keepalived/tasks/copy.yml         #tasks that copy the configuration file
- name: copy configure file
  template: src=keepalived.conf.j2 dest=/etc/keepalived/keepalived.conf
  notify: restart service
  when: ansible_fqdn == "node1"        #conditional copy: the first configuration goes to node1
- name: copy configure file2
  template: src=keepalived.conf2.j2 dest=/etc/keepalived/keepalived.conf
  notify: restart service
  when: ansible_fqdn == "node2"        #the second configuration goes to node2
[root@ansible ansible]# vim roles/keepalived/tasks/start.yml        #start the service
- name: start keepalived
  service: name=keepalived state=started
[root@ansible ansible]# vim roles/keepalived/tasks/main.yml
- import_tasks: install.yml
- import_tasks: copy.yml
- import_tasks: start.yml
[root@ansible ansible]# vim roles/keepalived/vars/main.yml          #custom variables
kepd_vrrp_mcast_group4: "224.0.111.222"          #multicast group
kepd_interface_1: "ens33"
kepd_virtual_router_id_1: "51"                   #virtual router ID
kepd_priority_1: "100"                           #priority
kepd_auth_pass_1: "fd57721a"                     #simple authentication password, 8 characters
kepd_virtual_ipaddress_1: "192.168.130.100/24"   #VIP; in production this would be a public address
kepd_interface_2: "ens33"
kepd_virtual_router_id_2: "52"
kepd_priority_2: "98"
kepd_auth_pass_2: "41af6acc"
kepd_virtual_ipaddress_2: "192.168.130.200/24"
[root@ansible ansible]# yum install keepalived -y
[root@ansible ansible]# cp /etc/keepalived/keepalived.conf roles/keepalived/templates/keepalived.conf.j2
[root@ansible ansible]# vim roles/keepalived/templates/keepalived.conf.j2     #edit the configuration template
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node1
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 {{ kepd_vrrp_mcast_group4 }}
    vrrp_iptables
}
vrrp_script chk_nginx {                 #check script definition
    script "killall -0 nginx"
    interval 1
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface {{ kepd_interface_1 }}
    virtual_router_id {{ kepd_virtual_router_id_1 }}
    priority {{ kepd_priority_1 }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass {{ kepd_auth_pass_1 }}
    }
    virtual_ipaddress {
        {{ kepd_virtual_ipaddress_1 }}
    }
    track_script {
        chk_nginx
    }
}
vrrp_instance VI_2 {
    state BACKUP
    interface {{ kepd_interface_2 }}
    virtual_router_id {{ kepd_virtual_router_id_2 }}
    priority {{ kepd_priority_2 }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass {{ kepd_auth_pass_2 }}
    }
    virtual_ipaddress {
        {{ kepd_virtual_ipaddress_2 }}
    }
    track_script {
        chk_nginx
    }
}
[root@ansible ansible]# cp roles/keepalived/templates/keepalived.conf.j2 roles/keepalived/templates/keepalived.conf2.j2
[root@ansible ansible]# vim roles/keepalived/templates/keepalived.conf2.j2    #second configuration: apart from router_id, only state and priority differ from the first
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node2
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
    vrrp_mcast_group4 {{ kepd_vrrp_mcast_group4 }}
    vrrp_iptables
}
vrrp_script chk_nginx {
    script "killall -0 nginx"
    interval 1
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface {{ kepd_interface_1 }}
    virtual_router_id {{ kepd_virtual_router_id_1 }}
    priority {{ kepd_priority_2 }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass {{ kepd_auth_pass_1 }}
    }
    virtual_ipaddress {
        {{ kepd_virtual_ipaddress_1 }}
    }
    track_script {
        chk_nginx
    }
}
vrrp_instance VI_2 {
    state MASTER
    interface {{ kepd_interface_2 }}
    virtual_router_id {{ kepd_virtual_router_id_2 }}
    priority {{ kepd_priority_1 }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass {{ kepd_auth_pass_2 }}
    }
    virtual_ipaddress {
        {{ kepd_virtual_ipaddress_2 }}
    }
    track_script {
        chk_nginx
    }
}
[root@ansible ansible]# vim roles/keepalived/handlers/main.yml     #restart the service when the configuration changes
- name: restart service
  service: name=keepalived state=restarted
[root@ansible ansible]# vim keepalived.yml
---
- hosts: keep
  remote_user: root
  roles:
    - keepalived
...
[root@ansible ansible]# ansible-playbook keepalived.yml

6) Configure DNS

[root@DNS ~]# yum install bind -y
[root@DNS ~]# vim /etc/named.conf          #comment out the following settings
//listen-on port 53 { 127.0.0.1; };
//allow-query     { localhost; };
[root@DNS ~]# vim /etc/named.rfc1912.zones
zone "magedu.tech" {
    type master;
    file "magedu.tech.zone";
};
[root@DNS ~]# vim /var/named/magedu.tech.zone
$TTL 1D
@       IN SOA  DNS1.magedu.tech. admin.magedu.tech. ( 1 1D 1H 1W 3H )
        NS      DNS1
DNS1    A       192.168.130.6
www     A       192.168.130.100
www     A       192.168.130.200
[root@DNS ~]# named-checkconf
[root@DNS ~]# named-checkzone "magedu.tech" /var/named/magedu.tech.zone
zone magedu.tech/IN: loaded serial 1
OK
[root@DNS ~]# service named start
Starting named: named: already running                     [  OK  ]
[root@DNS ~]# dig www.magedu.tech @192.168.130.6
; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.62.rc1.el6 <<>> www.magedu.tech @192.168.130.6
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 9734
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 1, ADDITIONAL: 1
;; QUESTION SECTION:
;www.magedu.tech.               IN      A
;; ANSWER SECTION:
www.magedu.tech.        86400   IN      A       192.168.130.200
www.magedu.tech.        86400   IN      A       192.168.130.100
;; AUTHORITY SECTION:
magedu.tech.            86400   IN      NS      dns1.magedu.tech.
;; ADDITIONAL SECTION:
dns1.magedu.tech.       86400   IN      A       192.168.130.6
;; Query time: 0 msec
;; SERVER: 192.168.130.6#53(192.168.130.6)
;; WHEN: Sat Jul 14 18:20:56 2018
;; MSG SIZE  rcvd: 100

7) Simulated client test

[root@client ~]# vim /etc/resolv.conf
nameserver 192.168.130.6
[root@client ~]# for i in {1..2}; do curl www.magedu.tech; done
web2 test page.
web1 test page.