爬取视频详情:http://www.id97.com/

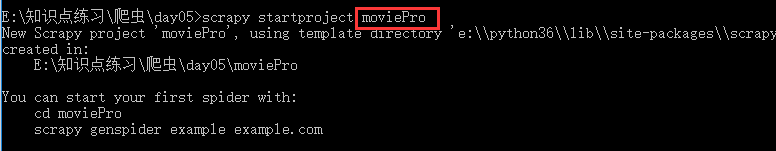

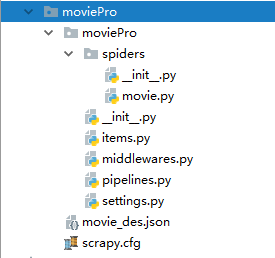

创建环境:

movie.py 爬虫文件的设置:

# -*- coding: utf-8 -*- import scrapy from moviePro.items import MovieproItem class MovieSpider(scrapy.Spider): name = 'movie' # allowed_domains = ['www.id97.com'] start_urls = ['http://www.id97.com/'] def secondPageParse(self,response): item = response.meta['item'] item['actor']=response.xpath('/html/body/div[1]/div/div/div[1]/div[1]/div[2]/table/tbody/tr[1]/td[2]/a/text()').extract_first() item['show_time'] = response.xpath('/html/body/div[1]/div/div/div[1]/div[1]/div[2]/table/tbody/tr[7]/td[2]/text()').extract_first() yield item def parse(self, response): div_list=response.xpath('/html/body/div[1]/div[2]/div[1]/div/div') for div in div_list: item = MovieproItem() item['name']=div.xpath('./div/div[@class="meta"]//a/text()').extract_first() #类型下面有多个a标签,所以使用//text,另外取到的是多个值,所以就用extract取值 item['kind']=div.xpath('./div/div[@class="meta"]/div[@class="otherinfo"]//text()').extract() #拿到的是列表类型,要转为字符串类型 item['kind'] = ''.join(item['kind']) #拿到二次连接,用于发请求,拿到电影详细的描述信息 item['url'] = div.xpath('./div/div[@class="meta"]//a/@href').extract_first() #将item对象参给二级页面方法,进而将内容存入到item里面 yield scrapy.Request(url=item['url'],callback=self.secondPageParse,meta={'item':item})

items.py里面的设置:

# -*- coding: utf-8 -*- # Define here the models for your scraped items # # See documentation in: # https://doc.scrapy.org/en/latest/topics/items.html import scrapy class MovieproItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() name=scrapy.Field() kind=scrapy.Field() url=scrapy.Field() actor=scrapy.Field() show_time=scrapy.Field()

pipelines.py管道里面设置:

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html import json class MovieproPipeline(object): def process_item(self, item, spider): dic_item={ '电影名字':item['name'], '影片类型':item['kind'], '主演':item['actor'], '上映时间':item['show_time'], } json_str=json.dumps(dic_item,ensure_ascii=False) with open('./movie_des.json','at',encoding='utf-8') as f: f.write(json_str) print(item['name']) return item

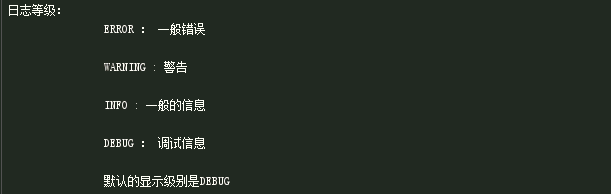

日志等级设置:

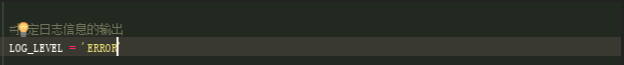

手动设置日志等级,在settings里面设置(可以写在任意位置)

将制定日志信息,写入到文件中进行存储: