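【Introduction】

1. LZO files are not splittable on their own, but once an index is built for an LZO-compressed file, it can be split.

2. A stock Linux install does not include LZO support, so we need to install three packages: lzo, lzop, and hadoop-gpl-packaging.

3. The main job of hadoop-gpl-packaging is to build indexes for LZO-compressed files. Without an index, an LZO file cannot be split: no matter how large it is, it is processed by a single map task.

【Note】None of my data is still larger than 128 MB after compression. To demonstrate that an unindexed LZO file is handled by a single map task even when it spans more than one block, I lowered the block size to 10 MB.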

[hadoop@hadoop001 hadoop]$ vi hdfs-site.xml

<property>

<name>dfs.blocksize</name>

<value>10485760</value>

</property>

【Install the dependencies】

Before installing anything, run the following command first

[hadoop@hadoop001 ~]$ which lzop

/usr/bin/lzop

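【Note】This output means lzop is already installed. If the command is not found, run the commands below.

# If it is missing, run the following install commands 【they must be run as root, otherwise you will not have permission】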

[root@hadoop001 ~]# yum install -y svn ncurses-devel

[root@hadoop001 ~]# yum install -y gcc gcc-c++ make cmake

[root@hadoop001 ~]# yum install -y openssl openssl-devel svn ncurses-devel zlib-devel libtool

[root@hadoop001 ~]# yum install -y lzo lzo-devel lzop autoconf automake cmake

[root@hadoop001 ~]# yum -y install lzo-devel zlib-devel gcc autoconf automake libtool
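Before running the yum commands, it can help to list which tools are actually missing. The following is a minimal sketch; `missing_tools` is a hypothetical helper name, and the tool list is only my assumption about what the later steps in this post need:

```shell
# missing_tools TOOL...
# Prints the name of every tool that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
  return 0
}

# Tools used later in this walkthrough (assumed list; adjust as needed).
missing_tools lzop gcc make mvn unzip wget
```

If nothing is printed, every tool in the list was found and the corresponding install command can be skipped.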

【Compress the test data file with lzop】

Compress with LZO: lzop -v filename

Decompress with LZO: lzop -dv filename

[hadoop@hadoop001 data]$ ll

-rw-r--r-- 1 hadoop hadoop 68051224 Apr 17 17:37 part-r-00000

[hadoop@hadoop001 data]$ lzop -v part-r-00000

compressing part-r-00000 into part-r-00000.lzo

[hadoop@hadoop001 data]$ ll

-rw-r--r-- 1 hadoop hadoop 68051224 Apr 17 17:37 part-r-00000

-rw-r--r-- 1 hadoop hadoop 29975501 Apr 17 17:37 part-r-00000.lzo ## the compressed test data

[hadoop@hadoop001 data]$ pwd

/home/hadoop/data

[hadoop@hadoop001 data]$ du -sh /home/hadoop/data/*

65M /home/hadoop/data/part-r-00000

29M /home/hadoop/data/part-r-00000.lzo
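The compression ratio follows directly from the two byte sizes in the ll listing above. A small sketch using shell integer arithmetic; `ratio_percent` is a hypothetical helper, not part of lzop:

```shell
# ratio_percent COMPRESSED_BYTES ORIGINAL_BYTES
# Prints the compressed size as a whole-number percentage of the original.
ratio_percent() {
  echo $(( $1 * 100 / $2 ))
}

ratio_percent 29975501 68051224   # sizes taken from the ll output above
```

This prints 44, so the .lzo file is roughly 44% of the size of the original.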

【Install hadoop-lzo】

[hadoop@hadoop001 app]$ wget https://github.com/twitter/hadoop-lzo/archive/master.zip

--2019-04-18 14:02:32-- https://github.com/twitter/hadoop-lzo/archive/master.zip

Resolving github.com... 13.250.177.223, 52.74.223.119, 13.229.188.59

Connecting to github.com|13.250.177.223|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://codeload.github.com/twitter/hadoop-lzo/zip/master [following]

--2019-04-18 14:02:33-- https://codeload.github.com/twitter/hadoop-lzo/zip/master

Resolving codeload.github.com... 13.229.189.0, 13.250.162.133, 54.251.140.56

Connecting to codeload.github.com|13.229.189.0|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: unspecified [application/zip]

Saving to: “master.zip”

[ <=> ] 1,040,269 86.7K/s in 11s

2019-04-18 14:02:44 (95.4 KB/s) - “master.zip” saved [1040269]

[hadoop@hadoop001 app]$ ll

-rw-rw-r-- 1 hadoop hadoop 1040269 Apr 18 14:02 master.zip

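[hadoop@hadoop001 app]$ unzip master.zip

[hadoop@hadoop001 app]$ ll

-rw-rw-r-- 1 hadoop hadoop 1040269 Apr 18 14:02 master.zip

drwxrwxr-x 5 hadoop hadoop 4096 Apr 17 13:42 hadoop-lzo-master # the extracted directory; GitHub names the top-level directory of an archive <repo>-<branch>, hence hadoop-lzo-master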

[hadoop@hadoop001 hadoop-lzo-master]$ pwd

/home/hadoop/app/hadoop-lzo-master

[hadoop@hadoop001 hadoop-lzo-master]$ vi pom.xml

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<hadoop.current.version>2.6.0</hadoop.current.version>

<hadoop.old.version>1.0.4</hadoop.old.version>

</properties>

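【### I have not yet worked out exactly what these four steps are for and will update this post when I do. Another blog I followed does not include them, so they are probably not required. For what it is worth, -m64 asks the compiler for a 64-bit build, and C_INCLUDE_PATH and LIBRARY_PATH add extra header and library search directories for gcc.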

[hadoop@hadoop001 hadoop-lzo-master]$ export CFLAGS=-m64

[hadoop@hadoop001 hadoop-lzo-master]$ export CXXFLAGS=-m64

[hadoop@hadoop001 hadoop-lzo-master]$ export C_INCLUDE_PATH=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/lzo/include

[hadoop@hadoop001 hadoop-lzo-master]$ export LIBRARY_PATH=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/lzo/lib

###】

【Build with Maven】

[hadoop@hadoop001 hadoop-lzo-master]$ mvn clean package -Dmaven.test.skip=true

# Maven build: wait for BUILD SUCCESS, which means the build succeeded; it takes a few minutes

【Pitfall】My first run failed immediately with this error:

Could not create local repository at /root/maven_repo/repo -> [Help 1]

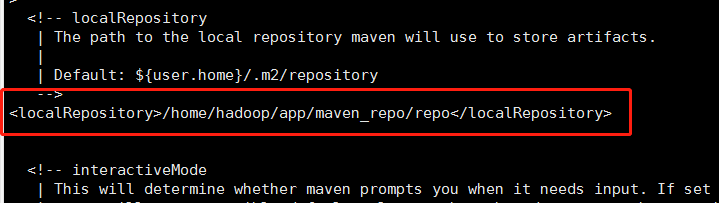

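As soon as I saw this error I suspected a user mismatch. When I compiled Hadoop earlier, I used the root user to avoid permission problems, so the local Maven repository was created under root's home directory. I had not anticipated that this build would need the same repository, so the error was quite discouraging.

How I fixed it:

Attempt 1: grant maximum permissions on the maven_repo directory and everything under it. Failed.

Attempt 2: change the owner and group of maven_repo to hadoop. Failed.

Attempt 3: I thought of this at the start but was afraid it would cause problems, so I held off. With no other option left, I tried it:

Move the maven_repo directory into the app directory under the hadoop user's home.

Then edit settings.xml in the Maven installation directory so that the local repository path matches the new location of maven_repo.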

[hadoop@hadoop001 repo]$ pwd

/home/hadoop/app/maven_repo/repo
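A likely reason the first two attempts failed is that the repository sat under /root, a directory that non-root users normally cannot enter, so changing the permissions or ownership of maven_repo alone does not help. The check below is a sketch of how to test whether a candidate repository path is usable by the current user before pointing Maven at it; `repo_usable` is a hypothetical helper, not a Maven command.

```shell
# repo_usable DIR
# Succeeds if DIR, or its nearest existing ancestor, is a directory the
# current user can both write to and enter, i.e. files could be created there.
repo_usable() (
  dir=$1
  # Walk up until we reach a path that exists (and is visible to this user).
  while [ ! -e "$dir" ] && [ "$dir" != "/" ]; do
    dir=$(dirname "$dir")
  done
  [ -d "$dir" ] && [ -w "$dir" ] && [ -x "$dir" ]
)

for candidate in /root/maven_repo/repo "$HOME/app/maven_repo/repo"; do
  if repo_usable "$candidate"; then
    echo "usable: $candidate"
  else
    echo "not usable: $candidate"
  fi
done
```

Maven also accepts the repository location on the command line through the standard maven.repo.local property, for example mvn clean package -Dmaven.test.skip=true -Dmaven.repo.local=$HOME/app/maven_repo/repo, which avoids editing settings.xml.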

Run the build command again

[hadoop@hadoop001 hadoop-lzo-master]$ mvn clean package -Dmaven.test.skip=true

BUILD SUCCESS

It worked! Hooray!

【Check the build output: hadoop-lzo-0.4.21-SNAPSHOT.jar is the jar we need】

[hadoop@hadoop001 target]$ pwd

/home/hadoop/app/hadoop-lzo-master/target

[hadoop@hadoop001 target]$ ll

total 448

drwxrwxr-x 2 hadoop hadoop 4096 Apr 17 13:43 antrun

drwxrwxr-x 5 hadoop hadoop 4096 Apr 17 13:43 apidocs

drwxrwxr-x 5 hadoop hadoop 4096 Apr 17 13:42 classes

drwxrwxr-x 3 hadoop hadoop 4096 Apr 17 13:42 generated-sources

-rw-rw-r-- 1 hadoop hadoop 180807 Apr 17 13:43 hadoop-lzo-0.4.21-SNAPSHOT.jar

-rw-rw-r-- 1 hadoop hadoop 184553 Apr 17 13:43 hadoop-lzo-0.4.21-SNAPSHOT-javadoc.jar

-rw-rw-r-- 1 hadoop hadoop 52024 Apr 17 13:43 hadoop-lzo-0.4.21-SNAPSHOT-sources.jar

drwxrwxr-x 2 hadoop hadoop 4096 Apr 17 13:43 javadoc-bundle-options

drwxrwxr-x 2 hadoop hadoop 4096 Apr 17 13:43 maven-archiver

drwxrwxr-x 3 hadoop hadoop 4096 Apr 17 13:42 native

drwxrwxr-x 3 hadoop hadoop 4096 Apr 17 13:43 test-classes

【Deploy the jar】

Copy hadoop-lzo-0.4.21-SNAPSHOT.jar into $HADOOP_HOME/share/hadoop/common/ so that Hadoop can load it

[hadoop@hadoop001 hadoop-lzo-master]$ cp target/hadoop-lzo-0.4.21-SNAPSHOT.jar ~/app/hadoop-2.6.0-cdh5.7.0/share/hadoop/common/

[hadoop@hadoop001 hadoop-lzo-master]$ ll ~/app/hadoop-2.6.0-cdh5.7.0/share/hadoop/common/hadoop-lzo*

-rw-rw-r-- 1 hadoop hadoop 180807 Apr 17 13:52 /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/share/hadoop/common/hadoop-lzo-0.4.21-SNAPSHOT.jar

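【Configure core-site.xml and mapred-site.xml】

【Note】Stop the cluster before editing the configuration. Otherwise you may hit this pitfall: if you edit the files first and then stop and restart the cluster, it fails to start and reports that a port is already in use, yet jps shows no such process. In other words, the old process is hung.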

[hadoop@hadoop001 ~]$ vim ~/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop/core-site.xml

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec,

com.hadoop.compression.lzo.LzoCodec,

com.hadoop.compression.lzo.LzopCodec

</value>

</property>

<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>

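【Explanation】The key point is registering the com.hadoop.compression.lzo.LzoCodec and com.hadoop.compression.lzo.LzopCodec compression classes.

io.compression.codec.lzo.class must be set to LzoCodec, not LzopCodec; otherwise the compressed files will not support splitting.

[hadoop@hadoop001 ~]$ vim ~/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop/mapred-site.xml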

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

<property>

<name>mapreduce.output.fileoutputformat.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.output.fileoutputformat.compress.codec</name>

<value>org.apache.hadoop.io.compress.BZip2Codec</value>

</property>

【Start Hadoop】

[hadoop@hadoop001 sbin]$ pwd

/home/hadoop/app/hadoop/sbin

[hadoop@hadoop001 sbin]$ start-all.sh

【Start Hive and test splitting ## everything below runs in Hive's default database】

【Create the table】

CREATE EXTERNAL TABLE g6_access_lzo (

cdn string,

region string,

level string,

time string,

ip string,

domain string,

url string,

traffic bigint)

PARTITIONED BY (

day string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ' '

STORED AS INPUTFORMAT "com.hadoop.mapred.DeprecatedLzoTextInputFormat"

OUTPUTFORMAT "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat"

LOCATION '/g6/hadoop/access/compress';

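【Note】# This creates the test table for LZO-compressed files. If the hadoop-lzo jar is missing from Hadoop's common directory, Hive reports that the class DeprecatedLzoTextInputFormat cannot be found.

【Add the partition】

alter table g6_access_lzo add if not exists partition(day='20190418');

【Load the LZO-compressed data from the local filesystem into g6_access_lzo】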

LOAD DATA LOCAL INPATH '/home/hadoop/data/part-r-00000.lzo' OVERWRITE INTO TABLE g6_access_lzo partition (day="20190418");

[hadoop@hadoop001 sbin]$ hadoop fs -du -s -h /g6/hadoop/access/compress/day=20190418/*

28.6 M 28.6 M /g6/hadoop/access/compress/day=20190418/part-r-00000.lzo

【Query test】

hive (default)> select count(1) from g6_access_lzo;

## From the job log ## Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.69 sec HDFS Read: 29982759 HDFS Write: 57 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 690 msec

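【Conclusion 1】The block size is set to 10 MB and part-r-00000.lzo (28.6 MB) is well above that, yet only one map task ran. An LZO file without an index is therefore not split.

【Testing LZO with splitting enabled】

# Enable output compression. The codec must be set to LzopCodec; LzoCodec produces files with the .lzo_deflate suffix, which cannot be indexed.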

SET hive.exec.compress.output=true;

SET mapreduce.output.fileoutputformat.compress.codec=com.hadoop.compression.lzo.LzopCodec;

【Create the split test table】

create table g6_access_lzo_split

STORED AS INPUTFORMAT "com.hadoop.mapred.DeprecatedLzoTextInputFormat"

OUTPUTFORMAT "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat"

as select * from g6_access;

hive (default)> desc formatted g6_access_lzo_split; # find where the table data is stored

Location: hdfs://hadoop001:9000/user/hive/warehouse/g6_access_lzo_split

[hadoop@hadoop001 sbin]$ hadoop fs -du -s -h /user/hive/warehouse/g6_access_lzo_split/*

28.9 M 28.9 M /user/hive/warehouse/g6_access_lzo_split/000000_0.lzo

# Build the index for the LZO file with the utility class from the jar we built earlier

[hadoop@hadoop001 ~]$ hadoop jar ~/app/hadoop-2.6.0-cdh5.7.0/share/hadoop/common/hadoop-lzo-0.4.21-SNAPSHOT.jar com.hadoop.compression.lzo.LzoIndexer /user/hive/warehouse/g6_access_lzo_split

[hadoop@hadoop001 sbin]$ hadoop fs -du -s -h /user/hive/warehouse/g6_access_lzo_split/*

28.9 M 28.9 M /user/hive/warehouse/g6_access_lzo_split/000000_0.lzo

2.3 K 2.3 K /user/hive/warehouse/g6_access_lzo_split/000000_0.lzo.index
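The .index file is tiny because, as far as I understand the hadoop-lzo format, it stores one 8-byte offset per compressed block. That layout is my assumption and is not shown in the output above. Under that assumption, the number of indexed blocks is simply the file size divided by 8. `index_entry_count` is a hypothetical helper that works on a local copy of an index file:

```shell
# index_entry_count LOCAL_INDEX_FILE
# Assumption: the index is a flat sequence of 8-byte block offsets.
index_entry_count() {
  size=$(wc -c < "$1")
  echo $(( size / 8 ))
}

# Self-contained demo: a fake 24-byte "index" holding three entries.
demo=$(mktemp)
head -c 24 /dev/zero > "$demo"
index_entry_count "$demo"
rm -f "$demo"
```

To try it on the real file, copy the index down first, for example with hadoop fs -get /user/hive/warehouse/g6_access_lzo_split/000000_0.lzo.index . and pass the local path to the helper. If the assumption holds, a 2.3 K index corresponds to a little under 300 blocks.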

【Query test】

hive (default)> select count(1) from g6_access_lzo_split;

Stage-Stage-1: Map: 3 Reduce: 1 Cumulative CPU: 5.85 sec HDFS Read: 30504786 HDFS Write: 57 SUCCESS

Total MapReduce CPU Time Spent: 5 seconds 850 msec

【Conclusion 2】This time there are 3 map tasks, which shows that an LZO-compressed file can be split once its index has been built.
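The map count of 3 is consistent with a rough estimate from the file and block sizes. The sketch below assumes the commonly described FileInputFormat rule (keep cutting split-sized pieces while the remainder exceeds 1.1 times the split size) and assumes the split size equals the block size. `expected_splits` is a hypothetical helper, not part of Hadoop, and 30303846 bytes is only my approximation of the 28.9 M shown above. Splits of an indexed LZO file are also aligned to compressed-block boundaries, so the real count can differ slightly.

```shell
# expected_splits FILE_BYTES SPLIT_BYTES
# Hypothetical helper: estimates the split count for a splittable file.
# Assumption: split size == block size, and the last split may be up to
# 1.1x the split size.
expected_splits() (
  remaining=$1
  split=$2
  count=0
  # Test remaining/split > 1.1 using integer math only.
  while [ $(( remaining * 10 )) -gt $(( split * 11 )) ]; do
    remaining=$(( remaining - split ))
    count=$(( count + 1 ))
  done
  # Whatever is left over forms the final split.
  if [ "$remaining" -gt 0 ]; then
    count=$(( count + 1 ))
  fi
  echo "$count"
)

expected_splits 30303846 10485760    # ~28.9 MB file, 10 MB blocks
expected_splits 30303846 134217728   # same file, default 128 MB blocks
```

With these inputs the sketch prints 3 and then 1, which is why the block size had to be lowered for the demonstration.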

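【Summary】

Of the compression formats commonly used in big data, only bzip2 is splittable out of the box; LZO becomes splittable only after an index has been built for the file.

【Reference blogs】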

https://my.oschina.net/u/4005872/blog/3036700

https://blog.csdn.net/qq_32641659/article/details/89339471