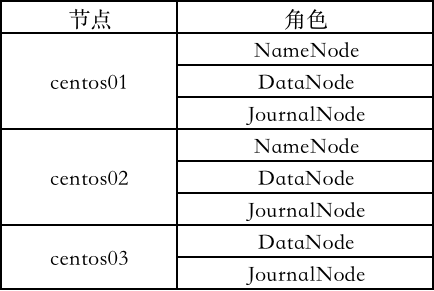

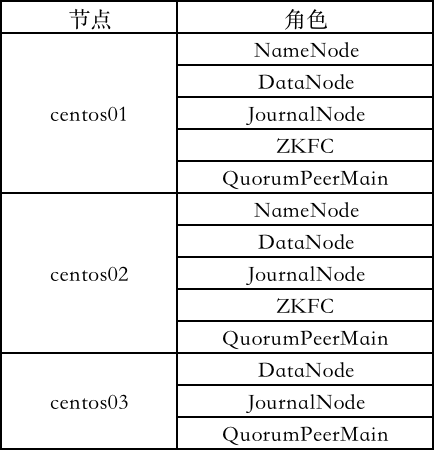

This article starts from an existing Hadoop cluster and converts it to a high-availability (HA) setup.

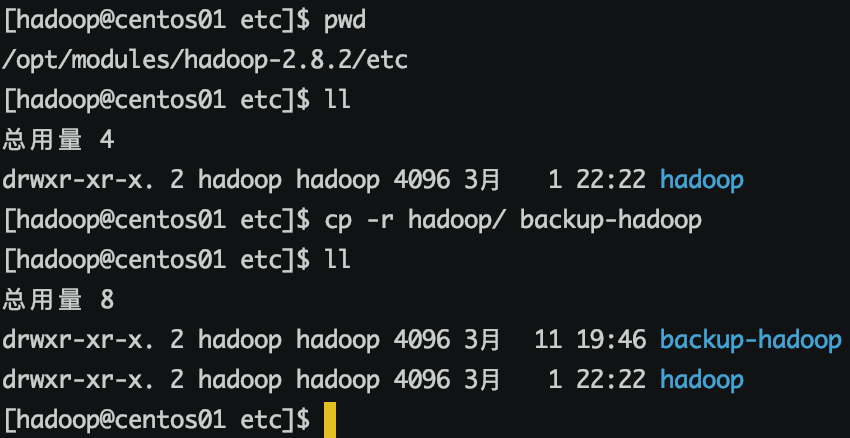

First, back up the configuration and data on all three nodes:

/opt/modules/hadoop-2.8.2/etc/hadoop (configuration directory)

/opt/modules/hadoop-2.8.2/tmp (data directory)

cp -r hadoop/ backup-hadoop

cp -r tmp/ backup-tmp
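The two cp commands above overwrite nothing only as long as backup-hadoop and backup-tmp do not exist yet. A minimal sketch of a safer variant follows; the helper name backup_dir and the timestamped naming scheme are assumptions for illustration, not part of the original procedure.

```shell
# backup_dir SRC [STAMP]: copy SRC to a sibling directory named
# backup-<basename>-<STAMP> and print the destination path.
# Refuses to run if SRC is missing or the destination already exists.
backup_dir() {
    src=${1%/}                            # strip one trailing slash, if any
    stamp=${2:-$(date +%Y%m%d-%H%M%S)}    # default: current timestamp
    dest="$(dirname "$src")/backup-$(basename "$src")-$stamp"
    if [ ! -d "$src" ]; then
        echo "source directory not found: $src" >&2
        return 1
    fi
    if [ -e "$dest" ]; then
        echo "backup already exists: $dest" >&2
        return 1
    fi
    cp -r "$src" "$dest" && echo "$dest"
}
```

Called as `backup_dir /opt/modules/hadoop-2.8.2/etc/hadoop` and `backup_dir /opt/modules/hadoop-2.8.2/tmp` on each node, it leaves every earlier backup untouched.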

I. Configuring hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- mycluster is a user-defined nameservice ID; the settings below refer to it -->

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- Identifiers of the two NameNodes -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<!-- Host and RPC port of each NameNode -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>centos01:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>centos02:8020</value>

</property>

<!-- Web UI address of each NameNode -->

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>centos01:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>centos02:50070</value>

</property>

<!-- URL of the JournalNode group -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://centos01:8485;centos02:8485;centos03:8485/mycluster</value>

</property>

<!-- Directory where each JournalNode stores edits and state -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/modules/hadoop-2.8.2/tmp/dfs/jn</value>

</property>

<!-- Class that clients use to locate the active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing method, which prevents split-brain in the HA cluster -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- Private key used for the SSH connection above -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<!-- hadoop is the current user name -->

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/modules/hadoop-2.8.2/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/modules/hadoop-2.8.2/tmp/dfs/data</value>

</property>

</configuration>
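A missing or misspelled property name in this file tends to surface only later as a confusing startup error. The sketch below greps the file for the HA property names used in this article. The function name missing_ha_keys is an assumption, and the check is purely textual: it does not parse XML and will not notice a property that is commented out.

```shell
# missing_ha_keys FILE NAMESERVICE NN_ID...
# Print every HA property name that FILE does not define.
# Returns 0 when nothing is missing, 1 otherwise.
missing_ha_keys() {
    file=$1
    ns=$2
    shift 2
    keys="dfs.nameservices dfs.ha.namenodes.$ns"
    keys="$keys dfs.namenode.shared.edits.dir"
    keys="$keys dfs.client.failover.proxy.provider.$ns"
    keys="$keys dfs.ha.fencing.methods"
    for nn in "$@"; do
        keys="$keys dfs.namenode.rpc-address.$ns.$nn"
        keys="$keys dfs.namenode.http-address.$ns.$nn"
    done
    status=0
    for key in $keys; do
        # -F matches the name literally, -q reports through the exit status only
        if ! grep -qF "<name>$key</name>" "$file"; then
            echo "$key"
            status=1
        fi
    done
    return $status
}
```

For the configuration above, `missing_ha_keys /opt/modules/hadoop-2.8.2/etc/hadoop/hdfs-site.xml mycluster nn1 nn2` should print nothing.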

II. Configuring core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<!-- <value>hdfs://centos01:9000</value> -->

<value>hdfs://mycluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/modules/hadoop-2.8.2/tmp</value>

</property>

</configuration>

Changing hdfs://centos01:9000 to hdfs://mycluster lets Hadoop look up the two NameNodes that belong to the nameservice when it starts.

Copy hdfs-site.xml and core-site.xml to the other two nodes:

scp /opt/modules/hadoop-2.8.2/etc/hadoop/hdfs-site.xml hadoop@centos02:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/core-site.xml hadoop@centos02:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/hdfs-site.xml hadoop@centos03:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/core-site.xml hadoop@centos03:/opt/modules/hadoop-2.8.2/etc/hadoop/

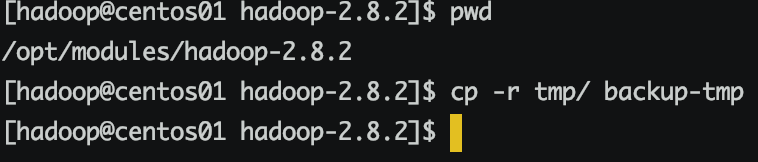
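The same four copies can be written as a loop, which is less error-prone when the files are synced again later. This is only a sketch: the function name sync_conf and the DRY_RUN switch are assumptions, and it presumes the hadoop user can reach the other nodes over SSH with the same install path everywhere, as in this article.

```shell
# Install path taken from this article; assumed identical on every node.
CONF_DIR=/opt/modules/hadoop-2.8.2/etc/hadoop

# sync_conf HOSTS FILES: copy each file in FILES (space-separated names
# inside CONF_DIR) to each host in HOSTS (space-separated).
# With DRY_RUN=1 the scp commands are printed instead of executed.
sync_conf() {
    for host in $1; do
        for f in $2; do
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "scp $CONF_DIR/$f hadoop@$host:$CONF_DIR/"
            else
                scp "$CONF_DIR/$f" "hadoop@$host:$CONF_DIR/" || return 1
            fi
        done
    done
}
```

Running `sync_conf "centos02 centos03" "hdfs-site.xml core-site.xml"` on centos01 performs the copies; setting DRY_RUN=1 first shows what would be copied.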

III. Startup and testing

1. Start the JournalNode processes

Delete all files under $HADOOP_HOME/tmp on every node.

Then start the JournalNode process on each of the three nodes:

sh /opt/modules/hadoop-2.8.2/sbin/hadoop-daemon.sh start journalnode

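2. Format the NameNode

Run this on centos01 (a node that hosts a NameNode), from the Hadoop installation directory:

bin/hdfs namenode -format

The format succeeded if the output contains this line: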

common.Storage: Storage directory /opt/modules/hadoop-2.8.2/tmp/dfs/name has been successfully formatted.
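The success message is easy to miss in the rest of the log output. A small sketch of an automated check follows; the function name format_succeeded and the idea of capturing the output to a log file are assumptions, not part of the original steps.

```shell
# format_succeeded LOG DIR: succeed only if LOG contains the
# "successfully formatted" message for storage directory DIR.
format_succeeded() {
    # -F: treat the message as a literal string, -q: exit status only
    grep -qF "Storage directory $2 has been successfully formatted." "$1"
}
```

One way to use it is to capture the output with `bin/hdfs namenode -format 2>&1 | tee /tmp/nn-format.log` (the log path is hypothetical) and then run `format_succeeded /tmp/nn-format.log /opt/modules/hadoop-2.8.2/tmp/dfs/name && echo formatted`.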

3. Start NameNode1 (the active NameNode)

On centos01, start NameNode1:

sh /opt/modules/hadoop-2.8.2/sbin/hadoop-daemon.sh start namenode

Once it is running, the fsimage metadata files are generated.

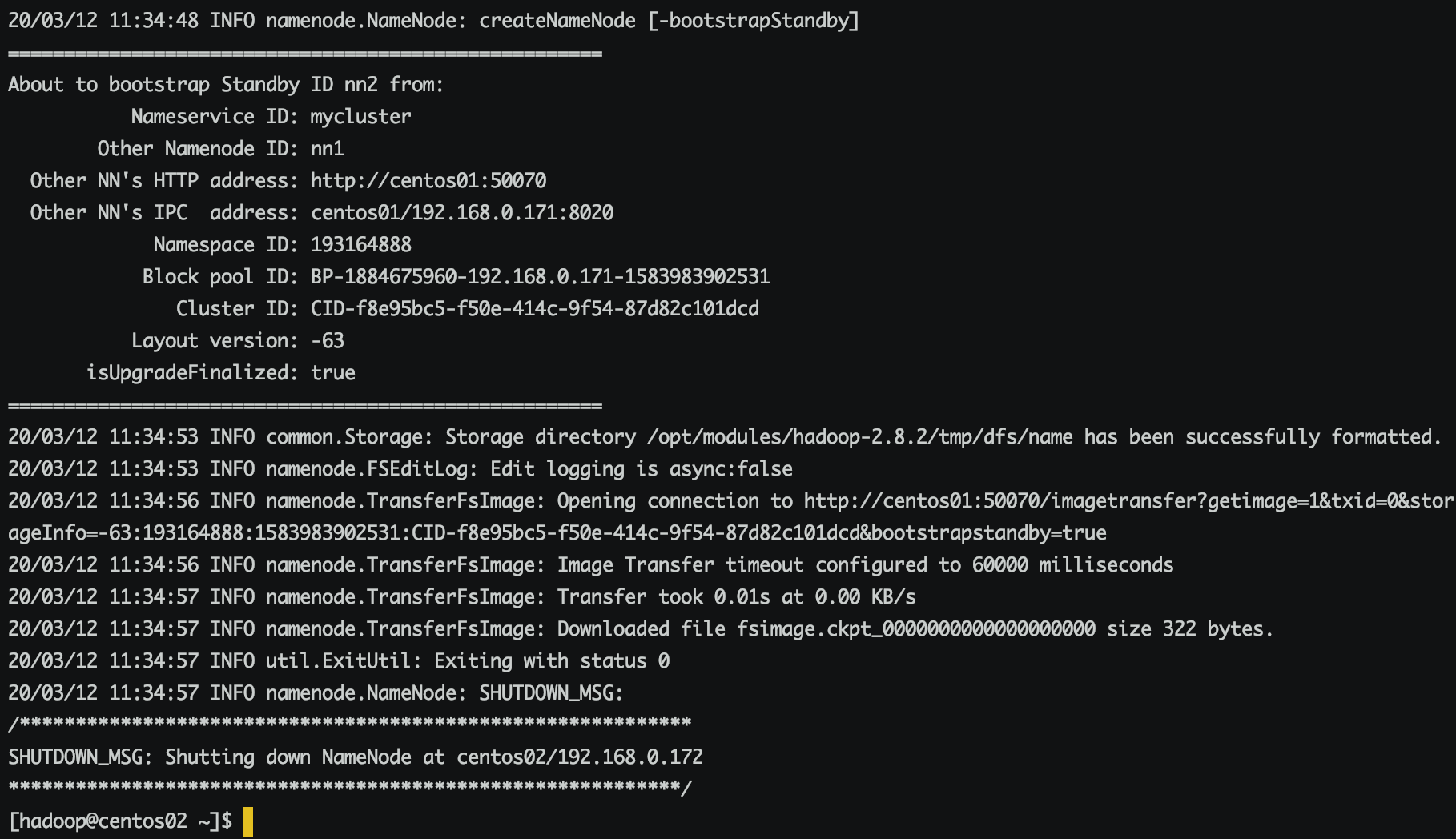

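4. Copy NameNode1's metadata

On centos02, run the following command to copy the NameNode metadata from centos01 to centos02 (alternatively, copy the $HADOOP_HOME/tmp directory from centos01 to the same location on centos02):

sh /opt/modules/hadoop-2.8.2/bin/hdfs namenode -bootstrapStandby

The copy succeeded if the output contains this line: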

common.Storage: Storage directory /opt/modules/hadoop-2.8.2/tmp/dfs/name has been successfully formatted.

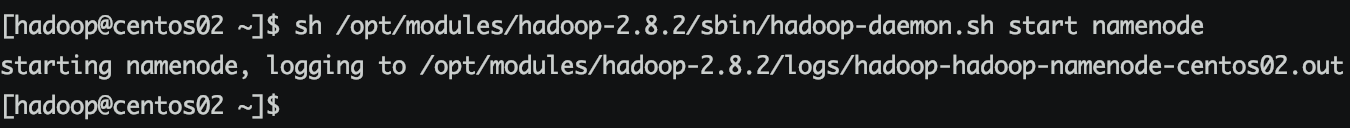

5. Start NameNode2 (the standby NameNode)

On centos02, start NameNode2:

sh /opt/modules/hadoop-2.8.2/sbin/hadoop-daemon.sh start namenode

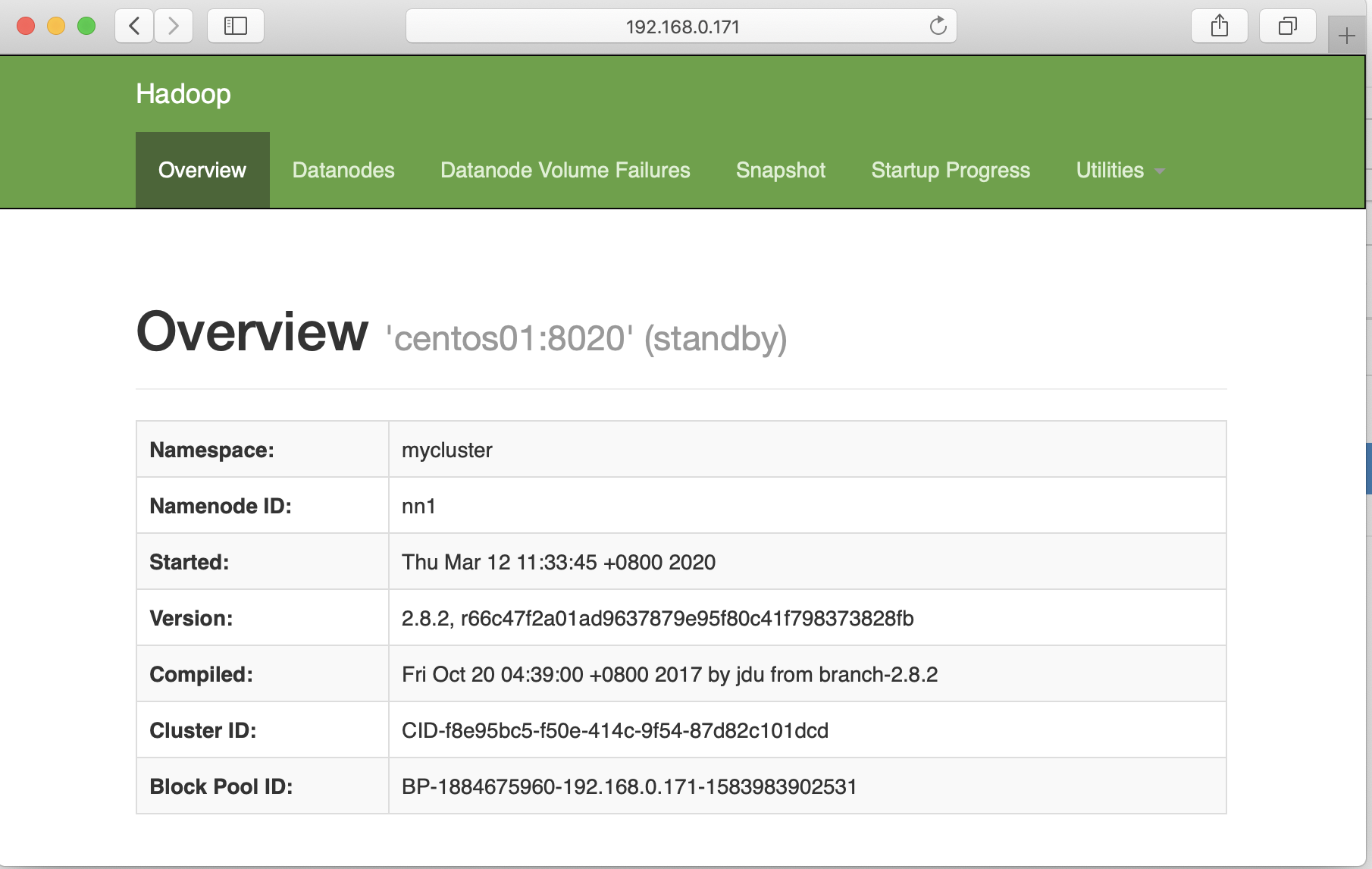

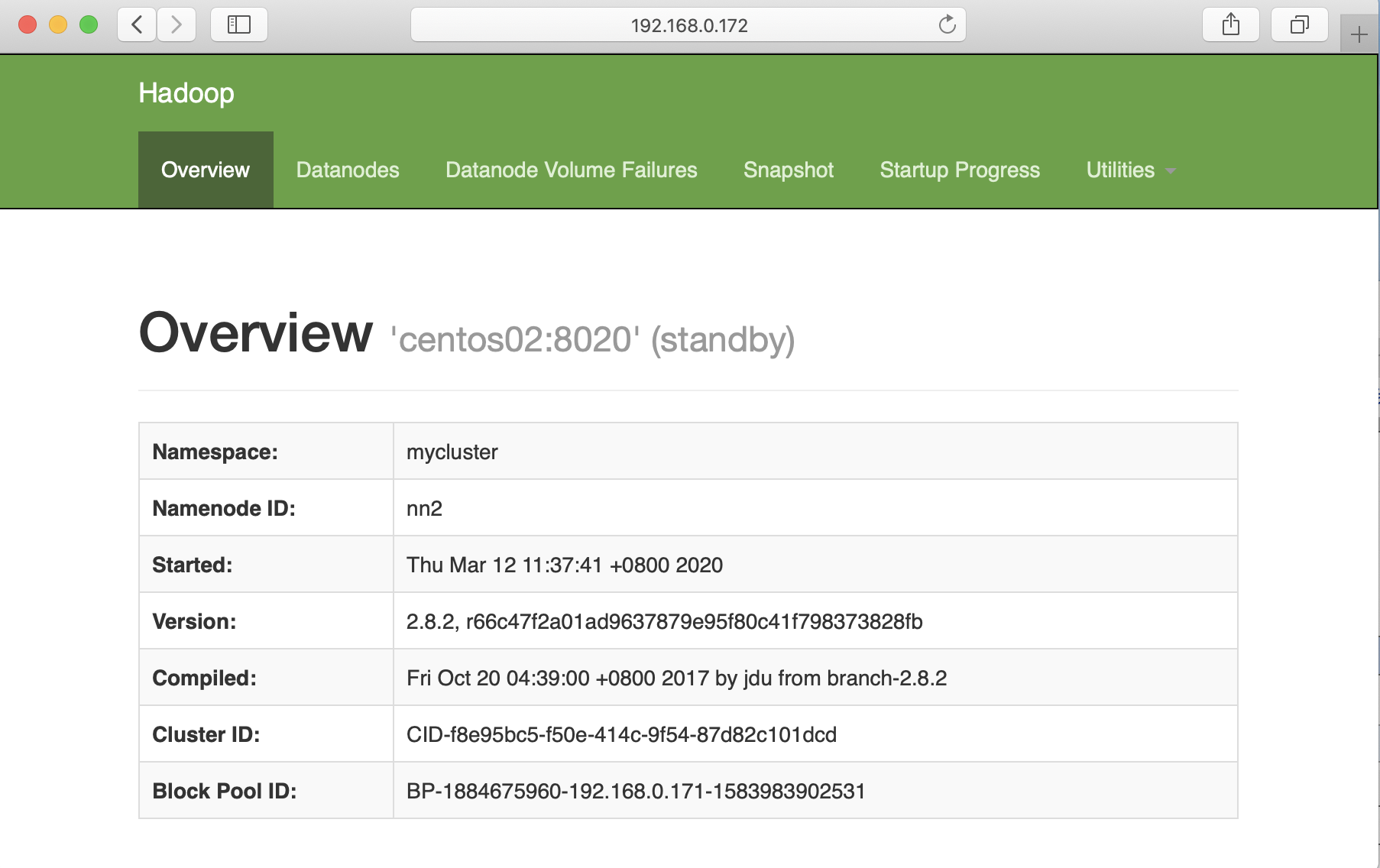

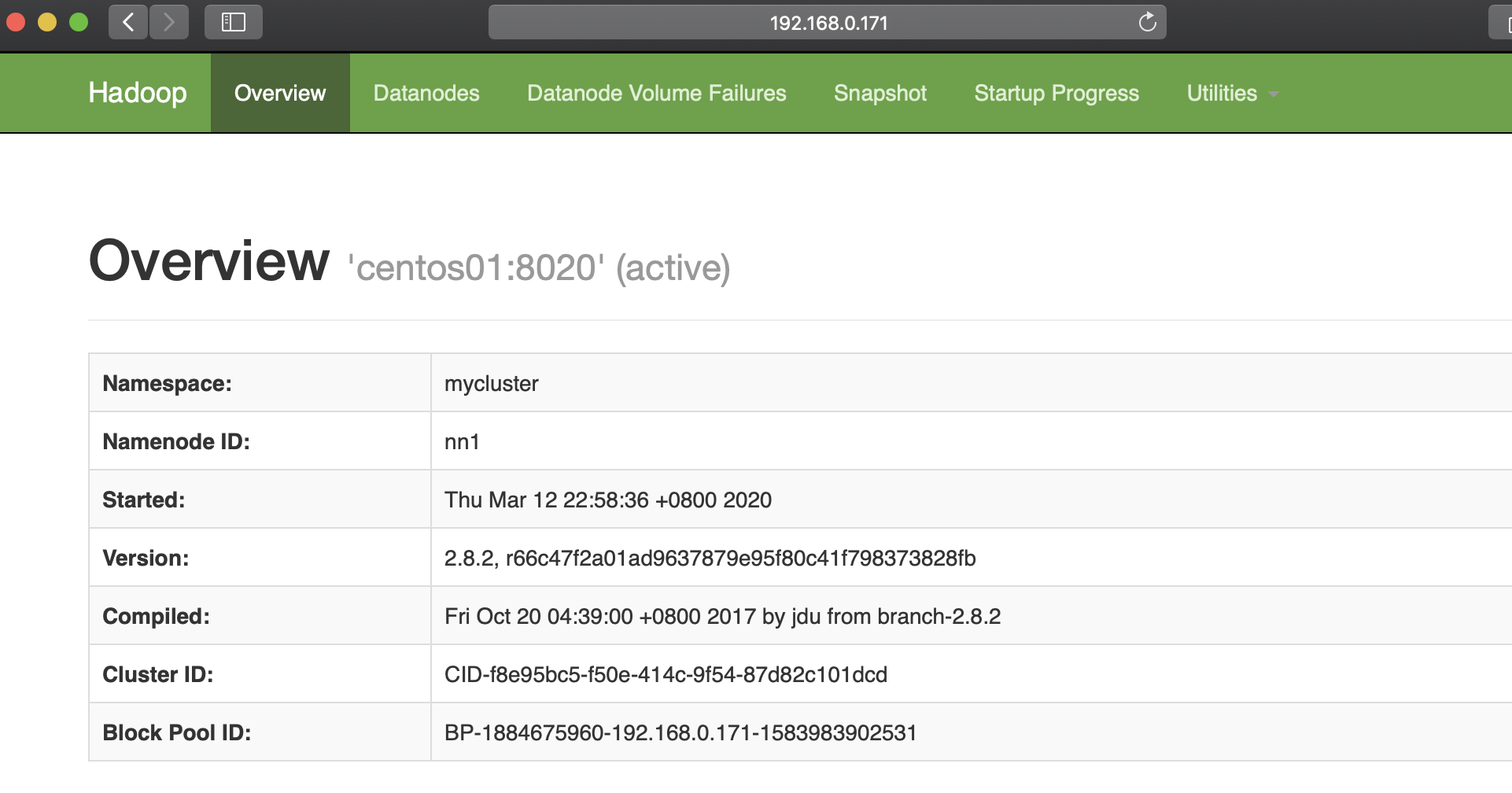

Once both are running, open http://192.168.0.171:50070 in a browser to check NameNode1's state,

and http://192.168.0.172:50070 to check NameNode2's state.

Both NameNodes are in the standby state.

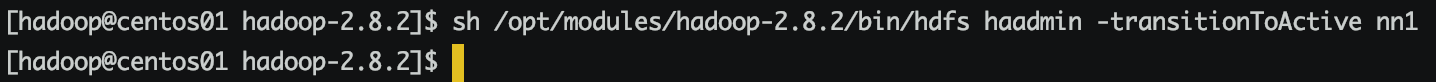

6. Set NameNode1 to Active

On centos01, switch NameNode1 to the active state:

sh /opt/modules/hadoop-2.8.2/bin/hdfs haadmin -transitionToActive nn1

Refresh http://192.168.0.171:50070 in the browser to check NameNode1's state.

It is now Active.

The DataNodes have not been started yet at this point.

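7. Restart HDFS

From the Hadoop installation directory on centos01, stop HDFS:

sh sbin/stop-dfs.sh

Then start it again:

sh sbin/start-dfs.sh

8. Set NameNode1 to Active again

After the restart, the NameNode, DataNode, and other processes are running, but NameNode1 has to be switched to Active again:

sh /opt/modules/hadoop-2.8.2/bin/hdfs haadmin -transitionToActive nn1

Check the state from the command line:

bin/hdfs haadmin -getServiceState nn1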

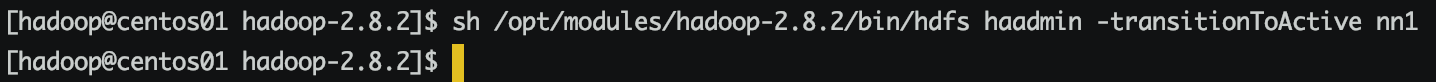

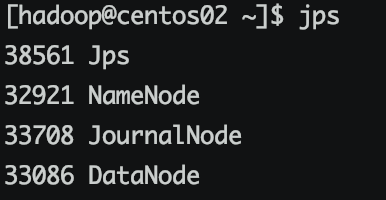

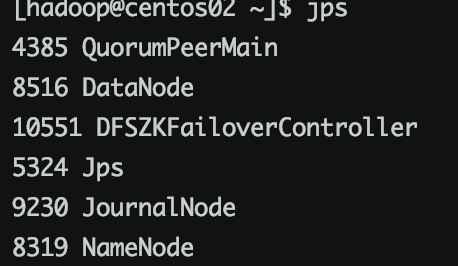

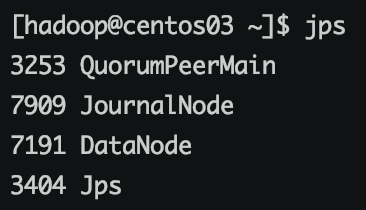
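Checking each NameNode by hand gets tedious, so the state query can be wrapped in a small helper. The sketch below assumes hdfs is on the PATH. The function names nn_states and exactly_one_active are made up for illustration, and the label "unreachable" is an assumption used whenever the query fails.

```shell
# nn_states ID...: print "<id> <state>" for each NameNode ID, using
# `hdfs haadmin -getServiceState`. A failed query is shown as "unreachable".
nn_states() {
    for id in "$@"; do
        state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || state=unreachable
        echo "$id $state"
    done
}

# exactly_one_active: read "<id> <state>" lines on stdin and succeed
# only if exactly one of them reports the active state.
exactly_one_active() {
    [ "$(awk '$2 == "active" { n++ } END { print n + 0 }')" -eq 1 ]
}
```

For example, `nn_states nn1 nn2` lists both states, and `nn_states nn1 nn2 | exactly_one_active || echo "check the cluster"` flags the case where both NameNodes are standby.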

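9. Check the processes on each node

Run jps on every node to list its Hadoop processes:

jps

10. Test HDFS

On centos01, kill the NameNode process (kill -9 35396 in this run; use the PID that jps reports), then manually activate NameNode2 on centos02 as in step 6.

At this stage, a failure still requires a manual switch.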
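The PID 35396 is specific to one run, so it helps to look it up instead of typing it. A minimal sketch, assuming jps is on the PATH and prints one "<pid> <main class>" pair per line; the function name namenode_pid is hypothetical.

```shell
# namenode_pid: print the PID of the local NameNode as reported by jps.
# Fails (non-zero exit status) when no NameNode is running on this host.
namenode_pid() {
    # Exact match on the class name, so SecondaryNameNode is not picked up.
    jps | awk '$2 == "NameNode" { print $1; found = 1 } END { exit !found }'
}
```

The failure test then becomes `pid=$(namenode_pid) && kill -9 "$pid"` on centos01.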

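IV. Automatic failover with ZooKeeper (ZooKeeper ensemble and the ZKFailoverController (ZKFC) process)

ZooKeeper's role here is failure detection and election of the active NameNode.

1. Enable automatic failover

On centos01, edit hdfs-site.xml and add the following:

<!-- Enable automatic failover; mycluster is the user-defined nameservice ID -->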

<property>

<name>dfs.ha.automatic-failover.enabled.mycluster</name>

<value>true</value>

</property>

The complete configuration:

<configuration>
<property>
<name>dfs.replication</name>
<value>2</value>
</property>

<!-- mycluster is a user-defined nameservice ID; the settings below refer to it -->
<property>
<name>dfs.nameservices</name>
<value>mycluster</value>
</property>
<!-- Identifiers of the two NameNodes -->
<property>
<name>dfs.ha.namenodes.mycluster</name>
<value>nn1,nn2</value>
</property>
<!-- Host and RPC port of each NameNode -->
<property>
<name>dfs.namenode.rpc-address.mycluster.nn1</name>
<value>centos01:8020</value>
</property>
<property>
<name>dfs.namenode.rpc-address.mycluster.nn2</name>
<value>centos02:8020</value>
</property>
<!-- Web UI address of each NameNode -->
<property>
<name>dfs.namenode.http-address.mycluster.nn1</name>
<value>centos01:50070</value>
</property>
<property>
<name>dfs.namenode.http-address.mycluster.nn2</name>
<value>centos02:50070</value>
</property>
<!-- URL of the JournalNode group -->
<property>
<name>dfs.namenode.shared.edits.dir</name>
<value>qjournal://centos01:8485;centos02:8485;centos03:8485/mycluster</value>
</property>
<!-- Directory where each JournalNode stores edits and state -->
<property>
<name>dfs.journalnode.edits.dir</name>
<value>/opt/modules/hadoop-2.8.2/tmp/dfs/jn</value>
</property>
<!-- Class that clients use to locate the active NameNode -->
<property>
<name>dfs.client.failover.proxy.provider.mycluster</name>
<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<!-- Fencing method, which prevents split-brain in the HA cluster -->
<property>
<name>dfs.ha.fencing.methods</name>
<value>sshfence</value>
</property>
<!-- Private key used for the SSH connection above -->
<property>
<name>dfs.ha.fencing.ssh.private-key-files</name>
<!-- hadoop is the current user name -->
<value>/home/hadoop/.ssh/id_rsa</value>
</property>

<!-- Enable automatic failover; mycluster is the user-defined nameservice ID -->
<property>
<name>dfs.ha.automatic-failover.enabled.mycluster</name>
<value>true</value>
</property>

<!-- Connection timeout for the sshfence fencing method -->
<property>
<name>dfs.ha.fencing.ssh.connect-timeout</name>
<value>30000</value>
</property>

<property>
<name>ha.failover-controller.cli-check.rpc-timeout.ms</name>
<value>60000</value>
</property>

<property>
<name>dfs.permissions.enabled</name>
<value>false</value>
</property>
<property>
<name>dfs.namenode.name.dir</name>
<value>file:/opt/modules/hadoop-2.8.2/tmp/dfs/name</value>
</property>
<property>
<name>dfs.datanode.data.dir</name>
<value>file:/opt/modules/hadoop-2.8.2/tmp/dfs/data</value>
</property>
</configuration>

2. Specify the ZooKeeper ensemble

On centos01, edit core-site.xml and add the following:

<!-- ZooKeeper ensemble hosts and ports -->

<property>

<name>ha.zookeeper.quorum</name>

<value>centos01:2181,centos02:2181,centos03:2181</value>

</property>

The complete configuration:

<configuration>
<property>
<name>fs.defaultFS</name>
<!-- <value>hdfs://centos01:9000</value> -->
<value>hdfs://mycluster</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/opt/modules/hadoop-2.8.2/tmp</value>
</property>
<!-- ZooKeeper ensemble hosts and ports -->
<property>
<name>ha.zookeeper.quorum</name>
<value>centos01:2181,centos02:2181,centos03:2181</value>
</property>
<!-- Session timeout for Hadoop's connection to ZooKeeper -->
<property>
<name>ha.zookeeper.session-timeout.ms</name>
<value>1000</value>
<description>ms</description>
</property>
</configuration>

3. Sync the other nodes

Copy the modified hdfs-site.xml and core-site.xml to the other two nodes:

scp /opt/modules/hadoop-2.8.2/etc/hadoop/hdfs-site.xml hadoop@centos02:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/core-site.xml hadoop@centos02:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/hdfs-site.xml hadoop@centos03:/opt/modules/hadoop-2.8.2/etc/hadoop/

scp /opt/modules/hadoop-2.8.2/etc/hadoop/core-site.xml hadoop@centos03:/opt/modules/hadoop-2.8.2/etc/hadoop/

4. Stop the HDFS cluster

From the Hadoop installation directory on centos01, stop HDFS:

sh sbin/stop-dfs.sh

5. Start the ZooKeeper ensemble

Log in to each node and start ZooKeeper there:

sh /opt/modules/zookeeper-3.4.14/bin/zkServer.sh start

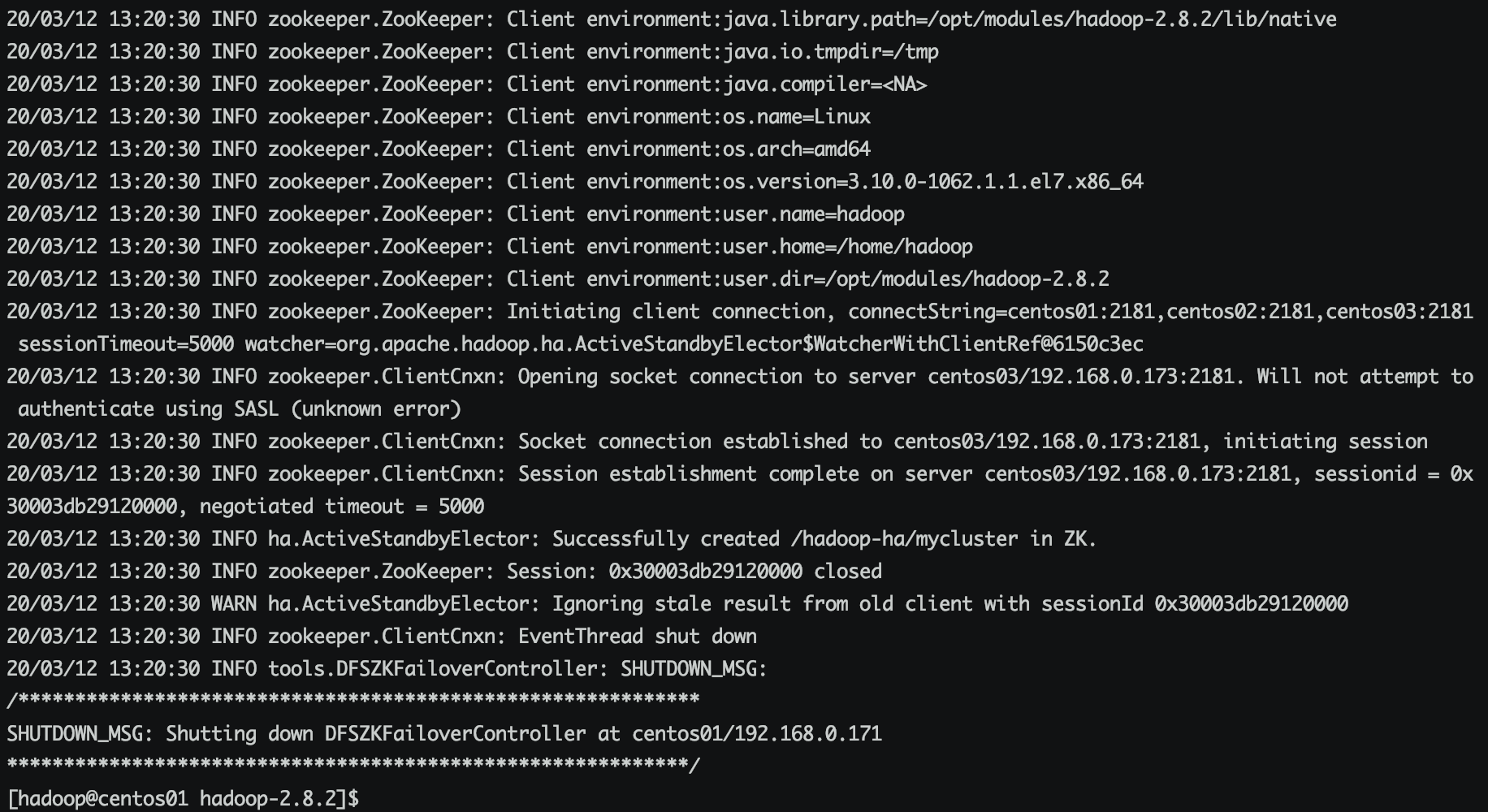
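Before initializing the HA state, it is worth confirming that every member of ha.zookeeper.quorum actually answers. The sketch below sends ZooKeeper's ruok command to each member and expects imok back. It assumes nc is installed and that four-letter-word commands are enabled on the servers; the function names are made up for illustration.

```shell
# quorum_members QUORUM: split a ha.zookeeper.quorum value
# ("host:port,host:port,...") into one "host port" pair per line.
quorum_members() {
    printf '%s\n' "$1" | tr ',' '\n' | awk -F: 'NF == 2 { print $1, $2 }'
}

# zk_ok HOST PORT: succeed if the server answers "imok" to "ruok".
# -w 2 gives up after two seconds without a reply.
zk_ok() {
    [ "$(echo ruok | nc -w 2 "$1" "$2" 2>/dev/null)" = imok ]
}

# check_quorum QUORUM: report each member as "ok" or "DOWN".
check_quorum() {
    quorum_members "$1" | while read -r host port; do
        if zk_ok "$host" "$port"; then
            echo "$host:$port ok"
        else
            echo "$host:$port DOWN"
        fi
    done
}
```

With the quorum from this article the call is `check_quorum "centos01:2181,centos02:2181,centos03:2181"`.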

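6. Initialize the HA state in ZooKeeper

From the Hadoop installation directory on centos01, run the following command to create the znode that stores the automatic-failover data: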

sh bin/hdfs zkfc -formatZK

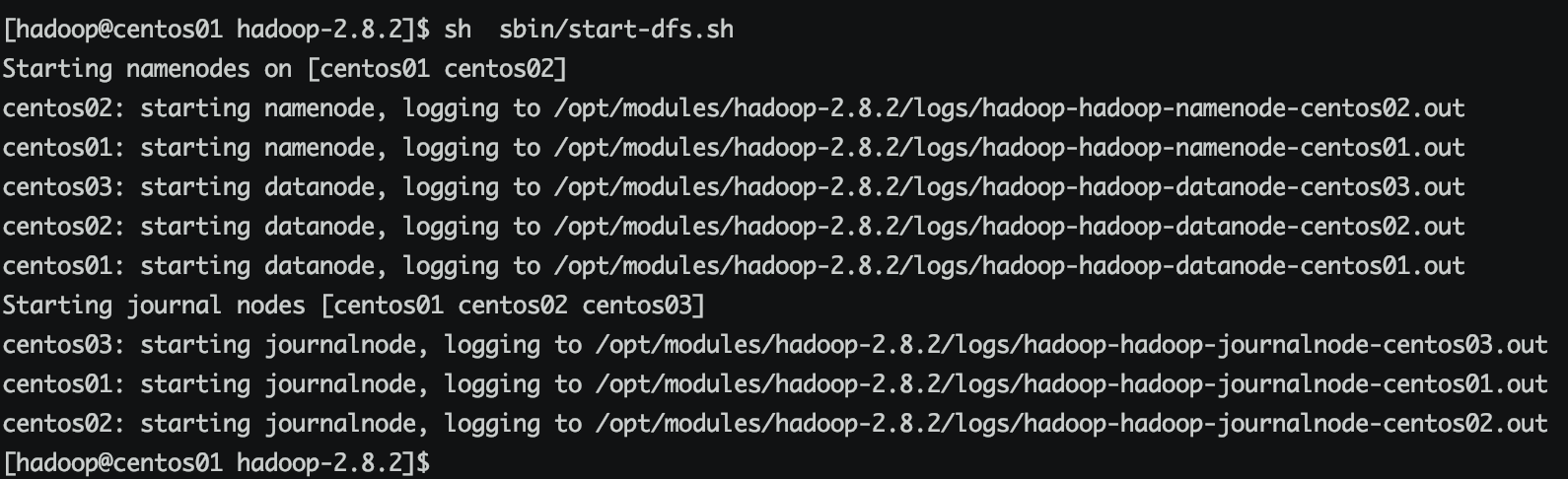

7. Start the HDFS cluster

From the Hadoop installation directory on centos01, start HDFS:

sh sbin/start-dfs.sh

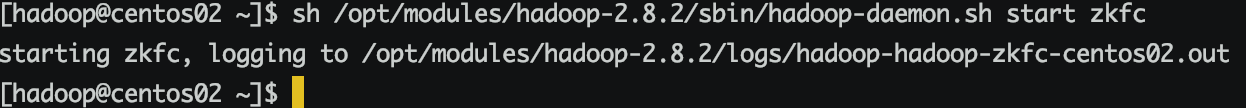

8. Start the ZKFC daemons

The ZKFC process has to be started manually on every node that runs a NameNode (here centos01 and centos02):

sh /opt/modules/hadoop-2.8.2/sbin/hadoop-daemon.sh start zkfc

To stop it:

sh /opt/modules/hadoop-2.8.2/sbin/hadoop-daemon.sh stop zkfc

Whichever NameNode has its ZKFC started first becomes Active.

9. Test automatic HDFS failover

Check the processes on each node:

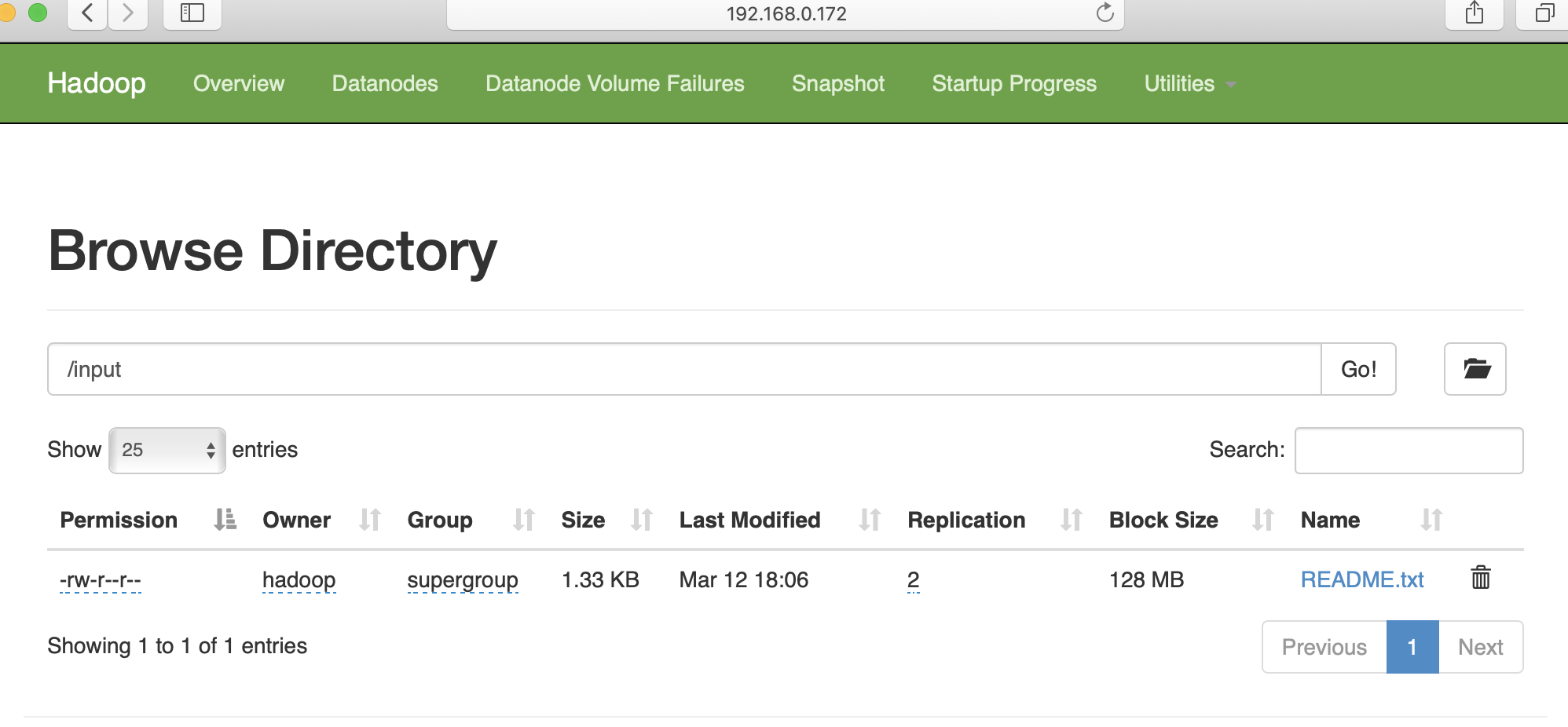

Upload a file as a test:

hdfs dfs -mkdir /input

hdfs dfs -put /opt/modules/hadoop-2.8.2/README.txt /input
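To make the upload test repeatable before and after a failover, the two commands can be combined with an existence check. This is a sketch assuming hdfs is on the PATH; the function name put_and_verify is hypothetical.

```shell
# put_and_verify SRC DIR: create DIR in HDFS, upload the local file SRC
# into it, and confirm that the uploaded file exists.
put_and_verify() {
    src=$1
    dir=${2%/}                                    # strip one trailing slash
    hdfs dfs -mkdir -p "$dir" || return 1         # -p: no error if DIR exists
    hdfs dfs -put -f "$src" "$dir/" || return 1   # -f: overwrite an earlier upload
    hdfs dfs -test -e "$dir/$(basename "$src")"   # exit status 0 if present
}
```

Running `put_and_verify /opt/modules/hadoop-2.8.2/README.txt /input && echo ok` once before killing a NameNode and once after gives a quick signal that the namespace is still being served.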


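Test by killing the NameNode on centos02.

Then check the web UI: centos01 should be in the active state.

⚠️ In this setup, after one NameNode was killed the other did not become active automatically.

Because dfs.ha.fencing.methods is set to sshfence, fencing relies on the fuser command. Install it as shown below on both NameNode hosts.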

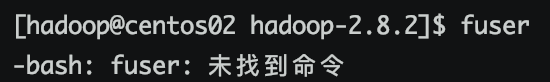

Running fuser reports that the command is not found:

fuser

Install it:

sudo yum install -y psmisc

[hadoop@centos02 hadoop-2.8.2]$ sudo yum install -y psmisc
[sudo] password for hadoop:
Loaded plugins: fastestmirror
Determining fastest mirrors
 * base: mirrors.njupt.edu.cn
 * extras: mirrors.163.com
 * updates: mirrors.163.com
base                                        | 3.6 kB  00:00:00
docker-ce-stable                            | 3.5 kB  00:00:00
extras                                      | 2.9 kB  00:00:00
updates                                     | 2.9 kB  00:00:00
(1/3): extras/7/x86_64/primary_db           | 164 kB  00:00:06
(2/3): docker-ce-stable/x86_64/primary_db   |  41 kB  00:00:07
(3/3): updates/7/x86_64/primary_db          | 6.7 MB  00:00:07
Resolving Dependencies
--> Running transaction check
---> Package psmisc.x86_64 0:22.20-16.el7 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

=================================================================================================================================
 Package          Arch          Version          Repository          Size
=================================================================================================================================
Installing:
 psmisc           x86_64        22.20-16.el7     base                141 k

Transaction Summary
=================================================================================================================================
Install  1 Package

Total download size: 141 k
Installed size: 475 k
Downloading packages:
psmisc-22.20-16.el7.x86_64.rpm              | 141 kB  00:00:06
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : psmisc-22.20-16.el7.x86_64   1/1
  Verifying  : psmisc-22.20-16.el7.x86_64   1/1

Installed:
  psmisc.x86_64 0:22.20-16.el7

Complete!
[hadoop@centos02 hadoop-2.8.2]$ fuser
No process specification given
Usage: fuser [-fMuvw] [-a|-s] [-4|-6] [-c|-m|-n SPACE] [-k [-i] [-SIGNAL]] NAME...
       fuser -l
       fuser -V
Show which processes use the named files, sockets, or filesystems.

  -a,--all              display unused files too
  -i,--interactive      ask before killing (ignored without -k)
  -k,--kill             kill processes accessing the named file
  -l,--list-signals     list available signal names
  -m,--mount            show all processes using the named filesystems or block device
  -M,--ismountpoint     fulfill request only if NAME is a mount point
  -n,--namespace SPACE  search in this name space (file, udp, or tcp)
  -s,--silent           silent operation
  -SIGNAL               send this signal instead of SIGKILL
  -u,--user             display user IDs
  -v,--verbose          verbose output
  -w,--writeonly        kill only processes with write access
  -V,--version          display version information
  -4,--ipv4             search IPv4 sockets only
  -6,--ipv6             search IPv6 sockets only
  -                     reset options

  udp/tcp names: [local_port][,[rmt_host][,[rmt_port]]]
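Since a missing fuser only shows up when a failover is actually attempted, a quick pre-flight check on both NameNode hosts can save a debugging session. A minimal sketch; the function names has_cmd and fencing_ready are assumptions for illustration.

```shell
# has_cmd NAME: succeed if NAME resolves to a command in the current PATH.
has_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# fencing_ready: sshfence needs fuser on the host being fenced.
# Print where fuser was found, or an install hint and fail if it is missing.
fencing_ready() {
    if has_cmd fuser; then
        echo "fuser found: $(command -v fuser)"
    else
        echo "fuser missing; install it with: sudo yum install -y psmisc" >&2
        return 1
    fi
}
```

Run fencing_ready on centos01 and centos02 before testing failover.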