#Building Spark 2.2.0 from source

#Components: mvn-3.3.9 jdk-1.8

#wget http://mirror.bit.edu.cn/apache/spark/spark-2.2.0/spark-2.2.0.tgz ---download the source (for Hive on Spark, Hive 2.1.1 pairs with Spark 1.6.0)

#tar zxvf spark-2.2.0.tgz ---extract

#cd spark-2.2.0/dev

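##Edit make-distribution.sh so that MVN points to $M2_HOME/bin/mvn ---check pom.xml for the required Maven version and install it

##cd .. ---return to spark-2.2.0

#./dev/change-scala-version.sh 2.11 ---change the Scala version (not needed for versions below 2.11)

#./dev/make-distribution.sh --name "hadoop2-without-hive" --tgz "-Pyarn,hadoop-provided,hadoop-2.7,parquet-provided" ---the tarball is generated in the source root directory

##The pre-built download runs on Scala 2.10.x. The source also supports 2.11.x, but you must add -Dscala-2.11 when compiling, and Spark does not yet support its JDBC component for Scala 2.11, so stay on 2.10.x if you need that functionality.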

##export MAVEN_OPTS="-Xmx2g -XX:MaxPermSize=512M -XX:ReservedCodeCacheSize=512m"

##./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.3 -DskipTests clean package ---output goes to the dist directory

##Reference: http://spark.apache.org/docs/latest/building-spark.html

./dev/make-distribution.sh --name custom-spark --pip --r --tgz -Psparkr -Phadoop-2.7 -Phive -Phive-thriftserver -Pmesos -Pyarn

##With Hive 1.2.1 support

./build/mvn -Pyarn -Phive -Phive-thriftserver -DskipTests clean package

##With scala 2.10 support

./dev/change-scala-version.sh 2.10

./build/mvn -Pyarn -Dscala-2.10 -DskipTests clean package

##with Mesos support

./build/mvn -Pmesos -DskipTests clean package

##build with sbt

./build/sbt package

##Apache Hadoop 2.6.X

./build/mvn -Pyarn -DskipTests clean package

##Apache Hadoop 2.7.X and later

./build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.3 -DskipTests clean package

##Reference: https://cwiki.apache.org//confluence/display/Hive/Hive+on+Spark:+Getting+Started

Follow instructions to install Spark:

YARN Mode: http://spark.apache.org/docs/latest/running-on-yarn.html

#spark-submit

$ ./bin/spark-submit --class path.to.your.Class --master yarn --deploy-mode cluster [options] <app jar> [app options]

For example:

$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster --driver-memory 4g --executor-memory 2g --executor-cores 1 --queue thequeue lib/spark-examples*.jar 10

#spark-shell

$ ./bin/spark-shell --master yarn --deploy-mode client

#spark-submit add jars

$ ./bin/spark-submit --class my.main.Class --master yarn --deploy-mode cluster --jars my-other-jar.jar,my-other-other-jar.jar my-main-jar.jar app_arg1 app_arg2

Standalone Mode: https://spark.apache.org/docs/latest/spark-standalone.html

#./bin/spark-shell --master spark://IP:PORT ---you can also pass --total-executor-cores <numCores> to control how many cores spark-shell uses on the cluster.

#./bin/spark-class org.apache.spark.deploy.Client kill <master url> <driver ID>

Hive on Spark supports Spark on YARN mode as default.

For the installation perform the following tasks:

- Install Spark (either download pre-built Spark, or build assembly from source).

- Install/build a compatible version. Hive root pom.xml's <spark.version> defines what version of Spark it was built/tested with.

- Install/build a compatible distribution. Each version of Spark has several distributions, corresponding with different versions of Hadoop.

- Once Spark is installed, find and keep note of the <spark-assembly-*.jar> location.

- Note that you must have a version of Spark which does not include the Hive jars. Meaning one which was not built with the Hive profile. If you will use Parquet tables, it's recommended to also enable the "parquet-provided" profile. Otherwise there could be conflicts in Parquet dependency. To remove Hive jars from the installation, simply use the following command under your Spark repository:


Prior to Spark 2.0.0:

./make-distribution.sh --name "hadoop2-without-hive" --tgz "-Pyarn,hadoop-provided,hadoop-2.4,parquet-provided"

Since Spark 2.0.0:

./dev/make-distribution.sh --name "hadoop2-without-hive" --tgz "-Pyarn,hadoop-provided,hadoop-2.7,parquet-provided"

- To add the Spark dependency to Hive:

  - Prior to Hive 2.2.0, link the spark-assembly jar to HIVE_HOME/lib.

  - Since Hive 2.2.0, Hive on Spark runs with Spark 2.0.0 and above, which doesn't have an assembly jar.

    - To run with YARN mode (either yarn-client or yarn-cluster), link the following jars to HIVE_HOME/lib.

      - scala-library

      - spark-core

      - spark-network-common

    - To run with LOCAL mode (for debugging only), link the following jars in addition to those above to HIVE_HOME/lib.

      - chill-java chill jackson-module-paranamer jackson-module-scala jersey-container-servlet-core

      - jersey-server json4s-ast kryo-shaded minlog scala-xml spark-launcher

      - spark-network-shuffle spark-unsafe xbean-asm5-shaded
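The YARN-mode linking step can be scripted. The following is a minimal sketch rather than anything from the Hive documentation: the function name `link_spark_jars` is hypothetical, and it assumes the Spark jars live in one directory under file names that begin with the three prefixes listed for YARN mode.

```shell
# link_spark_jars <spark_jars_dir> <hive_lib_dir>
# Symlinks the jars needed for Hive on Spark in YARN mode into the Hive lib directory.
# Pass absolute paths so that the symlinks resolve from inside <hive_lib_dir>.
link_spark_jars() {
  local spark_jars="$1" hive_lib="$2" prefix jar
  for prefix in scala-library spark-core spark-network-common; do
    for jar in "$spark_jars"/"$prefix"*.jar; do
      # When nothing matches, the glob stays unexpanded; report it and move on.
      [ -e "$jar" ] || { echo "missing jar for prefix: $prefix" >&2; continue; }
      ln -sf "$jar" "$hive_lib/"
    done
  done
}

# Example call, assuming SPARK_HOME and HIVE_HOME are set as in /etc/profile:
# link_spark_jars "$SPARK_HOME/jars" "$HIVE_HOME/lib"
```

Symlinks are used instead of copies so that the Hive side follows the Spark installation when it is upgraded in place; LOCAL mode would need the additional prefixes from the second list.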

- Configure Hive execution engine to use Spark

set hive.execution.engine=spark;

- Configure Spark-application configs for Hive. See: http://spark.apache.org/docs/latest/configuration.html. This can be done either by adding a file "spark-defaults.conf" with these properties to the Hive classpath, or by setting them on Hive configuration (hive-site.xml). For instance:

set spark.master=<Spark Master URL>

set spark.eventLog.enabled=true;

set spark.eventLog.dir=<Spark event log folder (must exist)>

set spark.executor.memory=512m;

set spark.serializer=org.apache.spark.serializer.KryoSerializer;

Configuration property details

- spark.executor.memory: Amount of memory to use per executor process.

- spark.executor.cores: Number of cores per executor.

- spark.yarn.executor.memoryOverhead: The amount of off heap memory (in megabytes) to be allocated per executor, when running Spark on Yarn. This is memory that accounts for things like VM overheads, interned strings, other native overheads, etc. In addition to the executor's memory, the container in which the executor is launched needs some extra memory for system processes, and this is what this overhead is for.

- spark.executor.instances: The number of executors assigned to each application.

- spark.driver.memory: The amount of memory assigned to the Remote Spark Context (RSC). We recommend 4GB.

- spark.yarn.driver.memoryOverhead: We recommend 400 (MB).

- Allow Yarn to cache necessary spark dependency jars on nodes so that it does not need to be distributed each time when an application runs.

  - Prior to Hive 2.2.0, upload spark-assembly jar to hdfs file (for example: hdfs://xxxx:8020/spark-assembly.jar) and add following in hive-site.xml

<property><name>spark.yarn.jar</name><value>hdfs://xxxx:8020/spark-assembly.jar</value></property>

  - Since Hive 2.2.0, upload all jars in $SPARK_HOME/jars to hdfs folder (for example: hdfs://xxxx:8020/spark-jars) and add following in hive-site.xml

<property><name>spark.yarn.jars</name><value>hdfs://xxxx:8020/spark-jars/*</value></property>
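For the Hive 2.2.0 and later case, the upload could look like the sketch below. The function name `upload_spark_jars` is hypothetical and the NameNode address is the same placeholder used in the text; `hdfs dfs -mkdir -p` and `hdfs dfs -put -f` are standard HDFS shell commands.

```shell
# upload_spark_jars <spark_home> <hdfs_dir>
# Copies every jar under <spark_home>/jars into <hdfs_dir>, overwriting older copies.
upload_spark_jars() {
  local spark_home="$1" target="$2"
  hdfs dfs -mkdir -p "$target" &&
    hdfs dfs -put -f "$spark_home"/jars/*.jar "$target"/
}

# Example call (replace xxxx:8020 with your NameNode address):
# upload_spark_jars "$SPARK_HOME" hdfs://xxxx:8020/spark-jars
```

The target directory passed here should be the one that `spark.yarn.jars` points at, with the trailing `/*` added only in hive-site.xml.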

Configuring Spark

Setting executor memory size is more complicated than simply setting it to be as large as possible. There are several things that need to be taken into consideration:

- More executor memory means it can enable mapjoin optimization for more queries.

- More executor memory, on the other hand, becomes unwieldy from GC perspective.

- Some experiments show that the HDFS client doesn't handle concurrent writers well, so it may face race conditions if there are too many executor cores.

The following settings need to be tuned for the cluster; these may also apply to submission of Spark jobs outside of Hive on Spark:

| Property | Recommendation |
|---|---|
| spark.executor.cores | Between 5 and 7; see the tuning details section |
| spark.executor.memory | yarn.nodemanager.resource.memory-mb * (spark.executor.cores / yarn.nodemanager.resource.cpu-vcores) |
| spark.yarn.executor.memoryOverhead | 15-20% of spark.executor.memory |
| spark.executor.instances | Depends on spark.executor.memory + spark.yarn.executor.memoryOverhead; see the tuning details section. |
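As a worked example of the two formulas in the table, the sketch below reads them literally and evaluates them with shell integer arithmetic. The function names and the node size (65536 MB and 24 vcores given to YARN, 6 cores per executor) are hypothetical values chosen for illustration, not recommendations.

```shell
# executor_memory_mb <yarn.nodemanager.resource.memory-mb> <spark.executor.cores> <yarn.nodemanager.resource.cpu-vcores>
executor_memory_mb() {
  # Multiply before dividing so integer arithmetic does not truncate cores/vcores to 0.
  echo $(( $1 * $2 / $3 ))
}

# memory_overhead_mb <spark.executor.memory in MB> <percent, 15 to 20 per the table>
memory_overhead_mb() {
  echo $(( $1 * $2 / 100 ))
}

# Hypothetical node: 65536 MB and 24 vcores for YARN, 6 cores per executor.
mem=$(executor_memory_mb 65536 6 24)
overhead=$(memory_overhead_mb "$mem" 15)
echo "spark.executor.memory=${mem}m"
echo "spark.yarn.executor.memoryOverhead=${overhead}"
```

With these inputs the script prints `spark.executor.memory=16384m` and `spark.yarn.executor.memoryOverhead=2457`. The sum of the two values is what each YARN container has to accommodate, which is why spark.executor.instances depends on both.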

#Reference: http://spark.apache.org/docs/latest/configuration.html

##Aliyun Maven mirror: settings.xml

<mirror>

<id>nexus-aliyun</id>

<mirrorOf>*</mirrorOf>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</mirror>

pom.xml

<repositories>

<repository>

<id>nexus-aliyun</id>

<name>Nexus aliyun</name>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

</repository>

</repositories>

<!--<url>https://repo1.maven.org/maven2</url>--> <url>http://maven.aliyun.com/nexus/content/groups/public/</url>

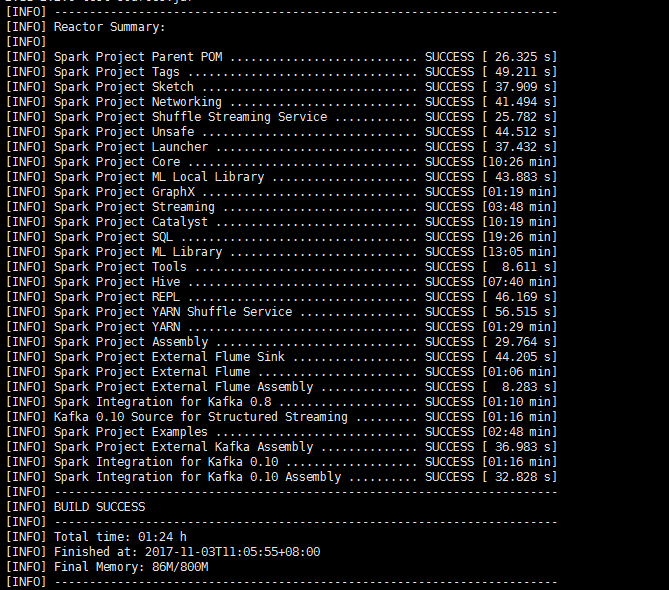

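#The build takes a little over an hour. Keep the machine online, since downloading and compiling the packages takes time. If you hit a problem, investigate it and rerun the commands above.

#Download Scala 2.10.5 from the official site, extract it, and rename the directory to scala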

#vim /etc/profile

export JAVA_HOME=/usr/app/jdk1.8.0

export SCALA_HOME=/usr/app/scala

export HADOOP_HOME=/usr/app/hadoop

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib"

export HIVE_HOME=/usr/app/hive

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export HBASE_HOME=/usr/app/hbase

export HBASE_CONF_DIR=${HBASE_HOME}/conf

export SPARK_HOME=/usr/app/spark

export CLASSPATH=.:${JAVA_HOME}/lib:${SCALA_HOME}/lib:${HIVE_HOME}/lib:${HBASE_HOME}/lib:$CLASSPATH

export PATH=.:${JAVA_HOME}/bin:${SCALA_HOME}/bin:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:${SPARK_HOME}/bin:${HIVE_HOME}/bin:${HBASE_HOME}/bin:$PATH

#spark-env.sh

export JAVA_HOME=/usr/app/jdk1.8.0

export SCALA_HOME=/usr/app/scala

export HADOOP_HOME=/usr/app/hadoop

export HADOOP_CONF_DIR=/usr/app/hadoop/etc/hadoop

export YARN_CONF_DIR=/usr/app/hadoop/etc/hadoop

#export SPARK_LAUNCH_WITH_SCALA=0

#export SPARK_WORKER_MEMORY=512m

#export SPARK_DRIVER_MEMORY=512m

export SPARK_EXECUTOR_MEMORY=512M

export SPARK_MASTER_IP=192.168.66.66

export SPARK_HOME=/usr/app/spark

export SPARK_LIBRARY_PATH=/usr/app/spark/lib

export SPARK_MASTER_WEBUI_PORT=18080

export SPARK_WORKER_DIR=/usr/app/spark/work

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_PORT=7078

export SPARK_LOG_DIR=/usr/app/spark/logs

export SPARK_PID_DIR='/usr/app/spark/run'

export SPARK_DIST_CLASSPATH=$(/usr/app/hadoop/bin/hadoop classpath)

#spark-defaults.conf

spark.master spark://192.168.66.66:7077

#spark.home /usr/app/spark

spark.eventLog.enabled true

spark.eventLog.dir hdfs://xinfang:9000/spark-log

spark.serializer org.apache.spark.serializer.KryoSerializer

#spark.executor.memory 512m

spark.driver.memory 700m

spark.executor.extraJavaOptions -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"

#spark.driver.extraJavaOptions -XX:PermSize=128M -XX:MaxPermSize=256M

spark.yarn.jar hdfs://192.168.66.66:9000/spark-assembly-1.6.0-hadoop2.4.0.jar

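spark.master sets the Spark run mode; it can be yarn-client, yarn-cluster, or spark://xinfang:7077

spark.home sets the SPARK_HOME path

spark.eventLog.enabled must be set to true

spark.eventLog.dir sets the event log path, which lives in HDFS on the master node; the port must match the port HDFS is configured with (8020 by default), otherwise an error is reported

spark.executor.memory and spark.driver.memory set the executor and driver memory, for example 512m or 1g; too small and jobs will not run, too large and other services are affected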
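The port requirement for spark.eventLog.dir can be checked before starting Spark. This is a minimal sketch with hypothetical helper names (`hdfs_uri_port`, `same_hdfs_port`); the `default_fs` value below is an assumed example, and on a real cluster it could be read with `hdfs getconf -confKey fs.defaultFS`.

```shell
# hdfs_uri_port <uri>: print the port of an hdfs://host:port/... URI, or nothing if no port is given.
hdfs_uri_port() {
  printf '%s\n' "$1" | sed -n 's|^hdfs://[^/:]*:\([0-9][0-9]*\).*|\1|p'
}

# same_hdfs_port <uri1> <uri2>: succeed when both URIs carry the same port.
# Two URIs without an explicit port also compare as equal.
same_hdfs_port() {
  [ "$(hdfs_uri_port "$1")" = "$(hdfs_uri_port "$2")" ]
}

event_log_dir="hdfs://xinfang:9000/spark-log"  # spark.eventLog.dir from spark-defaults.conf
default_fs="hdfs://xinfang:9000"               # assumed value of fs.defaultFS

if same_hdfs_port "$event_log_dir" "$default_fs"; then
  echo "ports match"
else
  echo "port mismatch: $event_log_dir vs $default_fs"
fi
```

Only the port is compared here; host names that resolve to the same machine (for example xinfang and 192.168.66.66) are not reconciled by this check.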

#Configure yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>8182</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>3.1</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

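Note: setting yarn.nodemanager.vmem-check-enabled to false skips the virtual-memory check. This is very useful when running inside a virtual machine, and later steps are less likely to fail with it set. On a physical machine with plenty of memory, you can remove this setting.

#Older versions: copy spark/lib/spark-assembly-*.jar to $HIVE_HOME/lib

#Newer versions: copy spark/jars/spark*.jar and scala*jar to $HIVE_HOME/lib

#hadoop fs -put /usr/app/spark/lib/spark-assembly-1.6.0-hadoop2.4.0.jar / ---upload the jar file ---this step is critical

#Configure hive-site.xml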

<configuration>

<property> <name>hive.metastore.schema.verification</name> <value>false</value> </property>

<property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://192.168.66.66:3306/hive?createDatabaseIfNotExist=true</value> </property>

<property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> </property>

<property> <name>javax.jdo.option.ConnectionUserName</name> <value>hive</value> </property>

<property> <name>javax.jdo.option.ConnectionPassword</name> <value>1</value> </property>

<!--<property> <name>hive.hwi.listen.host</name> <value>192.168.66.66</value> </property>

<property> <name>hive.hwi.listen.port</name> <value>9999</value> </property>

<property> <name>hive.hwi.war.file</name> <value>lib/hive-hwi-2.1.1.war</value> </property>-->

<property> <name>hive.metastore.warehouse.dir</name> <value>/usr/hive/warehouse</value> </property>

<property> <name>hive.exec.scratchdir</name> <value>/usr/hive/tmp</value> </property>

<property> <name>hive.querylog.location</name> <value>/usr/hive/log</value> </property>

<property> <name>hive.server2.thrift.port</name> <value>10000</value> </property>

<property> <name>hive.server2.thrift.bind.host</name> <value>192.168.66.66</value> </property>

<property> <name>hive.server2.webui.host</name> <value>192.168.66.66</value> </property>

<property> <name>hive.server2.webui.port</name> <value>10002</value> </property>

<property> <name>hive.server2.long.polling.timeout</name> <value>5000</value> </property>

<property> <name>hive.server2.enable.doAs</name> <value>true</value> </property>

<property> <name>datanucleus.autoCreateSchema</name> <value>false</value> </property>

<property> <name>datanucleus.fixedDatastore</name> <value>true</value> </property>

<!-- hive on mr-->

<!-- <property> <name>mapred.job.tracker</name> <value>http://192.168.66.66:9001</value> </property>

<property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> -->

<!--hive on spark or spark on yarn -->

<property> <name>hive.enable.spark.execution.engine</name> <value>true</value> </property>

<property> <name>hive.execution.engine</name> <value>spark</value> </property>

<property> <name>spark.home</name> <value>/usr/app/spark</value> </property>

<property> <name>spark.master</name> <value>yarn-client</value> </property>

<property> <name>spark.submit.deployMode</name> <value>client</value> </property>

<property> <name>spark.eventLog.enabled</name> <value>true</value> </property>

<!-- <property> <name>spark.yarn.jar</name> <value>hdfs://192.168.66.66:9000/spark-assembly-1.6.0-hadoop2.4.0.jar</value> </property>

<property> <name>spark.executor.cores</name> <value>1</value> </property>-->

<property> <name>spark.eventLog.dir</name> <value>hdfs://192.168.66.66:9000/spark-log</value> </property>

<property> <name>spark.serializer</name> <value>org.apache.spark.serializer.KryoSerializer</value> </property>

<property> <name>spark.executor.memory</name> <value>512m</value> </property>

<!-- <property> <name>spark.driver.memory</name> <value>512m</value> </property>-->

<property> <name>spark.executor.extraJavaOptions</name> <value>-XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"</value> </property>

</configuration>
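In a hive-site.xml of this length a misspelled property name is easy to overlook. As a hedged aid, the sketch below lists every `spark.*` property name found in the file so the names can be compared by eye against the Spark configuration reference. The function name `list_spark_props` is hypothetical, and because it is a plain text scan it also reports names that sit inside XML comments.

```shell
# list_spark_props <hive-site.xml>
# Prints each spark.* property name found in the file, one per line, sorted.
list_spark_props() {
  grep -o '<name>spark\.[^<]*</name>' "$1" | sed 's|</\{0,1\}name>||g' | sort
}

# Example call, assuming the file location used in this document:
# list_spark_props /usr/app/hive/conf/hive-site.xml
```

The scan works on both the one-line and the multi-line layout of the file, since `grep -o` emits each match separately.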

#Copy hive-site.xml to spark/conf (this step is critical)

#Create directories

hadoop fs -mkdir -p /spark-log

hadoop fs -chmod 777 /spark-log

mkdir -p /usr/app/spark/work /usr/app/spark/logs /usr/app/spark/run

mkdir -p /usr/app/hive/logs

chmod -R 755 /usr/app/hive /usr/app/scala /usr/app/spark

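#Other Hive reference configuration

1. Configure Hive 2.2.0 (Hadoop 2.7.2 must already be configured, as described in an earlier document)

#Download the binary package from the official site, extract it under /usr/app, and configure /etc/profile: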

export HIVE_HOME=/usr/app/hive

export PATH=$PATH:$HIVE_HOME/bin

#Configure hive/conf

#Add to hive-env.sh

#export HADOOP_HEAPSIZE=1024

export HADOOP_HOME=/usr/app/hadoop

export HIVE_CONF_DIR=/usr/app/hive/conf

export HIVE_AUX_JARS_PATH=/usr/app/hive/lib

#source /etc/profile to apply the changes immediately

#Create directories

hdfs dfs -mkdir -p /usr/hive/warehouse

hdfs dfs -mkdir -p /usr/hive/tmp

hdfs dfs -mkdir -p /usr/hive/log

hdfs dfs -chmod o+rwx /usr/hive/warehouse

hdfs dfs -chmod o+rwx /usr/hive/tmp

hdfs dfs -chmod o+rwx /usr/hive/log

#Configure the log directory

mkdir -p /usr/app/hive/logs

In conf/hive-log4j.properties, set:

hive.log.dir=/usr/app/hive/logs

2. Configure MySQL

#Install MySQL: yum -y install mysql-devel mysql-server

#Adjust /etc/my.cnf as needed; if you cannot find it, use locate my.cnf

#cp /usr/share/mysql/my-medium.cnf /etc/my.cnf

#Start the service: service mysqld start (or restart / stop)

#Grant MySQL privileges

#mysql>grant all privileges on *.* to root@'%' identified by '1' with grant option;

#mysql>grant all privileges on *.* to root@'localhost' identified by '1' with grant option;

#mysql>flush privileges;

#mysql>exit

3. Create the hive database in MySQL

#mysql -uroot -p

#Enter the password

#mysql>create database hive;

#mysql>alter database hive character set latin1;

#mysql>grant all privileges on hive.* to hive@'%' identified by '1';

#mysql>grant all privileges on *.* to hive@'localhost' identified by '1';

#mysql>flush privileges;

#exit

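4. Build the Hive WAR (web interface)

#Download and extract the hive2.1.1 src source, change to hive/hwi/web, run jar cvf hive-hwi-2.1.1.war ./* and copy the WAR to hive/lib

5. Modify the configuration

Add the following to Hadoop's core-site.xml: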

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

6. Initialize the database (this document uses MySQL)

#$HIVE_HOME/bin/schematool -initSchema -dbType mysql

7. Copy jars to hive/lib as your setup requires (the jars can be found and downloaded online)

#Copy hadoop/lib/hadoop-lzo-0.4.21-SNAPSHOT.jar to hive/lib

#Copy mysql-connector-java-5.1.34.jar to hive/lib

#Copy jasper-compiler-5.5.23.jar jasper-runtime-5.5.23.jar commons-el-5.5.23.jar to hive/lib

#Copy ant/lib/ant-1.9.4.jar ant-launcher.jar to hive/lib (if Ant is installed on the system, adjust for that Ant version)

#If startup reports duplicate logging jars, delete the duplicates

#Edit hive/bin/hive as needed (since Spark 2 the assembly is split into separate jars):

sparkAssemblyPath=`ls ${SPARK_HOME}/lib/spark-assembly-*.jar`

Change it to: sparkAssemblyPath=`ls ${SPARK_HOME}/jars/*.jar`

8. Start Hive

#Start Hadoop first

#hive --service metastore

#hive --service hiveserver2 #open http://192.168.66.66:10002 for the HiveServer2 web UI

#netstat -nl |grep 10000

#hive #open the Hive CLI

#hive --service hwi #open the Hive web page at http://192.168.66.66:9999/hwi/

#Hive on Spark / Spark on YARN test (using Hive 2.1.1 with Spark 1.6.0 as the example; Spark 1.6.0 must be rebuilt without Hive)

$ssh 192.168.66.66

$sh /usr/app/hadoop/sbin/start-all.sh ---start Hadoop

$sh /usr/app/spark/sbin/start-all.sh ---start Spark

#Hive on Spark => Spark on YARN mode

$hive ---open the Hive client

hive>set hive.execution.engine=spark;

hive>create database test;

hive>create table test.test(id int,name string);

hive>insert into test.test(id,name) values('1','china');

###hive>select count(*) from test.test;

Hive client

hive> select count(*) from test.test;

Query ID = root_20171106100849_3068b783-b729-4d7a-9353-ffc7e29b3685

Total jobs = 1

Launching Job 1 out of 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Spark Job = ef3b76c8-2438-4a73-bcca-2f6960c5a01e

Job hasn't been submitted after 61s. Aborting it. Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc. Please check YARN or Spark driver's logs for further information.

Status: SENT

10:10:21.625 [16caa78a-7bea-4435-85b7-f0b355dbeca1 main] ERROR org.apache.hadoop.hive.ql.exec.spark.status.SparkJobMonitor - Job hasn't been submitted after 61s. Aborting it. Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc. Please check YARN or Spark driver's logs for further information.

10:10:21.626 [16caa78a-7bea-4435-85b7-f0b355dbeca1 main] ERROR org.apache.hadoop.hive.ql.exec.spark.status.SparkJobMonitor - Status: SENT

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

10:10:22.019 [16caa78a-7bea-4435-85b7-f0b355dbeca1 main] ERROR org.apache.hadoop.hive.ql.Driver - FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

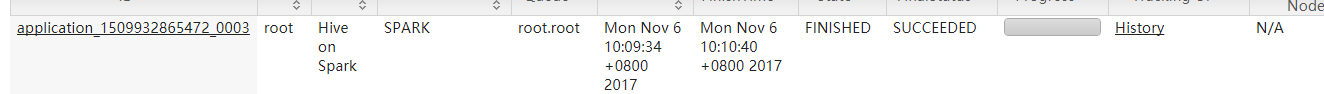

YARN web UI:

#Hive on Spark mode

#Change the hive-site.xml configuration:

<property> <name>spark.master</name> <value>yarn-client</value> </property>

to

<property> <name>spark.master</name> <value>spark://192.168.66.66:7077</value> </property>

#Change the spark-defaults.conf configuration:

change spark.master yarn-cluster to spark.master spark://192.168.66.66:7077

#Restart Spark

hive>select count(*) from test.test;

#Using the Hive client output, open http://192.168.66.66:18080 to check the Spark job status

Result: succeeded

Hive side:

Starting Spark Job = 1ce13e09-0745-4230-904f-5bcd5e90eebb

Job hasn't been submitted after 61s. Aborting it. Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc. Please check YARN or Spark driver's logs for further information.

10:18:10.765 [8c59fd25-3aac-445e-b34a-5f62e1729694 main] ERROR org.apache.hadoop.hive.ql.exec.spark.status.SparkJobMonitor - Job hasn't been submitted after 61s. Aborting it. Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc. Please check YARN or Spark driver's logs for further information.

Status: SENT

10:18:10.773 [8c59fd25-3aac-445e-b34a-5f62e1729694 main] ERROR org.apache.hadoop.hive.ql.exec.spark.status.SparkJobMonitor - Status: SENT

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

10:18:10.925 [8c59fd25-3aac-445e-b34a-5f62e1729694 main] ERROR org.apache.hadoop.hive.ql.Driver - FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

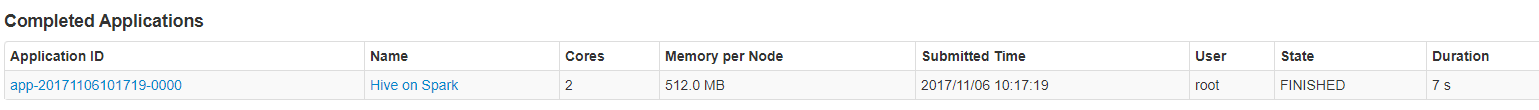

Spark web ui:

#Spark on YARN mode

$ ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode client --driver-memory 512m --executor-memory 512m --executor-cores 1 --queue thequeue lib/spark-examples-1.6.0-hadoop2.4.0.jar 10

Result: Pi is roughly 3.140388

YARN web UI:

#Spark standalone mode

Standalone mode: set spark.master in spark-defaults.conf to spark://192.168.66.66:7077, and pass --master spark://192.168.66.66:7077 when submitting

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://192.168.66.66:7077 --deploy-mode client lib/spark-examples-1.6.0-hadoop2.4.0.jar 10

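Result: Pi is roughly 3.14184

Note: with Hive on Spark, a Spark build that excludes Hive cannot run Spark SQL, because Spark SQL needs the Hive jars compiled in.

#hive --service metastore

#Download the package again, copy hive-site.xml to spark/conf, and add this configuration:

<property>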

<name>hive.metastore.uris</name>

<value>thrift://192.168.66.66:9083</value>

</property>