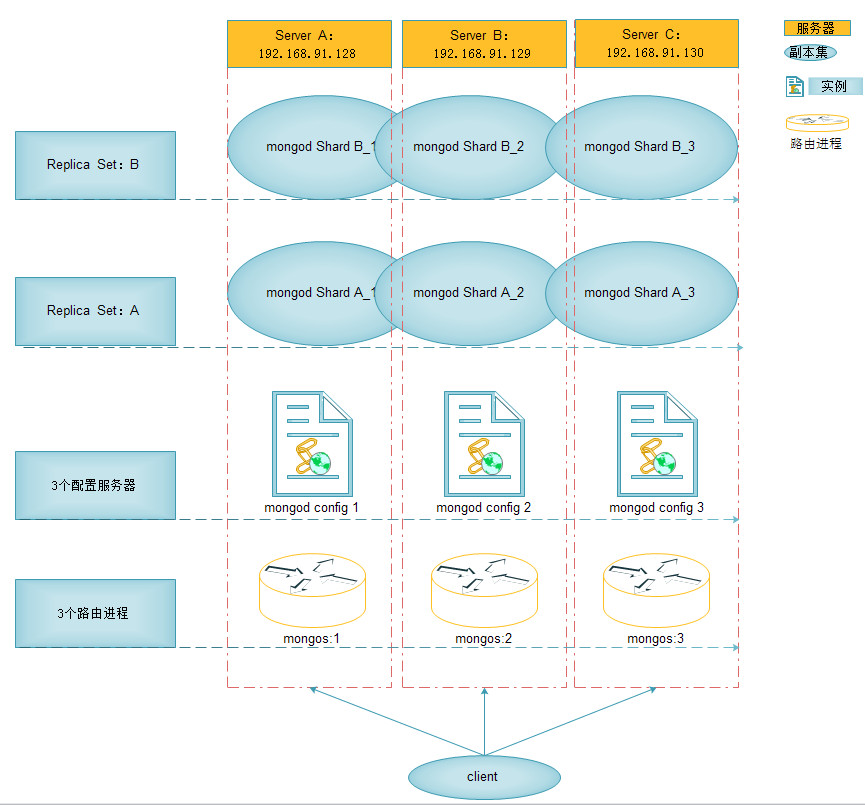

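MongoDB sharding solves the problems of storing massive amounts of data and scaling out dynamically, but on its own it cannot cope with a single point of failure. MongoDB replica sets solve the single-point-of-failure problem well, which leads to the high-availability Sharding + Replica Sets architecture.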

The architecture is shown in the diagram above.

The environment is configured as follows:

1: Shard servers: each shard is a replica set. This gives every data node a backup copy of its data, plus automatic failover and automatic recovery.

2: Config servers: 3 config servers are used to ensure the integrity of the cluster metadata.

3: Routing processes: 3 routing processes (mongos) spread the load and improve client access performance.

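4: 6 shard processes: Shard A_1, Shard A_2 and Shard A_3 form one replica set, Shard A (replica set name shard1). Shard B_1, Shard B_2 and Shard B_3 form another replica set, Shard B (replica set name shard2).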

| Server | IP | Processes and ports |
| --- | --- | --- |
| Server A | 192.168.91.128 | mongod Shard A_1: 27017; mongod Shard B_1: 27018; mongod config: 30000; mongos: 40000 |
| Server B | 192.168.91.129 | mongod Shard A_2: 27017; mongod Shard B_2: 27018; mongod config: 30000; mongos: 40000 |
| Server C | 192.168.91.130 | mongod Shard A_3: 27017; mongod Shard B_3: 27018; mongod config: 30000; mongos: 40000 |
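The table above can be written down as data, which makes the membership of each replica set explicit. The following is a minimal Node.js sketch and not part of MongoDB. The names `topology`, `processes`, `replicaSetMembers` and `addressesByRole` are illustrative assumptions. The replica set names shard1 and shard2 come from the `--replSet` options used in the steps below.

```javascript
// Hypothetical model of the deployment table (illustrative names, not a MongoDB API).
const topology = [
  { server: "Server A", ip: "192.168.91.128" },
  { server: "Server B", ip: "192.168.91.129" },
  { server: "Server C", ip: "192.168.91.130" },
];

// Every server runs the same four kinds of process on the same ports.
const processes = [
  { role: "shard", replSet: "shard1", port: 27017 }, // Shard A_1..A_3
  { role: "shard", replSet: "shard2", port: 27018 }, // Shard B_1..B_3
  { role: "config", port: 30000 },
  { role: "mongos", port: 40000 },
];

// "ip:port" of every member of one replica set (one member per server).
function replicaSetMembers(replSet) {
  const proc = processes.find((p) => p.replSet === replSet);
  if (!proc) throw new Error(`unknown replica set: ${replSet}`);
  return topology.map((s) => `${s.ip}:${proc.port}`);
}

// "ip:port" of every process with the given role, across all servers.
function addressesByRole(role) {
  const ports = processes.filter((p) => p.role === role).map((p) => p.port);
  return topology.flatMap((s) => ports.map((port) => `${s.ip}:${port}`));
}

console.log(replicaSetMembers("shard1"));
console.log(addressesByRole("shard").length); // 6 shard processes in total
```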

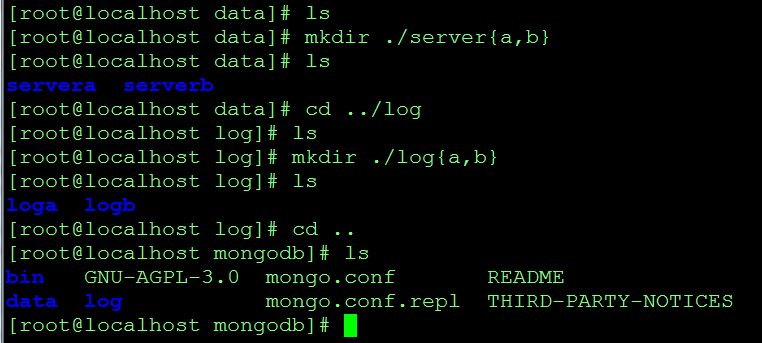

1: Start mongod Shard A_1 on Server A

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard1 --port 27017 --dbpath ./data/servera --logpath ./log/loga/sharda1 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 4838

child process started successfully, parent exiting

Start mongod Shard A_2 on Server B

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard1 --port 27017 --dbpath ./data/servera --logpath ./log/loga/shardb1 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 4540

child process started successfully, parent exiting

Start mongod Shard A_3 on Server C

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard1 --port 27017 --dbpath ./data/servera --logpath ./log/loga/shardc1 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 4505

child process started successfully, parent exiting
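The three commands above differ only in the log file name. The following is a hedged sketch: the hypothetical Node.js function `shardMemberArgs` assembles the same option list for one member. It only builds the argument array and does not start anything. Every option it emits appears in the commands above.

```javascript
// Hypothetical helper: build the mongod options used above for one shard member.
// It returns an argument array; nothing is executed here.
function shardMemberArgs({ replSet, port, dbpath, logpath }) {
  if (!replSet || !dbpath || !logpath) {
    throw new Error("replSet, dbpath and logpath are required");
  }
  return [
    "--shardsvr",          // this mongod is a shard member
    "--replSet", replSet,  // replica set name, e.g. shard1
    "--port", String(port),
    "--dbpath", dbpath,    // the directory must already exist
    "--logpath", logpath,  // --fork needs --logpath (or --syslog)
    "--fork",              // run in the background
  ];
}

const args = shardMemberArgs({
  replSet: "shard1",
  port: 27017,
  dbpath: "./data/servera",
  logpath: "./log/loga/sharda1",
});
console.log(["./bin/mongod", ...args].join(" "));
```

The array could be passed to Node's `child_process.spawn("./bin/mongod", args)`. This assumes the working directory is the MongoDB installation directory used above.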

Run the following on any one of the 3 servers:

[root@localhost mongodb]# ./bin/mongo

MongoDB shell version: 2.6.9

connecting to: test

> config={_id:"shard1",members:[

... {_id:0,host:"192.168.91.128:27017"},

... {_id:1,host:"192.168.91.129:27017"},

... {_id:2,host:"192.168.91.130:27017"}

... ]}

{ "_id" : "shard1", "members" : [ { "_id" : 0, "host" : "192.168.91.128:27017" }, { "_id" : 1, "host" : "192.168.91.129:27017" }, { "_id" : 2, "host" : "192.168.91.130:27017" } ] }

> rs.initiate(config)

{ "info" : "Config now saved locally. Should come online in about a minute.", "ok" : 1 }

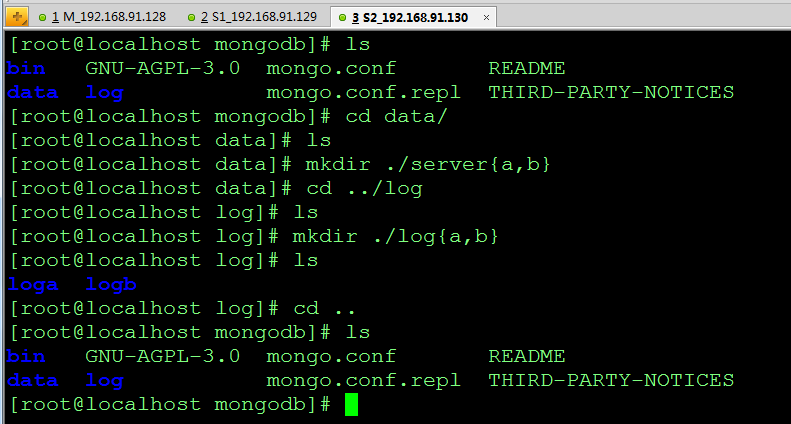

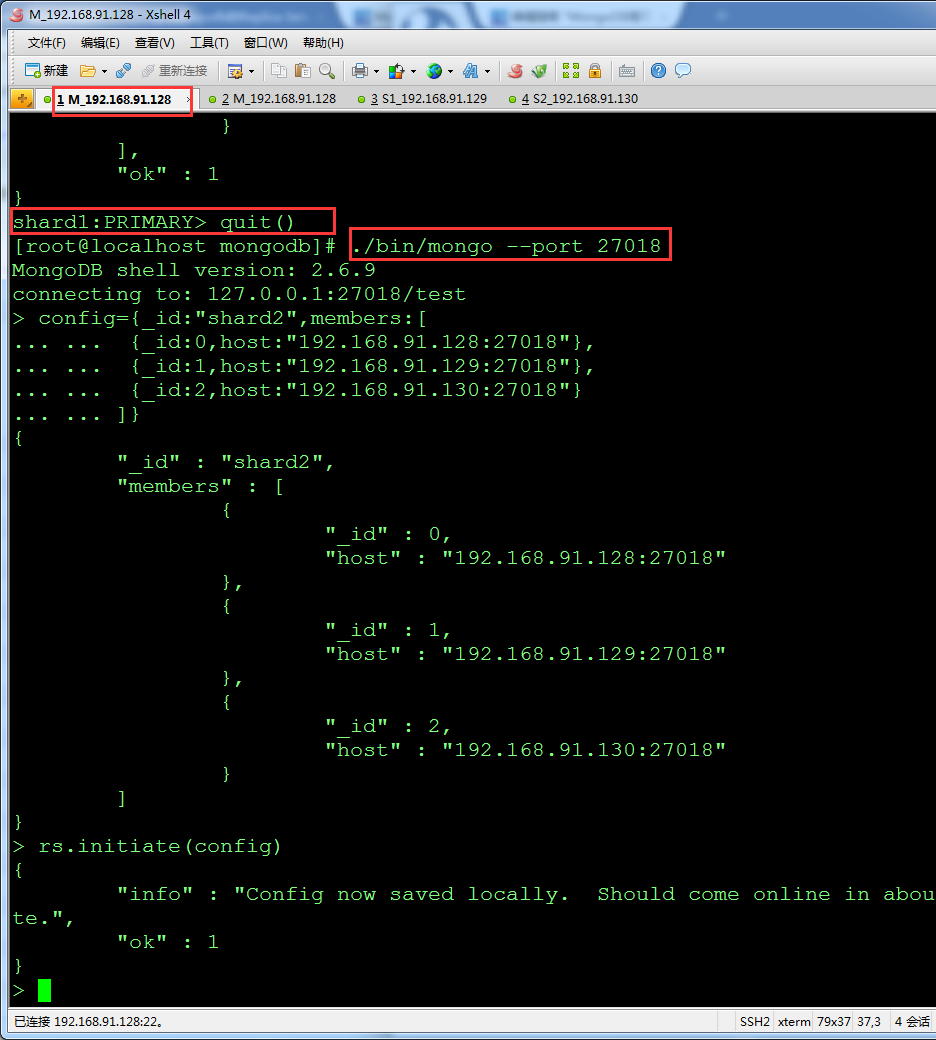
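The document passed to `rs.initiate()` follows a simple pattern. The replica set name goes in `_id`, and each member gets an entry with a numeric `_id` and a `host` of the form `ip:port`. The following minimal Node.js sketch produces the same document as the one typed above and adds a couple of sanity checks. The function name `buildReplSetConfig` is hypothetical.

```javascript
// Hypothetical helper: build an rs.initiate() config document.
function buildReplSetConfig(name, hosts) {
  if (hosts.length === 0) throw new Error("a replica set needs at least one member");
  if (new Set(hosts).size !== hosts.length) throw new Error("duplicate member host");
  return {
    _id: name, // must match the --replSet name given to each mongod
    members: hosts.map((host, i) => ({ _id: i, host })),
  };
}

const config = buildReplSetConfig("shard1", [
  "192.168.91.128:27017",
  "192.168.91.129:27017",
  "192.168.91.130:27017",
]);
console.log(JSON.stringify(config, null, 2));
```

The printed JSON is also a valid object literal for the mongo shell, so it can be passed to `rs.initiate(...)` as-is.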

2: Start mongod Shard B_1 on Server A

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard2 --port 27018 --dbpath ./data/serverb --logpath ./log/logb/shardb1 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 5150

child process started successfully, parent exiting

Start mongod Shard B_2 on Server B

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard2 --port 27018 --dbpath ./data/serverb --logpath ./log/logb/shardb2 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 4861

child process started successfully, parent exiting

Start mongod Shard B_3 on Server C

[root@localhost mongodb]# ./bin/mongod --shardsvr --replSet shard2 --port 27018 --dbpath ./data/serverb --logpath ./log/logb/shardb3 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 4800

child process started successfully, parent exiting

Then, on any one of the 3 servers, connect to port 27018 and initiate replica set shard2:

[root@localhost mongodb]# ./bin/mongo --port 27018

MongoDB shell version: 2.6.9

connecting to: 127.0.0.1:27018/test

> config={_id:"shard2",members:[

... {_id:0,host:"192.168.91.128:27018"},

... {_id:1,host:"192.168.91.129:27018"},

... {_id:2,host:"192.168.91.130:27018"}

... ]}

{ "_id" : "shard2", "members" : [ { "_id" : 0, "host" : "192.168.91.128:27018" }, { "_id" : 1, "host" : "192.168.91.129:27018" }, { "_id" : 2, "host" : "192.168.91.130:27018" } ] }

> rs.initiate(config)

{ "info" : "Config now saved locally. Should come online in about a minute.", "ok" : 1 }
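After `rs.initiate()`, the members hold an election, and `rs.status()` reports each member's state. The following Node.js sketch summarizes an `rs.status()`-style document. It relies only on the `set`, `members[].name`, `members[].stateStr` and `members[].health` fields. The function name is hypothetical, and the sample status is made up for illustration rather than captured from this cluster.

```javascript
// Summarize an rs.status()-style document: which member is PRIMARY,
// which are SECONDARY, and whether every member reports health 1.
function summarizeReplSet(status) {
  const byState = (state) =>
    status.members.filter((m) => m.stateStr === state).map((m) => m.name);
  const primaries = byState("PRIMARY");
  return {
    set: status.set,
    primary: primaries.length === 1 ? primaries[0] : null, // null while no primary is elected
    secondaries: byState("SECONDARY"),
    allHealthy: status.members.every((m) => m.health === 1),
  };
}

// Hypothetical sample, trimmed to the fields used above.
const sampleStatus = {
  set: "shard2",
  members: [
    { name: "192.168.91.128:27018", stateStr: "PRIMARY", health: 1 },
    { name: "192.168.91.129:27018", stateStr: "SECONDARY", health: 1 },
    { name: "192.168.91.130:27018", stateStr: "SECONDARY", health: 1 },
  ],
};
console.log(summarizeReplSet(sampleStatus));
```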

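Note:

At this point, check on Servers A, B and C that the corresponding ports are listening. The pattern 2701 matches both 27017 and 27018: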

[root@localhost mongodb]# netstat -nat | grep 2701
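The netstat check can also be scripted. The following Node.js sketch parses Linux `netstat -nat` lines, whose columns are proto, Recv-Q, Send-Q, local address, foreign address and state. It reports which expected ports have no socket in the `LISTEN` state. The function names are hypothetical, and the sample lines are illustrative rather than output captured from these servers.

```javascript
// Collect the local ports of all TCP sockets in the LISTEN state.
function listeningPorts(netstatText) {
  const ports = new Set();
  for (const line of netstatText.split("\n")) {
    const fields = line.trim().split(/\s+/);
    // Skip headers and anything that is not a listening tcp/tcp6 socket.
    if (fields.length < 6 || !fields[0].startsWith("tcp") || fields[5] !== "LISTEN") continue;
    const local = fields[3]; // e.g. "0.0.0.0:27017" or ":::27017"
    const port = Number(local.slice(local.lastIndexOf(":") + 1));
    if (Number.isInteger(port) && port > 0) ports.add(port);
  }
  return ports;
}

// Return the expected ports that nothing is listening on.
function missingPorts(netstatText, expectedPorts) {
  const listening = listeningPorts(netstatText);
  return expectedPorts.filter((p) => !listening.has(p));
}

const sample = [
  "Proto Recv-Q Send-Q Local Address           Foreign Address         State",
  "tcp        0      0 0.0.0.0:27017           0.0.0.0:*               LISTEN",
  "tcp        0      0 0.0.0.0:27018           0.0.0.0:*               LISTEN",
  "tcp        0      0 192.168.91.128:27017    192.168.91.129:40811    ESTABLISHED",
].join("\n");
console.log(missingPorts(sample, [27017, 27018])); // []
```

On a live server, the text could come from running `netstat -nat`, for example through Node's `child_process.execSync`.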

The replica sets are now configured.

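3: Start a config server on each of the 3 servers

The commands below start ordinary mongod instances on port 30000. MongoDB's deployment instructions for sharded clusters normally also pass the --configsvr option, which declares the instance to be a config server.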

Run on Server A:

[root@localhost mongodb]# ./bin/mongod --port 30000 --dbpath ./data --logpath ./log/configlog --fork

about to fork child process, waiting until server is ready for connections.

forked process: 5430

child process started successfully, parent exiting

Run on Server B:

[root@localhost mongodb]# ./bin/mongod --port 30000 --dbpath ./data --logpath ./log/configlog --fork

about to fork child process, waiting until server is ready for connections.

forked process: 5120

child process started successfully, parent exiting

Run on Server C:

[root@localhost mongodb]# ./bin/mongod --port 30000 --dbpath ./data --logpath ./log/configlog --fork

about to fork child process, waiting until server is ready for connections.

forked process: 5056

child process started successfully, parent exiting
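Each mongos is pointed at all three config servers through a single `--configdb` string of comma-separated `host:port` pairs. The following Node.js sketch assembles that string and the mongos options used in step 4. It assumes the pre-3.2 mirrored config server model of this MongoDB 2.6 setup, in which mongos accepts either one or three config servers. The function names `buildConfigDb` and `mongosArgs` are hypothetical.

```javascript
// Build the --configdb value from the config server hosts.
// Assumption: MongoDB 2.x mirrored config servers (mongos accepts 1 or 3).
function buildConfigDb(hosts, port) {
  if (hosts.length !== 1 && hosts.length !== 3) {
    throw new Error(`expected 1 or 3 config servers, got ${hosts.length}`);
  }
  return hosts.map((h) => `${h}:${port}`).join(",");
}

// Options for one mongos, mirroring the command in step 4.
function mongosArgs(configDb, { port, logpath, chunkSizeMB }) {
  return [
    "--configdb", configDb,
    "--port", String(port),
    "--logpath", logpath,
    "--chunkSize", String(chunkSizeMB),
    "--fork",
  ];
}

const configDb = buildConfigDb(
  ["192.168.91.128", "192.168.91.129", "192.168.91.130"],
  30000,
);
const opts = { port: 40000, logpath: "./log/route.log", chunkSizeMB: 1 };
console.log(["./bin/mongos", ...mongosArgs(configDb, opts)].join(" "));
```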

4: Configure the route process (mongos)

Run on Server A. The table above also lists a mongos on port 40000 on Servers B and C:

[root@localhost mongodb]# ./bin/mongos --configdb 192.168.91.128:30000,192.168.91.129:30000,192.168.91.130:30000 --port 40000 --logpath ./log/route.log --chunkSize 1 --fork

about to fork child process, waiting until server is ready for connections.

forked process: 5596

child process started successfully, parent exiting

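--chunkSize sets the chunk size in MB. The default is 64 MB. Here it is set to 1 MB to make testing easier: once the data in a chunk grows beyond 1 MB, the chunk is split, and the balancer can then migrate chunks between shards.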
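As rough arithmetic: if no chunk may grow beyond the chunk size, a collection of D bytes needs at least ⌈D / chunkSize⌉ chunks. The following Node.js sketch computes only that lower bound. Real split points are chosen by MongoDB, so actual chunk counts are usually higher. The function name `minChunkCount` is hypothetical.

```javascript
const MB = 1024 * 1024;

// Lower bound on the number of chunks for `dataBytes` of data when no chunk
// may exceed `chunkSizeMB` megabytes. A collection always has at least one chunk.
function minChunkCount(dataBytes, chunkSizeMB) {
  if (!(chunkSizeMB > 0)) throw new RangeError("chunk size must be positive");
  return Math.max(1, Math.ceil(dataBytes / (chunkSizeMB * MB)));
}

console.log(minChunkCount(10 * MB, 1));  // 10 chunks with --chunkSize 1
console.log(minChunkCount(10 * MB, 64)); // 1 chunk with the 64 MB default
```

The example illustrates why a 1 MB chunk size yields several chunks, and therefore something for the balancer to move, after only a small test load. With the 64 MB default, the same data would stay in a single chunk.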

5: Configure the sharded collection and its shard key

[root@localhost mongodb]# ./bin/mongo --port 40000

MongoDB shell version: 2.6.9

connecting to: 127.0.0.1:40000/test

mongos> use admin

switched to db admin


mongos> db.runCommand({addshard:"shard1/192.168.91.128:27017,192.168.91.129:27017,192.168.91.130:27017"})

{ "shardAdded" : "shard1", "ok" : 1 }

mongos> db.runCommand({addshard:"shard2/192.168.91.128:27018,192.168.91.129:27018,192.168.91.130:27018"})

{ "shardAdded" : "shard2", "ok" : 1 }
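For a replica set shard, the argument of `addshard` has the form `<replica set name>/<host:port>,<host:port>,...`. Typing it by hand is error-prone, because an unterminated string literal makes the shell report a `SyntaxError`. The following is a hedged sketch: the hypothetical Node.js function `addShardCommand` builds the command document instead, and `JSON.stringify` handles the quoting.

```javascript
// Hypothetical helper: build the { addshard: "<set>/<host:port>,..." } command document.
function addShardCommand(replSet, hosts) {
  if (!replSet || hosts.length === 0) {
    throw new Error("replica set name and hosts are required");
  }
  return { addshard: `${replSet}/${hosts.join(",")}` };
}

const cmd = addShardCommand("shard2", [
  "192.168.91.128:27018",
  "192.168.91.129:27018",
  "192.168.91.130:27018",
]);
// The printed text can be passed to db.runCommand(...) in the mongos shell.
console.log(JSON.stringify(cmd));
```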

mongos> db.person.insert({"uid":1,"uname":"gechong","tel":"158"})

WriteResult({ "nInserted" : 1 })

mongos> db.runCommand({enablesharding:"person"})

{ "ok" : 1 }

mongos> db.runCommand({shardcollection:"person.per",key:{_id:1}})

{ "collectionsharded" : "person.per", "ok" : 1 }

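The per collection of the person database is sharded, with _id as the shard key. Note that the earlier db.person.insert(...) ran while the shell was still using the admin database. It therefore wrote to admin.person and put no data into the sharded person.per collection.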
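With a range-based shard key, the collection's key space is divided into chunks. Each chunk covers a half-open range [min, max) and is assigned to one shard, and mongos routes each document to the shard that owns the chunk containing its key. The following Node.js sketch models only that lookup, with some simplifications. Numeric keys stand in for `_id` values, and `-Infinity`/`Infinity` stand in for MinKey/MaxKey. The chunk layout is hypothetical rather than read from the cluster's `config.chunks` collection, and the name `findShard` is illustrative.

```javascript
// Simplified routing model: each chunk covers [min, max) and lives on one shard.
// -Infinity / Infinity stand in for MinKey / MaxKey; the layout is hypothetical.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shard1" },
  { min: 1000, max: 5000, shard: "shard2" },
  { min: 5000, max: Infinity, shard: "shard1" },
];

// Return the shard owning the chunk whose range contains `key`.
function findShard(chunkList, key) {
  const chunk = chunkList.find((c) => key >= c.min && key < c.max);
  if (!chunk) throw new Error(`no chunk covers key ${key}`);
  return chunk.shard;
}

console.log(findShard(chunks, 42));   // shard1
console.log(findShard(chunks, 1000)); // shard2 (min is inclusive)
```

In this model, a monotonically increasing key such as an ObjectId `_id` always falls into the last chunk, the one ending at MaxKey. New inserts therefore go to a single shard until chunks are split and migrated.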

The whole architecture is now deployed.