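Having covered the basics of Kubernetes (K8s), our goal is to migrate our services onto it. The following cases show how convenient and efficient deployment on K8s can be.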

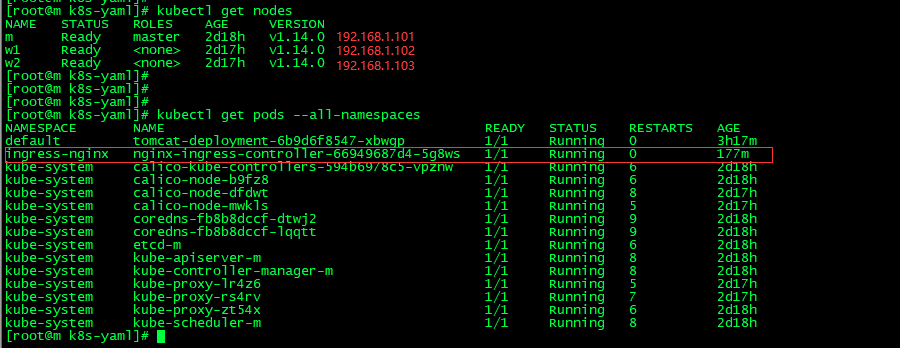

Environment:

Three nodes, with Ingress already installed.

Deploying WordPress + MySQL (Service: NodePort mode):

(1) Create the wordpress namespace

kubectl create namespace wordpress

kubectl get ns

(2) Create wordpress-db.yaml, then create the resources from it [MySQL database]

apiVersion: apps/v1   # apps/v1beta1 was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: mysql-deploy
  namespace: wordpress
  labels:
    app: mysql
spec:
  selector:           # required by apps/v1; must match the Pod template labels
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3306
          name: dbport
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: rootPassW0rd
        - name: MYSQL_DATABASE
          value: wordpress
        - name: MYSQL_USER
          value: wordpress
        - name: MYSQL_PASSWORD
          value: wordpress
        volumeMounts:
        - name: db
          mountPath: /var/lib/mysql
      volumes:
      - name: db
        hostPath:
          path: /var/lib/mysql
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: wordpress
spec:
  selector:
    app: mysql
  ports:
  - name: mysqlport
    protocol: TCP
    port: 3306
    targetPort: dbport

kubectl apply -f wordpress-db.yaml

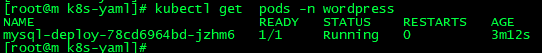

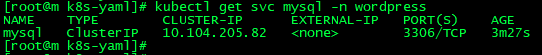

kubectl get pods -n wordpress

kubectl get svc mysql -n wordpress

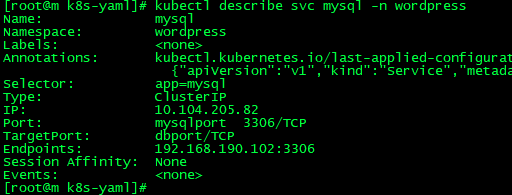

kubectl describe svc mysql -n wordpress   # note the Endpoints IP (or the Service IP); it has to be configured in WordPress

(3) Create wordpress.yaml, then create the resources from it [WordPress]

apiVersion: apps/v1   # apps/v1beta1 was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: wordpress-deploy
  namespace: wordpress
  labels:
    app: wordpress
spec:
  selector:           # required by apps/v1; must match the Pod template labels
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - name: wordpress
        image: wordpress
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: wdport
        env:
        - name: WORDPRESS_DB_HOST
          value: 192.168.190.102:3306
        - name: WORDPRESS_DB_USER
          value: wordpress
        - name: WORDPRESS_DB_PASSWORD
          value: wordpress
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress
  namespace: wordpress
spec:
  type: NodePort
  selector:
    app: wordpress
  ports:
  - name: wordpressport
    protocol: TCP
    port: 80
    targetPort: wdport

kubectl apply -f wordpress.yaml   # first change the MySQL IP address in the file; the Service name mysql can be used instead of the IP
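Referring to MySQL by its Service name avoids hard-coding an IP that changes whenever the Pod is rescheduled. A minimal sketch of the env section in wordpress.yaml, assuming the cluster DNS add-on (for example CoreDNS) is running and both workloads stay in the wordpress namespace:

```yaml
# Fragment of the wordpress container spec in wordpress.yaml (sketch).
env:
- name: WORDPRESS_DB_HOST
  # "mysql" is the Service defined in wordpress-db.yaml; it resolves through cluster DNS.
  # The fully qualified form would be mysql.wordpress.svc.cluster.local:3306,
  # assuming the default cluster domain cluster.local.
  value: mysql:3306
- name: WORDPRESS_DB_USER
  value: wordpress
- name: WORDPRESS_DB_PASSWORD
  value: wordpress
```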

kubectl get pods -n wordpress

kubectl get svc -n wordpress   # shows the assigned NodePort, 30078 in this example
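Unless the Service pins it, the NodePort is allocated from the node port range (30000-32767 by default), so it can differ between installations. A sketch of the wordpress Service with the port fixed; 30078 is simply the value observed in this walkthrough and is assumed to be free on every node:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: wordpress
  namespace: wordpress
spec:
  type: NodePort
  selector:
    app: wordpress
  ports:
  - name: wordpressport
    protocol: TCP
    port: 80
    targetPort: wdport
    nodePort: 30078   # assumption: reuse the port seen above; omit to let Kubernetes choose
```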

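(4) Test access: from a Windows machine, open the IP of any cluster node on port 30078

Deploying a Spring Boot project (Ingress):

Workflow: pick the service --> write a Dockerfile and build the image --> push the image to a registry --> write the K8s manifests --> create the resources

(1) Prepare the Spring Boot project springboot-demo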

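import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("wuzz")
public class TestController {

    // GET /wuzz/k8s returns a fixed greeting, used later to verify the deployment
    @RequestMapping(value = "/k8s", method = {RequestMethod.GET})
    public String k8s() {
        return "Hello Kubernetes .....";
    }
}

(2) Run mvn clean install to produce xxx.jar and upload it to a directory on the Linux host (here /mysoft)

(3) Write the Dockerfile: cd /mysoft, then vi Dockerfile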

FROM openjdk:8-jre-alpine

COPY springboot-demo-1.0-SNAPSHOT.jar /mysoft/springboot-demo.jar

ENTRYPOINT ["java","-jar","/mysoft/springboot-demo.jar"]

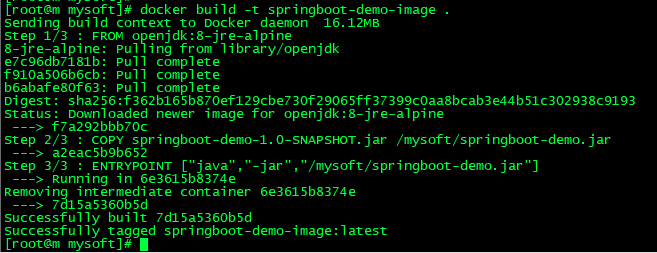

(4) Build the image from the Dockerfile

docker build -t springboot-demo-image .

(5) Create a container with docker run

docker run -d --name s1 springboot-demo-image

(6) Test access

docker inspect s1   # look up the container IP

curl ip:8080/wuzz/k8s

(7) Push the image to the image registry

# Log in to the Alibaba Cloud image registry

sudo docker login --username=随风去wuzz registry.cn-hangzhou.aliyuncs.com

docker tag springboot-demo-image registry.cn-hangzhou.aliyuncs.com/wuzz-docker/springboot-demo-image:v1.0

docker push registry.cn-hangzhou.aliyuncs.com/wuzz-docker/springboot-demo-image:v1.0

The push is complete.

(8) Write the Kubernetes manifest: vi springboot-demo.yaml

# Deploy the Pod through a Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-demo
spec:
  selector:
    matchLabels:
      app: springboot-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: springboot-demo
    spec:
      containers:
      - name: springboot-demo
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/springboot-demo-image:v1.0
        ports:
        - containerPort: 8080
---
# Create the Service for the Pod
apiVersion: v1
kind: Service
metadata:
  name: springboot-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: springboot-demo
---
# Create the Ingress and define the access rules; the nginx ingress controller must be created beforehand
# Note: extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: springboot-demo
spec:
  rules:
  - host: tomcat.wuzz.com
    http:
      paths:
      - path: /
        backend:
          serviceName: springboot-demo
          servicePort: 80

Create the resources: kubectl apply -f springboot-demo.yaml
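The extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. On a newer cluster the same rule might look like the sketch below. The ingressClassName value nginx is an assumption that depends on how the ingress controller was installed.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: springboot-demo
spec:
  ingressClassName: nginx   # assumption: the class name registered by the controller
  rules:
  - host: tomcat.wuzz.com
    http:
      paths:
      - path: /
        pathType: Prefix    # required in networking.k8s.io/v1
        backend:
          service:
            name: springboot-demo
            port:
              number: 80
```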

(9) Inspect the resources

kubectl get pods

kubectl get pods -o wide

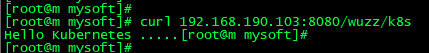

curl 192.168.190.103:8080/wuzz/k8s

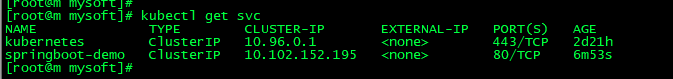

kubectl get svc

kubectl scale deploy springboot-demo --replicas=5
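kubectl scale changes only the live object, so a later kubectl apply of springboot-demo.yaml would set the count back to the value in the file. To make the scale-out persistent, the same change can be recorded in the manifest. A sketch of the Deployment with only replicas changed:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-demo
spec:
  selector:
    matchLabels:
      app: springboot-demo
  replicas: 5   # was 1; matches kubectl scale --replicas=5
  template:
    metadata:
      labels:
        app: springboot-demo
    spec:
      containers:
      - name: springboot-demo
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/springboot-demo-image:v1.0
        ports:
        - containerPort: 8080
```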

(10) Configure the hosts file on Windows [the nginx ingress controller must already be created]

192.168.1.102 tomcat.wuzz.com

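(11) Access from a browser on Windows

Deploying traditional distributed services (user/order) with a registry (Nacos):

Register the user and order services with Nacos so that user can find order.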

(1) Start two Spring Boot projects; both use the following pom.xml:

<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
    <java.version>1.8</java.version>
    <spring-cloud.version>Greenwich.SR1</spring-cloud.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
        <version>2.1.6.RELEASE</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
    </dependency>
    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.10</version>
    </dependency>
</dependencies>

<dependencyManagement>
    <dependencies>
        <dependency>
            <!-- Central version management for all Spring Cloud sub-projects -->
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>${spring-cloud.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-alibaba-dependencies</artifactId>
            <version>0.9.0.RELEASE</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<build>
    <plugins>
        <!-- Declared once; the original listed this plugin twice, which Maven flags as a duplicate declaration -->
        <plugin>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-maven-plugin</artifactId>
            <version>2.1.6.RELEASE</version>
            <executions>
                <execution>
                    <goals>
                        <goal>repackage</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>

application.properties: user listens on port 8081 and order on 9090; remember to set spring.application.name accordingly

server.port=8081

spring.application.name=user

spring.cloud.nacos.discovery.server-addr=192.168.1.101:8848

user 需要提供一个接口以供测试:

@RestController

public class TestController {

@Autowired

private DiscoveryClient discoveryClient;

@RequestMapping(value = "/k8s", method = {RequestMethod.GET})

public String insert() {

List<ServiceInstance> list = discoveryClient.getInstances("order");

ServiceInstance serviceInstance = list.get(0);

URI uri = serviceInstance.getUri();

System.out.println(uri);

testUri(uri.toString());

return list.toString();

}

public void testUri(String uri) {

URL url = null;

try {

url = new URL(uri);

URLConnection urlConnection = url.openConnection();

urlConnection.connect();

System.out.println("连接可用");

} catch (MalformedURLException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

}

}

然后查看nacos server的服务列表:

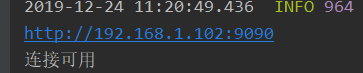

为了验证user能够发现order的地址,访问localhost:8081/k8s,查看日志输出,从而测试是否可以ping通order地址

(2) 本地测试无误以后将两个项目打成 jar 包,丢到 linux 目录中。编写 Dockerfile 文件:

FROM openjdk:8-jre-alpine

COPY user-1.0.0-SNAPSHOT.jar /user.jar

ENTRYPOINT ["java","-jar","/user.jar"]

-------

FROM openjdk:8-jre-alpine

COPY order-0.0.1-SNAPSHOT.jar /order.jar

ENTRYPOINT ["java","-jar","/order.jar"]

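Build the images (each command is run in the directory that holds the corresponding Dockerfile):

docker build -t user-image:v1.0 .

docker build -t order-image:v1.0 .

Push the images to a remote private registry; Alibaba Cloud is used here:

# Tag

docker tag user-image:v1.0 registry.cn-hangzhou.aliyuncs.com/wuzz-docker/user-image:v1.0

docker tag order-image:v1.0 registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0

# Push (include the tag; recent Docker versions push only latest when it is omitted, and latest was never tagged)

docker push registry.cn-hangzhou.aliyuncs.com/wuzz-docker/user-image:v1.0

docker push registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0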
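If the repository on the registry is private, the cluster nodes need credentials to pull these images. One common approach is a docker-registry Secret referenced from the Pod spec. In the sketch below the Secret name aliyun-registry is hypothetical, and it is assumed to have been created beforehand with kubectl create secret docker-registry in the same namespace as the Deployment.

```yaml
# Fragment of a Deployment's Pod template (sketch).
spec:
  template:
    spec:
      imagePullSecrets:
      - name: aliyun-registry   # hypothetical Secret of type kubernetes.io/dockerconfigjson
      containers:
      - name: user
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/user-image:v1.0
```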

(3) Write user.yaml and order.yaml:

user.yaml:

# Deploy the Pod through a Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user
spec:
  selector:
    matchLabels:
      app: user
  replicas: 1
  template:
    metadata:
      labels:
        app: user
    spec:
      containers:
      - name: user
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/user-image:v1.0
        ports:
        - containerPort: 8081
---
# Create the Service for the Pod
apiVersion: v1
kind: Service
metadata:
  name: user
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8081
  selector:
    app: user
---
# Create the Ingress and define the access rules; the nginx ingress controller must be created beforehand
# Note: extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: user
spec:
  rules:
  - host: k8s.demo.gper.club
    http:
      paths:
      - path: /
        backend:
          serviceName: user
          servicePort: 80

order.yaml:

# Deploy the Pod through a Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: order
spec:
  selector:
    matchLabels:
      app: order
  replicas: 1
  template:
    metadata:
      labels:
        app: order
    spec:
      containers:
      - name: order
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0
        ports:
        - containerPort: 9090
---
# Create the Service for the Pod
apiVersion: v1
kind: Service
metadata:
  name: order
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9090
  selector:
    app: order

(4) Create the Pods: kubectl apply -f user.yaml (and likewise kubectl apply -f order.yaml)

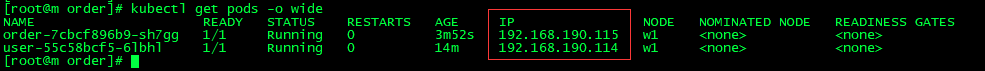

kubectl get pods   # list the Pods

kubectl get pods -o wide   # list the Pods with node and IP details

kubectl get svc   # list the Services

kubectl get ingress   # list the Ingress resources

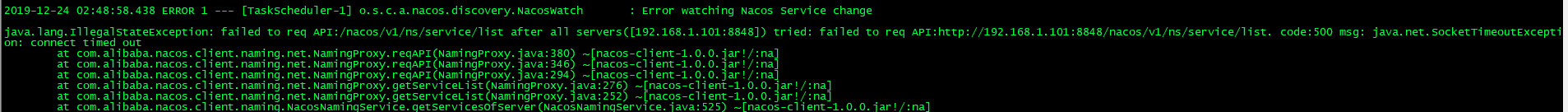

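At this point I ran kubectl logs -f <pod-name> (mainly to check the log output and confirm whether user can reach order) and found that the Pod reported an error: it could not connect. In testing, registration worked both locally and when running java -jar directly on the Linux host.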

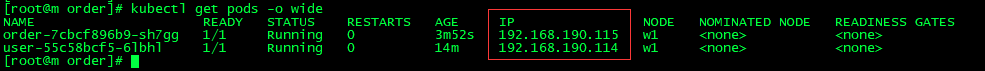

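We can then run docker exec -it containerId /bin/sh to enter the corresponding container and ping the address from the error message; the ping fails. Next, get the IP of the corresponding Pod:

On node 192.168.190.114, add a NAT (masquerade) rule: /sbin/iptables -t nat -I POSTROUTING -s 192.168.190.0/24 -j MASQUERADE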

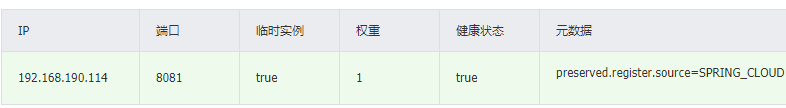

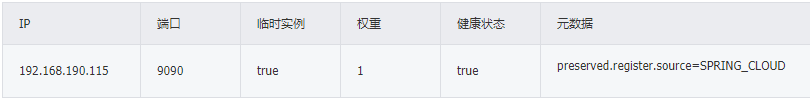

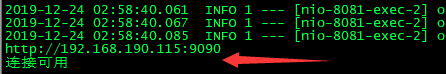

Registration then succeeds:

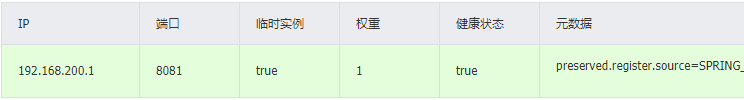

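As shown, the service IP addresses registered on the Nacos server are Pod IPs, for example 192.168.190.114/192.168.190.115.

(5) Test: access the path configured in the Ingress from a browser

The Pod logs show that the call succeeds. When all services run inside the K8s cluster, Pod IPs are what get registered on the Nacos server, so services discover each other by Pod IP.

Now suppose user runs outside the K8s cluster while order runs inside it:

For example, user runs locally in IDEA and order is the one deployed in K8s above.

- Start the user service locally in IDEA

- Check the service list for user on the Nacos server

- Visit localhost:8081/k8s locally and watch the log output in IDEA: the address being called is the Pod IP of order, so the service call cannot succeed

The call fails because the Pod IP of order is not reachable from outside the cluster. How can this be solved?

- Write the IP of the host that the Pod starts on into the container, so that there is a mapping between the Pod IP and the host IP

- Run the Pod in host network mode, so that the Pod uses the host IP directly. With several replicas for high availability this causes port conflicts [a Pod scheduling policy can be used to keep replicas from landing on the same worker wherever possible]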
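The first option can be sketched with the Downward API, which exposes the node IP to the container through status.hostIP. This is an illustration rather than the setup used in this article: it assumes the Nacos client honors spring.cloud.nacos.discovery.ip (supplied here through Spring Boot's environment-variable binding), and that a hostPort mapping is acceptable and supported by the cluster's network plugin. hostPort carries the same port-conflict caveat as host networking.

```yaml
# Fragment of the order Deployment's Pod template (sketch).
spec:
  template:
    spec:
      containers:
      - name: order
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0
        ports:
        - containerPort: 9090
          hostPort: 9090          # expose the container port on the node itself
        env:
        - name: SPRING_CLOUD_NACOS_DISCOVERY_IP   # assumed to bind to spring.cloud.nacos.discovery.ip
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP            # IP of the node running the Pod
```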

Let's demonstrate the host network mode. Modify order.yaml; before applying the change, check whether port 9090 is already in use on each node

apiVersion: apps/v1
kind: Deployment
metadata:
  name: order
spec:
  selector:
    matchLabels:
      app: order
  replicas: 1
  template:
    metadata:
      labels:
        app: order
    spec:
      # The key change: use the host's network namespace
      hostNetwork: true
      containers:
      - name: order
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0
        ports:
        - containerPort: 9090
---
# Create the Service for the Pod
apiVersion: v1
kind: Service
metadata:
  name: order
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9090
  selector:
    app: order

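Remember to delete the previous deployment before recreating it: kubectl delete -f order.yaml, then kubectl apply -f order.yaml

kubectl get pods -o wide   # find the node the Pod runs on, for example w2, then check that port 9090 is listening on w2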
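With hostNetwork, two order replicas cannot share a node because both would try to bind port 9090. The scheduling-policy idea mentioned earlier can be expressed as pod anti-affinity. A sketch of the order Deployment, assuming at least two schedulable worker nodes (replicas: 2 is chosen only for illustration):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: order
spec:
  selector:
    matchLabels:
      app: order
  replicas: 2
  template:
    metadata:
      labels:
        app: order
    spec:
      hostNetwork: true
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: order
            topologyKey: kubernetes.io/hostname   # at most one order Pod per node
      containers:
      - name: order
        image: registry.cn-hangzhou.aliyuncs.com/wuzz-docker/order-image:v1.0
        ports:
        - containerPort: 9090
```

Because the rule is required rather than preferred, any replica beyond the number of available nodes stays Pending instead of colliding on the port.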

Once it is created, start the local user service and check that it registers:

Call the local user service:

Check the console output: