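VDO (Virtual Data Optimizer)

VDO (Virtual Data Optimizer) is a technology that saves storage space by compressing and deduplicating the data on a storage device. Red Hat obtained it by acquiring Permabit, tested it repeatedly on RHEL 7.5, 7.6, and 7.7, and finally released it with RHEL 8. The core of VDO is deduplication of the data already on disk. It works much like a cloud drive service: the first upload of a file is slow, but a second upload of the same file is stored only as a pointer to the existing data, so it finishes almost instantly and takes no additional space. Besides deduplication, VDO also compresses data such as logs and databases automatically, which reduces wasted space further.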

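VDO supports both local and remote storage and can serve as an additional storage layer beneath a local file system, iSCSI, or Ceph storage. Red Hat's VDO introduction recommends a logical-to-physical ratio of 10:1 when deploying virtual machines or containers, that is, 10 TB of logical storage for 1 TB of physical storage. For object storage (for example Ceph) it recommends 3:1, that is, 3 TB of logical storage for 1 TB of physical storage.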
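The ratios above are simple multiplication, and a small helper makes the sizing explicit. This is only a sketch: the function name vdo_logical_size_gb is hypothetical and not part of the vdo tooling, and the ratio is passed in by the caller.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the vdo package): suggest a logical size
# from a physical size in GB and a logical:physical ratio.
# Ratio 10 = virtual machines/containers, ratio 3 = object storage.
vdo_logical_size_gb() {
    local physical_gb=$1 ratio=$2
    echo $(( physical_gb * ratio ))
}

vdo_logical_size_gb 20 10    # 20 GB disk for VM/container workloads
vdo_logical_size_gb 1024 3   # 1 TB disk behind object storage
```

The first call gives the 200 GB figure used for the 20 GB disk later in this section.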

有两种特殊情况需要提前讲一下。

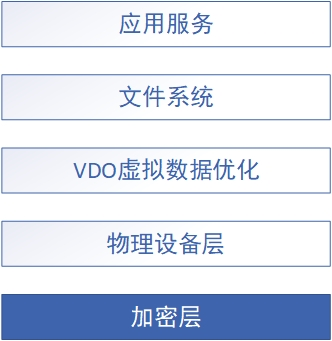

其一,公司服务器上已有的dm-crypt之类的技术是可以与VDO技术兼容的,但记得要先对卷进行加密再使用VDO。因为加密会使重复的数据变得有所不同,因此删重操作无法实现。要始终记得把加密层放到VDO之下,如图所示。

其二,VDO技术不可叠加使用,1TB的物理存储提升成10TB的逻辑存储没问题,但是再用10TB翻成100TB就不行了。左脚踩右脚,真的没法飞起来。

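Add a new 20 GB SATA disk to the system.

[root@linuxprobe ~]# ls -l /dev/sdc

brw-rw----. 1 root disk 8, 32 Jan 6 22:26 /dev/sdc

VDO is enabled by default on RHEL/CentOS 8. Since it is now Red Hat's own technology, compatibility is not a concern. If VDO is not installed on your system, install it with dnf: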

[root@linuxprobe ~]# dnf install kmod-kvdo vdo

Updating Subscription Management repositories.

Unable to read consumer identity

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Last metadata expiration check: 0:01:56 ago on Wed 06 Jan 2021 10:37:19 PM CST.

Package kmod-kvdo-6.2.0.293-50.el8.x86_64 is already installed.

Package vdo-6.2.0.293-10.el8.x86_64 is already installed.

Dependencies resolved.

Nothing to do.

Complete!

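First, create a new VDO volume.

The newly added physical device is managed with the vdo command. The name option sets the name of the new volume, the device option selects the disk to build it on, and the vdoLogicalSize option sets the size of the resulting device. Following Red Hat's recommended ratio, the 20 GB disk becomes 200 GB of logical storage. The command below creates a 200 GB VDO volume named hehe from /dev/sdc; note that the L and S in vdoLogicalSize must be uppercase.

[root@superwu ~]# vdo create --name=hehe --device=/dev/sdc --vdoLogicalSize=200G

Creating VDO storage

Starting VDO storage

Starting compression on VDO storage

VDO instance 0 volume is ready at /dev/mapper/hehe

Once the volume has been created, use the status subcommand to view its summary information:

[root@superwu ~]# vdo status --name=hehe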

VDO status:

Date: '2022-02-07 18:46:02+08:00'

Node: superwu.10

Kernel module:

Loaded: true

Name: kvdo

Version information:

kvdo version: 6.2.0.293

Configuration:

File: /etc/vdoconf.yml

Last modified: '2022-02-07 18:44:25'

VDOs:

hehe:

Acknowledgement threads: 1

Activate: enabled

Bio rotation interval: 64

Bio submission threads: 4

Block map cache size: 128M

Block map period: 16380

Block size: 4096

CPU-work threads: 2

Compression: enabled    # whether compression is on

Configured write policy: auto

Deduplication: enabled    # whether deduplication is on

Device mapper status: 0 419430400 vdo /dev/sdc normal - online online 1051408 5242880

Emulate 512 byte: disabled

Hash zone threads: 1

Index checkpoint frequency: 0

Index memory setting: 0.25

Index parallel factor: 0

Index sparse: disabled

Index status: online

Logical size: 200G

Logical threads: 1

Max discard size: 4K

Physical size: 20G

Physical threads: 1

Slab size: 2G

Storage device: /dev/disk/by-id/ata-

......

Next, format the new volume and mount it.

The new VDO volume appears under /dev/mapper, named after the volume, and that device is the one to work with. Before mounting, you can run udevadm settle to let udev finish processing device events, so that the configuration just made has taken effect. Formatting a VDO volume can take a while.

[root@superwu ~]# mkfs.xfs /dev/mapper/hehe

meta-data=/dev/mapper/hehe       isize=512    agcount=4, agsize=13107200 blks

         =                       sectsz=4096  attr=2, projid32bit=1

         =                       crc=1        finobt=1, sparse=1, rmapbt=0

         =                       reflink=1

data     =                       bsize=4096   blocks=52428800, imaxpct=25

         =                       sunit=0      swidth=0 blks

naming   =version 2              bsize=4096   ascii-ci=0, ftype=1

log      =internal log           bsize=4096   blocks=25600, version=2

         =                       sectsz=4096  sunit=1 blks, lazy-count=1

realtime =none                   extsz=4096   blocks=0, rtextents=0

[root@superwu ~]# udevadm settle    # wait for udev to settle

[root@superwu ~]# mkdir /hehe    # create the mount point

[root@superwu ~]# mount /dev/mapper/hehe /hehe    # mount the file system on the VDO volume

Write the mount information to /etc/fstab so the volume is mounted at boot. For logical storage devices, mounting by UUID is recommended. The _netdev option in the last entry makes the system wait until both the system and the network are up before mounting the VDO volume.

[root@superwu ~]# blkid /dev/mapper/hehe

/dev/mapper/hehe: UUID="dd2fdad8-6c61-402b-9bb9-34745b9a2c2e" TYPE="xfs"

[root@superwu ~]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jan 11 03:26:57 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d7f53471-c95f-44f2-aafe-f86bd5ecebd7 /boot xfs defaults,uquota 0 0

/dev/mapper/rhel-swap swap swap defaults 0 0

/dev/cdrom /media/cdrom iso9660 defaults 0 0

/dev/sdb1 /newFS xfs defaults 0 0

/dev/sdb2 swap swap defaults 0 0

UUID="dd2fdad8-6c61-402b-9bb9-34745b9a2c2e" /hehe xfs defaults,_netdev 0 0
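Copying a UUID by hand is error-prone, so the fstab entry can be derived from the blkid output instead. The sketch below assumes blkid prints UUID="..." and TYPE="..." fields as in the transcript above; the function name fstab_entry_from_blkid is hypothetical, and the sample line is the one shown in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn one line of blkid output into an fstab entry.
# Arguments: the blkid line, the mount point, and the mount options.
fstab_entry_from_blkid() {
    local blkid_line=$1 mount_point=$2 options=$3
    local uuid fstype
    # The leading space keeps PARTUUID= and PTTYPE= from matching.
    uuid=$(printf '%s\n' "$blkid_line" | sed -n 's/.* UUID="\([^"]*\)".*/\1/p')
    fstype=$(printf '%s\n' "$blkid_line" | sed -n 's/.* TYPE="\([^"]*\)".*/\1/p')
    if [ -z "$uuid" ] || [ -z "$fstype" ]; then
        return 1    # the line did not contain both fields
    fi
    printf 'UUID=%s %s %s %s 0 0\n' "$uuid" "$mount_point" "$fstype" "$options"
}

# Sample line taken from the blkid output in this section.
sample='/dev/mapper/hehe: UUID="dd2fdad8-6c61-402b-9bb9-34745b9a2c2e" TYPE="xfs"'
fstab_entry_from_blkid "$sample" /hehe defaults,_netdev
```

On a real system the first argument would be "$(blkid /dev/mapper/hehe)", and the printed line would be reviewed before being appended to /etc/fstab.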

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sdb1 2.0G 53M 2.0G 3% /newFS

/dev/sda1 1014M 170M 845M 17% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/mapper/hehe 200G 1.5G 199G 1% /hehe

Finally, inspect and use the VDO volume.

The vdostats command shows how much of the device is actually in use. The human-readable option scales the capacities automatically and prints them in a form that is easier to read.

[root@superwu ~]# vdostats --human-readable

Device Size Used Available Use% Space saving%

/dev/mapper/hehe 20.0G 4.0G 16.0G 20% 99%

[root@superwu ~]# ls -lh /media/cdrom/images/install.img

-r--r--r--. 1 root root 448M Apr 4 2019 /media/cdrom/images/install.img

[root@superwu ~]# cd /hehe/

[root@superwu hehe]# cp /media/cdrom/images/install.img /hehe/    # copy a file onto the hehe VDO volume

[root@superwu hehe]# ls -lh

total 448M

-r--r--r--. 1 root root 448M Feb 7 19:20 install.img

[root@superwu hehe]# vdostats --human-readable

Device Size Used Available Use% Space saving%

/dev/mapper/hehe 20.0G 4.4G 15.6G 22% 18%

[root@superwu hehe]# cp /media/cdrom/images/install.img /hehe/hehe.img    # copy the same file again under a new name

[root@superwu hehe]# ls -lh

total 896M

-r--r--r--. 1 root root 448M Feb 7 19:24 hehe.img

-r--r--r--. 1 root root 448M Feb 7 19:20 install.img

[root@superwu hehe]# vdostats --human-readable

Device Size Used Available Use% Space saving%

/dev/mapper/hehe 20.0G 4.5G 15.5G 22% 55%

The second copy of the 448 MB file took up less than 100 MB, and the space saving rate rose from 18% to 55%.
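To track the saving rate from a script, the last column of the vdostats output can be pulled out with awk. This is a sketch that assumes the column layout shown above, with the saving rate as the final field; the function name vdo_space_saving is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `vdostats --human-readable` output on stdin and
# print the "Space saving%" value (the last field) for the given device.
vdo_space_saving() {
    local device=$1
    awk -v dev="$device" '$1 == dev { print $NF }'
}

# Sample output copied from the second vdostats run in this section.
sample='Device Size Used Available Use% Space saving%
/dev/mapper/hehe 20.0G 4.5G 15.5G 22% 55%'
printf '%s\n' "$sample" | vdo_space_saving /dev/mapper/hehe
```

On a live system the input would come from `vdostats --human-readable | vdo_space_saving /dev/mapper/hehe`.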

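RAID (Redundant Array of Independent Disks)

RAID combines multiple disks into a single array with more capacity and better safety. Data is split into segments that are stored on different physical disks, and striped reads and writes across those disks raise the overall performance of the array. At the same time, copies of important data are kept in sync on different physical disks, which provides very good redundancy.

Comparison of RAID 0, 1, 5, and 10

| RAID level | Minimum disks | Usable capacity | Read/write performance | Safety | Characteristics |
| --- | --- | --- | --- | --- | --- |
| 0 | 2 | n | n | Low | Maximum capacity and speed; if any one disk fails, all data is affected. |
| 1 | 2 | n/2 | n | High | Maximum safety; as long as one disk in the array still works, the data is unaffected. |
| 5 | 3 | n-1 | n-1 | Medium | Balances capacity, speed, and safety at controlled cost; one disk may fail without affecting the data. |
| 10 | 4 | n/2 | n/2 | High | Combines the strengths of RAID 1 and RAID 0 for speed and safety; up to half of the disks may fail (not both in the same mirror pair) without affecting the data. |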
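The capacity column can be turned into a quick calculation. The sketch below assumes equal-sized disks; the function name raid_usable_gb is hypothetical. For RAID 1 it returns the size of a single disk, which equals n/2 for the usual two-disk mirror.

```shell
#!/usr/bin/env bash
# Hypothetical helper: usable capacity in GB for an array of equal-sized disks.
# Arguments: RAID level, number of disks, size of each disk in GB.
raid_usable_gb() {
    local level=$1 disks=$2 disk_gb=$3
    case $level in
        0)  echo $(( disks * disk_gb )) ;;        # striping only, nothing lost
        1)  echo "$disk_gb" ;;                    # every disk holds a full copy
        5)  echo $(( (disks - 1) * disk_gb )) ;;  # one disk's worth of parity
        10) echo $(( disks / 2 * disk_gb )) ;;    # mirrored pairs, then striped
        *)  echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
}

raid_usable_gb 10 4 20   # four 20 GB disks in RAID 10
raid_usable_gb 5 3 20    # three 20 GB disks in RAID 5
```

Both calls print 40, in line with the roughly 40 GB arrays built from 20 GB disks later in this section.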

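Deploying a disk array

The mdadm command creates, adjusts, monitors, and manages RAID devices. Its name stands for "multiple devices admin", and the syntax is "mdadm [options] disk names".

Commonly used mdadm options and what they do:

| Option | Purpose |
| --- | --- |
| -a | Detect device names; when managing an existing array, add a device |
| -n | Number of devices |
| -l | RAID level |
| -C | Create |
| -v | Show progress |
| -f | Mark a device as failed (simulate a fault) |
| -r | Remove a device |
| -Q | Show summary information |
| -D | Show detailed information |
| -S | Stop the RAID array |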

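Creating a RAID 10 array

1. Create the RAID array

The command below creates an array named hoho under /dev/md from the four disks /dev/sdb through /dev/sde. The /dev/md directory does not exist by default; it is created automatically together with the array. The disks can also be listed individually as /dev/sdb /dev/sdc /dev/sdd /dev/sde.

[root@superwu ~]# mdadm -Cv /dev/md/hoho -n 4 -l 10 /dev/sd[b-e]

mdadm: layout defaults to n2

mdadm: layout defaults to n2

mdadm: chunk size defaults to 512K

mdadm: size set to 20954112K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md/hoho started.

Building the array takes a few minutes. Use -Q for a summary of the array, or -D for details:

[root@superwu md]# mdadm -Q /dev/md/hoho

/dev/md/hoho: 39.97GiB raid10 4 devices, 0 spares. Use mdadm --detail for more detail.

2. Format the array

Note: building the array takes time. Wait a few minutes, or check that the array state is normal, before formatting.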
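Rather than guessing how long to wait, a script can check the State line of `mdadm -D` before formatting. The sketch below assumes the "State : ..." line format shown in this section; the function name md_array_ready is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mdadm -D` output on stdin and succeed only when
# the State line exists and reports no degraded, recovering, resyncing,
# or FAILED condition.
md_array_ready() {
    awk -F' : ' '
        /^[[:space:]]*State :/ { state = $2 }
        END {
            ok = (state != "" && state !~ /degraded|recovering|resyncing|FAILED/)
            exit !ok
        }
    '
}

# Demonstration with State lines in the format mdadm -D prints.
if printf '%s\n' 'State : clean' | md_array_ready; then
    echo "array is ready"
fi
if ! printf '%s\n' 'State : clean, degraded, recovering' | md_array_ready; then
    echo "array is still busy"
fi
```

With a real array (root required), a loop such as `until mdadm -D /dev/md/hoho | md_array_ready; do sleep 5; done` would hold off formatting until the array settles.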

[root@superwu md]# mkfs.ext4 /dev/md/hoho

mke2fs 1.44.3 (10-July-2018)

Creating filesystem with 10477056 4k blocks and 2621440 inodes

Filesystem UUID: face2652-48c3-4883-9260-12fa11976a97

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Allocating group tables: done

Writing inode tables: done

Creating journal (65536 blocks): done

Writing superblocks and filesystem accounting information: done

3. Mount the array

[root@superwu md]# mkdir /hoho    # create the mount point

[root@superwu md]# mount /dev/md/hoho /hoho

[root@superwu md]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/md127 40G 49M 38G 1% /hoho

Append the mount information to /etc/fstab so the array is mounted automatically at boot:

[root@superwu md]# echo "/dev/md/hoho /hoho ext4 defaults 0 0" >> /etc/fstab

[root@superwu md]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jan 11 03:26:57 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d7f53471-c95f-44f2-aafe-f86bd5ecebd7 /boot xfs defaults 0 0

/dev/mapper/rhel-swap swap swap defaults 0 0

/dev/cdrom /media/cdrom iso9660 defaults 0 0

/dev/md/hoho /hoho ext4 defaults 0 0

View the detailed information of the array:

[root@superwu md]# mdadm -D /dev/md/hoho

/dev/md/hoho:

Version : 1.2

Creation Time : Wed Feb 9 18:35:22 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 18:53:54 2022

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : superwu.10:hoho (local to host superwu.10)

UUID : 6af87cfc:47705900:20fcf416:eeac2363

Events : 17

Number Major Minor RaidDevice State

0 8 16 0 active sync set-A /dev/sdb

1 8 32 1 active sync set-B /dev/sdc

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

Handling a failed disk in an array

If a disk in the array fails, replace it promptly; otherwise you risk losing data.

1. Simulate a disk failure

In a virtual machine, the disk failure has to be simulated.

[root@superwu md]# mdadm /dev/md/hoho -f /dev/sdc    # mark one disk as failed

mdadm: set /dev/sdc faulty in /dev/md/hoho

[root@superwu md]# mdadm -D /dev/md/hoho

/dev/md/hoho:

Version : 1.2

Creation Time : Wed Feb 9 18:35:22 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 19:12:37 2022

State : clean, degraded

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : superwu.10:hoho (local to host superwu.10)

UUID : 6af87cfc:47705900:20fcf416:eeac2363

Events : 19

Number Major Minor RaidDevice State

0 8 16 0 active sync set-A /dev/sdb

- 0 0 1 removed

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

1 8 32 - faulty /dev/sdc

2. Remove the failed disk from the array

[root@superwu md]# mdadm /dev/md/hoho -r /dev/sdc    # -r removes a disk

mdadm: hot removed /dev/sdc from /dev/md/hoho

[root@superwu md]# mdadm -D /dev/md/hoho

/dev/md/hoho:

Version : 1.2

Creation Time : Wed Feb 9 18:35:22 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Wed Feb 9 19:16:27 2022

State : clean, degraded

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : superwu.10:hoho (local to host superwu.10)

UUID : 6af87cfc:47705900:20fcf416:eeac2363

Events : 20

Number Major Minor RaidDevice State

0 8 16 0 active sync set-A /dev/sdb

- 0 0 1 removed

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

The /dev/md/hoho array no longer contains /dev/sdc.

3. Replace the disk

Note: in production, servers generally use a RAID card, which resynchronizes RAID 1 and RAID 10 arrays automatically.

After swapping the disk, add the new one to the array with -a:

[root@superwu md]# mdadm /dev/md/hoho -a /dev/sdc

mdadm: added /dev/sdc

[root@superwu md]# mdadm -D /dev/md/hoho

/dev/md/hoho:

Version : 1.2

Creation Time : Wed Feb 9 18:35:22 2022

Raid Level : raid10

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 19:25:14 2022

State : clean, degraded, recovering

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 15% complete

Name : superwu.10:hoho (local to host superwu.10)

UUID : 6af87cfc:47705900:20fcf416:eeac2363

Events : 24

Number Major Minor RaidDevice State

0 8 16 0 active sync set-A /dev/sdb

4 8 32 1 spare rebuilding /dev/sdc

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde

The array is now rebuilding onto /dev/sdc. Rebuilding takes time, and how long depends on the size of the disks.
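Rebuild progress can be read from the "Rebuild Status" line of `mdadm -D`. The sketch below assumes that line has the form shown above; the function name md_rebuild_percent is hypothetical. It prints nothing when no rebuild is in progress.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mdadm -D` output on stdin and print the rebuild
# percentage as a bare number, or nothing if there is no Rebuild Status line.
md_rebuild_percent() {
    sed -n 's/^[[:space:]]*Rebuild Status : \([0-9][0-9]*\)% complete.*/\1/p'
}

# Demonstration with the line from the transcript above.
printf '%s\n' 'State : clean, degraded, recovering' \
              'Rebuild Status : 15% complete' | md_rebuild_percent
```

On a live system the input would be `mdadm -D /dev/md/hoho | md_rebuild_percent`.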

Disk array with a spare disk

RAID 5 with a spare disk

The idea of a spare disk is to keep one sufficiently large disk idle. As soon as a disk in the RAID array fails, the spare takes its place automatically.

Note: the spare disk must be at least as large as the member disks of the array.

1. Create the array

The -x 1 option declares one spare disk:

[root@superwu ~]# mdadm -Cv /dev/md/hehe -n 3 -l 5 -x 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20954112K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md/hehe started.

[root@superwu ~]# mdadm -D /dev/md/hehe

/dev/md/hehe:

Version : 1.2

Creation Time : Wed Feb 9 23:25:36 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 23:25:43 2022

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 12% complete

Name : superwu.10:hehe (local to host superwu.10)

UUID : 2bb20f83:f96626bb:04d1ccd4:fc94809e

Events : 2

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

4 8 48 2 active sync /dev/sdd

3 8 64 - spare /dev/sde

2. Format the array

[root@superwu ~]# mkfs.ext4 /dev/md/hehe

mke2fs 1.44.3 (10-July-2018)

Creating filesystem with 10477056 4k blocks and 2621440 inodes

Filesystem UUID: 65b5dd45-b4a7-4db8-b5d9-1331a95b4fba

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Allocating group tables: done

Writing inode tables: done

Creating journal (65536 blocks): done

Writing superblocks and filesystem accounting information: done

3. Mount the array

[root@superwu ~]# mkdir /opt/hehe

[root@superwu ~]# mount /dev/md/hehe /opt/hehe/

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/md127 40G 49M 38G 1% /opt/hehe

[root@superwu ~]# echo "/dev/md/hehe /opt/hehe ext4 defaults 0 0" >> /etc/fstab    # mount automatically at boot

When a disk in the array fails, the spare takes over immediately and automatically.

[root@superwu ~]# mdadm /dev/md/hehe -f /dev/sdb    # simulate a failure of sdb

mdadm: set /dev/sdb faulty in /dev/md/hehe

[root@superwu ~]# mdadm -D /dev/md/hehe

/dev/md/hehe:

Version : 1.2

Creation Time : Wed Feb 9 23:25:36 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 23:42:59 2022

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 1% complete

Name : superwu.10:hehe (local to host superwu.10)

UUID : 2bb20f83:f96626bb:04d1ccd4:fc94809e

Events : 20

Number Major Minor RaidDevice State

3 8 64 0 spare rebuilding /dev/sde

1 8 32 1 active sync /dev/sdc

4 8 48 2 active sync /dev/sdd

0 8 16 - faulty /dev/sdb

The spare /dev/sde has taken the place of the failed disk and started synchronizing data.

Deleting a disk array

1. Unmount the array and mark the member disks as failed

If the array was added to /etc/fstab earlier, remove that entry as well so the system does not try to mount it at the next boot.

[root@superwu ~]# umount /opt/hehe    # unmount the array

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

[root@superwu ~]# mdadm /dev/md/hehe -f /dev/sdb

mdadm: set /dev/sdb faulty in /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -f /dev/sdc

mdadm: set /dev/sdc faulty in /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -f /dev/sde

mdadm: set /dev/sde faulty in /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -f /dev/sdd

mdadm: set /dev/sdd faulty in /dev/md/hehe

[root@superwu ~]# mdadm -D /dev/md/hehe

/dev/md/hehe:

Version : 1.2

Creation Time : Wed Feb 9 23:25:36 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Feb 9 23:52:31 2022

State : clean, FAILED

Active Devices : 0

Failed Devices : 4

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Number Major Minor RaidDevice State

- 0 0 0 removed

- 0 0 1 removed

- 0 0 2 removed

0 8 16 - faulty /dev/sdb

1 8 32 - faulty /dev/sdc

3 8 64 - faulty /dev/sde

4 8 48 - faulty /dev/sdd

2. Remove the member disks

[root@superwu ~]# mdadm /dev/md/hehe -r /dev/sdb

mdadm: hot removed /dev/sdb from /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -r /dev/sdc

mdadm: hot removed /dev/sdc from /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -r /dev/sdd

mdadm: hot removed /dev/sdd from /dev/md/hehe

[root@superwu ~]# mdadm /dev/md/hehe -r /dev/sde

mdadm: hot removed /dev/sde from /dev/md/hehe

[root@superwu ~]# mdadm -D /dev/md/hehe

/dev/md/hehe:

Version : 1.2

Creation Time : Wed Feb 9 23:25:36 2022

Raid Level : raid5

Array Size : 41908224 (39.97 GiB 42.91 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 0

Persistence : Superblock is persistent

Update Time : Wed Feb 9 23:55:36 2022

State : clean, FAILED

Active Devices : 0

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Number Major Minor RaidDevice State

- 0 0 0 removed

- 0 0 1 removed

- 0 0 2 removed

3. Stop the array

[root@superwu ~]# mdadm --stop /dev/md/hehe

mdadm: stopped /dev/md/hehe

[root@superwu ~]# ls -l /dev/md/hehe

ls: cannot access '/dev/md/hehe': No such file or directory

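LVM logical volumes

LVM adds a logical layer between disk partitions and file systems. It provides an abstract volume group that can merge several disks into one pool. Users can then resize their storage dynamically without caring about the underlying structure and layout of the physical disks.

Physical volume: PV

Volume group: VG

Logical volume: LV

Basic unit: Physical Extent (PE). The PE size is usually 4 MiB.

Note: the size of a logical volume is always a multiple of the PE size, because a logical volume is made up of a whole number of PEs.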
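The PE rule explains the rounding that LVM reports later in this section, where a request for 150M becomes 152.00 MiB and 250M becomes 252.00 MiB. A sketch of that arithmetic, assuming the usual 4 MiB PE size; the function name round_up_to_pe is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: round a requested size (MiB) up to a whole number of PEs.
# The PE size defaults to 4 MiB and can be overridden with a second argument.
round_up_to_pe() {
    local size_mib=$1 pe_mib=${2:-4}
    echo $(( (size_mib + pe_mib - 1) / pe_mib * pe_mib ))
}

round_up_to_pe 150   # not a multiple of 4, rounded up
round_up_to_pe 250   # not a multiple of 4, rounded up
round_up_to_pe 120   # already 30 PEs, unchanged
```

The three calls print 152, 252, and 120.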

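Deploying logical volumes

Common LVM commands

| Function | Physical volumes | Volume groups | Logical volumes |
| --- | --- | --- | --- |
| Scan | pvscan | vgscan | lvscan |
| Create | pvcreate | vgcreate | lvcreate |
| Display | pvdisplay | vgdisplay | lvdisplay |
| Remove | pvremove | vgremove | lvremove |
| Extend | | vgextend | lvextend |
| Reduce | | vgreduce | lvreduce |

1. Create the physical volumes

[root@superwu10 ~]# pvcreate /dev/sdb /dev/sdc    # initialize both disks as physical volumes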

Physical volume "/dev/sdb" successfully created.

Physical volume "/dev/sdc" successfully created.

2. Create the volume group

[root@superwu10 ~]# vgcreate hehe /dev/sdb /dev/sdc    # create volume group hehe from the two disks

Volume group "hehe" successfully created

[root@superwu10 ~]# ls -ld /dev/hehe    # the volume group gets a directory under /dev

drwxr-xr-x. 2 root root 80 Feb 10 06:58 /dev/hehe

[root@superwu10 ~]# vgdisplay    # show volume group information

--- Volume group ---

VG Name hehe

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 2

Act PV 2

VG Size 39.99 GiB

PE Size 4.00 MiB

Total PE 10238

Alloc PE / Size 0 / 0

Free PE / Size 10238 / 39.99 GiB

VG UUID Cma6sp-KXTQ-SoVz-xQ5c-rXCt-IrhY-nPdrVc

--- Volume group ---

VG Name rhel

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <19.00 GiB

PE Size 4.00 MiB

Total PE 4863

Alloc PE / Size 4863 / <19.00 GiB

Free PE / Size 0 / 0

VG UUID oMDZ59-DQqg-ok7A-YJMV-vXBO-KU5L-Slk8pe

3. Create the logical volumes

The -n option names the logical volume and -l gives its size as a number of PEs. The command below creates juan1 in the hehe volume group with 30 PEs, that is, 30 × 4 MiB = 120 MiB.

[root@superwu10 ~]# lvcreate -n juan1 -l 30 hehe

Logical volume "juan1" created.

[root@superwu10 ~]# lvdisplay    # show logical volume information

--- Logical volume ---

LV Path /dev/hehe/juan1

LV Name juan1

VG Name hehe

LV UUID 9e0cRI-T9RO-PbBC-zQWd-Bfi2-rVUx-MPnrIW

LV Write Access read/write

LV Creation host, time superwu10.10, 2022-02-10 06:57:31 +0800

LV Status available

# open 0

LV Size 120.00 MiB

Current LE 30

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/rhel/swap

LV Name swap

VG Name rhel

LV UUID 620uVZ-3mFM-BfSd-G2D7-H6QI-JmZi-nfEeWg

LV Write Access read/write

LV Creation host, time superwu10.10, 2022-01-10 03:42:19 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/rhel/root

LV Name root

VG Name rhel

LV UUID T0O43Y-pZqz-pKJH-BTI7-lChV-0RMU-iXS0UC

LV Write Access read/write

LV Creation host, time superwu10.10, 2022-01-10 03:42:19 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

The LV Path shows that the logical volume lives under its volume group, and the LV Size of 120.00 MiB matches the 30 PEs requested.

The -L option sets the size directly. A size that is not a multiple of the 4 MiB PE size is rounded up to the next multiple:

[root@superwu10 ~]# lvcreate -n ceshi2 -L 150M hehe

Rounding up size to full physical extent 152.00 MiB

Logical volume "ceshi2" created.
[root@superwu10 ~]# lvdisplay --- Logical volume --- LV Path /dev/hehe/juan1 LV Name juan1 VG Name hehe LV UUID 9e0cRI-T9RO-PbBC-zQWd-Bfi2-rVUx-MPnrIW LV Write Access read/write LV Creation host, time superwu10.10, 2022-02-10 06:57:31 +0800 LV Status available # open 0 LV Size 120.00 MiB Current LE 30 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 8192 Block device 253:2 --- Logical volume --- LV Path /dev/hehe/ceshi2 LV Name ceshi2 VG Name hehe LV UUID k5jQcY-vC37-24XV-CtT5-28H4-zH6Z-ResmXr LV Write Access read/write LV Creation host, time superwu10.10, 2022-02-10 06:58:56 +0800 LV Status available # open 0 LV Size 152.00 MiB Current LE 38 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 8192 Block device 253:3 --- Logical volume --- LV Path /dev/rhel/swap LV Name swap VG Name rhel LV UUID 620uVZ-3mFM-BfSd-G2D7-H6QI-JmZi-nfEeWg LV Write Access read/write LV Creation host, time superwu10.10, 2022-01-10 03:42:19 +0800 LV Status available # open 2 LV Size 2.00 GiB Current LE 512 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 8192 Block device 253:1 --- Logical volume --- LV Path /dev/rhel/root LV Name root VG Name rhel LV UUID T0O43Y-pZqz-pKJH-BTI7-lChV-0RMU-iXS0UC LV Write Access read/write LV Creation host, time superwu10.10, 2022-01-10 03:42:19 +0800 LV Status available # open 1 LV Size <17.00 GiB Current LE 4351 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 8192 Block device 253:0

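4. Format the logical volume

XFS is not recommended on logical volumes in this walkthrough. XFS can already grow a file system on its own with the xfs_growfs command, which is less flexible than LVM but good enough for many cases, and it does not support the shrink operation shown later in this section. The examples here therefore use ext4.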

[root@superwu10 ~]# mkfs.ext4 /dev/hehe/juan1

mke2fs 1.44.3 (10-July-2018)

Creating filesystem with 122880 1k blocks and 30720 inodes

Filesystem UUID: 76a02fab-9c6c-4416-a82d-82f0d8e681a5

Superblock backups stored on blocks:

8193, 24577, 40961, 57345, 73729

Allocating group tables: done

Writing inode tables: done

Creating journal (4096 blocks): done

Writing superblocks and filesystem accounting information: done

5. Mount and use the volume

[root@superwu10 ~]# mkdir /opt/juan

[root@superwu10 ~]# mount /dev/hehe/juan1 /opt/juan/

[root@superwu10 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 4.2G 13G 25% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 153M 862M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/mapper/hehe-juan1 113M 1.6M 103M 2% /opt/juan

[root@superwu10 ~]# echo "/dev/hehe/juan1 /opt/juan ext4 defaults 0 0" >> /etc/fstab

[root@superwu10 ~]# cd /opt/juan/

[root@superwu10 juan]# ll

total 12

drwx------. 2 root root 12288 Feb 10 07:13 lost+found

[root@superwu10 juan]# touch test.txt

[root@superwu10 juan]# ll

total 13

drwx------. 2 root root 12288 Feb 10 07:13 lost+found

-rw-r--r--. 1 root root 0 Feb 10 07:19 test.txt

Extending a logical volume

Users of a storage device do not see its underlying structure and layout, and do not need to care how many disks sit beneath it. As long as the volume group has enough free space, a logical volume can keep growing. Remember to unmount the device from its mount point before extending it.

1. Unmount the device

[root@superwu ~]# umount /opt/data1

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

2. Extend the logical volume

[root@superwu ~]# lvextend -L 250M /dev/hehe/juan1    # extend the logical volume to 250 MiB

Rounding size to boundary between physical extents: 252.00 MiB.

Size of logical volume hehe/juan1 changed from 120.00 MiB (30 extents) to 252.00 MiB (63 extents).

Logical volume hehe/juan1 successfully resized.

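3. Check the integrity of the file system to confirm that the directory structure and file contents are intact. No errors in the output means everything is fine.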

[root@superwu ~]# e2fsck -f /dev/hehe/juan1

e2fsck 1.44.3 (10-July-2018)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/hehe/juan1: 12/30720 files (0.0% non-contiguous), 9530/122880 blocks

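4. Resize the file system. So far only the LV (logical volume) has been extended; the file system on it still has its old size, so it must be grown manually to fill the volume.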

[root@superwu ~]# resize2fs /dev/hehe/juan1

resize2fs 1.44.3 (10-July-2018)

Resizing the filesystem on /dev/hehe/juan1 to 258048 (1k) blocks.

The filesystem on /dev/hehe/juan1 is now 258048 (1k) blocks long.

5. Mount the device again

[root@superwu ~]# mount -a

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/mapper/hehe-juan1 240M 2.1M 222M 1% /opt/data1

[root@superwu ~]# lvdisplay

--- Logical volume ---

LV Path /dev/hehe/juan1

LV Name juan1

VG Name hehe

LV UUID KvHDJu-CMPo-uMwK-56B0-dMWB-hONK-OvhUxH

LV Write Access read/write

LV Creation host, time superwu.10, 2022-02-10 16:42:58 +0800

LV Status available

# open 1

LV Size 252.00 MiB

Current LE 63

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/rhel/swap

LV Name swap

VG Name rhel

LV UUID 5r17mN-Xzxt-YbDv-fg9m-9JT1-AtsD-K6h5yZ

LV Write Access read/write

LV Creation host, time superwu.10, 2022-01-11 16:26:54 +0800

LV Status available

# open 2

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:1

--- Logical volume ---

LV Path /dev/rhel/root

LV Name root

VG Name rhel

LV UUID Ng4387-Ok4t-nWlF-zhtl-ahge-12Le-sk4mri

LV Write Access read/write

LV Creation host, time superwu.10, 2022-01-11 16:26:54 +0800

LV Status available

# open 1

LV Size <17.00 GiB

Current LE 4351

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:0

The LV Size of juan1 confirms that the volume has been extended to 252 MiB.

Shrinking a logical volume

Shrinking carries a risk of data loss, so back up the data first.

Before shrinking an LVM logical volume, always check the integrity of its file system.

1. Unmount the logical volume

[root@superwu ~]# umount /opt/data1

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

2. Check the integrity of the file system

[root@superwu ~]# e2fsck -f /dev/hehe/juan1

e2fsck 1.44.3 (10-July-2018)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

/dev/hehe/juan1: 12/65536 files (0.0% non-contiguous), 14432/258048 blocks

3. Shrink the file system to the target size

[root@superwu ~]# resize2fs /dev/hehe/juan1 150M    # shrink the file system to 150 MiB

resize2fs 1.44.3 (10-July-2018)

Resizing the filesystem on /dev/hehe/juan1 to 153600 (1k) blocks.

The filesystem on /dev/hehe/juan1 is now 153600 (1k) blocks long.

4. Reduce the size of the logical volume

Use the same size that was given to resize2fs in the previous step; the logical volume must never end up smaller than the file system on it. Here lvreduce rounds 150 MiB up to 152 MiB, a whole number of PEs, and asks for confirmation before proceeding.

[root@superwu ~]# lvreduce -L 150M /dev/hehe/juan1

Rounding size to boundary between physical extents: 152.00 MiB.

WARNING: Reducing active logical volume to 152.00 MiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce hehe/juan1? [y/n]: y

Size of logical volume hehe/juan1 changed from 252.00 MiB (63 extents) to 152.00 MiB (38 extents).

Logical volume hehe/juan1 successfully resized.

5. Mount and use the logical volume

[root@superwu ~]# mount -a

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/mapper/hehe-juan1 142M 1.6M 130M 2% /opt/data1

[root@superwu ~]# lvdisplay

--- Logical volume ---

LV Path /dev/hehe/juan1

LV Name juan1

VG Name hehe

LV UUID KvHDJu-CMPo-uMwK-56B0-dMWB-hONK-OvhUxH

LV Write Access read/write

LV Creation host, time superwu.10, 2022-02-10 16:42:58 +0800

LV Status available

# open 1

LV Size 152.00 MiB

Current LE 38

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

The logical volume is now 152 MiB, the requested 150 MiB rounded up to a whole number of PEs.
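The order of the two shrink steps matters because the logical volume must stay at least as large as the file system it holds. A sketch of that check, using the block count that resize2fs prints and the size that lvreduce reports; the function name lv_covers_filesystem is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a logical volume of lv_mib MiB is large
# enough to hold a file system of fs_blocks blocks of block_bytes bytes each.
lv_covers_filesystem() {
    local fs_blocks=$1 block_bytes=$2 lv_mib=$3
    local fs_bytes=$(( fs_blocks * block_bytes ))
    local lv_bytes=$(( lv_mib * 1024 * 1024 ))
    [ "$lv_bytes" -ge "$fs_bytes" ]
}

# Values from this section: 153600 1k blocks after resize2fs, 152 MiB after lvreduce.
if lv_covers_filesystem 153600 1024 152; then
    echo "safe: the volume still covers the file system"
fi

# Reducing the volume before shrinking the 258048-block file system would not be safe.
if ! lv_covers_filesystem 258048 1024 152; then
    echo "unsafe: the file system would be truncated"
fi
```

The second case is what would happen if lvreduce were run first, which is why the file system is shrunk before the volume.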

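Logical volume snapshots

LVM also provides snapshot volumes, a feature similar to the restore points of virtual machine software. For example, you can take a snapshot of a logical volume and, if the data is later changed by mistake, overwrite the volume with the snapshot to restore it. LVM snapshots have two characteristics:

The snapshot volume should be the same size as the logical volume, so that it cannot fill up however much of the original volume changes;

A snapshot is valid only once: it is deleted automatically as soon as the restore has been performed.

1. Check the size of the logical volume to be backed up and whether the volume group has enough free space.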

[root@superwu ~]# lvdisplay --- Logical volume --- LV Path /dev/hehe/juan1 LV Name juan1 VG Name hehe LV UUID KvHDJu-CMPo-uMwK-56B0-dMWB-hONK-OvhUxH LV Write Access read/write LV Creation host, time superwu.10, 2022-02-10 16:42:58 +0800 LV Status available # open 1 LV Size 152.00 MiB Current LE 38 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 8192 Block device 253:2 [root@superwu data1]# vgdisplay --- Volume group --- VG Name hehe System ID Format lvm2 Metadata Areas 2 Metadata Sequence No 4 VG Access read/write VG Status resizable MAX LV 0 Cur LV 1 Open LV 1 Max PV 0 Cur PV 2 Act PV 2 VG Size 39.99 GiB PE Size 4.00 MiB Total PE 10238 Alloc PE / Size 38 / 152.00 MiB Free PE / Size 10200 / 39.84 GiB VG UUID jW63WM-Xw4t-dMIc-if0Y-6oPN-Rdbh-qloflg
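Whether the volume group has room for the snapshot can be worked out from the Free PE and PE Size values that vgdisplay reports. A sketch of that check; the function name snapshot_fits is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a snapshot of snapshot_mib MiB fits into
# free_pe free extents of pe_mib MiB each (size rounded up to whole extents).
snapshot_fits() {
    local free_pe=$1 pe_mib=$2 snapshot_mib=$3
    local needed_pe=$(( (snapshot_mib + pe_mib - 1) / pe_mib ))
    [ "$needed_pe" -le "$free_pe" ]
}

# Values from the vgdisplay output above: 10200 free PEs of 4 MiB each,
# and a 150 MiB snapshot request for the 152 MiB logical volume.
if snapshot_fits 10200 4 150; then
    echo "enough free extents for the snapshot"
fi
```

A 150 MiB snapshot needs 38 extents, far fewer than the 10200 that are free here.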

2. Create the snapshot

The -s option creates a snapshot, here of the juan1 logical volume, with the same size as the logical volume.

[root@superwu data1]# lvcreate -s -n hehekuaizhao -L 150M /dev/hehe/juan1

Rounding up size to full physical extent 152.00 MiB

Logical volume "hehekuaizhao" created.

[root@superwu data1]# lvdisplay

--- Logical volume ---

LV Path /dev/hehe/juan1

LV Name juan1

VG Name hehe

LV UUID KvHDJu-CMPo-uMwK-56B0-dMWB-hONK-OvhUxH

LV Write Access read/write

LV Creation host, time superwu.10, 2022-02-10 16:42:58 +0800

LV snapshot status source of

hehekuaizhao [active]

LV Status available

# open 1

LV Size 152.00 MiB

Current LE 38

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/hehe/hehekuaizhao

LV Name hehekuaizhao

VG Name hehe

LV UUID qfyr6Y-lg1h-9Hs1-G5VQ-9svJ-kDie-NS1v67

LV Write Access read/write

LV Creation host, time superwu.10, 2022-02-10 17:33:12 +0800

LV snapshot status active destination for juan1

LV Status available

# open 0

LV Size 152.00 MiB

Current LE 38

COW-table size 152.00 MiB

COW-table LE 38

Allocated to snapshot 0.01%

Snapshot chunk size 4.00 KiB

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

The "LV snapshot status" lines show that juan1 is the source of the snapshot hehekuaizhao, and that hehekuaizhao was created from juan1.

3. Restoring a snapshot

The lvconvert command is used to manage logical volume snapshots. Its syntax is "lvconvert [options] snapshot-volume-name".

[root@superwu ~]# cd /opt/data1 # change the contents of the LVM logical volume

[root@superwu data1]# touch ceshi1

[root@superwu data1]# touch ceshi2

[root@superwu data1]# touch ceshi3

[root@superwu data1]# ll

total 17

-rw-r--r--. 1 root root 0 Feb 10 17:36 ceshi1

-rw-r--r--. 1 root root 0 Feb 10 17:36 ceshi2

-rw-r--r--. 1 root root 0 Feb 10 17:36 ceshi3

-rw-r--r--. 1 root root 10 Feb 10 17:30 hehe

drwx------. 2 root root 12288 Feb 10 16:44 lost+found

[root@superwu data1]# cd ~

Before a snapshot can be restored, the LVM logical volume must be unmounted.

[root@superwu ~]# umount /opt/data1 # unmount the logical volume

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

[root@superwu ~]# lvconvert --merge /dev/hehe/hehekuaizhao # roll the logical volume back to the hehekuaizhao snapshot

Merging of volume hehe/hehekuaizhao started.

hehe/juan1: Merged: 100.00%

[root@superwu ~]# mount -a # remount the logical volume

[root@superwu ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 969M 0 969M 0% /dev

tmpfs 984M 0 984M 0% /dev/shm

tmpfs 984M 9.6M 974M 1% /run

tmpfs 984M 0 984M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 17G 3.9G 14G 23% /

/dev/sr0 6.7G 6.7G 0 100% /media/cdrom

/dev/sda1 1014M 152M 863M 15% /boot

tmpfs 197M 16K 197M 1% /run/user/42

tmpfs 197M 3.5M 194M 2% /run/user/0

/dev/mapper/hehe-juan1 142M 1.6M 130M 2% /opt/data1

[root@superwu ~]# cd /opt/data1/

[root@superwu data1]# ll

total 14

-rw-r--r--. 1 root root 10 Feb 10 17:30 hehe # the volume is back in its snapshot state; ceshi1, ceshi2, and ceshi3 are gone

drwx------. 2 root root 12288 Feb 10 16:44 lost+found

[root@superwu data1]# lvdisplay # a snapshot is deleted automatically once it has been merged, so each snapshot can be used only once

--- Logical volume ---

LV Path /dev/hehe/juan1

LV Name juan1

VG Name hehe

LV UUID KvHDJu-CMPo-uMwK-56B0-dMWB-hONK-OvhUxH

LV Write Access read/write

LV Creation host, time superwu.10, 2022-02-10 16:42:58 +0800

LV Status available

# open 1

LV Size 152.00 MiB

Current LE 38

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2
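The restore procedure above comes down to three commands in a fixed order: unmount, merge, remount. The following is a minimal dry-run sketch of that sequence, not a definitive script. The run and restore_snapshot helpers and the DRY_RUN variable are assumptions introduced for illustration; by default the commands are only printed, and nothing touches the system unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# DRY_RUN, run, and restore_snapshot are hypothetical names for this sketch.
# With DRY_RUN=1 (the default) each command is printed instead of executed.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Unmount the volume, merge the snapshot back into its origin, then
# remount everything listed in /etc/fstab. Stop at the first failure.
restore_snapshot() {
    mount_point=$1
    snapshot=$2
    run umount "$mount_point" || return 1
    run lvconvert --merge "$snapshot" || return 1
    run mount -a
}

restore_snapshot /opt/data1 /dev/hehe/hehekuaizhao
```

Stopping at the first failure matters here: if umount fails because the mount point is still in use, the merge should not be attempted on a mounted volume.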

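Deleting a logical volume

Deleting a logical volume must follow a strict order that cannot be reversed: remove the logical volume first, then the volume group, and finally the physical volumes.

1. Unmount the logical volume and remove its automatic-mount entry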

[root@superwu ~]# umount /opt/data1

[root@superwu ~]# vim /etc/fstab

[root@superwu ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jan 11 03:26:57 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d7f53471-c95f-44f2-aafe-f86bd5ecebd7 /boot xfs defaults 0 0

/dev/mapper/rhel-swap swap swap defaults 0 0

/dev/cdrom /media/cdrom iso9660 defaults 0 0
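The transcript edits /etc/fstab interactively with vim. As a non-interactive alternative, the entry can be filtered out by mount point. The sketch below works on a scratch copy rather than the real file. The remove_fstab_entry helper is hypothetical, and the juan1 line in the sample data is an assumption, since the original entry is not shown in the output above.

```shell
#!/bin/sh
# remove_fstab_entry is a hypothetical helper for this sketch.
# It prints the given fstab file without the entry for one mount point.
remove_fstab_entry() {
    fstab_file=$1
    mount_point=$2
    # Keep comment lines and every line whose second field differs
    # from the mount point being removed.
    awk -v mp="$mount_point" '/^[[:space:]]*#/ || $2 != mp' "$fstab_file"
}

# Scratch copy so the real /etc/fstab is never touched.
scratch=$(mktemp)
cat > "$scratch" <<'EOF'
/dev/mapper/rhel-root / xfs defaults 0 0
/dev/hehe/juan1 /opt/data1 ext4 defaults 0 0
/dev/cdrom /media/cdrom iso9660 defaults 0 0
EOF
# The juan1 line above is assumed sample data, not taken from the source.

remove_fstab_entry "$scratch" /opt/data1
rm -f "$scratch"
```

Matching on the second field rather than grepping for the device name avoids accidentally dropping lines that only mention the path in a comment.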

2. Delete the logical volume

[root@superwu ~]# lvremove /dev/hehe/juan1

Do you really want to remove active logical volume hehe/juan1? [y/n]: y # a second confirmation is required

Logical volume "juan1" successfully removed

3. Delete the volume group

[root@superwu ~]# vgremove hehe # the volume group name alone is enough; no full path is needed

Volume group "hehe" successfully removed

4. Delete the physical volumes

[root@superwu ~]# pvremove /dev/sdb /dev/sdc

Labels on physical volume "/dev/sdb" successfully wiped.

Labels on physical volume "/dev/sdc" successfully wiped.

After deletion, run the lvdisplay, vgdisplay, and pvdisplay commands to confirm that the removal succeeded.
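Because the removal order must not be reversed, it can help to write the plan out before running anything. The sketch below only prints the commands in the order used in this section and executes nothing. The teardown_plan helper is a hypothetical name introduced for illustration, and the /etc/fstab cleanup from step 1 still has to be done separately.

```shell
#!/bin/sh
# teardown_plan is a hypothetical helper for this sketch. It prints the
# removal commands in the required order and does not run any of them.
# Arguments: mount point, volume group, logical volume, then the PV devices.
teardown_plan() {
    mount_point=$1
    vg=$2
    lv=$3
    shift 3
    echo "umount $mount_point"
    echo "lvremove /dev/$vg/$lv"   # logical volume first
    echo "vgremove $vg"            # then the volume group
    echo "pvremove $*"             # physical volumes last
}

teardown_plan /opt/data1 hehe juan1 /dev/sdb /dev/sdc
```

For the volumes in this section the printed plan is the same four commands shown in steps 1 through 4, which makes it easy to review the order before typing them by hand.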