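Copyright notice: this is an original article by the blogger. Please support original work; when reposting, include a link to the original source and this notice.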

Article URL: https://www.cnblogs.com/wannengachao/p/13821812.html

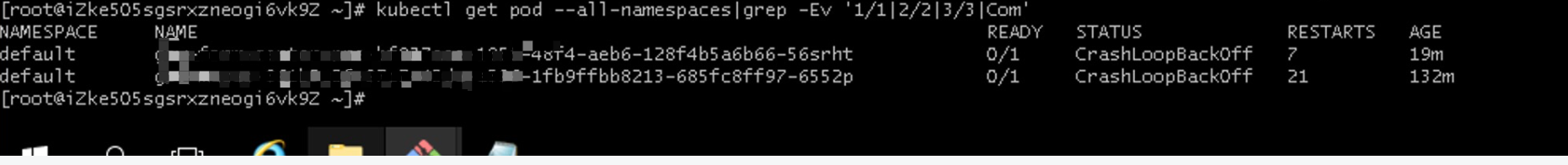

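A Pod deployed on Kubernetes stayed in the CrashLoopBackOff state. Many different problems can lead to this state, and mine is only one of them, but the troubleshooting approach is much the same in each case.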

1. On a master node of the k8s cluster, list the Pods that are neither fully ready nor Completed: kubectl get pod --all-namespaces|grep -Ev '1/1|2/2|3/3|Com'
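The grep pattern in step 1 only recognizes Pods with up to three containers, so a healthy 4/4 Pod would still be listed. A minimal sketch of a more general filter follows, assuming the default column layout of `kubectl get pod --all-namespaces` (READY in column 3, STATUS in column 4). The function name `filter_unhealthy` is hypothetical and not part of kubectl.

```shell
# Hypothetical helper: reads `kubectl get pod --all-namespaces` output on stdin
# and keeps the header plus every Pod whose READY count is incomplete,
# skipping Pods whose STATUS is Completed.
filter_unhealthy() {
  awk '
    NR == 1 { print; next }            # keep the header row
    { split($3, ready, "/") }          # READY column, e.g. "0/1"
    ready[1] != ready[2] && $4 != "Completed" { print }
  '
}
```

Usage: kubectl get pod --all-namespaces | filter_unhealthy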

2. Run kubectl describe pod $pod_name. The output is shown below:

Events:
Type     Reason     Age                    From               Message
----     ------     ----                   ----               -------
Normal   Scheduled  26m                    default-scheduler  Successfully assigned default/cov-test-center-pre-bf837aae-1855-48f4-aeb6-128f4b5a6b66-56srht to cn-macau-macauproject-d01.i-ke505sgsrxzq29iemkmn
Normal   Started    22m (x4 over 26m)      kubelet            Started container
Normal   Pulling    21m (x5 over 26m)      kubelet            pulling image "cr.registry.acloud.intestac.cov.mo/zwzt_pre/cov-test-center:1.0.9"
Normal   Pulled     21m (x5 over 26m)      kubelet            Successfully pulled image "cr.registry.acloud.intestac.cov.mo/zwzt_pre/cov-test-center:1.0.9"
Normal   Created    21m (x5 over 26m)      kubelet            Created container
Warning  BackOff    6m16s (x63 over 24m)   kubelet            Back-off restarting failed container

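The events above do not reveal much. The part of the describe output below shows the cause of the problem: the Pod has no limits or requests set, and every value is "0".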

limits:
  cpu: "0"
  memory: "0"
requests:
  cpu: "0"
  memory: "0"

3. Run kubectl get rs $rs_name -oyaml, where $rs_name is the ReplicaSet that owns the failing Pod.

This confirms that limits and requests are indeed not set:

resources:
  limits:
    cpu: "0"
    memory: "0"
  requests:
    cpu: "0"
    memory: "0"
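The checks in steps 2 and 3 can be narrowed to the resources field alone, which avoids scanning the full describe or YAML output. The sketch below uses kubectl's JSONPath output to do that. The function names `pod_resources` and `rs_resources` and the `KUBECTL` override variable are hypothetical helpers, and the exact formatting of the printed resources map may vary between kubectl versions.

```shell
# KUBECTL can be overridden (for example for a dry run); defaults to kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# Print "<container name>: <resources>" for each container of a Pod.
# $1 = Pod name, $2 = namespace (assumed to be "default" when omitted)
pod_resources() {
  "$KUBECTL" -n "${2:-default}" get pod "$1" \
    -o jsonpath='{range .spec.containers[*]}{.name}{": "}{.resources}{"\n"}{end}'
}

# Same check against the Pod template of the owning ReplicaSet.
# $1 = ReplicaSet name, $2 = namespace (assumed to be "default" when omitted)
rs_resources() {
  "$KUBECTL" -n "${2:-default}" get rs "$1" \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.name}{": "}{.resources}{"\n"}{end}'
}
```

Usage: pod_resources $pod_name, then rs_resources $rs_name. If both print zero or empty limits and requests, the Pod template itself lacks the settings.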

4. Fix: set limits and requests, then redeploy.
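One possible way to apply the fix from the command line is `kubectl set resources`. This is only a sketch: it assumes the ReplicaSet is managed by a Deployment (the original does not say), and the CPU and memory values are placeholders that should be sized for the actual workload. The function name `set_resources` and the `KUBECTL` override variable are hypothetical.

```shell
# KUBECTL can be overridden (for example for a dry run); defaults to kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# Set requests and limits on every container of a Deployment.
# $1 = Deployment name, $2 = namespace (assumed to be "default" when omitted)
# The values below are placeholders, not recommendations.
set_resources() {
  "$KUBECTL" -n "${2:-default}" set resources deployment "$1" \
    --requests=cpu=250m,memory=256Mi \
    --limits=cpu=500m,memory=512Mi
}
```

Usage: set_resources $deployment_name. Changing the Pod template this way makes the Deployment roll out new Pods, which covers the redeploy part of the fix.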