Load balancing functional verification

References:

https://www.sdnlab.com/19245.html

https://www.jianshu.com/p/836f80e4cf4d

OVN implements a simple load balancer (LB) using flow tables:

- Supports SCTP, TCP, and UDP;

- Supports health checks;

- Supports hashing on selected fields (eth_src, eth_dst, ip_dst, ip_src, tp_src, tp_dst), with dp_hash as the default;

- OVN schedules traffic across backends with an OpenFlow group of type `select`.

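In other words, this is an L3/L4 LB with a simple scheduling algorithm; it implements neither L7 load balancing nor other scheduling algorithms.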
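The hash-based selection listed above can be pictured as: hash the chosen header fields, then reduce the hash to a bucket index. The Bash sketch below is a toy model, not OVS code: `pick_backend` is a hypothetical helper, `cksum` stands in for the hash function OVS actually uses, and bucket weights are ignored.

```shell
#!/usr/bin/env bash
# Toy sketch (not OVS code): choose a backend by hashing the selected header
# fields and reducing the hash modulo the number of buckets. cksum is only a
# stand-in hash; OVS uses its own hash functions and bucket weights.

# pick_backend FIELDS BACKEND...
#   FIELDS is the concatenation of the header fields chosen for hashing,
#   e.g. "2.1.1.10" for ip_src only, or "2.1.1.10|40000" for ip_src+tp_src.
pick_backend() {
  local fields=$1 h target i=0 b
  shift
  [ "$#" -gt 0 ] || return 1          # no backends, nothing to choose
  h=$(printf '%s' "$fields" | cksum | cut -d' ' -f1)
  target=$((h % $#))                  # bucket index in [0, number of backends)
  for b in "$@"; do
    if [ "$i" -eq "$target" ]; then
      echo "$b"
      return 0
    fi
    i=$((i + 1))
  done
}
```

With only `ip_src` in the key, every request from 2.1.1.10 maps to the same backend, e.g. `pick_backend 2.1.1.10 1.1.1.100:8000 1.1.1.200:8000`; adding a per-connection field such as `tp_src` to the key lets one client's connections spread across backends.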

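What dp_hash and fields_hash mean:

- dp_hash: the datapath hash can be thought of as per-packet hashing. The hash value is computed by the datapath, and a bucket is chosen from that result for each packet. Because a hash has to be computed for every packet, it costs more CPU;

- fields_hash: Open vSwitch userspace (ovs-vswitchd) hashes the selected fields to choose a bucket and then installs a flow in the datapath; subsequent packets match that installed flow directly in the datapath. Think of it as per-flow hashing: the fields used in the hash are exactly the fields the installed flow matches on, and all other fields are masked out. With many flows, the datapath ends up holding many installed flows, but the hashing work is small because it is done only for the first packet of each flow.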
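A toy Bash model of the two behaviours above, counting how many hash computations each performs. Everything here is an assumption for illustration: `cksum` is the stand-in hash, the `flow_cache` string plays the role of flows installed in the datapath, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Toy model only (not OVS code): count how often a hash is computed under
# the two schemes. cksum stands in for the real hash, and the flow_cache
# string stands in for flows installed in the datapath.

hash_calls=0   # number of hash computations performed
BUCKET=        # result of the last hash_to_bucket call
flow_cache=    # newline-separated "key bucket" entries (keys without spaces)

# hash_to_bucket KEY NBUCKETS: set BUCKET and count the computation
hash_to_bucket() {
  hash_calls=$((hash_calls + 1))
  local h
  h=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
  BUCKET=$((h % $2))
}

# flow_lookup KEY: print the cached bucket for KEY, or nothing on a miss
flow_lookup() {
  printf '%s\n' "$flow_cache" | awk -v k="$1" '$1 == k { print $2; exit }'
}

# per_packet NBUCKETS KEY...: "dp_hash" style, hash every packet
per_packet() {
  local n=$1 key
  shift
  for key in "$@"; do
    hash_to_bucket "$key" "$n"
  done
}

# per_flow NBUCKETS KEY...: "fields hash" style, hash only the first packet
# of each flow, then reuse the cached entry for later packets
per_flow() {
  local n=$1 key
  shift
  for key in "$@"; do
    if [ -z "$(flow_lookup "$key")" ]; then
      hash_to_bucket "$key" "$n"
      flow_cache="$flow_cache
$key $BUCKET"
    fi
  done
}
```

For the packet sequence `a a a b b a` (two flows), `per_packet 2 a a a b b a` performs six hash computations, while `per_flow 2 a a a b b a` on an empty cache performs two, one per flow.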

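Where the LB is configured

- Logical switch LB: scheduling and encapsulation happen on the hypervisor hosting the client VM that uses the LB, so it is distributed

- Logical router LB: runs on the gateway, so it is centralized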

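Load balancing in OVN can be applied to a logical switch or to a logical router. Which one to choose depends on your requirements, and each approach comes with caveats.

When applying it to a logical router, keep the following in mind:

(1) Load balancing can only be applied to a "centralized" router (i.e. a gateway router).

(2) Caveat (1) already implies that load balancing on a router is a non-distributed service.

When applying it to a logical switch, keep the following in mind:

(1) Load balancing is "distributed", since it is applied on potentially many OVS hosts.

(2) Load balancing on a logical switch is evaluated only on traffic entering from a VIF (virtual interface). This means it must be applied to the "client" logical switch, not to the "server" logical switch.

(3) Because of caveat (2), depending on the scale of your design you may need to apply load balancing to multiple logical switches.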
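Following caveat (3), the same LB can be attached to every switch that hosts clients. A minimal sketch, assuming a hypothetical second client switch `ls3`; `run` and `attach_lb_to_switches` are illustrative helpers, and `DRY_RUN=1` prints the ovn-nbctl commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch: attach one LB to several client logical switches.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# attach_lb_to_switches LB SWITCH...
attach_lb_to_switches() {
  local lb=$1 ls
  shift
  for ls in "$@"; do
    run ovn-nbctl ls-lb-add "$ls" "$lb"
  done
}
```

For example, `DRY_RUN=1 attach_lb_to_switches lb1 ls2 ls3` prints one `ovn-nbctl ls-lb-add` line per switch; drop `DRY_RUN=1` on the master node to apply it.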

Configuring load balancing on a logical switch (the LB must be attached to the clients' logical switch, not the servers')

# Run all ovn-nbctl commands on the master node

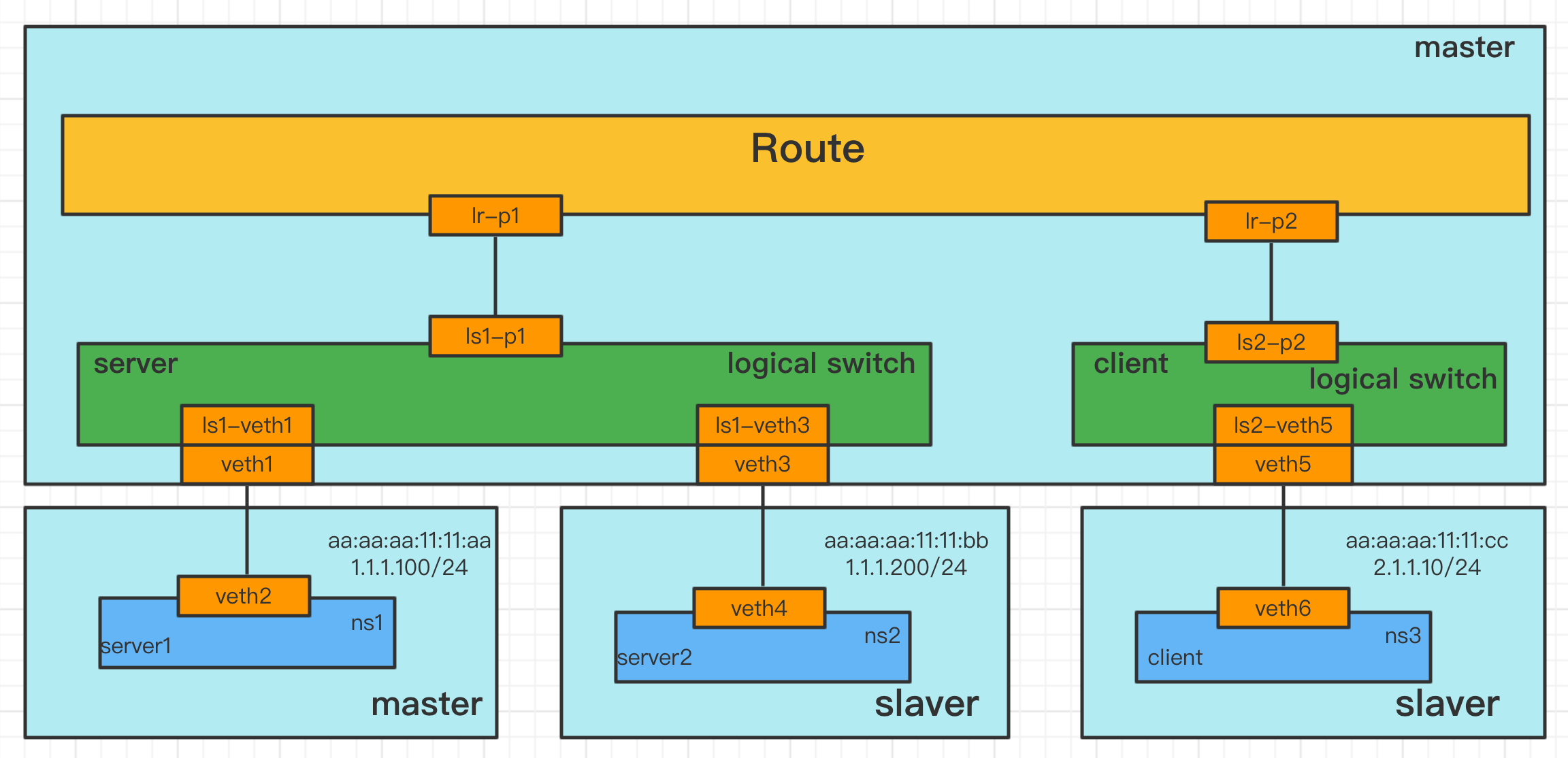

# Configure the logical switches and logical ports

```shell
# Create the logical switches
ovn-nbctl ls-add ls1
ovn-nbctl ls-add ls2

# Create logical port ls1-veth1
ovn-nbctl lsp-add ls1 ls1-veth1
ovn-nbctl lsp-set-addresses ls1-veth1 "aa:aa:aa:11:11:aa 1.1.1.100"
ovn-nbctl lsp-set-port-security ls1-veth1 aa:aa:aa:11:11:aa

# Create logical port ls1-veth3
ovn-nbctl lsp-add ls1 ls1-veth3
ovn-nbctl lsp-set-addresses ls1-veth3 "aa:aa:aa:11:11:bb 1.1.1.200"
ovn-nbctl lsp-set-port-security ls1-veth3 aa:aa:aa:11:11:bb

# Create logical port ls2-veth5
ovn-nbctl lsp-add ls2 ls2-veth5
ovn-nbctl lsp-set-addresses ls2-veth5 "aa:aa:aa:11:11:cc 2.1.1.10"
ovn-nbctl lsp-set-port-security ls2-veth5 aa:aa:aa:11:11:cc
```

# Configure the logical router and its ports (all on the master node)

```shell
# Create the logical router
ovn-nbctl lr-add r1

# Create the logical router ports
ovn-nbctl lrp-add r1 r1-p1 00:00:00:00:10:00 1.1.1.1/24
ovn-nbctl lrp-add r1 r1-p2 00:00:00:00:20:00 2.1.1.1/24

# Create logical switch ports and attach them to the router ports
ovn-nbctl lsp-add ls1 ls1-p1
ovn-nbctl lsp-add ls2 ls2-p2
ovn-nbctl lsp-set-type ls1-p1 router
ovn-nbctl lsp-set-type ls2-p2 router
ovn-nbctl lsp-set-addresses ls1-p1 "00:00:00:00:10:00 1.1.1.1"
ovn-nbctl lsp-set-addresses ls2-p2 "00:00:00:00:20:00 2.1.1.1"
ovn-nbctl lsp-set-options ls1-p1 router-port=r1-p1
ovn-nbctl lsp-set-options ls2-p2 router-port=r1-p2
```

# Check the configuration

```
[root@master ~]# ovn-nbctl show
switch d0c5ff9c-e1fa-4a37-a986-eb54b44544d2 (ls1)
    port ls1-p1
        type: router
        addresses: ["00:00:00:00:10:00 1.1.1.1"]
        router-port: r1-p1
    port ls1-veth3
        addresses: ["aa:aa:aa:11:11:bb 1.1.1.200"]
    port ls1-veth1
        addresses: ["aa:aa:aa:11:11:aa 1.1.1.100"]
switch b40260fb-befe-4cee-9a00-b803c6ef66ed (ls2)
    port ls2-veth5
        addresses: ["aa:aa:aa:11:11:cc 2.1.1.10"]
    port ls2-p2
        type: router
        addresses: ["00:00:00:00:20:00 2.1.1.1"]
        router-port: r1-p2
router cfa5807d-4acd-4428-8ddc-11e774d93dad (r1)
    port r1-p1
        mac: "00:00:00:00:10:00"
        networks: ["1.1.1.1/24"]
    port r1-p2
        mac: "00:00:00:00:20:00"
        networks: ["2.1.1.1/24"]
```

# Configure the network namespaces

```shell
# master: server1
ip netns add ns1
ip link add veth1 type veth peer name veth2
ifconfig veth1 up
ifconfig veth2 up
ip link set veth2 netns ns1
ip netns exec ns1 ip link set veth2 address aa:aa:aa:11:11:aa
ip netns exec ns1 ip addr add 1.1.1.100/24 dev veth2
ip netns exec ns1 ip link set veth2 up
ip netns exec ns1 ip r add default via 1.1.1.1
ovs-vsctl add-port br-int veth1
ovs-vsctl set Interface veth1 external_ids:iface-id=ls1-veth1
ip netns exec ns1 ip addr show

# slaver: server2
ip netns add ns2
ip link add veth3 type veth peer name veth4
ifconfig veth3 up
ifconfig veth4 up
ip link set veth4 netns ns2
ip netns exec ns2 ip link set veth4 address aa:aa:aa:11:11:bb
ip netns exec ns2 ip addr add 1.1.1.200/24 dev veth4
ip netns exec ns2 ip link set veth4 up
ip netns exec ns2 ip r add default via 1.1.1.1
ovs-vsctl add-port br-int veth3
ovs-vsctl set Interface veth3 external_ids:iface-id=ls1-veth3
ip netns exec ns2 ip addr show

# slaver: client
ip netns add ns3
ip link add veth5 type veth peer name veth6
ifconfig veth5 up
ifconfig veth6 up
ip link set veth6 netns ns3
ip netns exec ns3 ip link set veth6 address aa:aa:aa:11:11:cc
ip netns exec ns3 ip addr add 2.1.1.10/24 dev veth6
ip netns exec ns3 ip link set veth6 up
ip netns exec ns3 ip r add default via 2.1.1.1
ovs-vsctl add-port br-int veth5
ovs-vsctl set Interface veth5 external_ids:iface-id=ls2-veth5
ip netns exec ns3 ip addr show
```
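The three namespace blocks above repeat the same steps with different names and addresses. A hypothetical helper `add_ns_port` that folds them into one function could look like the sketch below; it uses `ip link set ... up` in place of `ifconfig`, must run as root on the node that should host the port, and with `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: create a namespace, a veth pair, and bind the host end to an
# OVN logical port. With DRY_RUN=1 the commands are printed instead.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# add_ns_port NS HOST_IF NS_IF MAC IP/PREFIX GATEWAY LSP_NAME
add_ns_port() {
  local ns=$1 host_if=$2 ns_if=$3 mac=$4 cidr=$5 gw=$6 lsp=$7
  run ip netns add "$ns"
  run ip link add "$host_if" type veth peer name "$ns_if"
  run ip link set "$host_if" up
  # Move the peer into the namespace and configure it there
  run ip link set "$ns_if" netns "$ns"
  run ip netns exec "$ns" ip link set "$ns_if" address "$mac"
  run ip netns exec "$ns" ip addr add "$cidr" dev "$ns_if"
  run ip netns exec "$ns" ip link set "$ns_if" up
  run ip netns exec "$ns" ip route add default via "$gw"
  # Plug the host end into br-int; iface-id binds it to the logical port
  run ovs-vsctl add-port br-int "$host_if"
  run ovs-vsctl set Interface "$host_if" external_ids:iface-id="$lsp"
}
```

For example, server1 on master becomes `add_ns_port ns1 veth1 veth2 aa:aa:aa:11:11:aa 1.1.1.100/24 1.1.1.1 ls1-veth1`, and the client on slaver `add_ns_port ns3 veth5 veth6 aa:aa:aa:11:11:cc 2.1.1.10/24 2.1.1.1 ls2-veth5`.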

# Start the web servers

```shell
# master
mkdir -p /tmp/www
echo "i am master" > /tmp/www/index.html
cd /tmp/www
# Python 2; with Python 3 use: python3 -m http.server 8000
ip netns exec ns1 python -m SimpleHTTPServer 8000

# slaver
mkdir -p /tmp/www
echo "i am slaver" > /tmp/www/index.html
cd /tmp/www
ip netns exec ns2 python -m SimpleHTTPServer 8000
```

# Configure the load balancer

```shell
# On the master node
ovn-nbctl lb-add lb1 1.1.1.50:8000 "1.1.1.100:8000,1.1.1.200:8000" tcp
ovn-nbctl ls-lb-add ls2 lb1
```

# Check the LB configuration

```
[root@master ~]# ovn-nbctl ls-lb-list ls2
UUID                                    LB     PROTO    VIP              IPs
efed6f6f-cee7-438a-bc56-5d55a3c1449e    lb1    tcp      1.1.1.50:8000    1.1.1.100:8000,1.1.1.200:8000
```

# Functional test

```
[root@slaver ~]# ip netns exec ns3 curl 1.1.1.50:8000
i am master
[root@slaver ~]# ip netns exec ns3 curl 1.1.1.50:8000
i am master
[root@slaver ~]# ip netns exec ns3 curl 1.1.1.50:8000
i am slaver
[root@slaver ~]# ip netns exec ns3 curl 1.1.1.50:8000
i am master
[root@slaver ~]# ip netns exec ns3 curl 1.1.1.50:8000
i am slaver
```
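Five requests are too few to judge the distribution. A small sketch for sending more requests and counting the answers, assuming it runs on the node hosting `ns3` (slaver here); `request_n` and `tally_backends` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: send N requests to the VIP from ns3 and count which backend answered.

# request_n N URL: print one response body per request
request_n() {
  local n=$1 url=$2 i
  for i in $(seq "$n"); do
    ip netns exec ns3 curl -s "$url"
  done
}

# tally_backends: read one response per line on stdin,
# print "count response" lines, most frequent first
tally_backends() {
  awk '{ count[$0]++ } END { for (r in count) print count[r], r }' | sort -rn
}
```

Usage: `request_n 20 1.1.1.50:8000 | tally_backends` prints one `count response` line per backend that answered.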

# Setting the load balancer's selection_fields to ip_src makes the hash use only the source IP, so all packets sent from the same namespace go to the same endpoint

Not yet verified.
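An unverified sketch of that change. It assumes an OVN release whose `Load_Balancer` table has the `selection_fields` column, and that ovn-nbctl accepts the LB name `lb1` as a record identifier (if not, use the UUID shown by `ovn-nbctl lb-list`). The helper name is hypothetical; `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch (not verified in this lab): hash on selected fields only.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# set_lb_selection_fields LB FIELDS   (FIELDS e.g. ip_src or ip_src,ip_dst)
set_lb_selection_fields() {
  run ovn-nbctl set load_balancer "$1" selection_fields="$2"
  # Show the record so the new column value can be checked
  run ovn-nbctl list load_balancer "$1"
}
```

Run `set_lb_selection_fields lb1 ip_src` on the master node, then re-run `ovn-sbctl dump-flows ls2 | grep ct_lb`; in recent releases the `ct_lb` action is expected to carry a `hash_fields` argument once the column is set.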

```
[root@ovn-master ~]# ovn-sbctl dump-flows ls2 | grep ct_lb
  table=10(ls_in_stateful ), priority=120 , match=(ct.new && ip4.dst == 1.1.1.50 && tcp.dst == 8000), action=(ct_lb(1.1.1.100:8000,1.1.1.200:8000);)
  table=10(ls_in_stateful ), priority=100 , match=(reg0[2] == 1), action=(ct_lb;)
  table=7 (ls_out_stateful ), priority=100 , match=(reg0[2] == 1), action=(ct_lb;)
```

# ovn-trace

```
[root@ovn-master ~]# ovn-trace --detailed --lb-dst=1.1.1.50:8000 --ct=est ls2 'inport=="ls2-veth5" && eth.src== aa:aa:aa:11:11:cc && eth.dst == 00:00:00:00:20:00 && ip4.src==2.1.1.10 && ip.ttl==64 && ip4.dst==1.1.1.50 && tcp.dst==8000'
# tcp,reg14=0x1,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:cc,dl_dst=00:00:00:00:20:00,nw_src=2.1.1.10,nw_dst=1.1.1.50,nw_tos=0,nw_ecn=0,nw_ttl=64,tp_src=0,tp_dst=8000,tcp_flags=0

ingress(dp="ls2", inport="ls2-veth5")
-------------------------------------
 0. ls_in_port_sec_l2 (ovn-northd.c:4843): inport == "ls2-veth5" && eth.src == {aa:aa:aa:11:11:cc}, priority 50, uuid 6ecbbab8
    next;
 4. ls_in_pre_lb (ovn-northd.c:3969): ip && ip4.dst == 1.1.1.50, priority 100, uuid 9d2daad0
    reg0[0] = 1;
    next;
 5. ls_in_pre_stateful (ovn-northd.c:3992): reg0[0] == 1, priority 100, uuid a2bffb6b
    ct_next;

ct_next(ct_state=est|trk)
-------------------------
 9. ls_in_lb (ovn-northd.c:4575): ct.est && !ct.rel && !ct.new && !ct.inv, priority 65535, uuid 086001ea
    reg0[2] = 1;
    next;
10. ls_in_stateful (ovn-northd.c:4607): reg0[2] == 1, priority 100, uuid 58fa64dc
    ct_lb;

ct_lb
-----
17. ls_in_l2_lkup (ovn-northd.c:5359): eth.dst == 00:00:00:00:20:00, priority 50, uuid 3183656f
    outport = "ls2-p2";
    output;

egress(dp="ls2", inport="ls2-veth5", outport="ls2-p2")
------------------------------------------------------
 0. ls_out_pre_lb (ovn-northd.c:3977): ip, priority 100, uuid 0266402e
    reg0[0] = 1;
    next;
 2. ls_out_pre_stateful (ovn-northd.c:3994): reg0[0] == 1, priority 100, uuid 7cfa5fe4
    ct_next;

ct_next(ct_state=est|trk /* default (use --ct to customize) */)
---------------------------------------------------------------
 3. ls_out_lb (ovn-northd.c:4578): ct.est && !ct.rel && !ct.new && !ct.inv, priority 65535, uuid a900607d
    reg0[2] = 1;
    next;
 7. ls_out_stateful (ovn-northd.c:4609): reg0[2] == 1, priority 100, uuid c7744075
    ct_lb;

ct_lb
-----
 9. ls_out_port_sec_l2 (ovn-northd.c:5503): outport == "ls2-p2", priority 50, uuid bc275bd3
    output;
    /* output to "ls2-p2", type "patch" */

ingress(dp="r1", inport="r1-p2")
--------------------------------
 0. lr_in_admission (ovn-northd.c:6198): eth.dst == 00:00:00:00:20:00 && inport == "r1-p2", priority 50, uuid 3ea8bf63
    next;
 7. lr_in_ip_routing (ovn-northd.c:5780): ip4.dst == 1.1.1.0/24, priority 49, uuid b241f017
    ip.ttl--;
    reg0 = ip4.dst;
    reg1 = 1.1.1.1;
    eth.src = 00:00:00:00:10:00;
    outport = "r1-p1";
    flags.loopback = 1;
    next;
 9. lr_in_arp_resolve (ovn-northd.c:7810): ip4, priority 0, uuid 87ecd08f
    get_arp(outport, reg0);
    /* No MAC binding. */
    next;
13. lr_in_arp_request (ovn-northd.c:7994): eth.dst == 00:00:00:00:00:00, priority 100, uuid e23aaaa4
    arp { eth.dst = ff:ff:ff:ff:ff:ff; arp.spa = reg1; arp.tpa = reg0; arp.op = 1; output; };

arp
---
    eth.dst = ff:ff:ff:ff:ff:ff;
    arp.spa = reg1;
    arp.tpa = reg0;
    arp.op = 1;
    output;

egress(dp="r1", inport="r1-p2", outport="r1-p1")
------------------------------------------------
 3. lr_out_delivery (ovn-northd.c:8029): outport == "r1-p1", priority 100, uuid 2c45bb40
    output;
    /* output to "r1-p1", type "patch" */

ingress(dp="ls1", inport="ls1-p1")
----------------------------------
 0. ls_in_port_sec_l2 (ovn-northd.c:4843): inport == "ls1-p1", priority 50, uuid 18f4bc34
    next;
17. ls_in_l2_lkup (ovn-northd.c:5308): eth.mcast, priority 70, uuid e9f7ecb8
    outport = "_MC_flood";
    output;

multicast(dp="ls1", mcgroup="_MC_flood")
----------------------------------------

egress(dp="ls1", inport="ls1-p1", outport="ls1-p1")
---------------------------------------------------
    /* omitting output because inport == outport && !flags.loopback */

egress(dp="ls1", inport="ls1-p1", outport="ls1-veth1")
------------------------------------------------------
 9. ls_out_port_sec_l2 (ovn-northd.c:5480): eth.mcast, priority 100, uuid 2439078a
    output;
    /* output to "ls1-veth1", type "" */

egress(dp="ls1", inport="ls1-p1", outport="ls1-veth3")
------------------------------------------------------
 9. ls_out_port_sec_l2 (ovn-northd.c:5480): eth.mcast, priority 100, uuid 2439078a
    output;
    /* output to "ls1-veth3", type "" */
```

# Packet trace and flow table analysis

```
[root@slaver ~]# ovs-appctl ofproto/trace br-int in_port=veth5,tcp,ct_state=new,dl_src=aa:aa:aa:11:11:cc,dl_dst=00:00:00:00:20:00,nw_src=2.1.1.10,nw_dst=1.1.1.50,tp_dst=8000 -generate
Flow: ct_state=new,tcp,in_port=7,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:cc,dl_dst=00:00:00:00:20:00,nw_src=2.1.1.10,nw_dst=1.1.1.50,nw_tos=0,nw_ecn=0,nw_ttl=0,tp_src=0,tp_dst=8000,tcp_flags=0

bridge("br-int")
----------------
 0. in_port=7, priority 100
    set_field:0x8->reg13
    set_field:0x4->reg11
    set_field:0x2->reg12
    set_field:0xa->metadata
    set_field:0x1->reg14
    resubmit(,8)
 8. reg14=0x1,metadata=0xa,dl_src=aa:aa:aa:11:11:cc, priority 50, cookie 0xdded0345
    resubmit(,9)
 9. metadata=0xa, priority 0, cookie 0x8b0017f4
    resubmit(,10)
10. metadata=0xa, priority 0, cookie 0xe89b0c91
    resubmit(,11)
11. metadata=0xa, priority 0, cookie 0x9ecd4c45
    resubmit(,12)
12. ip,metadata=0xa,nw_dst=1.1.1.50, priority 100, cookie 0xca4afa66
    load:0x1->NXM_NX_XXREG0[96]
    resubmit(,13)
13. ip,reg0=0x1/0x1,metadata=0xa, priority 100, cookie 0xeb3294df
    ct(table=14,zone=NXM_NX_REG13[0..15])
    drop
     -> A clone of the packet is forked to recirculate. The forked pipeline will be resumed at table 14.
     -> Sets the packet to an untracked state, and clears all the conntrack fields.

Final flow: tcp,reg0=0x1,reg11=0x4,reg12=0x2,reg13=0x8,reg14=0x1,metadata=0xa,in_port=7,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:cc,dl_dst=00:00:00:00:20:00,nw_src=2.1.1.10,nw_dst=1.1.1.50,nw_tos=0,nw_ecn=0,nw_ttl=0,tp_src=0,tp_dst=8000,tcp_flags=0
Megaflow: recirc_id=0,eth,ip,in_port=7,vlan_tci=0x0000/0x1000,dl_src=aa:aa:aa:11:11:cc,nw_dst=1.1.1.50,nw_frag=no
Datapath actions: ct(zone=8),recirc(0x72)

===============================================================================
recirc(0x72) - resume conntrack with default ct_state=trk|new (use --ct-next to customize)
===============================================================================

Flow: recirc_id=0x72,ct_state=new|trk,ct_zone=8,eth,tcp,reg0=0x1,reg11=0x4,reg12=0x2,reg13=0x8,reg14=0x1,metadata=0xa,in_port=7,vlan_tci=0x0000,dl_src=aa:aa:aa:11:11:cc,dl_dst=00:00:00:00:20:00,nw_src=2.1.1.10,nw_dst=1.1.1.50,nw_tos=0,nw_ecn=0,nw_ttl=0,tp_src=0,tp_dst=8000,tcp_flags=0

bridge("br-int")
----------------
    thaw
        Resuming from table 14
14. metadata=0xa, priority 0, cookie 0xf5b40090
    resubmit(,15)
15. metadata=0xa, priority 0, cookie 0xe0642e4
    resubmit(,16)
16. metadata=0xa, priority 0, cookie 0x5a618e6d
    resubmit(,17)
17. metadata=0xa, priority 0, cookie 0xd6c4e562
    resubmit(,18)
18. ct_state=+new+trk,tcp,metadata=0xa,nw_dst=1.1.1.50,tp_dst=8000, priority 120, cookie 0x28e7bdb3
    group:1
     -> no live bucket

Final flow: unchanged
Megaflow: recirc_id=0x72,ct_state=+new-est-rel-inv+trk,eth,tcp,in_port=7,nw_dst=1.1.1.50,nw_frag=no,tp_dst=8000
Datapath actions: hash(l4(0)),recirc(0x73)
```
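The datapath actions `hash(l4(0)),recirc(0x73)` after `group:1` indicate that the hash is computed in the datapath (the dp_hash method described earlier) and that bucket selection continues after recirculation, which this trace does not follow. A sketch for inspecting the select group and the installed datapath flows directly on the client's chassis; `inspect_lb_datapath` is a hypothetical helper, and `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: dump the select group and datapath flows behind the LB.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# inspect_lb_datapath BRIDGE
inspect_lb_datapath() {
  # OpenFlow 1.5 is requested so the dump can include the group's selection method
  run ovs-ofctl -O OpenFlow15 dump-groups "$1"
  # Datapath flows; those after recirculation should match on dp_hash
  run ovs-appctl dpctl/dump-flows
}
```

Usage on slaver: `inspect_lb_datapath br-int`, ideally while the curl test is running so the relevant datapath flows are present.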

Configuring load balancing on a gateway router

# Remove the LB configuration from the logical switch

```shell
ovn-nbctl ls-lb-del ls2 lb1
ovn-nbctl lb-del lb1
```

# Create a new LB

```shell
# On the master node
ovn-nbctl lb-add lb1 2.1.1.50:8000 "1.1.1.100:8000,1.1.1.200:8000" tcp
```

# Attach the LB to the logical router

```shell
ovn-nbctl lr-lb-add r1 lb1
```

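# Check the configuration

```
[root@master ~]# ovn-nbctl lr-lb-list r1
UUID                                    LB     PROTO    VIP              IPs
efed6f6f-cee7-438a-bc56-5d55a3c1449e    lb1    tcp      1.1.1.50:8000    1.1.1.100:8000,1.1.1.200:8000
```

Note: this listing shows the same UUID and VIP (1.1.1.50) as the switch LB listed earlier, although that LB was deleted and recreated with VIP 2.1.1.50, so it was probably captured before the recreation.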

# Functional verification

Did not work; still under investigation.
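An untested suggestion for the failed test: caveat (1) earlier says router LBs only work on a gateway router, but `r1` was created as a plain distributed router without `options:chassis`. A sketch that pins `r1` to one chassis, turning it into a gateway router; `make_gateway_router` is hypothetical, `chassis-1` below is a placeholder for a chassis name taken from `ovn-sbctl show`, and `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch (untested here): make a logical router a gateway router.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# make_gateway_router ROUTER CHASSIS
make_gateway_router() {
  # options:chassis pins the router to one chassis, i.e. a gateway router
  run ovn-nbctl set logical_router "$1" options:chassis="$2"
  # Confirm the LB is still attached
  run ovn-nbctl lr-lb-list "$1"
}
```

Usage: `make_gateway_router r1 chassis-1`. It may also be worth checking who answers ARP for the VIP: 2.1.1.50 lies in the client's own 2.1.1.0/24 subnet, so ns3 resolves it directly instead of going through its default gateway (`ip netns exec ns3 ip neigh` shows what it learned).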