参考博客 :https://www.cnblogs.com/wupeiqi/p/6229292.html

Scrapy是一个为了爬取网站数据,提取结构性数据而编写的应用框架。 其可以应用在数据挖掘,信息处理或存储历史数据等一系列的程序中。

Scrapy 使用了 Twisted异步网络库来处理网络通讯。

安装 scrapy

1 Linux 2 pip3 install scrapy 3 4 5 Windows 6 a. pip3 install wheel 7 b. 下载twisted http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted 8 c. 进入下载目录,执行 pip3 install Twisted‑17.1.0‑cp35‑cp35m‑win_amd64.whl 9 d. pip3 install scrapy 10 e. 下载并安装pywin32:https://sourceforge.net/projects/pywin32/files/

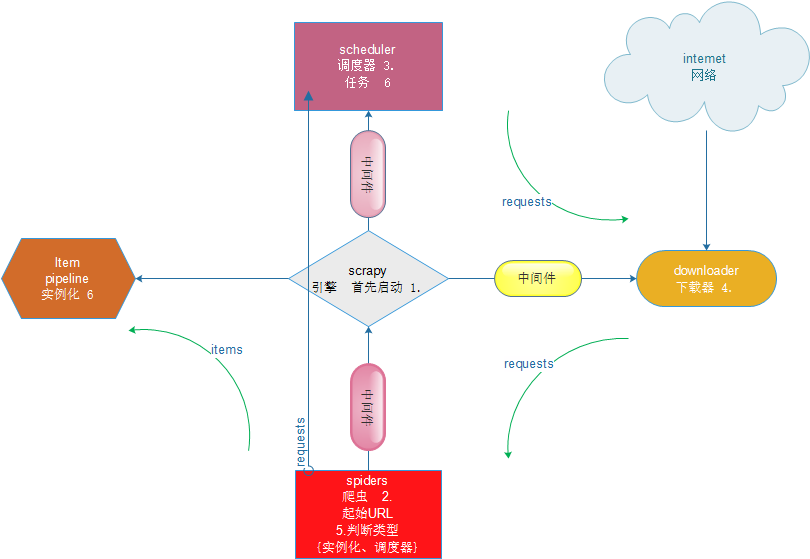

scrapy 基本使用流程

一、 创建项目 (projects) 命令: scrapy startproject 项目名

二、创建任务 (spide) 命令:scrapy genspider 任务名 域名

三、运行作务 命令: scrapy crawl 任务名

PS: 命令:scrapy list #查看爬虫 任务名 列表

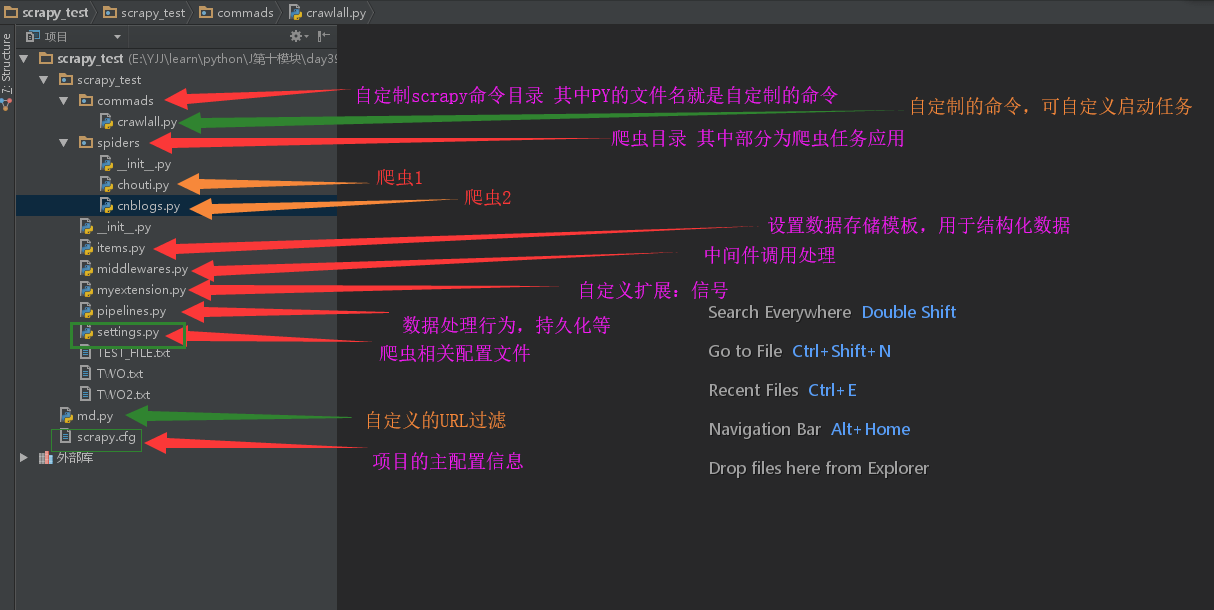

SCRAPY 项目结构图

scrapy_test

|---commads

|--crawlall.py

#自制scrapy命令 使用如图

1 #!usr/bin/env python 2 #-*-coding:utf-8-*- 3 # Author calmyan 4 #scrapy_test 5 #2018/6/7 11:14 6 #__author__='Administrator' 7 8 from scrapy.commands import ScrapyCommand 9 from scrapy.utils.project import get_project_settings 10 11 12 class Command(ScrapyCommand): 13 14 requires_project = True 15 16 def syntax(self): 17 return '[options]' 18 19 def short_desc(self): 20 return 'Runs all of the spiders' 21 22 def run(self, args, opts): 23 spider_list = self.crawler_process.spiders.list()#爬虫任务列表 24 for name in spider_list: 25 self.crawler_process.crawl(name, **opts.__dict__) #加载到执行列表 26 self.crawler_process.start()#开始并发执行

|---spiders

|----cnblogs.py

#任务一、 cnblogs 目标:爬取博客园 首页的文章标题与链接

1 # -*- coding: utf-8 -*- 2 import scrapy 3 import io 4 import sys 5 from scrapy.http import Request 6 # from scrapy.dupefilter import RFPDupeFilter 7 from scrapy.selector import Selector,HtmlXPathSelector #特殊对象 筛选器 Selector.xpath 8 # sys.stdout=io.TextIOWrapper(sys.stdout.buffer, encoding='gb18030')#字符转换 9 from ..items import ScrapyTestItem 10 class CnblogsSpider(scrapy.Spider): 11 name = 'cnblogs' 12 allowed_domains = ['cnblogs.com'] 13 start_urls = ['http://cnblogs.com/'] 14 15 def parse(self, response): 16 # print(response.body) 17 #print(response.text) 18 hxs=Selector(response=response).xpath('//div[@id="post_list"]/div[@class="post_item"]') #转换为对象格式 19 # //全局对象目录 ./当前对象目录 //往下多层查找 text()获取内容 extract()格式化 extract_first()第一个 20 # itme_list=hxs.xpath('./div[@class="post_item_body"]//a[@class="titlelnk"]/text()').extract_first()#text()获取内容 extract()格式化 21 itme_list=hxs.xpath('./div[@class="post_item_body"]//a[@class="titlelnk"]/text()').extract()#text()获取内容 extract()格式化 22 # href_list=hxs.xpath('./div[@class="post_item_body"]//a[@class="titlelnk"]/@href').extract_first()#text()获取内容 extract()格式化 23 href_list=hxs.xpath('./div[@class="post_item_body"]//a[@class="titlelnk"]/@href').extract()#text()获取内容 extract()格式化 24 # for item in itme_list: 25 # print('itme----',item) 26 27 yield ScrapyTestItem(title=itme_list,href=href_list) 28 #如果有分页 继续下载页面 29 pags_list=Selector(response=response).xpath('//div[@id="paging_block"]//a/@href').extract() 30 print(pags_list) 31 for pag in pags_list: 32 url='http://cnblogs.com/'+pag 33 yield Request(url=url) 34 # yield Request(url=url,callback=self.fun()) #可自定义使用方法 35 def fun(self): 36 print('===========')

|----chouti.py

#任务二、 chouti 目标:爬取抽屉新热榜首页的文章 并进行点赞

# -*- coding: utf-8 -*- import scrapy from scrapy.selector import HtmlXPathSelector,Selector from scrapy.http.request import Request from scrapy.http.cookies import CookieJar import getpass from scrapy import FormRequest class ChouTiSpider(scrapy.Spider): # 爬虫应用的名称,通过此名称启动爬虫命令 name = "chouti" # 允许的域名 allowed_domains = ["chouti.com"] cookie_dict = {} has_request_set = {} #起始就自定义 def start_requests(self): url = 'https://dig.chouti.com/' # self.headers={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:57.0) Gecko/20100101 Firefox/57.0'} # return [Request(url=url, callback=self.login,headers=self.headers)] yield Request(url=url, callback=self.login) def login(self, response): cookie_jar = CookieJar() #创建存放cookie的对象 cookie_jar.extract_cookies(response, response.request)#解析提取存入对象 # for k, v in cookie_jar._cookies.items(): # for i, j in v.items(): # for m, n in j.items(): # self.cookie_dict[m] = n.value # print('-----',m,'::::::::',n.value,'--------') self.cookie=cookie_jar._cookies#存入cookie phone=input('请输入手机号:').split() password=getpass.getpass('请输入密码:').split() body='phone=86%s&password=%s&oneMonth=1'%(phone,password) req = Request( url='https://dig.chouti.com/login', method='POST', headers={'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8'}, # headers=self.headers, # body=body, body='phone=86%s&password=%s&oneMonth=1'%(phone,password), # cookies=self.cookie_dict, cookies=self.cookie, callback=self.check_login#回调函数 ) yield req def check_login(self, response): print('登陆状态------------',response.text) req = Request( url='https://dig.chouti.com/', method='GET', callback=self.show, cookies=self.cookie, # cookies=self.cookie_dict, dont_filter=True ) yield req def show(self, response): # print(response) hxs = HtmlXPathSelector(response) news_list = hxs.select('//div[@id="content-list"]/div[@class="item"]') # hxs=Selector(response=response) # news_list = hxs.xpath('//div[@id="content-list"]/div[@class="item"]') for new in news_list: # temp = new.xpath('div/div[@class="part2"]/@share-linkid').extract() link_id = new.xpath('*/div[@class="part2"]/@share-linkid').extract_first() yield Request( url='https://dig.chouti.com/link/vote?linksId=%s' %link_id, method='POST', cookies=self.cookie, # cookies=self.cookie_dict, callback=self.do_favor ) #分页 page_list = hxs.select('//div[@id="dig_lcpage"]//a[re:test(@href, "/all/hot/recent/d+")]/@href').extract() for page in page_list: page_url = 'https://dig.chouti.com%s' % page import hashlib hash = hashlib.md5() hash.update(bytes(page_url,encoding='utf-8')) key = hash.hexdigest() if key in self.has_request_set: pass else: self.has_request_set[key] = page_url yield Request( url=page_url, method='GET', callback=self.show ) def do_favor(self, response): print(response.text)

|---items.py

#这里可自定义的字段项 通过pipelines 进行持久化

1 # -*- coding: utf-8 -*- 2 3 # Define here the models for your scraped items 4 # 5 # See documentation in: 6 # https://doc.scrapy.org/en/latest/topics/items.html 7 8 import scrapy 9 10 11 class ScrapyTestItem(scrapy.Item): 12 # define the fields for your item here like: 13 #这里可自定义的字段项 14 15 # name = scrapy.Field() 16 title=scrapy.Field() 17 href=scrapy.Field() 18 pass

|---middlewares.py

#中间件 通过中间件可以对任务进行不同的处理 如:代理的设置 ,请求需要被下载时,经过所有下载器中间件的process_request调用 , 中间件处理异常等

1 # -*- coding: utf-8 -*- 2 3 # Define here the models for your spider middleware 4 # 5 # See documentation in: 6 # https://doc.scrapy.org/en/latest/topics/spider-middleware.html 7 8 from scrapy import signals 9 10 11 class ScrapyTestSpiderMiddleware(object): 12 # Not all methods need to be defined. If a method is not defined, 13 # scrapy acts as if the spider middleware does not modify the 14 # passed objects. 15 16 @classmethod 17 def from_crawler(cls, crawler): 18 # This method is used by Scrapy to create your spiders. 19 s = cls() 20 crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) 21 return s 22 23 def process_spider_input(self, response, spider): 24 # Called for each response that goes through the spider 25 # middleware and into the spider. 26 """ 27 下载完成,执行,然后交给parse处理 28 :param response: 29 :param spider: 30 :return: 31 """ 32 # Should return None or raise an exception. 33 return None 34 35 def process_spider_output(self, response, result, spider): 36 # Called with the results returned from the Spider, after 37 # it has processed the response. 38 """ 39 spider处理完成,返回时调用 40 :param response: 41 :param result: 42 :param spider: 43 :return: 必须返回包含 Request 或 Item 对象的可迭代对象(iterable) 44 """ 45 # Must return an iterable of Request, dict or Item objects. 46 for i in result: 47 yield i 48 49 def process_spider_exception(self, response, exception, spider): 50 # Called when a spider or process_spider_input() method 51 # (from other spider middleware) raises an exception. 52 """ 53 异常调用 54 :param response: 55 :param exception: 56 :param spider: 57 :return: None,继续交给后续中间件处理异常;含 Response 或 Item 的可迭代对象(iterable),交给调度器或pipeline 58 """ 59 # Should return either None or an iterable of Response, dict 60 # or Item objects. 61 pass 62 63 def process_start_requests(self, start_requests, spider): 64 # Called with the start requests of the spider, and works 65 # similarly to the process_spider_output() method, except 66 # that it doesn’t have a response associated. 67 """ 68 爬虫启动时调用 69 :param start_requests: 70 :param spider: 71 :return: 包含 Request 对象的可迭代对象 72 """ 73 # Must return only requests (not items). 74 for r in start_requests: 75 yield r 76 77 def spider_opened(self, spider): 78 spider.logger.info('Spider opened: %s' % spider.name) 79 80 81 class ScrapyTestDownloaderMiddleware(object): 82 # Not all methods need to be defined. If a method is not defined, 83 # scrapy acts as if the downloader middleware does not modify the 84 # passed objects. 85 86 @classmethod 87 def from_crawler(cls, crawler): 88 # This method is used by Scrapy to create your spiders. 89 s = cls() 90 crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) 91 return s 92 93 def process_request(self, request, spider): 94 # Called for each request that goes through the downloader 95 # middleware. 96 """ 97 请求需要被下载时,经过所有下载器中间件的process_request调用 98 :param request: 99 :param spider: 100 :return: 101 None,继续后续中间件去下载; 102 Response对象,停止process_request的执行,开始执行process_response 103 Request对象,停止中间件的执行,将Request重新调度器 104 raise IgnoreRequest异常,停止process_request的执行,开始执行process_exception 105 """ 106 # Must either: 107 # - return None: continue processing this request 108 # - or return a Response object 109 # - or return a Request object 110 # - or raise IgnoreRequest: process_exception() methods of 111 # installed downloader middleware will be called 112 return None 113 114 def process_response(self, request, response, spider): 115 # Called with the response returned from the downloader. 116 117 # Must either; 118 # - return a Response object 119 # - return a Request object 120 # - or raise IgnoreRequest 121 """ 122 spider处理完成,返回时调用 123 :param response: 124 :param result: 125 :param spider: 126 :return: 127 Response 对象:转交给其他中间件process_response 128 Request 对象:停止中间件,request会被重新调度下载 129 raise IgnoreRequest 异常:调用Request.errback 130 """ 131 return response 132 133 def process_exception(self, request, exception, spider): 134 # Called when a download handler or a process_request() 135 # (from other downloader middleware) raises an exception. 136 """ 137 当下载处理器(download handler)或 process_request() (下载中间件)抛出异常 138 :param response: 139 :param exception: 140 :param spider: 141 :return: 142 None:继续交给后续中间件处理异常; 143 Response对象:停止后续process_exception方法 144 Request对象:停止中间件,request将会被重新调用下载 145 """ 146 # Must either: 147 # - return None: continue processing this exception 148 # - return a Response object: stops process_exception() chain 149 # - return a Request object: stops process_exception() chain 150 pass 151 152 def spider_opened(self, spider): 153 spider.logger.info('Spider opened: %s' % spider.name) 154 155 #=================自定义代理设置===================================== 156 from scrapy.contrib.downloadermiddleware.httpproxy import HttpProxyMiddleware 157 import random,base64,six 158 159 def to_bytes(text, encoding=None, errors='strict'): 160 if isinstance(text, bytes): 161 return text 162 if not isinstance(text, six.string_types): 163 raise TypeError('to_bytes must receive a unicode, str or bytes ' 164 'object, got %s' % type(text).__name__) 165 if encoding is None: 166 encoding = 'utf-8' 167 return text.encode(encoding, errors) 168 169 class ProxyMiddleware(object): 170 def process_request(self, request, spider): 171 PROXIES = [ 172 {'ip_port': '111.11.228.75:80', 'user_pass': ''}, 173 {'ip_port': '120.198.243.22:80', 'user_pass': ''}, 174 {'ip_port': '111.8.60.9:8123', 'user_pass': ''}, 175 {'ip_port': '101.71.27.120:80', 'user_pass': ''}, 176 {'ip_port': '122.96.59.104:80', 'user_pass': ''}, 177 {'ip_port': '122.224.249.122:8088', 'user_pass': ''}, 178 ] 179 proxy = random.choice(PROXIES) 180 if proxy['user_pass'] is not None: 181 request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port']) 182 encoded_user_pass = base64.encodestring(to_bytes(proxy['user_pass'])) 183 request.headers['Proxy-Authorization'] = to_bytes('Basic ' + encoded_user_pass) 184 print("**************ProxyMiddleware have pass************" + proxy['ip_port']) 185 else: 186 print("**************ProxyMiddleware no pass************" + proxy['ip_port']) 187 request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port'])

|---myextension.py

#自定义扩展:信号 通过触发特定信号进行自定义的数据操作 如:任务开始时执行等

1 #!usr/bin/env python 2 #-*-coding:utf-8-*- 3 # Author calmyan 4 #scrapy_test 5 #2018/6/7 11:39 6 #__author__='Administrator' 7 from scrapy import signals 8 import time,datetime 9 10 class MyExtension(object): 11 def __init__(self, value): 12 self.value = value 13 self.time_format='%y-%m-%d:%H-%M-%S' 14 print('open-------->>>>>>>>>>>>>>>>>>',value) 15 16 #类实例化前执行 17 @classmethod 18 def from_crawler(cls, crawler): 19 val = crawler.settings.get('MYEXTENSION_PATH') 20 ext = cls(val) 21 22 # crawler.signals.connect(ext.engine_started, signal=signals.engine_started) 23 # crawler.signals.connect(ext.engine_stopped, signal=signals.engine_stopped) 24 crawler.signals.connect(ext.spider_opened, signal=signals.spider_opened) 25 crawler.signals.connect(ext.spider_closed, signal=signals.spider_closed) 26 # crawler.signals.connect(ext.spider_error, signal=signals.spider_error) 27 crawler.signals.connect(ext.response_downloaded, signal=signals.response_downloaded) 28 29 return ext 30 31 def response_downloaded(self,spider): 32 print('下载时运行中===============') 33 34 def engine_started(self,spider): 35 self.f=open(self.value,'a+',encoding='utf-8') 36 def engine_stopped(self,spider): 37 self.f.write('任务结束时间:') 38 self.f.close() 39 40 #任务开始时调用 41 def spider_opened(self, spider): 42 print('open-------->>>>>>>>>>>>>>>>>>') 43 self.f=open(self.value,'a+',encoding='utf-8') 44 45 # start_time=time.time() 46 start_time=time.strftime(self.time_format) 47 print(start_time,'开始时间',type(start_time)) 48 self.f.write('任务开始时间:'+str(start_time)+' ') 49 #任务结束时调用 50 def spider_closed(self, spider): 51 print('close-<<<<<<<<<<<<<--------',time.ctime()) 52 end_time=datetime.date.fromtimestamp(time.time()) 53 end_time=time.strftime(self.time_format) 54 self.f.write('任务结束时间:'+str(end_time)+' ') 55 self.f.close() 56 57 #出现错误时调用 58 def spider_error(self,spider): 59 print('----->>-----出现错误------<<------')

|---pipelines.py

#数据持久化操作

1 # -*- coding: utf-8 -*- 2 3 # Define your item pipelines here 4 # 5 # Don't forget to add your pipeline to the ITEM_PIPELINES setting 6 # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html 7 import types 8 from scrapy.exceptions import DropItem #中止pipelines任务 9 import time,datetime 10 class ScrapyTestPipeline(object): 11 def process_item(self, item, spider): 12 print('打印输出到屏幕',item) 13 print(spider,'=======') 14 if spider=="cnblogs": 15 raise DropItem() 16 return item 17 18 class ScrapyTestPipeline2(object): 19 def __init__(self,v): 20 self.path = v 21 print(self.path,'```````````') 22 #self.f=open(v,'a+') 23 self.time_format='%y-%m-%d:%H-%M-%S' 24 25 @classmethod 26 def from_crawler(cls, crawler): 27 """ 28 初始化时候,用于创建pipeline对象 29 :param crawler: 30 :return: 31 """ 32 val = crawler.settings.get('MYFILE_PATH')##可以读取settings中的定义变量 33 return cls(val) 34 35 def open_spider(self,spider): 36 """ 37 爬虫开始执行时,调用 38 :param spider: 39 :return: 40 """ 41 self.f= open(self.path,'a+',encoding="utf-8") 42 start_time=time.strftime(self.time_format) 43 self.f.write('开始时间:'+str(start_time)+' ') 44 45 print('000000') 46 47 def close_spider(self,spider): 48 """ 49 爬虫关闭时,被调用 50 :param spider: 51 :return: 52 """ 53 start_time=time.strftime(self.time_format) 54 self.f.write('结束时间:'+str(start_time)+' ') 55 self.f.close() 56 print('111111') 57 58 def process_item(self, item, spider): 59 print('保存到文件',type(item),item) 60 print('[]============>') 61 print(type(item['title'])) 62 print('lambda end') 63 if isinstance(item['title'],list) :#判断数据类型 64 nubme=len(item['title']) 65 for x in range(nubme): 66 self.f.write('标题:'+item['title'][x]+' ') 67 self.f.write('链接:'+item['href'][x]+' ') 68 elif isinstance(item['title'],str) : 69 self.f.write('标题:'+item['title']+' ') 70 self.f.write('链接<'+item['href']+'> ') 71 return item

|---settings.py

#爬虫任务的相关配置

1 # -*- coding: utf-8 -*- 2 3 # Scrapy settings for scrapy_test project 4 # 5 # For simplicity, this file contains only settings considered important or 6 # commonly used. You can find more settings consulting the documentation: 7 # 8 # https://doc.scrapy.org/en/latest/topics/settings.html 9 # https://doc.scrapy.org/en/latest/topics/downloader-middleware.html 10 # https://doc.scrapy.org/en/latest/topics/spider-middleware.html 11 12 #--------------自定义文件的路径------------ 13 MYFILE_PATH='TEST_FILE.txt' 14 MYEXTENSION_PATH='TWO2.txt' 15 ##--------------自定义命令注册------------ 16 COMMANDS_MODULE = 'scrapy_test.commads'#'项目名称.目录名称' 17 18 19 20 # 1. 爬虫总项目名称 21 BOT_NAME = 'scrapy_test' 22 23 # 2. 爬虫应用路径 24 SPIDER_MODULES = ['scrapy_test.spiders'] 25 NEWSPIDER_MODULE = 'scrapy_test.spiders' 26 27 28 # Crawl responsibly by identifying yourself (and your website) on the user-agent 29 # 3. 客户端 user-agent请求头 30 #USER_AGENT = 'scrapy_test (+http://www.yourdomain.com)' 31 32 # Obey robots.txt rules 33 # 4. 禁止爬虫配置 遵守网站爬虫声明 34 ROBOTSTXT_OBEY = True 35 36 # Configure maximum concurrent requests performed by Scrapy (default: 16) 37 # 5. 并发请求数 38 #CONCURRENT_REQUESTS = 32 39 40 # Configure a delay for requests for the same website (default: 0) 41 # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay 42 # See also autothrottle settings and docs 43 44 # 6. 延迟下载秒数 45 #DOWNLOAD_DELAY = 3 46 # The download delay setting will honor only one of: 47 # 7. 单域名访问并发数,并且延迟下次秒数也应用在每个域名 48 #CONCURRENT_REQUESTS_PER_DOMAIN = 16 49 # 单IP访问并发数,如果有值则忽略:CONCURRENT_REQUESTS_PER_DOMAIN,并且延迟下次秒数也应用在每个IP 50 #CONCURRENT_REQUESTS_PER_IP = 16 51 52 # Disable cookies (enabled by default) 53 # 8. 是否支持cookie,cookiejar进行操作cookie 54 #COOKIES_ENABLED = False 55 56 # Disable Telnet Console (enabled by default) 57 # 9. Telnet用于查看当前爬虫的信息,操作爬虫等... 58 # 使用telnet ip port ,然后通过命令操作 59 #TELNETCONSOLE_ENABLED = False 60 #TELNETCONSOLE_HOST = '127.0.0.1' 61 #TELNETCONSOLE_PORT = [6023,] 62 63 # 10. 默认请求头 64 # Override the default request headers: 65 #DEFAULT_REQUEST_HEADERS = { 66 # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 67 # 'Accept-Language': 'en', 68 #} 69 70 71 # Configure item pipelines 72 # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html 73 # 11. 定义pipeline处理请求 74 #注册后 通过 Item 调用任务处理 200 数字小优先 75 ITEM_PIPELINES = { 76 'scrapy_test.pipelines.ScrapyTestPipeline': 200, 77 'scrapy_test.pipelines.ScrapyTestPipeline2': 300, 78 } 79 80 # Enable or disable extensions 81 # See https://doc.scrapy.org/en/latest/topics/extensions.html 82 # 12. 自定义扩展,基于信号进行调用 83 EXTENSIONS = { 84 # 'scrapy.extensions.telnet.TelnetConsole': None, 85 'scrapy_test.myextension.MyExtension': 100, 86 } 87 88 # 13. 爬虫允许的最大深度,可以通过meta查看当前深度;0表示无深度 89 DEPTH_LIMIT=1 #深度查找的层数 90 91 # 14. 爬取时,0表示深度优先Lifo(默认);1表示广度优先FiFo 92 93 # 后进先出,深度优先 94 # DEPTH_PRIORITY = 0 95 # SCHEDULER_DISK_QUEUE = 'scrapy.squeue.PickleLifoDiskQueue' 96 # SCHEDULER_MEMORY_QUEUE = 'scrapy.squeue.LifoMemoryQueue' 97 # 先进先出,广度优先 98 99 # DEPTH_PRIORITY = 1 100 # SCHEDULER_DISK_QUEUE = 'scrapy.squeue.PickleFifoDiskQueue' 101 # SCHEDULER_MEMORY_QUEUE = 'scrapy.squeue.FifoMemoryQueue' 102 103 104 # 15. 调度器队列 105 # SCHEDULER = 'scrapy.core.scheduler.Scheduler' 106 # from scrapy.core.scheduler import Scheduler 107 108 # 16. 访问URL去重 scrapy默认使用 109 # DUPEFILTER_CLASS='scrapy.dupefilter.RFPDupeFilter'#过滤 访问过的网址的类 110 DUPEFILTER_CLASS='md.RepeatFilter'#自定义过滤 访问过的网址的类 111 # DUPEFILTER_DEBUG=False #是否调用默认 112 113 114 115 """ 116 17. 自动限速算法 117 from scrapy.contrib.throttle import AutoThrottle 118 自动限速设置 119 1. 获取最小延迟 DOWNLOAD_DELAY 120 2. 获取最大延迟 AUTOTHROTTLE_MAX_DELAY 121 3. 设置初始下载延迟 AUTOTHROTTLE_START_DELAY 122 4. 当请求下载完成后,获取其"连接"时间 latency,即:请求连接到接受到响应头之间的时间 123 5. 用于计算的... AUTOTHROTTLE_TARGET_CONCURRENCY 124 target_delay = latency / self.target_concurrency 125 new_delay = (slot.delay + target_delay) / 2.0 # 表示上一次的延迟时间 126 new_delay = max(target_delay, new_delay) 127 new_delay = min(max(self.mindelay, new_delay), self.maxdelay) 128 slot.delay = new_delay 129 """ 130 # Enable and configure the AutoThrottle extension (disabled by default) 131 # See https://doc.scrapy.org/en/latest/topics/autothrottle.html 132 # 开始自动限速 133 #AUTOTHROTTLE_ENABLED = True 134 # The initial download delay 135 # 初始下载延迟 136 #AUTOTHROTTLE_START_DELAY = 5 137 # The maximum download delay to be set in case of high latencies 138 # 最大下载延迟 139 #AUTOTHROTTLE_MAX_DELAY = 60 140 # The average number of requests Scrapy should be sending in parallel to 141 # each remote server 142 # 平均每秒并发数 143 #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 144 # Enable showing throttling stats for every response received: 145 # 是否显示节流统计每一个接收到的响应: 146 #AUTOTHROTTLE_DEBUG = False 147 148 """ 149 18. 启用缓存 150 目的用于将已经发送的请求或相应缓存下来,以便以后使用 151 from scrapy.downloadermiddlewares.httpcache import HttpCacheMiddleware 152 from scrapy.extensions.httpcache import DummyPolicy 153 from scrapy.extensions.httpcache import FilesystemCacheStorage 154 """ 155 156 # Enable and configure HTTP caching (disabled by default) 157 # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings 158 # 是否启用缓存策略 159 #HTTPCACHE_ENABLED = True 160 161 # 缓存策略:所有请求均缓存,下次在请求直接访问原来的缓存即可 162 # HTTPCACHE_POLICY = "scrapy.extensions.httpcache.DummyPolicy" 163 164 # 缓存策略:根据Http响应头:Cache-Control、Last-Modified 等进行缓存的策略 165 # HTTPCACHE_POLICY = "scrapy.extensions.httpcache.RFC2616Policy" 166 167 # 缓存超时时间 168 #HTTPCACHE_EXPIRATION_SECS = 0 169 170 # 缓存保存路径 171 #HTTPCACHE_DIR = 'httpcache' 172 173 # 缓存忽略的Http状态码 174 #HTTPCACHE_IGNORE_HTTP_CODES = [] 175 176 # 缓存存储的插件 177 #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage' 178 179 """ 180 19. 代理,需要在环境变量中设置 181 from scrapy.contrib.downloadermiddleware.httpproxy import HttpProxyMiddleware 182 183 方式一:使用默认 184 os.environ 185 { 186 http_proxy:http://root:woshiniba@192.168.11.11:9999/ 187 https_proxy:http://192.168.11.11:9999/ 188 } 189 方式二:使用自定义下载中间件 190 191 def to_bytes(text, encoding=None, errors='strict'): 192 if isinstance(text, bytes): 193 return text 194 if not isinstance(text, six.string_types): 195 raise TypeError('to_bytes must receive a unicode, str or bytes ' 196 'object, got %s' % type(text).__name__) 197 if encoding is None: 198 encoding = 'utf-8' 199 return text.encode(encoding, errors) 200 201 class ProxyMiddleware(object): 202 def process_request(self, request, spider): 203 PROXIES = [ 204 {'ip_port': '111.11.228.75:80', 'user_pass': ''}, 205 {'ip_port': '120.198.243.22:80', 'user_pass': ''}, 206 {'ip_port': '111.8.60.9:8123', 'user_pass': ''}, 207 {'ip_port': '101.71.27.120:80', 'user_pass': ''}, 208 {'ip_port': '122.96.59.104:80', 'user_pass': ''}, 209 {'ip_port': '122.224.249.122:8088', 'user_pass': ''}, 210 ] 211 proxy = random.choice(PROXIES) 212 if proxy['user_pass'] is not None: 213 request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port']) 214 encoded_user_pass = base64.encodestring(to_bytes(proxy['user_pass'])) 215 request.headers['Proxy-Authorization'] = to_bytes('Basic ' + encoded_user_pass) 216 print "**************ProxyMiddleware have pass************" + proxy['ip_port'] 217 else: 218 print "**************ProxyMiddleware no pass************" + proxy['ip_port'] 219 request.meta['proxy'] = to_bytes("http://%s" % proxy['ip_port']) 220 221 DOWNLOADER_MIDDLEWARES = { 222 'step8_king.middlewares.ProxyMiddleware': 500, 223 } 224 225 """ 226 227 """ 228 20. Https访问 229 Https访问时有两种情况: 230 1. 要爬取网站使用的可信任证书(默认支持) 231 DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory" 232 DOWNLOADER_CLIENTCONTEXTFACTORY = "scrapy.core.downloader.contextfactory.ScrapyClientContextFactory" 233 234 2. 要爬取网站使用的自定义证书 235 DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory" 236 DOWNLOADER_CLIENTCONTEXTFACTORY = "step8_king.https.MySSLFactory" 237 238 # https.py 239 from scrapy.core.downloader.contextfactory import ScrapyClientContextFactory 240 from twisted.internet.ssl import (optionsForClientTLS, CertificateOptions, PrivateCertificate) 241 242 class MySSLFactory(ScrapyClientContextFactory): 243 def getCertificateOptions(self): 244 from OpenSSL import crypto 245 v1 = crypto.load_privatekey(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.key.unsecure', mode='r').read()) 246 v2 = crypto.load_certificate(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.pem', mode='r').read()) 247 return CertificateOptions( 248 privateKey=v1, # pKey对象 249 certificate=v2, # X509对象 250 verify=False, 251 method=getattr(self, 'method', getattr(self, '_ssl_method', None)) 252 ) 253 其他: 254 相关类 255 scrapy.core.downloader.handlers.http.HttpDownloadHandler 256 scrapy.core.downloader.webclient.ScrapyHTTPClientFactory 257 scrapy.core.downloader.contextfactory.ScrapyClientContextFactory 258 相关配置 259 DOWNLOADER_HTTPCLIENTFACTORY 260 DOWNLOADER_CLIENTCONTEXTFACTORY 261 262 """ 263 264 #21. 爬虫中间件 265 # Enable or disable spider middlewares 266 # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html 267 #SPIDER_MIDDLEWARES = { 268 # 'scrapy_test.middlewares.ScrapyTestSpiderMiddleware': 543, 269 # 'scrapy.contrib.spidermiddleware.httperror.HttpErrorMiddleware': 50, 270 # 'scrapy.contrib.spidermiddleware.offsite.OffsiteMiddleware': 500, 271 # 'scrapy.contrib.spidermiddleware.referer.RefererMiddleware': 700, 272 # 'scrapy.contrib.spidermiddleware.urllength.UrlLengthMiddleware': 800, 273 # 'scrapy.contrib.spidermiddleware.depth.DepthMiddleware': 900, 274 #} 275 #22. 下载中间件 276 # Enable or disable downloader middlewares 277 # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html 278 DOWNLOADER_MIDDLEWARES = { 279 # 'scrapy_test.middlewares.ScrapyTestDownloaderMiddleware': 543, 280 # 默认下载中间件 281 # 'scrapy_test.middlewares.ProxyMiddleware': 543, 282 # 'scrapy.contrib.downloadermiddleware.robotstxt.RobotsTxtMiddleware': 100, 283 # 'scrapy.contrib.downloadermiddleware.httpauth.HttpAuthMiddleware': 300, 284 # 'scrapy.contrib.downloadermiddleware.downloadtimeout.DownloadTimeoutMiddleware': 350, 285 # 'scrapy.contrib.downloadermiddleware.useragent.UserAgentMiddleware': 400, 286 # 'scrapy.contrib.downloadermiddleware.retry.RetryMiddleware': 500, 287 # 'scrapy.contrib.downloadermiddleware.defaultheaders.DefaultHeadersMiddleware': 550, 288 # 'scrapy.contrib.downloadermiddleware.redirect.MetaRefreshMiddleware': 580, 289 # 'scrapy.contrib.downloadermiddleware.httpcompression.HttpCompressionMiddleware': 590, 290 # 'scrapy.contrib.downloadermiddleware.redirect.RedirectMiddleware': 600, 291 # 'scrapy.contrib.downloadermiddleware.cookies.CookiesMiddleware': 700, 292 # 'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 750, 293 # 'scrapy.contrib.downloadermiddleware.chunked.ChunkedTransferMiddleware': 830, 294 # 'scrapy.contrib.downloadermiddleware.stats.DownloaderStats': 850, 295 # 'scrapy.contrib.downloadermiddleware.httpcache.HttpCacheMiddleware': 900, 296 }

|---md.py

#自制的URL过滤

1 #!usr/bin/env python 2 #-*-coding:utf-8-*- 3 # Author calmyan 4 #scrapy_test 5 #2018/6/6 15:19 6 #__author__='Administrator' 7 class RepeatFilter(object): #过滤类 8 def __init__(self): 9 self.visited_url=set() #生成一个集合 10 pass 11 12 @classmethod 13 def from_settings(cls, settings): 14 #初始化是 调用 15 return cls() 16 17 #是否访问过 18 def request_seen(self, request): 19 if request.url in self.visited_url: 20 return True 21 self.visited_url.add(request.url)#没有访问过 加入集合 返回False 22 return False 23 24 #爬虫开启时执行 打开文件 数据库 25 def open(self): # can return deferred 26 pass 27 #爬虫结束后执行 28 def close(self, reason): # can return a deferred 29 print('关闭,close ,') 30 pass 31 #日志 32 def log(self, request, spider): # log that a request has been filtered 33 print('URL',request.url) 34 pass