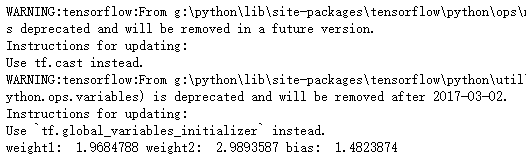

    # Linear regression with two inputs (TensorFlow 1.x graph API).
    import numpy as np
    import tensorflow as tf

    threshold = 1.0e-2

    # Synthetic data: y = 2 * x1 + 3 * x2 + 1.5
    x1_data = np.random.randn(100).astype(np.float32)
    x2_data = np.random.randn(100).astype(np.float32)
    y_data = x1_data * 2 + x2_data * 3 + 1.5

    # Trainable parameters, all starting at 1.
    weight1 = tf.Variable(1.)
    weight2 = tf.Variable(1.)
    bias = tf.Variable(1.)

    x1_ = tf.placeholder(tf.float32)
    x2_ = tf.placeholder(tf.float32)
    y_ = tf.placeholder(tf.float32)

    y_model = tf.add(tf.add(tf.multiply(x1_, weight1), tf.multiply(x2_, weight2)), bias)
    loss = tf.reduce_mean(tf.pow((y_model - y_), 2))
    train_op = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    sess = tf.Session()
    init = tf.global_variables_initializer()
    sess.run(init)

    # Train one sample at a time; stop once the loss on the last sample of a pass
    # is at or below the threshold.
    flag = 1
    while flag:
        for (x, y) in zip(zip(x1_data, x2_data), y_data):
            sess.run(train_op, feed_dict={x1_: x[0], x2_: x[1], y_: y})
        if sess.run(loss, feed_dict={x1_: x[0], x2_: x[1], y_: y}) <= threshold:
            flag = 0

    print("weight1: ", weight1.eval(sess), "weight2: ", weight2.eval(sess), "bias: ", bias.eval(sess))
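As a sanity check on the training loop above, the same coefficients can be recovered in closed form with NumPy's least-squares solver. This is a minimal sketch rather than part of the original listing; the fixed seed and the names `design` and `coeffs` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (an assumption) for reproducibility
x1 = rng.standard_normal(100).astype(np.float32)
x2 = rng.standard_normal(100).astype(np.float32)
y = x1 * 2 + x2 * 3 + 1.5

# Design matrix with a column of ones so the bias is fitted as a third coefficient.
design = np.column_stack([x1, x2, np.ones_like(x1)])
coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)

print("weight1, weight2, bias:", coeffs)
```

Because the data contain no noise, the fitted values should land very close to 2, 3 and 1.5, which is the target the gradient-descent loop is approaching.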

    import tensorflow as tf

    matrix1 = tf.constant([[3., 3.]])
    matrix2 = tf.constant([3., 3.])

    sess = tf.Session()
    print(matrix1)
    print(sess.run(matrix1))
    print("----------------------")
    print(matrix2)
    print(sess.run(matrix2))

    import tensorflow as tf

    matrix1 = tf.constant([[3., 3.]])
    matrix2 = tf.constant([3., 3.])

    sess = tf.Session()
    print(sess.run(tf.add(matrix1, matrix2)))

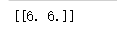
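The addition above combines a tensor of shape (1, 2) with one of shape (2,). `tf.add` follows NumPy-style broadcasting, so the behaviour can be sketched in plain NumPy; the name `total` is introduced here only for illustration.

```python
import numpy as np

matrix1 = np.array([[3., 3.]])  # shape (1, 2): one row, two columns
matrix2 = np.array([3., 3.])    # shape (2,): a plain vector

total = matrix1 + matrix2       # matrix2 is broadcast along the leading axis
print(matrix1.shape, matrix2.shape, total.shape)
print(total)
```

The result keeps the higher rank of the two operands, so it is a 1 x 2 matrix rather than a vector.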

    import tensorflow as tf

    matrix1 = tf.constant([1, 2, 3, 4, 5, 6], shape=[2, 3])
    matrix2 = tf.constant([1, 1, 1, 1, 1, 1], shape=[2, 3])
    result1 = tf.multiply(matrix1, matrix2)

    sess = tf.Session()
    print(sess.run(result1))

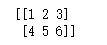

    import tensorflow as tf

    matrix1 = tf.constant([1, 2, 3, 4, 5, 6], shape=[2, 3])
    matrix2 = tf.constant([1, 1, 1, 1, 1, 1], shape=[3, 2])
    result2 = tf.matmul(matrix1, matrix2)

    sess = tf.Session()
    print(sess.run(result2))

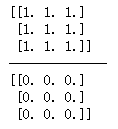
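The two listings above differ only in the operation: `tf.multiply` works element by element on equally shaped tensors, while `tf.matmul` is the matrix product and needs the inner dimensions to agree. A NumPy sketch of the same contrast, with variable names chosen here for illustration:

```python
import numpy as np

a = np.arange(1, 7).reshape(2, 3)      # [[1, 2, 3], [4, 5, 6]]
ones_2x3 = np.ones((2, 3), dtype=int)
ones_3x2 = np.ones((3, 2), dtype=int)

elementwise = a * ones_2x3   # same shape in, same shape out
product = a @ ones_3x2       # (2, 3) @ (3, 2) -> (2, 2), each entry is a row sum

print(elementwise)
print(product)
```

Multiplying by a matrix of ones makes the difference easy to see: the element-wise result leaves `a` unchanged, and the matrix product repeats each row sum of `a` across the columns.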

    import tensorflow as tf

    matrix1 = tf.Variable(tf.ones([3, 3]))
    matrix2 = tf.Variable(tf.zeros([3, 3]))
    result = tf.matmul(matrix1, matrix2)

    # Variables must be initialized before they can be read.
    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)

    print(sess.run(matrix1))
    print("--------------")
    print(sess.run(matrix2))

    import tensorflow as tf

    a = tf.constant([[1, 2], [3, 4]])
    matrix2 = tf.placeholder('float32', [2, 2])
    matrix1 = matrix2

    sess = tf.Session()
    a = sess.run(a)
    print(sess.run(matrix1, feed_dict={matrix2: a}))

    import tensorflow as tf
    import numpy as np

    houses = 100
    features = 2

    # The designed model is 2 * x1 + 3 * x2
    x_data = np.zeros([houses, 2])
    for house in range(houses):
        x_data[house, 0] = np.round(np.random.uniform(50., 150.))
        x_data[house, 1] = np.round(np.random.uniform(3., 7.))
    weights = np.array([[2.], [3.]])
    y_data = np.dot(x_data, weights)

    x_data_ = tf.placeholder(tf.float32, [None, 2])
    y_data_ = tf.placeholder(tf.float32, [None, 1])
    weights_ = tf.Variable(np.ones([2, 1]), dtype=tf.float32)
    y_model = tf.matmul(x_data_, weights_)

    loss = tf.reduce_mean(tf.pow((y_model - y_data_), 2))
    # Note: the inputs are unscaled (x1 is around 100), so a 0.01 step is likely
    # to overshoot and drive the weights to inf/NaN.
    train_op = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    sess = tf.Session()
    init = tf.global_variables_initializer()
    sess.run(init)

    # Ten passes over the data, one sample per update.
    for _ in range(10):
        for x, y in zip(x_data, y_data):
            z1 = x.reshape(1, 2)
            z2 = y.reshape(1, 1)
            sess.run(train_op, feed_dict={x_data_: z1, y_data_: z2})
        print(weights_.eval(sess))

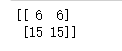

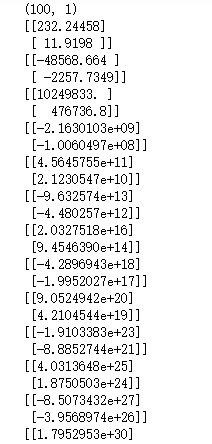

    import tensorflow as tf
    import numpy as np

    houses = 100
    features = 2

    # The designed model is 2 * x1 + 3 * x2
    x_data = np.zeros([houses, 2])
    for house in range(houses):
        x_data[house, 0] = np.round(np.random.uniform(50., 150.))
        x_data[house, 1] = np.round(np.random.uniform(3., 7.))
    weights = np.array([[2.], [3.]])
    y_data = np.dot(x_data, weights)

    x_data_ = tf.placeholder(tf.float32, [None, 2])
    weights_ = tf.Variable(np.ones([2, 1]), dtype=tf.float32)
    y_model = tf.matmul(x_data_, weights_)

    # The targets are built into the graph as a constant here instead of being fed
    # through a placeholder.
    loss = tf.reduce_mean(tf.pow((y_model - y_data), 2))
    # Note: with unscaled inputs a 0.01 step is likely to overshoot (inf/NaN weights).
    train_op = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    sess = tf.Session()
    init = tf.global_variables_initializer()
    sess.run(init)

    # Twenty full-batch updates.
    for _ in range(20):
        sess.run(train_op, feed_dict={x_data_: x_data})
        print(weights_.eval(sess))

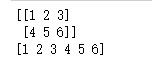
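Both house-price listings above take 0.01-sized steps on features whose values are near 100, which is far outside the range where plain gradient descent is stable for this loss. The NumPy sketch below reproduces the batch update and compares it with the same update on rescaled features. The seed, the helper `gradient_descent`, the divide-by-maximum scaling, and the 0.5 rate are all assumptions made for this illustration, not part of the original code.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (an assumption) for reproducibility
houses = 100
x_data = np.column_stack([
    np.round(rng.uniform(50., 150., houses)),
    np.round(rng.uniform(3., 7., houses)),
])
true_weights = np.array([[2.], [3.]])
y_data = x_data @ true_weights


def gradient_descent(x, y, rate, steps):
    """Batch gradient descent on mean((x @ w - y) ** 2), starting from ones."""
    w = np.ones((x.shape[1], 1))
    for _ in range(steps):
        grad = 2.0 / len(x) * x.T @ (x @ w - y)
        w = w - rate * grad
    return w


# Raw features: the step is far too large for inputs around 100, so the error grows.
w_raw = gradient_descent(x_data, y_data, rate=0.01, steps=20)

# Scaled features: divide each column by its maximum, train, then undo the scaling.
scale = x_data.max(axis=0)
w_scaled = gradient_descent(x_data / scale, y_data, rate=0.5, steps=2000)
w_recovered = w_scaled / scale.reshape(-1, 1)

print("raw features:   ", w_raw.ravel())
print("scaled features:", w_recovered.ravel())
```

With raw features the weights grow by orders of magnitude per step (in float32, as in the TensorFlow listings, this overflows quickly), whereas the rescaled run settles on weights close to 2 and 3.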

    import numpy as np

    a = np.array([[1, 2, 3], [4, 5, 6]])
    print(a)
    b = a.flatten()
    print(b)
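One detail worth knowing about `flatten` is that it always returns a copy, so edits to the flattened array do not reach the original. A short sketch contrasting it with `ravel`, which returns a view when it can; the variable names are illustrative only.

```python
import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6]])

flat_copy = a.flatten()   # always a new array
flat_view = a.ravel()     # a view when the memory layout allows it, as here

flat_copy[0] = 100        # does not touch a
print(a[0, 0])
flat_view[0] = -1         # writes through to a
print(a[0, 0])
```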

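    import numpy as np
    import tensorflow as tf

    # Raw string so the backslashes in the Windows path are not read as escapes.
    filename_queue = tf.train.string_input_producer(
        [r"D:\F\TensorFlow_deep_learn\data\cancer.txt"], shuffle=False)
    reader = tf.TextLineReader()
    key, value = reader.read(filename_queue)

    # One default per column; the defaults also fix each column's type as float.
    record_defaults = [[1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0]]
    col1, col2, col3, col4, col5, col6, col7 = tf.decode_csv(value, record_defaults=record_defaults)

    # Prints the tensor object, not its value; no session has been run yet.
    print(col1)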
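`tf.decode_csv` turns each text line into one tensor per column and substitutes the matching entry of `record_defaults` for any empty field. The same row-level behaviour can be sketched with the standard library alone. The three sample rows below are made up for illustration and are not the contents of `cancer.txt`; `parse_line` is a hypothetical helper.

```python
import csv
import io

# Hypothetical stand-in for the file: seven comma-separated numeric columns per line.
sample = io.StringIO(
    "1,0,5.1,3.5,1.4,0.2,2.0\n"
    "0,1,,3.0,1.3,0.2,1.0\n"      # third field left empty on purpose
    "1,0,6.2,2.9,4.3,1.3,3.0\n"
)
record_defaults = [1.0] * 7  # one default per column, mirroring the listing above


def parse_line(fields, defaults):
    """Convert a row to floats, using the column default for empty fields."""
    return [float(f) if f != "" else d for f, d in zip(fields, defaults)]


rows = [parse_line(fields, record_defaults) for fields in csv.reader(sample)]
for row in rows:
    print(row)
```

The second row shows the default at work: its empty third field is filled with 1.0.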

    import numpy as np
    import tensorflow as tf


    def readFile(filename):
        filename_queue = tf.train.string_input_producer(filename, shuffle=False)
        reader = tf.TextLineReader()
        key, value = reader.read(filename_queue)
        record_defaults = [[1.0], [1.0], [1.0], [1.0], [1.0], [1.0], [1.0]]
        col1, col2, col3, col4, col5, col6, col7 = tf.decode_csv(value, record_defaults=record_defaults)
        # Assumption: the first two columns form a one-hot label, the other five are features.
        label = tf.stack([col1, col2])
        features = tf.stack([col3, col4, col5, col6, col7])
        example_batch, label_batch = tf.train.shuffle_batch(
            [features, label], batch_size=3, capacity=100, min_after_dequeue=10)
        return example_batch, label_batch


    example_batch, label_batch = readFile([r"D:\F\TensorFlow_deep_learn\data\cancer.txt"])

    # One output per label column, so the shapes match label_batch ([batch, 2]).
    # The bias no longer depends on the batch size.
    weight = tf.Variable(np.random.rand(5, 2).astype(np.float32))
    bias = tf.Variable(np.random.rand(2).astype(np.float32))

    x_ = tf.placeholder(tf.float32, [None, 5])
    # Softmax keeps the outputs in (0, 1); the log of a raw linear output can be NaN.
    y_model = tf.nn.softmax(tf.matmul(x_, weight) + bias)
    y = tf.placeholder(tf.float32, [None, 2])

    loss = -tf.reduce_sum(y * tf.log(tf.clip_by_value(y_model, 1e-10, 1.0)))
    train = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(coord=coord)
        # Train on shuffled batches until the loss on the current batch is at most 1.
        flag = 1
        while flag:
            e_val, l_val = sess.run([example_batch, label_batch])
            sess.run(train, feed_dict={x_: e_val, y: l_val})
            if sess.run(loss, {x_: e_val, y: l_val}) <= 1:
                flag = 0
        print(sess.run(weight))
        # Stop the reader threads cleanly.
        coord.request_stop()
        coord.join(threads)
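The loss in the listing above has the cross-entropy form `-sum(y * log(p))`, which is only defined when every `p` is positive. A raw linear output can be zero or negative, in which case the logarithm yields NaN or infinity; passing the scores through a softmax first guarantees positive values that sum to 1 in each row. A small NumPy sketch, where the scores and one-hot labels are made-up values for illustration:

```python
import numpy as np

scores = np.array([[2.0, -1.0], [-0.5, 0.5], [0.0, 3.0]])   # hypothetical linear outputs
labels = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])     # hypothetical one-hot labels


def softmax(z):
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    shifted = z - z.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


with np.errstate(invalid="ignore", divide="ignore"):
    naive_loss = -np.sum(labels * np.log(scores))   # log of a non-positive score

probs = softmax(scores)
loss = -np.sum(labels * np.log(probs))

print("naive loss:", naive_loss)
print("softmax cross-entropy:", loss)
```

A NaN loss also matters for the stopping rule: a comparison such as `loss <= 1` is never true for NaN, so a loop that waits on it would not terminate.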