- Issues:

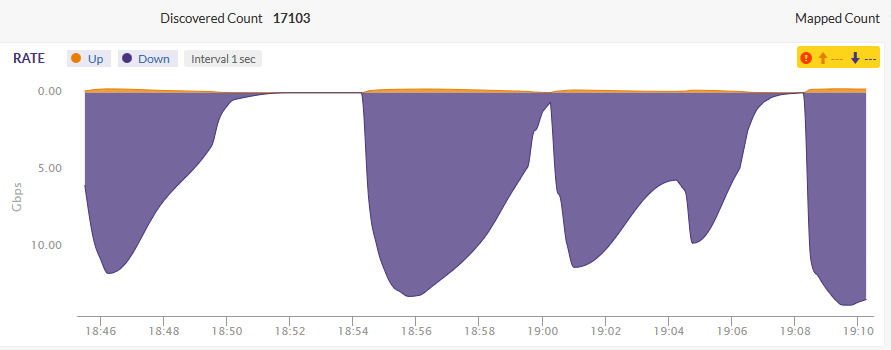

customer report that real time will interrupted frequently as below:

- Root Cause:

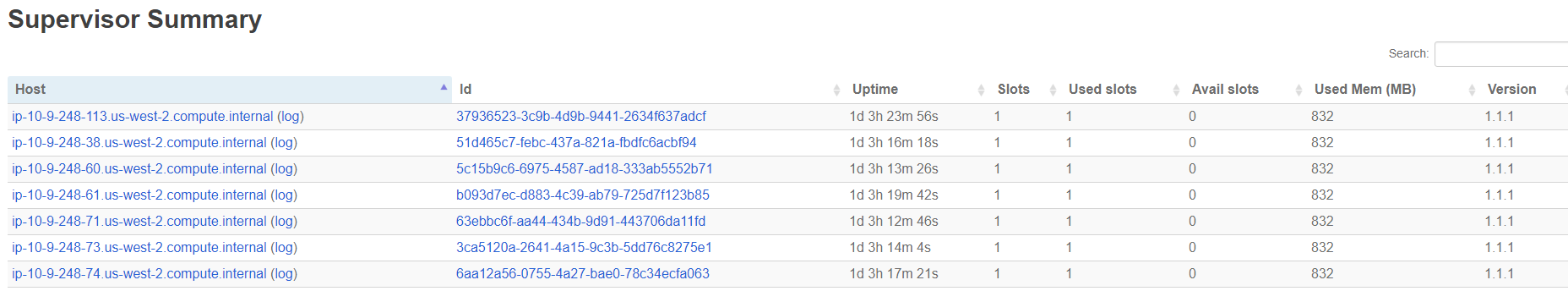

some storm workers execute full gc with too many time and cause nimbus reset the worker , so the data is missing.

- Steps to invesigates this issues

1. check the storm UI with workers and found that all workers are normal ,but some task failed

check logs from storm workers and found there are some exceptions as below:

2018-09-26 07:35:55.081 FlowKafkaReadSpout getDataThread-8 [INFO] partition:8,offset:676160000,key:2018-09-26 07:35:53_15067,valueLength:1755 2018-09-26 07:36:00.490 o.a.s.m.n.StormServerHandler Netty-server-localhost-6700-worker-1 [ERROR] server errors in handling the request java.io.IOException: Connection reset by peer at sun.nio.ch.FileDispatcherImpl.read0(Native Method) ~[?:1.7.0_80] at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39) ~[?:1.7.0_80] at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223) ~[?:1.7.0_80] at sun.nio.ch.IOUtil.read(IOUtil.java:192) ~[?:1.7.0_80] at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:384) ~[?:1.7.0_80] at org.apache.storm.shade.org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:64) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:318) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108) [storm-core-1.1.1.jar:1.1.1] at org.apache.storm.shade.org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42) [storm-core-1.1.1.jar:1.1.1] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [?:1.7.0_80] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [?:1.7.0_80] at java.lang.Thread.run(Thread.java:745) [?:1.7.0_80] 2018-09-26 07:36:00.490 o.a.s.m.n.StormClientHandler client-worker-1 [INFO] Connection to ip-10-9-248-74.us-west-2.compute.internal/10.9.248.74:6700 failed: java.io.IOException: Connection reset by peer at sun.nio.ch.FileDispatcherImpl.read0(Native Method) ~[?:1.7.0_80] at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39) ~[?:1.7.0_80] at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223) ~[?:1.7.0_80] at sun.nio.ch.IOUtil.read(IOUtil.java:192) ~[?:1.7.0_80] at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:384) ~[?:1.7.0_80]

it seems that some of workers can't connect and also can find others exceptions of "Connection reset by peer" with zookeeper cluster and kafaka cluster , check the port usage and found that :

tcp6 0 0 10.9.248.61:38050 10.9.248.70:9092 TIME_WAIT tcp6 0 0 10.9.248.61:38066 10.9.248.70:9092 TIME_WAIT tcp6 0 0 10.9.248.61:39160 10.9.248.97:2181 TIME_WAIT

we restarted all storm workers and kafaka cluster and zookeeper cluster , the issue not fix also.

we check the storm workers again and found on other workers there also have some issues as below:

2018-09-26 06:34:34.834 STDERR Thread-2 [INFO] 740.606: [Full GC [PSYoungGen: 1298054K->370618K(6126592K)] [ParOldGen: 5032811K->5122788K(6748672K)] 6330866K->5493406K(12875264K) [PSPermGen: 55526K->55525K(524288K)], 6.4880090 secs] [Times: user=100.76 sys=0.00, real=6.49 secs] 2018-09-26 06:34:34.834 o.a.s.s.o.a.z.ClientCnxn Thread-23-$mastercoord-bg1-executor[2 2]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Client session timed out, have not heard from server in 9008ms for sessionid 0xb65a69ab5380782, closing socket connection and attempting reconnect 2018-09-26 06:34:34.840 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-98.us-west-2.compute.internal:2181) [INFO] Client session timed out, have not heard from server in 10147ms for sessionid 0xa5beb7fcf46ff88, closing socket connection and attempting reconnect 2018-09-26 06:34:34.835 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-98.us-west-2.compute.internal:2181) [INFO] Client session timed out, have not heard from server in 9398ms for sessionid 0xa5beb7fcf46ff82, closing socket connection and attempting reconnect 2018-09-26 06:34:34.935 o.a.s.s.o.a.c.f.s.ConnectionStateManager Thread-23-$mastercoord-bg1-executor[2 2]-EventThread [INFO] State change: SUSPENDED 2018-09-26 06:34:34.941 o.a.c.f.s.ConnectionStateManager Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [INFO] State change: SUSPENDED 2018-09-26 06:34:34.942 o.a.s.s.o.a.c.f.s.ConnectionStateManager main-EventThread [INFO] State change: SUSPENDED 2018-09-26 06:34:34.943 o.a.s.u.StormBoundedExponentialBackoffRetry executor-heartbeat-timer [WARN] WILL SLEEP FOR 1001ms (NOT MAX) 2018-09-26 06:34:34.943 o.a.s.u.StormBoundedExponentialBackoffRetry refresh-active-timer [WARN] WILL SLEEP FOR 1001ms (NOT MAX) 2018-09-26 06:34:34.943 o.a.s.u.StormBoundedExponentialBackoffRetry refresh-connections-timer [WARN] WILL SLEEP FOR 1001ms (NOT MAX) 2018-09-26 06:34:34.943 o.a.s.c.zookeeper-state-factory main-EventThread [WARN] Received event :disconnected::none: with disconnected Writer Zookeeper. 2018-09-26 06:34:35.182 o.a.s.s.o.a.z.ClientCnxn Thread-23-$mastercoord-bg1-executor[2 2]-SendThread(ip-10-9-248-98.us-west-2.compute.internal:2181) [INFO] Opening socket connection to server ip-10-9-248-98.us-west-2.compute.internal/10.9.248.98:2181. Will not attempt to authenticate using SASL (unknown error) 2018-09-26 06:34:35.183 o.a.s.s.o.a.z.ClientCnxn Thread-23-$mastercoord-bg1-executor[2 2]-SendThread(ip-10-9-248-98.us-west-2.compute.internal:2181) [INFO] Socket connection established to ip-10-9-248-98.us-west-2.compute.internal/10.9.248.98:2181, initiating session 2018-09-26 06:34:35.183 o.a.s.s.o.a.z.ClientCnxn Thread-23-$mastercoord-bg1-executor[2 2]-SendThread(ip-10-9-248-98.us-west-2.compute.internal:2181) [INFO] Session establishment complete on server ip-10-9-248-98.us-west-2.compute.internal/10.9.248.98:2181, sessionid = 0xb65a69ab5380782, negotiated timeout = 10000 2018-09-26 06:34:35.183 o.a.s.s.o.a.c.f.s.ConnectionStateManager Thread-23-$mastercoord-bg1-executor[2 2]-EventThread [INFO] State change: RECONNECTED 2018-09-26 06:34:35.787 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Opening socket connection to server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181. Will not attempt to authenticate using SASL (unknown error) 2018-09-26 06:34:35.787 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Socket connection established to ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, initiating session 2018-09-26 06:34:35.789 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Unable to reconnect to ZooKeeper service, session 0xa5beb7fcf46ff82 has expired, closing socket connection 2018-09-26 06:34:35.789 o.a.s.s.o.a.c.f.s.ConnectionStateManager main-EventThread [INFO] State change: LOST 2018-09-26 06:34:35.789 o.a.s.c.zookeeper-state-factory main-EventThread [WARN] Received event :expired::none: with disconnected Writer Zookeeper. 2018-09-26 06:34:35.789 o.a.s.s.o.a.c.ConnectionState main-EventThread [WARN] Session expired event received 2018-09-26 06:34:35.789 o.a.s.s.o.a.z.ZooKeeper main-EventThread [INFO] Initiating client connection, connectString=ec2-52-27-163-101.us-west-2.compute.amazonaws.com:2181,ec2-52-27-236-22.us-west-2.compute.amazonaws.com:2181,ec2-52-24-149-36.us-west-2.compute.amazonaws.com:2181/storm111 sessionTimeout=90000 watcher=org.apache.storm.shade.org.apache.curator.ConnectionState@383fbe82 2018-09-26 06:34:35.802 o.a.s.s.o.a.z.ClientCnxn main-EventThread [INFO] EventThread shut down 2018-09-26 06:34:35.802 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Opening socket connection to server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181. Will not attempt to authenticate using SASL (unknown error) 2018-09-26 06:34:35.802 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Socket connection established to ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, initiating session 2018-09-26 06:34:35.804 o.a.s.s.o.a.z.ClientCnxn main-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Session establishment complete on server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, sessionid = 0xb65a69ab538078c, negotiated timeout = 10000 2018-09-26 06:34:35.805 o.a.s.s.o.a.c.f.s.ConnectionStateManager main-EventThread [INFO] State change: RECONNECTED 2018-09-26 06:34:35.935 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Opening socket connection to server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181. Will not attempt to authenticate using SASL (unknown error) 2018-09-26 06:34:35.935 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Socket connection established to ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, initiating session 2018-09-26 06:34:35.937 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Unable to reconnect to ZooKeeper service, session 0xa5beb7fcf46ff88 has expired, closing socket connection 2018-09-26 06:34:35.937 o.a.c.f.s.ConnectionStateManager Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [INFO] State change: LOST 2018-09-26 06:34:35.937 o.a.c.ConnectionState Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [WARN] Session expired event received 2018-09-26 06:34:35.938 o.a.z.ZooKeeper Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [INFO] Initiating client connection, connectString=zookeeper-prod-1.compass-calix.com:2181,zookeeper-prod-2.compass-calix.com:2181,zookeeper-prod-3.compass-calix.com:2181 sessionTimeout=60000 watcher=org.apache.curator.ConnectionState@5b51a389 2018-09-26 06:34:36.177 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Opening socket connection to server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181. Will not attempt to authenticate using SASL (unknown error) 2018-09-26 06:34:36.177 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [INFO] EventThread shut down 2018-09-26 06:34:36.177 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Socket connection established to ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, initiating session 2018-09-26 06:34:36.179 o.a.z.ClientCnxn Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-SendThread(ip-10-9-248-97.us-west-2.compute.internal:2181) [INFO] Session establishment complete on server ip-10-9-248-97.us-west-2.compute.internal/10.9.248.97:2181, sessionid = 0xb65a69ab538078d, negotiated timeout = 10000 2018-09-26 06:34:36.180 o.a.c.f.s.ConnectionStateManager Thread-279-spout-DataKafkaSpout1537942916091-executor[824 824]-EventThread [INFO] State change: RECONNECTED 2018-09-26 06:34:36.217 c.c.s.r.z.ZNodeTreeListener Curator-TreeCache-0 [INFO] Listen: Add path:/realtime/subscriptions/location/1127582/1033 , timestamp is:

from logs, we will found that , sometimes the worker will execute Full GC exceed more than 30s , and the worker's "topology.message.timeout.secs=30" , so when Full GC executed more than 30s , the other workers can't get the response from this worker and nimbers will disconnect this worker ,