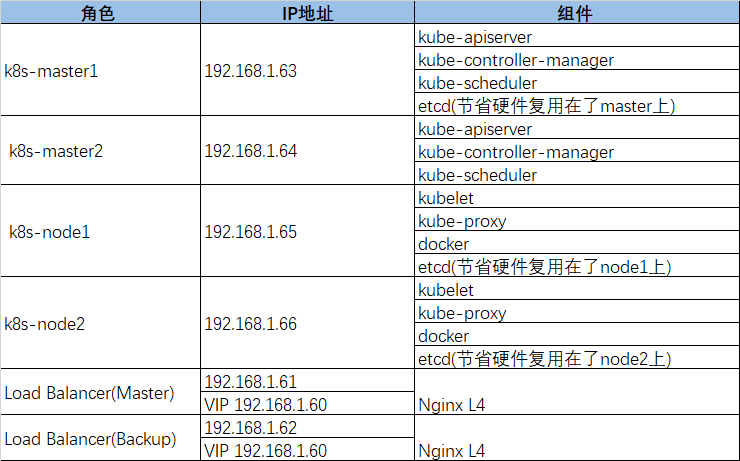

Host inventory and software plan

Initial host configuration

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

swapoff -a

ntpdate time.windows.com
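`swapoff -a` turns swap off only until the next reboot. The sketch below keeps it off across reboots by commenting out the swap entries in fstab. It assumes standard fstab layout, where field 3 is the filesystem type. The function name `disable_swap_fstab` is hypothetical, and it takes the file path as an argument so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# Sketch: comment out active swap entries in an fstab file so that swap
# stays off after a reboot (swapoff -a only affects the running system).
# Hypothetical helper; pass /etc/fstab (or a copy of it) as the argument.
disable_swap_fstab() {
    local fstab="$1" tmp
    tmp=$(mktemp) || return 1
    # Skip comment lines; field 3 of an fstab entry is the filesystem type.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Write back with cat so the original file keeps its owner and mode.
    cat "$tmp" > "$fstab"
    rm -f "$tmp"
}
```

Usage: `swapoff -a && disable_swap_fstab /etc/fstab`. Lines that are already commented out are left alone, so running it twice is harmless.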

Add hostname resolution to /etc/hosts

192.168.1.61 k8s-lb1

192.168.1.62 k8s-lb2

192.168.1.63 k8s-master1

192.168.1.64 k8s-master2

192.168.1.65 k8s-node1

192.168.1.66 k8s-node2
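These entries are needed on every host, so it helps if the step can be re-run safely. Below is a minimal sketch that appends each entry only when its hostname is not already present. The array name `K8S_HOSTS` and the function `add_k8s_hosts` are illustrative, not part of the original setup.

```bash
#!/usr/bin/env bash
# Sketch: append the cluster's host entries to a hosts file, skipping any
# hostname that is already present, so the step can be re-run safely.
# K8S_HOSTS and add_k8s_hosts are hypothetical names.

K8S_HOSTS=(
  "192.168.1.61 k8s-lb1"
  "192.168.1.62 k8s-lb2"
  "192.168.1.63 k8s-master1"
  "192.168.1.64 k8s-master2"
  "192.168.1.65 k8s-node1"
  "192.168.1.66 k8s-node2"
)

add_k8s_hosts() {
    local hosts_file="$1" entry name
    for entry in "${K8S_HOSTS[@]}"; do
        name="${entry#* }"              # hostname part of "IP hostname"
        # -w: match the hostname as a whole word; -q: quiet
        if ! grep -qw -- "$name" "$hosts_file"; then
            echo "$entry" >> "$hosts_file"
        fi
    done
}
```

Usage: `add_k8s_hosts /etc/hosts` on each host. The file argument is required on purpose, so the system file is never edited by accident.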

Issue self-signed certificates with cfssl

# Run cfssl.sh so that this host can use the cfssl commands
[root@ansible ~]# cd /root/k8s/TLS/
[root@ansible TLS]# ls
cfssl  cfssl-certinfo  cfssljson  cfssl.sh  etcd  k8s
[root@ansible TLS]# ./cfssl.sh
# Issue certificates for etcd
[root@ansible TLS]# cd etcd/
[root@ansible etcd]# ls
ca-config.json  ca-csr.json  server-csr.json  generate_etcd_cert.sh
[root@ansible etcd]#
# cfssl first generates a CA from ca-csr.json, then uses that CA together with
# ca-config.json and server-csr.json to generate the certificate files for etcd.
# Both steps are done by the script generate_etcd_cert.sh
[root@ansible etcd]# ./generate_etcd_cert.sh
[root@ansible etcd]# ls *.pem
ca-key.pem  ca.pem  server-key.pem  server.pem
[root@ansible etcd]#
# Issue certificates for kube-apiserver
[root@ansible TLS]# cd k8s/
[root@ansible k8s]# ls
ca-config.json  ca-csr.json  kube-proxy-csr.json  server-csr.json  generate_k8s_cert.sh
[root@ansible k8s]#
# The script generate_k8s_cert.sh:
#   generates a new CA,
#   uses the CA with server-csr.json and ca-config.json to issue the kube-apiserver certificate,
#   uses the CA with ca-config.json and kube-proxy-csr.json to issue the kube-proxy certificate
[root@ansible k8s]# ./generate_k8s_cert.sh
[root@ansible k8s]# ls *.pem
ca-key.pem  ca.pem  kube-proxy-key.pem  kube-proxy.pem  server-key.pem  server.pem
[root@ansible k8s]#
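The contents of generate_etcd_cert.sh and server-csr.json are not shown. What matters is that a cfssl server certificate is only valid for the addresses listed in the CSR's `hosts` array. For etcd, that list must therefore contain every etcd member IP. Likewise, the apiserver CSR needs the master IPs and any load-balancer VIP.

The sketch below renders such a CSR. The CN, key size and `names` values are placeholders, not the original file's values. The `-profile=www` in the trailing comment is an assumption: it must name a profile that actually exists in ca-config.json.

```bash
#!/usr/bin/env bash
# Sketch: render an etcd server-csr.json whose "hosts" (SAN) list holds
# every etcd member IP. CN and "names" values are placeholders, not the
# values from the original generate_etcd_cert.sh (which is not shown).
# render_server_csr is a hypothetical helper name.

render_server_csr() {
    local cn="$1"; shift          # certificate Common Name
    local hosts="" ip
    for ip in "$@"; do
        # Add ", " before every element except the first
        hosts+="${hosts:+, }\"$ip\""
    done
    cat <<EOF
{
  "CN": "$cn",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

# Possible use (assumed profile name "www"; check ca-config.json):
#   render_server_csr etcd 192.168.1.63 192.168.1.65 192.168.1.66 > server-csr.json
#   cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#       -profile=www server-csr.json | cfssljson -bare server
```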

Configure the etcd database

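# Copy etcd.service from the ETCD directory to /usr/lib/systemd/system/ on the etcd hosts.
# Copy the certificates cfssl generated for etcd into etcd/ssl/ of the binary package.
[root@ansible k8s]# cd ETCD/
[root@ansible ETCD]# ls
etcd  etcd.service  etcd.tar.gz
[root@ansible ETCD]# cd etcd/ssl/
[root@ansible ssl]# ls *.pem
ca.pem  server-key.pem  server.pem
[root@ansible ETCD]# cd etcd/cfg/
[root@ansible cfg]# ls
etcd.conf
[root@ansible cfg]#

# Edit /usr/lib/systemd/system/etcd.service on the etcd servers:
#   --cert-file / --key-file: certificate used when clients access the etcd cluster
#   --trusted-ca-file: CA that issued the client certificate
#   --peer-cert-file / --peer-key-file: certificate used for traffic between etcd members
#   --peer-trusted-ca-file: CA that issued the peer certificate
#   The two *trusted-ca-file flags are needed here because the certificates are self-signed.
--cert-file=/opt/etcd/ssl/server.pem
--key-file=/opt/etcd/ssl/server-key.pem
--peer-cert-file=/opt/etcd/ssl/server.pem
--peer-key-file=/opt/etcd/ssl/server-key.pem
--trusted-ca-file=/opt/etcd/ssl/ca.pem
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

# Edit etcd/cfg/etcd.conf:
#   [Member]
#   ETCD_NAME: name of this etcd member
#   ETCD_LISTEN_PEER_URLS: address and port this member listens on for the other members
#   ETCD_LISTEN_CLIENT_URLS: address and port this member listens on for clients
#   [Clustering]
#   ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the other members
#   ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
#   ETCD_INITIAL_CLUSTER: all members of the etcd cluster
#   ETCD_INITIAL_CLUSTER_TOKEN: shared token the members use to recognize each other (etcd-cluster)
#   ETCD_INITIAL_CLUSTER_STATE: "new" when this node joins as part of a new cluster, otherwise "existing"
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.1.63:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.1.63:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.63:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.63:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.1.63:2380,etcd-2=https://192.168.1.65:2380,etcd-3=https://192.168.1.66:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

# After editing both files, copy the whole etcd directory to /opt/ on the etcd hosts.
# Every etcd node needs both the etcd directory and the service file.
# Start the service:
systemctl daemon-reload
systemctl start etcd.service
systemctl enable etcd

Notes:
- If the certificates in etcd/ssl are not updated, the etcd service cannot start.
- When Ansible's copy module copies the etcd directory to the etcd nodes, the files under etcd/bin/ lose their execute permission (hence mode=0755 below).
- The IP addresses and member name in etcd/cfg/etcd.conf must be changed on each node.

ansible etcd -m copy -a "src=/root/k8s/ETCD/etcd.service dest=/usr/lib/systemd/system/"
ansible etcd -m copy -a "src=/root/k8s/ETCD/etcd dest=/opt/ mode=0755"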
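Only the member name and IP change in etcd.conf from node to node. A sketch that prints the file for one member therefore avoids hand-editing three copies. The member list mirrors the three etcd nodes used here. `render_etcd_conf` is a hypothetical helper, not something shipped with etcd.

```bash
#!/usr/bin/env bash
# Sketch: print etcd.conf for one member; only name and IP vary per node.
# The member list mirrors the three etcd nodes in this deployment.
# render_etcd_conf is a hypothetical helper name.

ETCD_MEMBERS="etcd-1=https://192.168.1.63:2380,etcd-2=https://192.168.1.65:2380,etcd-3=https://192.168.1.66:2380"

render_etcd_conf() {
    local name="$1" ip="$2"
    cat <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$ETCD_MEMBERS"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, `render_etcd_conf etcd-2 192.168.1.65 > etcd.conf` produces the file for the second member. Building the cluster list from one variable also avoids stray spaces after `=` or `,`, which etcd does not accept in URLs.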

Configure the Master node

# (1) Copy the service files of kube-apiserver, kube-controller-manager and kube-scheduler
#     to /usr/lib/systemd/system/ on the Master node.
# (2) Copy the certificate and key cfssl generated for kube-apiserver into kubernetes/ssl/.
# (3) bin/ holds the executables. To upgrade the master components, download a newer
#     release from GitHub and replace these 4 files.
# (4) Copy bin/kubectl to /usr/local/bin/ (on the PATH) on the master node so kubectl
#     can be used there.
[root@ansible ~]# cd /root/k8s/MASTER/
[root@ansible MASTER]# ls
kube-apiserver.service  kube-controller-manager.service  kubernetes  kube-scheduler.service
[root@ansible MASTER]# cd kubernetes/ssl/
[root@ansible ssl]# ls
ca-key.pem  ca.pem  server-key.pem  server.pem
[root@ansible ssl]#
# Directory layout of kubernetes/:
[root@ansible kubernetes]# tree .
.
├── bin
│   ├── kube-apiserver
│   ├── kube-controller-manager
│   ├── kubectl
│   └── kube-scheduler
├── cfg
│   ├── kube-apiserver.conf
│   ├── kube-controller-manager.conf
│   ├── kube-scheduler.conf
│   └── token.csv
├── logs
└── ssl
    ├── ca-key.pem
    ├── ca.pem
    ├── server-key.pem
    └── server.pem
[root@ansible kubernetes]#

# (5) Edit the kube-apiserver configuration file:
#   --logtostderr, --v, --log-dir: log level and Kubernetes log directory
#   --etcd-servers: etcd endpoints the apiserver connects to
#   --bind-address, --secure-port: address and port the apiserver listens on
#   --advertise-address: address advertised to the nodes for reaching this apiserver
#   --allow-privileged: allow privileged containers
#   --service-cluster-ip-range: Service IPs are taken from 10.0.0.0/24
#   --enable-admission-plugins: admission plugins to enable (advanced Kubernetes features)
#   --authorization-mode: requests from other components are authorized with RBAC and Node
#   --enable-bootstrap-token-auth, --token-auth-file: token file used to grant nodes permissions
#   --service-node-port-range: port range available when exposing a Service
#   --kubelet-client-certificate, --kubelet-client-key: certificate the apiserver uses to access kubelet
#   --tls-cert-file, --tls-private-key-file, --client-ca-file: certificates for the apiserver's HTTPS endpoint
#   --service-account-key-file: key used for ServiceAccount tokens
#   --etcd-cafile, --etcd-certfile, --etcd-keyfile: certificates the apiserver uses to access etcd
#   --audit-log-*: audit logging of requests to the apiserver
KUBE_APISERVER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--etcd-servers=https://192.168.1.63:2379,https://192.168.1.65:2379,https://192.168.1.66:2379 \
--bind-address=192.168.1.63 \
--secure-port=6443 \
--advertise-address=192.168.1.63 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth=true \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-32767 \
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

# (6) Edit the kube-controller-manager configuration file:
#   --logtostderr, --v, --log-dir: log level and directory
#   --leader-elect: enable leader election, so only one instance is active when several run
#   --master: reach the apiserver on local port 8080
#   --address: listen only on 127.0.0.1; controller-manager assists the apiserver and
#     does not need to talk to outside clients
#   --allocate-node-cidrs: allocate a Pod CIDR to each node, which CNI plugins such as flannel rely on
#   --cluster-cidr: the CNI network must use this range, 10.244.0.0/16
#   --service-cluster-ip-range: Service IP range 10.0.0.0/24
#   --cluster-signing-cert-file, --cluster-signing-key-file: when a node joins the cluster its
#     kubelet certificate is issued automatically by controller-manager with this CA
#   --root-ca-file, --service-account-private-key-file: CA and key used for ServiceAccount authentication
#   --experimental-cluster-signing-duration: kubelet certificates issued to each node are valid for 10 years
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect=true \
--master=127.0.0.1:8080 \
--address=127.0.0.1 \
--allocate-node-cidrs=true \
--cluster-cidr=10.244.0.0/16 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"

# (7) Edit the kube-scheduler configuration file:
#   log level and directory; leader election; connect to the local apiserver at
#   127.0.0.1:8080; listen on the local address only
KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect \
--master=127.0.0.1:8080 \
--address=127.0.0.1"

# (8) Copy the kubernetes directory to /opt/ on the Master host. This completes the
#     installation of kube-apiserver, kube-controller-manager and kube-scheduler.
# (9) Start the three services and check the logs
systemctl start kube-apiserver kube-controller-manager kube-scheduler
ps -ef | grep -E 'kube-apiserver|kube-controller-manager|kube-scheduler'
less /opt/kubernetes/logs/kube-apiserver.INFO
less /opt/kubernetes/logs/kube-controller-manager.INFO
less /opt/kubernetes/logs/kube-scheduler.INFO
# (10) Enable the services at boot and check the component status
#      (bin/ also contains kubectl, which has no service, so list the services explicitly)
for i in kube-apiserver kube-controller-manager kube-scheduler; do systemctl enable $i; done
kubectl get cs
# (11) Enable TLS Bootstrapping
#   When worker nodes join the cluster later, certificates are issued to their kubelets automatically.
#   --token-auth-file in the apiserver configuration points at /opt/kubernetes/cfg/token.csv.
#   token.csv format:
#     token,user,uid,group
#   The token value can also be generated by hand:
#     head -c 16 /dev/urandom | od -An -t x | tr -d ' '
#   Note: the token in token.csv must be identical to the one in
#   bootstrap.kubeconfig on the nodes.
[root@master1 cfg]# more token.csv
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
[root@master1 cfg]#
# Grant permissions to the kubelet-bootstrap user: bind it to the
# system:node-bootstrapper ClusterRole so that it may request certificates.
[root@master1 cfg]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
[root@master1 cfg]#
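The TLS Bootstrapping step depends on two things: a random token, and that same token appearing in both token.csv and bootstrap.kubeconfig. The sketch below wraps the token command shown above, writes the token.csv line used in this deployment, and checks the two files agree. The function names are hypothetical. The kubeconfig check simply reads the first `token:` line and assumes the file has only one user.

```bash
#!/usr/bin/env bash
# Sketch: create a bootstrap token, write the matching token.csv line, and
# check that bootstrap.kubeconfig carries the same token.
# token.csv format: token,user,uid,"group"
# gen_bootstrap_token / write_token_csv / token_matches are hypothetical names.

# 16 random bytes printed as 32 lowercase hex characters (no spaces/newline).
gen_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Write the single token.csv line used in this deployment.
write_token_csv() {
    local token="$1" file="$2"
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token" > "$file"
}

# Succeed only if the first field of token.csv equals the first "token:"
# value found in the kubeconfig file.
token_matches() {
    local csv="$1" kubeconfig="$2" a b
    a=$(head -n 1 "$csv" | cut -d, -f1)
    b=$(awk '$1 == "token:" { print $2; exit }' "$kubeconfig")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage: on the master, `write_token_csv "$(gen_bootstrap_token)" /opt/kubernetes/cfg/token.csv`. Once the node's bootstrap.kubeconfig has been edited, copy both files to one host and run `token_matches token.csv bootstrap.kubeconfig && echo ok`.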

Deploy the worker Nodes

# (1) Copy the binaries in the docker directory to /usr/bin/ on the Node, and copy
#     docker's configuration file daemon.json to /etc/docker/ on the Node.
# (2) Copy the service files of kubelet, kube-proxy and docker to
#     /usr/lib/systemd/system/ on the Node.
# (3) Copy the certificates cfssl generated for kube-proxy into kubernetes/ssl/.
# (4) Directory layout of kubernetes/ for the Node:
[root@ansible NODE]# tar -zxvf k8s-node.tar.gz
[root@ansible NODE]# ls
daemon.json  docker-18.09.6.tgz  k8s-node.tar.gz  kube-proxy.service
docker       docker.service      kubelet.service  kubernetes
[root@ansible NODE]# tar -zxvf docker-18.09.6.tgz
[root@ansible NODE]# cd docker/
[root@ansible docker]# ls
containerd       ctr     dockerd      docker-proxy
containerd-shim  docker  docker-init  runc
[root@ansible docker]# cd /root/k8s/NODE/kubernetes
[root@ansible kubernetes]# tree .
.
├── bin
│   ├── kubelet
│   └── kube-proxy
├── cfg
│   ├── bootstrap.kubeconfig
│   ├── kubelet.conf
│   ├── kubelet-config.yml
│   ├── kube-proxy.conf
│   ├── kube-proxy-config.yml
│   └── kube-proxy.kubeconfig
├── logs
└── ssl
    ├── ca.pem
    ├── kube-proxy-key.pem
    └── kube-proxy.pem
[root@ansible kubernetes]#

# (5) Edit kubelet.conf:
#   --logtostderr, --v, --log-dir: log level and log directory
#   --hostname-override: name of this node, Node1
#   --network-plugin: use CNI as the network plugin
#   --kubeconfig: path of kubelet.kubeconfig
#   --bootstrap-kubeconfig: path of bootstrap.kubeconfig
#   --config: path of kubelet-config.yml
#   --cert-dir: the automatically issued certificates are stored in /opt/kubernetes/ssl
#   --pod-infra-container-image: image (pause-amd64:3.0) used for each Pod's infrastructure container
KUBELET_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--hostname-override=Node1 \
--network-plugin=cni \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet-config.yml \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

# (6) Edit bootstrap.kubeconfig.
#   Bootstrapping exists to issue kubelet certificates automatically to nodes joining
#   the k8s cluster, because every component that connects to the apiserver needs a
#   certificate. This file can be generated with the kubectl config command.
#   certificate-authority: the CA certificate used is /opt/kubernetes/ssl/ca.pem
#   server: address of the Master node, 192.168.1.63:6443
#   token: must be identical to the token in /opt/kubernetes/cfg/token.csv
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.1.63:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: c47ffb939f5ca36231d9e3121a252940

# (7) Edit kubelet-config.yml:
#   kind, apiVersion: the object is a KubeletConfiguration of this API version
#   address, port: address and port kubelet listens on
#   cgroupDriver: must match the "Cgroup Driver" shown by docker info
#   clusterDNS: in-cluster DNS address that kubelet configures for Pods
#   failSwapOn: false lets kubelet start even while swap is enabled
#   authentication, authorization: anonymous access is disabled; client certificates
#     are verified against ca.pem, and webhook authentication/authorization is used
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

# (8) Edit kube-proxy.kubeconfig:
#   certificate-authority: CA certificate kube-proxy uses when connecting to the apiserver,
#     /opt/kubernetes/ssl/ca.pem
#   client-certificate, client-key: kube-proxy's own certificate files,
#     /opt/kubernetes/ssl/kube-proxy.pem and /opt/kubernetes/ssl/kube-proxy-key.pem
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://192.168.1.63:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: /opt/kubernetes/ssl/kube-proxy.pem
    client-key: /opt/kubernetes/ssl/kube-proxy-key.pem

# (9) Edit kube-proxy-config.yml (the configuration file for kube-proxy):
#   address: kube-proxy listens on 0.0.0.0
#   metricsBindAddress: exposes metrics on 0.0.0.0:10249 for monitoring systems
#   hostnameOverride: node name registered in the k8s cluster
#   clusterCIDR: the Pod network CIDR, the same as --cluster-cidr of kube-controller-manager
#   mode, ipvs: use IPVS with round-robin ("rr") scheduling
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
address: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: Node1
clusterCIDR: 10.244.0.0/16
mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true

# (10) Copy the kubernetes directory to /opt on the node.
# (11) Start the services and check the logs
systemctl start kubelet kube-proxy
systemctl enable kubelet
systemctl enable kube-proxy
less /opt/kubernetes/logs/kubelet.INFO
less /opt/kubernetes/logs/kube-proxy.INFO
# (12) On the master, view the certificate request the node sent as kubelet-bootstrap
#      and approve it manually.
# (13) On Node1, view the kubelet certificate issued by the master. kubelet.kubeconfig
#      is generated automatically at the same time; kubelet uses it to connect to the apiserver.
[root@master1 ~]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-XcxFwsj3qE6-c9ayjPe2sHehWiwepsquOBIGyfP5orQ   27m   kubelet-bootstrap   Pending
[root@master1 ~]#
[root@master1 ~]# kubectl certificate approve node-csr-XcxFwsj3qE6-c9ayjPe2sHehWiwepsquOBIGyfP5orQ
[root@Node1 ~]# cd /opt/kubernetes/ssl/
[root@Node1 ssl]# ls kubelet*
kubelet-client-2020-08-30-09-30-55.pem  kubelet-client-current.pem  kubelet.crt  kubelet.key
[root@Node1 ~]# ls /opt/kubernetes/cfg/kubelet.kubeconfig
/opt/kubernetes/cfg/kubelet.kubeconfig
[root@Node1 ~]#
# (14) Copy the kubernetes directory to /opt on the second node
#      (with --hostname-override and hostnameOverride changed to Node2).
# (15) Start the services and check the logs.
# (16) On the master, approve the second node's certificate manually.
# (17) On the master, view the node information (both nodes stay NotReady
#      until the CNI network is deployed).
[root@master1 ~]# kubectl get node
NAME    STATUS     ROLES    AGE   VERSION
node1   NotReady   <none>   59m   v1.16.0
node2   NotReady   <none>   45s   v1.16.0
[root@master1 ~]#
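Step (14) reuses the node directory on the second node, but the node name must differ or both kubelets register under the same name. The sketch below rewrites the name in the two files that carry it. It assumes the copy is the pristine one from the ansible host, so it has no kubelet.kubeconfig or kubelet-client-*.pem yet. It also assumes the file names kubelet.conf and kube-proxy-config.yml from the tree above. `set_node_name` is a hypothetical helper and needs GNU sed for `-i`.

```bash
#!/usr/bin/env bash
# Sketch: change the node name in a copy of kubernetes/cfg for another node.
# Assumes a pristine copy (no kubelet.kubeconfig / issued kubelet certs yet).
# set_node_name is a hypothetical helper; requires GNU sed (-i, -E).
set_node_name() {
    local cfg_dir="$1" old="$2" new="$3"
    # kubelet.conf: --hostname-override=<old> followed by whitespace or end of line
    sed -i -E "s/--hostname-override=$old([[:space:]]|\$)/--hostname-override=$new\1/" \
        "$cfg_dir/kubelet.conf"
    # kube-proxy-config.yml: the whole hostnameOverride line
    sed -i "s/^hostnameOverride: $old\$/hostnameOverride: $new/" \
        "$cfg_dir/kube-proxy-config.yml"
}
```

Usage: `set_node_name /opt/kubernetes/cfg Node1 Node2` on the second node, before starting kubelet and kube-proxy.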

Deploy the CNI network

https://github.com/containernetworking/plugins/releases

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

# (1) On the node, create the directories CNI needs.
# (2) Copy the CNI plugin tarball to /opt/cni/bin/ on the worker node.
# (3) After extracting it, the node can use third-party CNI network plugins.
# (4) Download the flannel manifest kube-flannel.yaml.
# (5) Install flannel from the manifest.
# (6) flannel runs as containers (Pods); check them with the command below.
# (7) Check whether the nodes are now Ready.
# (8) Repeat the node-side steps on the other nodes.
[root@Node1 ~]# mkdir -p /opt/cni/bin /etc/cni/net.d
[root@Node1 ~]# cd /opt/cni/bin/
[root@Node1 bin]# tar -zxvf cni-plugins-linux-amd64-v0.8.2.tgz
[root@master1 ~]# kubectl apply -f kube-flannel.yaml
[root@master1 ~]# kubectl get pods -n kube-system
NAME                          READY   STATUS    RESTARTS   AGE
kube-flannel-ds-amd64-h9dg6   1/1     Running   0          42m
kube-flannel-ds-amd64-w7b9x   1/1     Running   0          42m
[root@master1 ~]# kubectl get node
NAME    STATUS   ROLES    AGE     VERSION
node1   Ready    <none>   6h9m    v1.16.0
node2   Ready    <none>   5h10m   v1.16.0
[root@master1 ~]#
# Excerpt of kube-flannel.yaml:
#   cni-conf.json is the CNI network configuration; the install-cni init container
#   copies it into /etc/cni/net.d/ as 10-flannel.conflist.
#   net-conf.json is the network flannel uses. "Network" must match --cluster-cidr in
#   /opt/kubernetes/cfg/kube-controller-manager.conf, and the encapsulation backend is vxlan.
#   The DaemonSet runs one flannel process on every worker node, each maintaining its own
#   routing table. It only runs on linux/amd64 nodes and uses the host network
#   (hostNetwork: true, i.e. the network of Node1 and Node2).
#   Both containers use the image lizhenliang/flannel:v0.11.0-amd64.
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "cniVersion": "0.2.0",
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: lizhenliang/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: lizhenliang/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
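The flannel `Network` must equal `--cluster-cidr` in kube-controller-manager.conf. A mismatch is easy to miss because both values live in different files on different hosts. The following sketch extracts both values with grep and compares them. It assumes the simple `"Network": "x.x.x.x/n"` and `--cluster-cidr=...` forms shown in this section, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compare flannel's "Network" (net-conf.json inside kube-flannel.yaml)
# with --cluster-cidr in kube-controller-manager.conf.
# Function names are hypothetical; assumes the value formats shown above.

flannel_network() {
    # First "Network": "<cidr>" value in the manifest
    grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*: *"\(.*\)"/\1/'
}

cluster_cidr() {
    # Value of the first --cluster-cidr=... flag (stops at space, backslash or quote)
    grep -o -- '--cluster-cidr=[^ \\"]*' "$1" | head -n 1 | cut -d= -f2
}

check_pod_cidr() {
    local net cidr
    net=$(flannel_network "$1")
    cidr=$(cluster_cidr "$2")
    if [ -n "$net" ] && [ "$net" = "$cidr" ]; then
        echo "OK: $net"
    else
        echo "MISMATCH: flannel=$net cluster-cidr=$cidr" >&2
        return 1
    fi
}
```

Usage: `check_pod_cidr kube-flannel.yaml /opt/kubernetes/cfg/kube-controller-manager.conf`. Copy the manifest to the master first, or copy the conf file to where the manifest is.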

Authorize kube-apiserver to access kubelet

# (1) For security, kubelet forbids anonymous access; requests must be authorized.
#     apiserver-to-kubelet-rbac.yaml grants the apiserver permission to access kubelet.
# (2) After authorization, Pod logs can be viewed from the master node.
# (3) Each node now has a flannel network interface (flannel.1).
# (4) Create a Pod on the master node and check its status; wait until the
#     status changes from ContainerCreating to Running.
# (5) Expose the web service provided by this Pod.
# (6) Check the Pod and Service information, then open http://192.168.1.65:31513/
#     in a browser to see the nginx welcome page.
[root@master1 ~]# kubectl apply -f apiserver-to-kubelet-rbac.yaml
[root@master1 ~]# kubectl logs -n kube-system kube-flannel-ds-amd64-h9dg6
[root@Node1 ~]# ifconfig flannel.1
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 10.244.0.0  netmask 255.255.255.255  broadcast 0.0.0.0
        ether f6:2a:44:e5:f1:8f  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
[root@Node1 ~]#
[root@master1 ~]# kubectl create deployment web --image=nginx
[root@master1 ~]# kubectl get pods -o wide
NAME                  READY   STATUS    RESTARTS   AGE     IP           NODE
web-d86c95cc9-v6ws9   1/1     Running   0          9m30s   10.244.0.2   node1
[root@master1 ~]#
[root@master1 ~]# kubectl expose deployment web --port=80 --type=NodePort
[root@master1 ~]# kubectl get pods,svc
NAME                      READY   STATUS    RESTARTS   AGE
pod/web-d86c95cc9-v6ws9   1/1     Running   0          15m

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        2d
service/web          NodePort    10.0.0.98    <none>        80:31513/TCP   49s
[root@master1 ~]#
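The browser URL comes from the PORT(S) column: `80:31513/TCP` means Service port 80 is published as NodePort 31513 on every node. That is why any node IP works, 192.168.1.65 included. The small sketch below turns that column into a URL. `nodeport_url` is a hypothetical helper. For scripts, `kubectl get svc web -o jsonpath='{.spec.ports[0].nodePort}'` returns the port directly and is more robust than parsing table output.

```bash
#!/usr/bin/env bash
# Sketch: turn a NodePort PORT(S) value such as "80:31513/TCP" into a URL on
# a node IP. nodeport_url is a hypothetical helper name.
nodeport_url() {
    local node_ip="$1" ports="$2" nodeport
    case "$ports" in
        *:*) ;;                                  # "port:nodePort/proto"
        *) echo "not a NodePort: $ports" >&2; return 1 ;;
    esac
    nodeport="${ports#*:}"                       # "31513/TCP"
    nodeport="${nodeport%%/*}"                   # "31513"
    echo "http://${node_ip}:${nodeport}/"
}
```

Usage: `curl -s "$(nodeport_url 192.168.1.65 80:31513/TCP)"` should return the nginx welcome page.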

Deploy Dashboard

https://github.com/kubernetes/dashboard

https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

# (1) Deploy the Dashboard with dashboard.yaml.
# (2) Check the Pods in the kubernetes-dashboard namespace; their status must
#     change from ContainerCreating to Running.
# (3) Check the published port, then open port 30001 on any Node in a browser:
#     https://192.168.1.65:30001/
# (4) dashboard-adminuser.yaml creates a ServiceAccount (admin-user) and binds it to
#     cluster-admin; the token of this ServiceAccount is used to log in.
# (5) Get the token value with the shell command below and paste it into the browser.
# (6) Scale the Pods serving the web application through the UI: in the left
#     navigation bar go to Workloads --> Deployments, click the "..." after the web
#     deployment on the right, choose Scale and set the number of replicas to 3.
[root@master1 ~]# kubectl apply -f dashboard.yaml
[root@master1 ~]# kubectl get pods -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-566cddb686-mdnf8   1/1     Running   0          17m
kubernetes-dashboard-7b5bf5d559-4hjbr        1/1     Running   0          17m
[root@master1 ~]#
[root@master1 ~]# kubectl get pods,svc -n kubernetes-dashboard
NAME                                             READY   STATUS    RESTARTS   AGE
pod/dashboard-metrics-scraper-566cddb686-mdnf8   1/1     Running   0          19m
pod/kubernetes-dashboard-7b5bf5d559-4hjbr        1/1     Running   0          19m

NAME                                TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
service/dashboard-metrics-scraper   ClusterIP   10.0.0.186   <none>        8000/TCP        19m
service/kubernetes-dashboard        NodePort    10.0.0.211   <none>        443:30001/TCP   19m
[root@master1 ~]#
[root@master1 ~]# kubectl apply -f dashboard-adminuser.yaml
[root@master1 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
[root@master1 ~]#
[root@master1 ~]# kubectl get pods
NAME                  READY   STATUS    RESTARTS   AGE
web-d86c95cc9-v6ws9   1/1     Running   0          91m
[root@master1 ~]#
[root@master1 ~]# kubectl get pods
NAME                  READY   STATUS    RESTARTS   AGE
web-d86c95cc9-srqb2   1/1     Running   0          2m56s
web-d86c95cc9-v6ws9   1/1     Running   0          97m
web-d86c95cc9-z4wzd   1/1     Running   0          2m56s
[root@master1 ~]#
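`kubectl describe secret` prints several fields. Only the value on the `token:` line is pasted into the login page. A minimal filter for that is sketched below; it reads the describe output on stdin. `extract_token` is a hypothetical name. An alternative is `kubectl -n kubernetes-dashboard get secret <name> -o jsonpath='{.data.token}' | base64 -d`, which decodes the stored token directly.

```bash
#!/usr/bin/env bash
# Sketch: print only the token value from `kubectl describe secret` output
# read on stdin. extract_token is a hypothetical helper name.
extract_token() {
    # The Data section contains a line like: "token:      eyJhbGciOi..."
    awk '$1 == "token:" { print $2; exit }'
}
```

Usage: append `| extract_token` to the `kubectl -n kubernetes-dashboard describe secret ...` command above.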

Deploy CoreDNS

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

# (1) Check the current resources, in preparation for testing DNS.
# (2) Edit coredns.yaml; applying this file deploys CoreDNS automatically.
# (3) Create a busybox Pod (bs.yaml) to test DNS.
[root@master1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        2d1h
web          NodePort    10.0.0.98    <none>        80:31513/TCP   91m
[root@master1 ~]#
[root@master1 ~]# vim coredns.yaml
# Excerpt of the kube-dns Service in coredns.yaml. clusterIP is the IP of the DNS
# Service: Pods send their DNS queries to it (kubelet writes it into each Pod's
# /etc/resolv.conf). It must match clusterDNS in
# /opt/kubernetes/cfg/kubelet-config.yml on the Nodes.
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
[root@Node1 ~]# sed -n '7,8p' /opt/kubernetes/cfg/kubelet-config.yml
clusterDNS:
- 10.0.0.2
[root@Node1 ~]#
[root@master1 ~]# kubectl apply -f coredns.yaml
[root@master1 ~]# kubectl get pods -n kube-system
NAME                          READY   STATUS    RESTARTS   AGE
coredns-6d8cfdd59d-td2wf      1/1     Running   0          115s
kube-flannel-ds-amd64-h9dg6   1/1     Running   0          3h51m
kube-flannel-ds-amd64-w7b9x   1/1     Running   0          3h51m
[root@master1 ~]#
[root@master1 ~]# kubectl apply -f bs.yaml
[root@master1 ~]# kubectl get pod
NAME                  READY   STATUS    RESTARTS   AGE
busybox               1/1     Running   0          78s
web-d86c95cc9-srqb2   1/1     Running   0          34m
web-d86c95cc9-v6ws9   1/1     Running   0          129m
web-d86c95cc9-z4wzd   1/1     Running   0          34m
[root@master1 ~]#
[root@master1 ~]# kubectl exec -it busybox sh
/ # ping 10.0.0.98
PING 10.0.0.98 (10.0.0.98): 56 data bytes
64 bytes from 10.0.0.98: seq=0 ttl=255 time=0.116 ms
64 bytes from 10.0.0.98: seq=1 ttl=255 time=0.080 ms
/ # ping web
PING web (10.0.0.98): 56 data bytes
64 bytes from 10.0.0.98: seq=0 ttl=255 time=0.043 ms
64 bytes from 10.0.0.98: seq=1 ttl=255 time=0.062 ms
/ # nslookup kubernetes
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local
/ #
/ # nslookup web
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      web
Address 1: 10.0.0.98 web.default.svc.cluster.local
/ #
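Besides matching clusterDNS, the CoreDNS clusterIP has to fall inside the apiserver's `--service-cluster-ip-range` (10.0.0.0/24 here). Otherwise the Service cannot be created with that address. The sketch below checks IPv4 CIDR membership with bash integer arithmetic. It assumes plain dotted-quad input without leading zeros, and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: IPv4 CIDR membership test, e.g. is the CoreDNS clusterIP 10.0.0.2
# inside --service-cluster-ip-range 10.0.0.0/24?
# ip_to_int / ip_in_cidr are hypothetical helpers; assumes dotted-quad input
# without leading zeros (bash would read "08" as an invalid octal number).

ip_to_int() {
    local IFS=.
    local a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}"
    # Network mask with the top <bits> bits set (bash arithmetic is 64-bit)
    local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Usage: `ip_in_cidr 10.0.0.2 10.0.0.0/24 && echo "clusterIP is inside the Service range"`. The same check against 10.244.0.0/16 confirms a Pod IP such as 10.244.0.2 is in the flannel network.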

Configure the load balancer

# (1) On the LB hosts, install nginx 1.16 from the rpm package.
# (2) Add a stream {} block above http {} in the configuration file.
# (3) Start the nginx service.
# (4) Install keepalived.
# (5) Delete the default keepalived.conf, put the template file in /etc/keepalived/
#     and edit the configuration.
# (6) Make check_nginx.sh executable.
# (7) Start the keepalived service and check the process.
# (8) Configure LB2 the same way.
# (9) Check the VIP on LB1 and LB2 with ip addr show.
[root@LB1 ~]# rpm -ivh http://nginx.org/packages/rhel/7/x86_64/RPMS/nginx-1.16.1-1.el7.ngx.x86_64.rpm
[root@LB1 ~]# vim /etc/nginx/nginx.conf
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;
    upstream k8s-apiserver {
        server 192.168.1.63:6443;
        server 192.168.1.64:6443;
    }
    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}
[root@LB1 ~]# systemctl start nginx
[root@LB1 ~]# systemctl enable nginx
[root@LB1 ~]# yum -y install keepalived.x86_64
[root@LB1 ~]# cd /etc/keepalived/
[root@LB1 keepalived]# cat keepalived.conf
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}
# Between global_defs {} and vrrp_instance {}, add the failover check script:
# check_nginx.sh decides whether the nginx service is healthy.
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}
vrrp_instance VI_1 {
    # MASTER on this node; use BACKUP on the backup node
    state MASTER
    # enable the VIP on eth0
    interface eth0
    # VRRP router ID; unique per VRRP instance and identical on LB1 and LB2
    virtual_router_id 51
    # priority; set 90 on the backup server
    priority 100
    # interval between VRRP advertisements, 1 second by default
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.60/24
    }
    # health check added to the instance: when check_nginx returns non-zero,
    # nginx is considered faulty and keepalived fails over
    track_script {
        check_nginx
    }
}
[root@LB1 keepalived]#
[root@LB1 keepalived]# chmod +x check_nginx.sh
[root@LB1 keepalived]# cat check_nginx.sh
#!/bin/bash
# Check whether an nginx process exists: exit 0 if it does, otherwise exit 1
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
if [ "$count" -eq 0 ];then
    exit 1
else
    exit 0
fi
[root@LB1 keepalived]#
[root@LB1 ~]# systemctl start keepalived
[root@LB1 ~]# systemctl enable keepalived
[root@LB1 ~]# ps -ef | grep keep
[root@LB1 ~]# ip add
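Step (8) configures LB2 the same way. In this setup the vrrp_instance blocks on the two LBs differ only in `state` (MASTER/BACKUP) and `priority` (100/90). The sketch below prints the block from those two parameters, so both hosts are guaranteed to share the router ID, VIP and password. The interface name eth0, router ID 51 and VIP 192.168.1.60/24 are copied from the configuration above and may differ on other networks. `render_vrrp_instance` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: print the vrrp_instance block for LB1 (MASTER) or LB2 (BACKUP).
# eth0, router id 51 and VIP 192.168.1.60/24 are the values used above;
# render_vrrp_instance is a hypothetical helper name.
render_vrrp_instance() {
    local state="$1" priority="$2"
    cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface eth0
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.60/24
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

Usage: `render_vrrp_instance MASTER 100` on LB1 and `render_vrrp_instance BACKUP 90` on LB2. Paste the output after the `global_defs` and `vrrp_script` blocks in keepalived.conf.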

Change the API server address used by the Nodes

# (1) On both Nodes, change the apiserver address in bootstrap.kubeconfig,
#     kubelet.kubeconfig and kube-proxy.kubeconfig in one go, to the VIP 192.168.1.60.
# (2) Restart kubelet and kube-proxy on the Nodes and check the logs.
# (3) Verify that the VIP works: on a node, take the token value from
#     /opt/kubernetes/cfg/token.csv on the master and query the apiserver
#     version with curl -k.
[root@Node1 ~]# cd /opt/kubernetes/cfg/
[root@Node1 cfg]# grep 192.168.1.63 ./*
./bootstrap.kubeconfig:    server: https://192.168.1.63:6443
./kubelet.kubeconfig:    server: https://192.168.1.63:6443
./kube-proxy.kubeconfig:    server: https://192.168.1.63:6443
[root@Node1 cfg]#
[root@Node1 cfg]# sed -i 's#192.168.1.63#192.168.1.60#' ./*
[root@Node2 ~]# cd /opt/kubernetes/cfg/
[root@Node2 cfg]# sed -i 's#192.168.1.63#192.168.1.60#' ./*
[root@Node1 cfg]# systemctl restart kubelet
[root@Node1 cfg]# systemctl restart kube-proxy
[root@Node2 cfg]# systemctl restart kubelet
[root@Node2 cfg]# systemctl restart kube-proxy
[root@LB1 ~]# tail -f /var/log/nginx/k8s-access.log
192.168.1.65 192.168.1.63:6443 - [31/Aug/2020:15:05:16 +0800] 200 1155
192.168.1.65 192.168.1.64:6443 - [31/Aug/2020:15:05:16 +0800] 200 1156
192.168.1.66 192.168.1.63:6443 - [31/Aug/2020:15:12:18 +0800] 200 1156
192.168.1.66 192.168.1.63:6443 - [31/Aug/2020:15:12:18 +0800] 200 1155
[root@Node1 ~]# curl -k --header "Authorization: Bearer c47ffb939f5ca36231d9e3121a252940" https://192.168.1.60:6443/version
{
  "major": "1",
  "minor": "16",
  "gitVersion": "v1.16.0",
  "gitCommit": "2bd9643cee5b3b3a5ecbd3af49d09018f0773c77",
  "gitTreeState": "clean",
  "buildDate": "2019-09-18T14:27:17Z",
  "goVersion": "go1.12.9",
  "compiler": "gc",
  "platform": "linux/amd64"
}
[root@Node1 ~]#
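The `sed ... ./*` above edits every file in cfg/. A narrower variant changes only the `server:` line of the `*.kubeconfig` files and then reports any file that still mentions the old address. The sketch below does that; the function name is hypothetical. Dots in the old address are turned into `[.]` so that sed matches them literally.

```bash
#!/usr/bin/env bash
# Sketch: point the server: line of every *.kubeconfig in a directory at a
# new apiserver address (e.g. the VIP), then report leftovers.
# point_kubeconfigs_to is a hypothetical helper; requires GNU sed (-i).
point_kubeconfigs_to() {
    local dir="$1" old="$2" new="$3" f rc=0
    local old_re="${old//./[.]}"        # 192.168.1.63 -> 192[.]168[.]1[.]63
    for f in "$dir"/*.kubeconfig; do
        [ -e "$f" ] || continue         # no match: glob stays literal
        sed -i "s#server: https://${old_re}:6443#server: https://${new}:6443#" "$f"
        if grep -qF "$old" "$f"; then
            echo "still references $old: $f" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `point_kubeconfigs_to /opt/kubernetes/cfg 192.168.1.63 192.168.1.60 && systemctl restart kubelet kube-proxy` on each Node.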