0. Bind the kubelet-bootstrap user to the system cluster role; run from /opt/kubernetes/cfg/ [master]

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

# Delete the kubelet-bootstrap binding created above [only if it was created incorrectly or kubelet fails to start]

kubectl delete clusterrolebinding kubelet-bootstrap

1. Create a script that generates the kubeconfig files, with the following content [master]

vi /opt/kubernetes/cfg/kubernetes.sh

# Create the kubelet bootstrapping kubeconfig
# Must match the token in /opt/kubernetes/cfg/token.csv
BOOTSTRAP_TOKEN=b2h326jj2sns6bryahz4i1m6cklesj9o
# apiserver address
KUBE_APISERVER="https://172.17.217.232:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client credentials
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#-------------------------------------------------------------------
# Create the kube-proxy kubeconfig
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
  --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
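The script hard-codes BOOTSTRAP_TOKEN, so it has to be kept in sync with token.csv by hand. A minimal sketch of reading it from the file instead, assuming the usual static token file layout (token,user,uid,"group") with the bootstrap token on the first line. The function name read_bootstrap_token is hypothetical and not part of the original script.

```shell
# Print the first comma-separated field of the first line of a token file.
# Assumption: the file uses the layout token,user,uid,"group".
read_bootstrap_token() {
  token_file="$1"
  head -n 1 "$token_file" | cut -d',' -f1
}
```

Inside kubernetes.sh this could replace the literal assignment with `BOOTSTRAP_TOKEN=$(read_bootstrap_token /opt/kubernetes/cfg/token.csv)`.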

2. Set permissions, run the script, and generate the configs [master]

# Set permissions

chmod 777 /opt/kubernetes/cfg/kubernetes.sh

# Run the script from /opt/kubernetes/cfg; it writes bootstrap.kubeconfig and kube-proxy.kubeconfig into the current directory

cd /opt/kubernetes/cfg && ./kubernetes.sh

3. Send the generated config files to the Node hosts [master]

# Copy the generated configs to node 226

scp /opt/kubernetes/cfg/{bootstrap,kube-proxy}.kubeconfig root@172.17.217.226:/opt/kubernetes/cfg

# Copy the generated configs to node 228

scp /opt/kubernetes/cfg/{bootstrap,kube-proxy}.kubeconfig root@172.17.217.228:/opt/kubernetes/cfg

4. Copy kubelet and kube-proxy to the Node hosts [master]

# Copy kubelet and kube-proxy to node 226 [both binaries are in the extracted kubernetes archive]

scp /usr/local/bin/kubernetes/server/bin/{kubelet,kube-proxy} root@172.17.217.226:/opt/kubernetes/bin

# Copy kubelet and kube-proxy to node 228

scp /usr/local/bin/kubernetes/server/bin/{kubelet,kube-proxy} root@172.17.217.228:/opt/kubernetes/bin

5. Copy server.pem and server-key.pem to the Node hosts [master]

scp /opt/kubernetes/ssl/{server,server-key}.pem root@172.17.217.226:/opt/kubernetes/ssl
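Steps 3 to 5 repeat the same scp commands per node. A sketch of doing this in one loop, assuming the two node IPs and the paths used in this guide. The helper names run and distribute_to_nodes are hypothetical; by default the function only prints the commands.

```shell
# Node IPs from this guide; adjust for your cluster.
NODES="172.17.217.226 172.17.217.228"

# Print the command by default; set DRY_RUN=0 to execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

# Copy kubeconfigs, binaries and certificates to every node.
distribute_to_nodes() {
  for node in $NODES; do
    run scp /opt/kubernetes/cfg/bootstrap.kubeconfig /opt/kubernetes/cfg/kube-proxy.kubeconfig "root@${node}:/opt/kubernetes/cfg"
    run scp /usr/local/bin/kubernetes/server/bin/kubelet /usr/local/bin/kubernetes/server/bin/kube-proxy "root@${node}:/opt/kubernetes/bin"
    run scp /opt/kubernetes/ssl/server.pem /opt/kubernetes/ssl/server-key.pem "root@${node}:/opt/kubernetes/ssl"
  done
}
```

Call `distribute_to_nodes` to review the commands, then set `DRY_RUN=0` and call it again to perform the copies.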

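6. Create the kubelet config file [Node]

# Create the config file

vi /opt/kubernetes/cfg/kubelet.conf

# Write the following. The notes sit above the variable because anything after # inside the quoted value would be passed to kubelet as arguments.
# --hostname-override: this node's hostname [or IP]
# --kubeconfig: kubeconfig file, generated automatically
# --bootstrap-kubeconfig: the bootstrap config
# --config: the kubelet config file
# --cert-dir: certificate directory

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--log-dir=/opt/kubernetes/logs \
--hostname-override=k8s-node-1 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet.yml \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"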

7. Create the kubelet.yml config file [Node]

# Create the config file

vi /opt/kubernetes/cfg/kubelet.yml

# Write the following [address is this node's IP]

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 172.17.217.226
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
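Only the address field differs between nodes, so the file can be generated rather than edited by hand. A sketch, assuming the same values as the config above; the function name render_kubelet_yml is hypothetical.

```shell
# Print a kubelet.yml for the node IP given as the first argument.
# All other values are copied from this guide's configuration.
render_kubelet_yml() {
  node_ip="$1"
  cat <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${node_ip}
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
EOF
}
```

On node 228 this would be used as `render_kubelet_yml 172.17.217.228 > /opt/kubernetes/cfg/kubelet.yml`.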

8. Create the kubelet.service unit file [Node]

# Create the unit file

vi /opt/kubernetes/kubelet.service

# Write the following
[Unit]
Description=Kubernetes Kubelet
# Depends on docker
After=docker.service
Requires=docker.service

[Service]
# Config file that defines KUBELET_OPTS
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

# Copy it to the systemd unit directory

cp /opt/kubernetes/kubelet.service /usr/lib/systemd/system/

9. Install Docker [the Docker version to install depends on the Kubernetes version; current k8s version: 1.16.2, Node]

# Remove old Docker versions [if Docker is already installed, uninstall and reinstall]

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

# Install the packages Docker requires

yum install -y yum-utils device-mapper-persistent-data lvm2

# Configure the package repository

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# List the available Docker versions

yum list docker-ce --showduplicates | sort -r

# Install a specific Docker version

yum install docker-ce-18.06.3.ce-3.el7

# Start docker

systemctl start docker

# Enable at boot

systemctl enable docker

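10. Add the Docker configuration [Node]

# Create the config file

vi /etc/docker/daemon.json

# Write the following. JSON does not allow comments, so the settings are described here:
# exec-opts: cgroup driver setting
# log-driver, log-opts: json-file logging with a maximum log file size of 100m
# registry-mirrors: Aliyun registry mirror

{
  "exec-opts": ["native.cgroupdriver=cgroupfs"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "registry-mirrors": ["https://o06qyoz6.mirror.aliyuncs.com"]
}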

# Reload the systemd configuration

systemctl daemon-reload

# Restart docker

systemctl restart docker

11. Start kubelet and enable it at boot [Node]

# Start kubelet

systemctl start kubelet

# Enable at boot

systemctl enable kubelet

# View the system journal

journalctl -xe

# View the kubelet logs

journalctl -amu kubelet

12. kubelet fails to start [Node]

Typical error:

# Error message

misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"

# Fix: edit the Docker config and change systemd to cgroupfs

vi /etc/docker/daemon.json

"exec-opts": ["native.cgroupdriver=cgroupfs"],

# Restart docker

systemctl restart docker

# Restart kubelet

systemctl restart kubelet
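The mismatch can be checked before starting kubelet. A sketch, assuming cgroupDriver sits on its own top-level line in kubelet.yml as in step 7; the function names are hypothetical, and the Docker driver is passed in as a plain string so the comparison itself needs no running daemon.

```shell
# Print the cgroupDriver value from a kubelet config file.
kubelet_cgroup_driver() {
  sed -n 's/^cgroupDriver:[[:space:]]*//p' "$1"
}

# Compare the kubelet driver ($1: config file) with a Docker driver name ($2).
# Returns non-zero on a mismatch.
check_cgroup_drivers() {
  kubelet_driver="$(kubelet_cgroup_driver "$1")"
  docker_driver="$2"
  if [ "$kubelet_driver" = "$docker_driver" ]; then
    echo "match: ${kubelet_driver}"
  else
    echo "mismatch: kubelet=${kubelet_driver} docker=${docker_driver}"
    return 1
  fi
}
```

On a node, the Docker side can be read with `docker info --format '{{.CgroupDriver}}'`, for example `check_cgroup_drivers /opt/kubernetes/cfg/kubelet.yml "$(docker info --format '{{.CgroupDriver}}')"`.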

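13. On the master, approve every started Node so that it joins the cluster [every Node must be approved, Master]

# List the certificate signing requests submitted by the Nodes

kubectl get csr

# Approve each request by its NAME; its CONDITION then changes to Approved,Issued

kubectl certificate approve node-csr-jbk21dTsMgMt-12jKUkpPCZySDnARPk1tU5Li8F_Ypg

# List the nodes [Ready is the healthy state]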

kubectl get node
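With several nodes, approving requests one by one gets tedious. A sketch that approves everything still pending, assuming the table printed by kubectl get csr has the request name in the first column and the condition in the last one. Both function names are hypothetical.

```shell
# Read `kubectl get csr` output on stdin and print the names of requests
# whose last column is exactly "Pending". The header row is skipped.
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Approve every pending request. Requires kubectl access on the master.
approve_pending_csrs() {
  kubectl get csr | pending_csr_names | while read -r name; do
    kubectl certificate approve "$name"
  done
}
```

Review the output of `kubectl get csr | pending_csr_names` first; approving a request grants that node a client certificate.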

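14. Configure kube-proxy on the Node [Node]

# Create the config file

vi /opt/kubernetes/cfg/kubelet-proxy.conf

# Write the following [--hostname-override takes this node's IP or its hostname, e.g. k8s-node-1; use the same value as kubelet's --hostname-override]

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--log-dir=/opt/kubernetes/logs \
--hostname-override=172.17.217.226 \
--cluster-cidr=10.0.0.0/24 \
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"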

15. Create the kube-proxy service [Node]

# Create the unit file

vi /usr/lib/systemd/system/kubelet-proxy.service

# Write the following
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kubelet-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

16. Start kube-proxy [Node]

systemctl daemon-reload

systemctl start kubelet-proxy

systemctl enable kubelet-proxy
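A leading "-" on EnvironmentFile= tells systemd to ignore a missing file, so a wrong path leaves KUBE_PROXY_OPTS empty without any error. A sketch of a check that the unit points at an existing file, assuming a single EnvironmentFile= line per unit; the function names are hypothetical.

```shell
# Print the path from the EnvironmentFile= line of a unit file,
# without the optional leading "-".
unit_env_file() {
  sed -n 's/^EnvironmentFile=-\{0,1\}//p' "$1"
}

# Report whether the referenced environment file exists.
check_env_file() {
  path="$(unit_env_file "$1")"
  if [ -n "$path" ] && [ -f "$path" ]; then
    echo "ok: ${path}"
  else
    echo "missing: ${path}"
    return 1
  fi
}
```

For this guide the call would be `check_env_file /usr/lib/systemd/system/kubelet-proxy.service`, which should report the config file created in step 14.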

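17. Install flannel [Node]

# Install wget

yum install wget -y

# Create the directories used in the following steps

mkdir -p /opt/flannel/{bin,cfg,service}

# Download the package [-O sets the output file; lowercase -o would write a log file instead]

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz -O /opt/flannel/flannel-v0.10.0-linux-amd64.tar.gz

# Extract the archive into /opt/flannel

tar -vxf /opt/flannel/flannel-v0.10.0-linux-amd64.tar.gz -C /opt/flannel

# Move the executables to /opt/flannel/bin

mv /opt/flannel/{flanneld,mk-docker-opts.sh} /opt/flannel/bin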
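The download, extract and move steps can be collected into one function so that they run identically on every node. A sketch, assuming the version and paths from this step; the names run and install_flannel are hypothetical, and by default the commands are only printed.

```shell
FLANNEL_VERSION="v0.10.0"
FLANNEL_DIR="/opt/flannel"

# Print the command by default; set DRY_RUN=0 to execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

# Download the release tarball, unpack it, and move the binaries into bin/.
install_flannel() {
  tarball="flannel-${FLANNEL_VERSION}-linux-amd64.tar.gz"
  url="https://github.com/coreos/flannel/releases/download/${FLANNEL_VERSION}/${tarball}"
  run mkdir -p "${FLANNEL_DIR}/bin"
  run wget "$url" -O "${FLANNEL_DIR}/${tarball}"
  run tar -xzf "${FLANNEL_DIR}/${tarball}" -C "$FLANNEL_DIR"
  run mv "${FLANNEL_DIR}/flanneld" "${FLANNEL_DIR}/mk-docker-opts.sh" "${FLANNEL_DIR}/bin"
}
```

Run `install_flannel` to inspect the commands, then repeat with `DRY_RUN=0` to apply them.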

18. Create the flannel config and service files [Node]

# Create the config file

vi /opt/flannel/cfg/flanneld.conf

# Write the following

FLANNEL_OPTIONS="--etcd-endpoints=https://172.17.217.226:2379,https://172.17.217.228:2379 \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

# Create the unit file

vi /opt/flannel/service/flanneld.service

# Write the following
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/flannel/cfg/flanneld.conf
ExecStart=/opt/flannel/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/opt/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target

# Copy the unit file to the systemd unit directory

cp /opt/flannel/service/flanneld.service /usr/lib/systemd/system/

19. Start flannel [Node]

# Start flanneld

systemctl start flanneld

# Enable at boot

systemctl enable flanneld

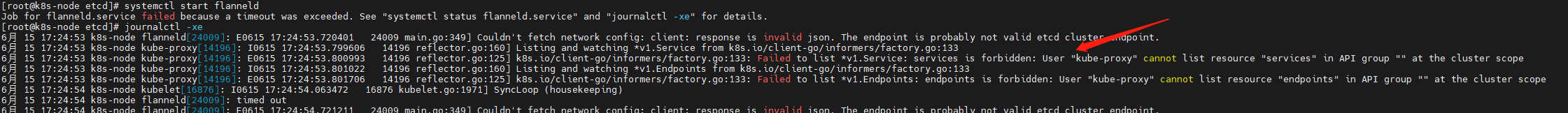

Possible problems:

1) The flannel subnet configuration has not been written to etcd yet

Fix [run on a single node only, Node]:

# etcd v3. --endpoints: a Node address; Network: the docker network range [flannel does not support the etcd v3 API by default, so you can go straight to the fix in 2) -> ② below]

/opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.110:2379" put /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

# etcd v2

ETCDCTL_API=2 /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.110:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

Check that the config was written [run on a single node only, Node]:

# Read the config back. --endpoints: a Node address

/opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.110:2379" get /coreos.com/network/config
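The same JSON value is typed out in each etcdctl write command, where a quoting slip is easy to make. A sketch that builds it in one place, assuming the network range and backend type used in this guide as defaults; the function name flannel_network_json is hypothetical.

```shell
# Print the flannel network config JSON.
# $1: network CIDR (default 172.17.0.0/16), $2: backend type (default vxlan).
flannel_network_json() {
  network="${1:-172.17.0.0/16}"
  backend="${2:-vxlan}"
  printf '{ "Network": "%s", "Backend": {"Type": "%s"}}\n' "$network" "$backend"
}
```

The result can be passed as the value argument of the etcdctl put or set commands above, written as `"$(flannel_network_json)"`.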

2) Error when starting flannel:

Fix:

# Grant the user cluster-admin rights [master]

kubectl create clusterrolebinding kube-proxy-cluster-admin --clusterrole=cluster-admin --user=kube-proxy

# Restart the proxy [Node]

systemctl restart kubelet-proxy

Starting flannel again produces the following error [flanneld currently cannot talk to the etcd v3 API directly]:

Fix:

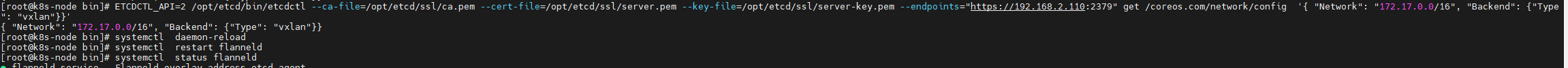

① Delete the previous config [Node]

/opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.110:2379" del /coreos.com/network/config

② Enable the etcd v2 API [master, Node]

# Edit the etcd unit file

vi /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/etcd.conf
# --enable-v2 turns on v2 API support
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--enable-v2
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# Reload the systemd configuration

systemctl daemon-reload

# Restart the service

systemctl restart etcd

# Check cluster health

/opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --key=/opt/etcd/ssl/server-key.pem --cert=/opt/etcd/ssl/server.pem --endpoints="https://192.168.2.200:2379,https://192.168.2.110:2379" endpoint health

③ Write the subnet info again using the v2 API

# Use the v2 command set

ETCDCTL_API=2 /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.110:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

# Reload the systemd configuration

systemctl daemon-reload

# Restart flanneld

systemctl restart flanneld

# Check flanneld status

systemctl status flanneld

# Enable at boot

systemctl enable flanneld

# Restart docker

systemctl restart docker

# Check docker status

systemctl status docker

Verify that docker and flanneld are on the same subnet:

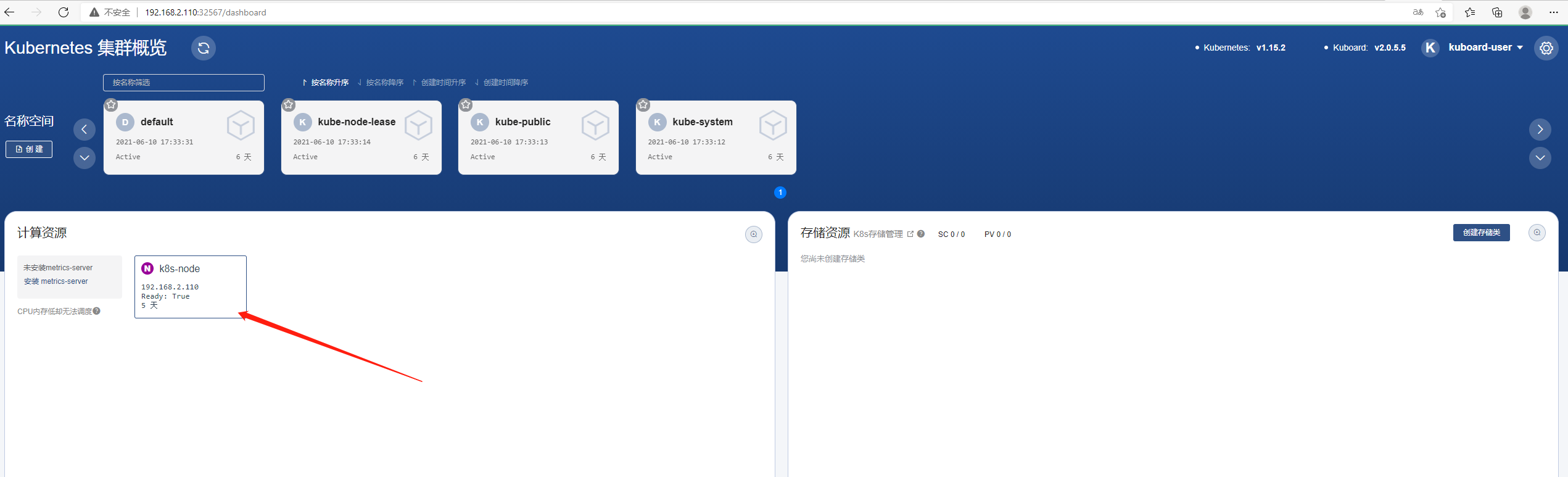
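The same comparison can be made from the command line. A sketch, assuming that /run/flannel/subnet.env (written by mk-docker-opts.sh in the flanneld unit) contains a DOCKER_NETWORK_OPTIONS line with a --bip= option, and that the Docker bridge is named docker0. Both function names are hypothetical.

```shell
# Print the --bip value (address/prefix) found in a flannel docker-opts file.
flannel_bip() {
  sed -n 's|.*--bip=\([0-9./]*\).*|\1|p' "$1"
}

# Print the IPv4 address/prefix currently assigned to the docker0 bridge.
docker0_addr() {
  ip -4 -o addr show docker0 | awk '{ print $4 }'
}
```

On a node, `flannel_bip /run/flannel/subnet.env` and `docker0_addr` should print the same value once docker has been restarted with the flannel options applied.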

20. Install a Kubernetes cluster dashboard [master]

① Install Kuboard

kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml

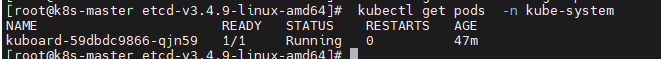

② Check the running status

kubectl get pods -n kube-system

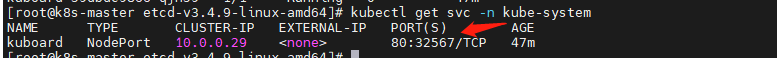

③ Check the port Kuboard exposes

kubectl get svc -n kube-system

④ Get the login token

kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d

⑤ Check that Kuboard is reachable

# Node IP:Kuboard port

192.168.2.110:32567