1.糗事百科段子.py

# 目标:爬取糗事百科段子信息(文字)

# 信息包括:作者头像,作者名字,作者等级,段子内容,好笑数目,评论数目

# 解析用学过的几种方法都实验一下①正则表达式.②BeautifulSoup③xpath

import requests

import re # 正则表达式

import json

from bs4 import BeautifulSoup # BS

from lxml import etree # xpath

def get_one_page(url):

response = requests.get(url)

if response.status_code == 200:

return response.text

return None

def zhengze_parse(html):

pattern = re.compile(

'<img src="//(.*?)".*?alt="(.*?)".*?<a.*?<div class=".*?">(.*?)</div>'

+ '.*?<div class=.*?<span>(.*?)</span>.*?<span class=".*?".*?<i.*?>(.*?)<'

+ '.*?<i.*?>(.*?)<',

re.S)

items = re.findall(pattern, html)

for item in items:

content = item[3].replace('<br/>', '').strip()

content = content.replace('x01', '')

if item[5] == '京公网安备11010502031601号':

break

yield {

'image': "http://" + item[0],

'name': item[1],

'grade': item[2],

'content': content,

'fun_Num': item[4],

'com_Num': item[5]

}

def soup_parse(html):

soup = BeautifulSoup(html, 'lxml')

for data in soup.find_all('div', class_='article'):

image = "http:" + data.img['src']

name = data.img['alt']

# 匿名用户没有等级

if name=="匿名用户":

grade = "匿名用户"

else:

grade = data.find('div', class_='articleGender').text

content = data.find('div', class_='content').span.text.strip()

fun_Num = data.find('i', class_='number').text

com_Num = data.find('a', class_='qiushi_comments').i.text

yield {

'image': image,

'name': name,

'grade': grade,

'content': content,

'fun_Num': fun_Num,

'com_Num': com_Num,

}

def xpath_parse(html):

html = etree.HTML(html)

for data in html.xpath('//div[@class="col1"]/div'):

image = "http:"+ str(data.xpath('.//img/@src')[0])

name = data.xpath('.//img/@alt')[0]

if name == '匿名用户':

grade = '匿名用户'

else:

grade = data.xpath('./div[1]/div/text()')[0]

content = data.xpath('./a/div/span/text()')[0:]

content = str(content).strip().replace('\n','')

fun_Num = data.xpath('./div[2]/span[1]/i/text()')[0]

com_Num = data.xpath('.//div[2]/span[2]/a/i/text()')[0]

# print(image, name, grade, content, fun_Num, com_Num)

yield {

'image': image,

'name': name,

'grade': grade,

'content': content,

'fun_Num': fun_Num,

'com_Num': com_Num,

}

def write_to_file(content, flag):

with open('糗百段子(' + str(flag) + ').txt', 'a', encoding='utf-8')as f:

f.write(json.dumps(content, ensure_ascii=False) + '

')

def search(Num):

url = 'https://www.qiushibaike.com/text/page/' + str(Num) + '/'

html = get_one_page(url)

# 正则匹配不到匿名用户的等级,不会匹配匿名用户的段子,所以少一些数据

# 稍微加个判断逻辑就行了,懒得弄了

for item in zhengze_parse(html):

write_to_file(item, '正则表达式')

for item in soup_parse(html):

write_to_file(item, 'BS4')

for item in xpath_parse(html):

write_to_file(item, 'xpath')

page = str(Num)

print("正在爬取第" + page + '页')

def main():

# 提供页码

for Num in range(1, 14):

search(Num)

print("爬取完成")

if __name__ == '__main__':

# 入口

main()

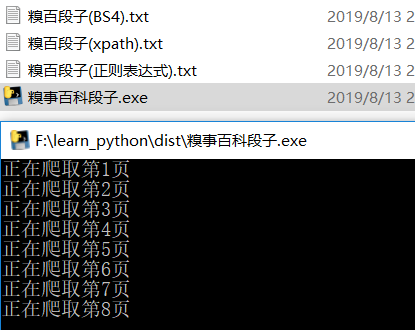

2.打包

pyinstaller -F 糗事百科段子.py

3.运行效果

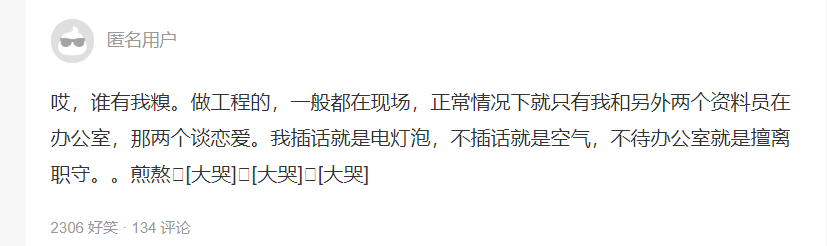

网页上匿名用户段子的显示情况