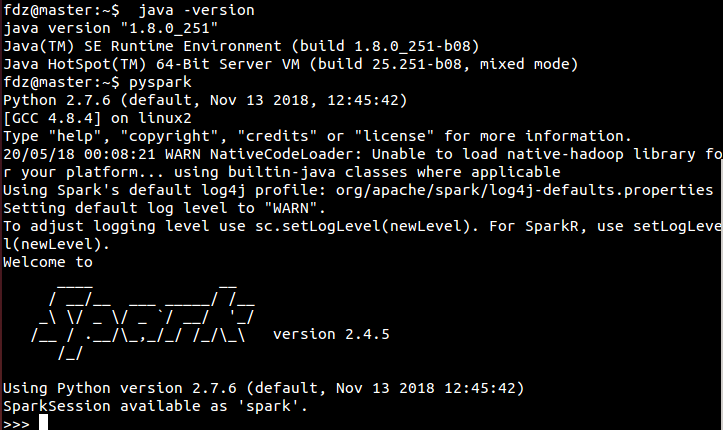

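After installing Spark (spark-2.0.0-bin-hadoop2.6), I typed `pyspark` in an Ubuntu terminal to start it and got this error: Exception in thread "main" java.lang.UnsupportedClassVersionError. A quick Baidu search turned up many blog posts explaining that "The problem is that you compiled with/for Java 8, but you are running Spark on Java 7 or older", so I downloaded and installed Java 8 (note: Java 8 must be installed on every node). After that, running `pyspark` in the Ubuntu terminal again started Spark successfully.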

For how to download and install Java 8 on Ubuntu 14, see zhuxp1's blog post: https://blog.csdn.net/zhuxiaoping54532/article/details/70158200
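Because every node needs Java 8, it can help to confirm which Java each machine actually runs before relaunching `pyspark`. The following is a minimal Python sketch that is not part of the original post. The function names `java_major_version` and `installed_java_major_version` are hypothetical, and the threshold of 8 only mirrors the fix described above. The sketch assumes Python 3.7+ and parses the output of `java -version`, which the JDK writes to stderr. Older releases report versions as "1.x" (so "1.7.0_80" means Java 7), while Java 9 and later report the major version directly (e.g. "11.0.2").

```python
import re
import shutil
import subprocess
from typing import Optional

# Assumption: threshold taken from the fix in this post (install Java 8).
REQUIRED_MAJOR = 8


def java_major_version(version_output: str) -> Optional[int]:
    """Extract the Java major version from `java -version` output.

    Returns None if no version string is found.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    # Legacy scheme: "1.7.x" -> 7, "1.8.x" -> 8.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    # Java 9+ scheme: "11.0.2" -> 11, "17" -> 17.
    return first


def installed_java_major_version() -> Optional[int]:
    """Run `java -version` locally; return None if java is not on PATH."""
    if shutil.which("java") is None:
        return None
    # `java -version` prints its banner to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)


if __name__ == "__main__":
    version = installed_java_major_version()
    if version is None:
        print("java not found on PATH")
    elif version < REQUIRED_MAJOR:
        print(f"Java {version} detected: older than Java {REQUIRED_MAJOR}")
    else:
        print(f"Java {version} detected: OK")
```

Running the script on each node (for example over SSH) shows whether any machine is still on Java 7 or older.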