只要是insert into 的就是没分区

需求一:用户活跃主题

DWS层--(用户行为宽表层)

目标:统计当日、当周、当月活动的每个设备明细

1 每日活跃设备明细 dwd_start_log--->dws_uv_detail_day

--把相同的字段collect_set到一个数组, 按mid_id分组(便于后边统计)

collect_set将某字段的值进行去重汇总,产生array类型字段。如: concat_ws('|', collect_set(user_id)) user_id,

建分区表dws_uv_detail_day: partitioned by ('dt' string)

drop table if exists dws_uv_detail_day; create table dws_uv_detail_day( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度' ) COMMENT '活跃用户按天明细' PARTITIONED BY ( `dt` string) stored as parquet location '/warehouse/gmall/dws/dws_uv_detail_day/' ;

数据导入

按周分区;过滤出一周内的数据;按设备id分组; ===>count(*)得到最终结果;

partition(dt='2019-02-10') from dwd_start_log where dt='2019-02-10' group by mid_id ( mid_id设备唯一标示 )

以用户单日访问为key进行聚合,如果某个用户在一天中使用了两种操作系统、两个系统版本、多个地区,登录不同账号,只取其中之一

hive (gmall)> set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table dws_uv_detail_day partition(dt='2019-02-10') select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang))lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat from dwd_start_log where dt='2019-02-10' group by mid_id;

查询导入结果;

hive (gmall)> select * from dws_uv_detail_day limit 1;

###最后count(*)即是每日活跃设备的个数; hive (gmall)> select count(*) from dws_uv_detail_day;

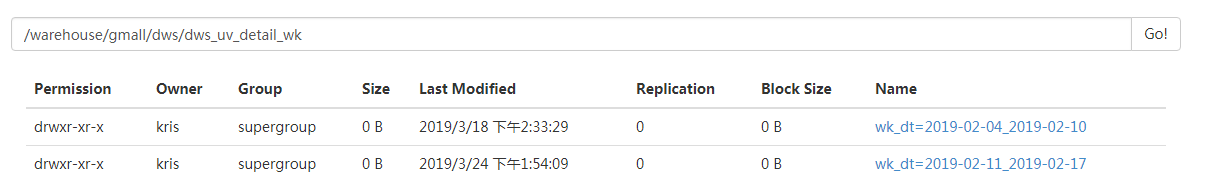

2 每周(dws_uv_detail_wk)活跃设备明细 partition(wk_dt)

周一到周日concat(date_add(next_day('2019-02-10', 'MO'), -7), '_', date_add(next_day('2019-02-10', 'MO'), -1))即 2019-02-04_2019-02-10

创建分区表: partitioned by('wk_dt' string)

hive (gmall)> drop table if exists dws_uv_detail_wk; create table dws_uv_detail_wk( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度', `monday_date` string COMMENT '周一日期', `sunday_date` string COMMENT '周日日期' ) COMMENT '活跃用户按周明细' PARTITIONED BY (`wk_dt` string) stored as parquet location '/warehouse/gmall/dws/dws_uv_detail_wk/' ;

导入数据:以周为分区;过滤出一个月内的数据,按设备id分组;

周一: date_add(next_day('2019-05-16','MO'),-7);

周日:date_add(next_day('2019-05-16','MO'),-1);

周一---周日:concat(date_add(next_day('2019-05-16', 'MO'), -7), "_", date_add(next_day('2019-05-16', 'MO'), -1));

insert overwrite table dws_uv_detail_wk partition(wk_dt) select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang)) lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat, date_add(next_day('2019-02-10', 'MO'), -7), date_add(next_day('2019-02-10', 'MO'), -1), concat(date_add(next_day('2019-02-10', 'MO'), -7), '_', date_add(next_day('2019-02-10', 'MO'), -1)) from dws_uv_detail_day where dt >= date_add(next_day('2019-02-10', 'MO'), -7) and dt <= date_add(next_day('2019-02-10', 'MO'), -1) group by mid_id;

查询导入结果

hive (gmall)> select * from dws_uv_detail_wk limit 1; hive (gmall)> select count(*) from dws_uv_detail_wk;

3 每月活跃设备明细 dws_uv_detail_mn partition(mn) - 把每日的数据插入进去

DWS层创建分区表 partitioned by(mn string)

hive (gmall)> drop table if exists dws_uv_detail_mn; create external table dws_uv_detail_mn( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度' ) COMMENT '活跃用户按月明细' PARTITIONED BY (`mn` string) stored as parquet location '/warehouse/gmall/dws/dws_uv_detail_mn/' ;

数据导入 按月分区;过滤出一个月内的数据,按照设备id分组;

data_format('2019-03-10', 'yyyy-MM') ---> 2019-03

where date_format('dt', 'yyyy-MM') = date_format('2019-02-10', 'yyyy-MM') group by mid_id;

hive (gmall)> set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table dws_uv_detail_mn partition(mn) select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang)) lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat, date_format('2019-02-10','yyyy-MM') from dws_uv_detail_day where date_format(dt,'yyyy-MM') = date_format('2019-02-10','yyyy-MM') group by mid_id;

查询导入结果

hive (gmall)> select * from dws_uv_detail_mn limit 1; hive (gmall)> select count(*) from dws_uv_detail_mn ;

DWS层加载数据脚本

在hadoop101的/home/kris/bin目录下创建脚本

[kris@hadoop101 bin]$ vim dws.sh

#!/bin/bash # 定义变量方便修改 APP=gmall hive=/opt/module/hive/bin/hive # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table "$APP".dws_uv_detail_day partition(dt='$do_date') select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang)) lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat from "$APP".dwd_start_log where dt='$do_date' group by mid_id; insert overwrite table "$APP".dws_uv_detail_wk partition(wk_dt) select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang)) lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat, date_add(next_day('$do_date','MO'),-7), date_add(next_day('$do_date','SU'),-7), concat(date_add( next_day('$do_date','MO'),-7), '_' , date_add(next_day('$do_date','MO'),-1) ) from "$APP".dws_uv_detail_day where dt>=date_add(next_day('$do_date','MO'),-7) and dt<=date_add(next_day('$do_date','MO'),-1) group by mid_id; insert overwrite table "$APP".dws_uv_detail_mn partition(mn) select mid_id, concat_ws('|', collect_set(user_id)) user_id, concat_ws('|', collect_set(version_code)) version_code, concat_ws('|', collect_set(version_name)) version_name, concat_ws('|', collect_set(lang))lang, concat_ws('|', collect_set(source)) source, concat_ws('|', collect_set(os)) os, concat_ws('|', collect_set(area)) area, concat_ws('|', collect_set(model)) model, concat_ws('|', collect_set(brand)) brand, concat_ws('|', collect_set(sdk_version)) sdk_version, concat_ws('|', collect_set(gmail)) gmail, concat_ws('|', collect_set(height_width)) height_width, concat_ws('|', collect_set(app_time)) app_time, concat_ws('|', collect_set(network)) network, concat_ws('|', collect_set(lng)) lng, concat_ws('|', collect_set(lat)) lat, date_format('$do_date','yyyy-MM') from "$APP".dws_uv_detail_day where date_format(dt,'yyyy-MM') = date_format('$do_date','yyyy-MM') group by mid_id; " $hive -e "$sql"

增加脚本执行权限 chmod 777 dws.sh

脚本使用[kris@hadoop101 module]$ dws.sh 2019-02-11

查询结果

hive (gmall)> select count(*) from dws_uv_detail_day; hive (gmall)> select count(*) from dws_uv_detail_wk; hive (gmall)> select count(*) from dws_uv_detail_mn ;

脚本执行时间;企业开发中一般在每日凌晨30分~1点

ADS层 目标:当日、当周、当月活跃设备数 使用 day_count表 join wk_count join mn_count , 把3张表连接一起

建表ads_uv_count表:

字段有day_count、wk_count、mn_count

is_weekend if(date_add(next_day('2019-02-10', 'MO'), -1) = '2019-02-10', 'Y', 'N')

is_monthend if(last_day('2019-02-10') = '2019-02-10', 'Y', 'N')

drop table if exists ads_uv_count; create external table ads_uv_count( `dt` string comment '统计日期', `day_count` bigint comment '当日用户量', `wk_count` bigint comment '当周用户量', `mn_count` bigint comment '当月用户量', `is_weekend` string comment 'Y,N是否是周末,用于得到本周最终结果', `is_monthend` string comment 'Y,N是否是月末,用于得到本月最终结果' ) comment '每日活跃用户数量' stored as parquet location '/warehouse/gmall/ads/ads_uv_count/';

导入数据:

hive (gmall)> insert overwrite table ads_uv_count select '2019-02-10' dt, daycount.ct, wkcount.ct, mncount.ct, if(date_add(next_day('2019-02-10','MO'),-1)='2019-02-10','Y','N') , if(last_day('2019-02-10')='2019-02-10','Y','N') from ( select '2019-02-10' dt, count(*) ct from dws_uv_detail_day where dt='2019-02-10' )daycount join ( select '2019-02-10' dt, count (*) ct from dws_uv_detail_wk where wk_dt=concat(date_add(next_day('2019-02-10','MO'),-7),'_' ,date_add(next_day('2019-02-10','MO'),-1) ) ) wkcount on daycount.dt=wkcount.dt join ( select '2019-02-10' dt, count (*) ct from dws_uv_detail_mn where mn=date_format('2019-02-10','yyyy-MM') )mncount on daycount.dt=mncount.dt ;

查询导入结果

hive (gmall)> select * from ads_uv_count ;

ADS层加载数据脚本

1)在hadoop101的/home/kris/bin目录下创建脚本

[kris@hadoop101 bin]$ vim ads.sh

#!/bin/bash # 定义变量方便修改 APP=gmall hive=/opt/module/hive/bin/hive # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert into table "$APP".ads_uv_count select '$do_date' dt, daycount.ct, wkcount.ct, mncount.ct, if(date_add(next_day('$do_date','MO'),-1)='$do_date','Y','N') , if(last_day('$do_date')='$do_date','Y','N') from ( select '$do_date' dt, count(*) ct from "$APP".dws_uv_detail_day where dt='$do_date' )daycount join ( select '$do_date' dt, count (*) ct from "$APP".dws_uv_detail_wk where wk_dt=concat(date_add(next_day('$do_date','MO'),-7),'_' ,date_add(next_day('$do_date','MO'),-1) ) ) wkcount on daycount.dt=wkcount.dt join ( select '$do_date' dt, count (*) ct from "$APP".dws_uv_detail_mn where mn=date_format('$do_date','yyyy-MM') )mncount on daycount.dt=mncount.dt; " $hive -e "$sql"

增加脚本执行权限 chmod 777 ads.sh

脚本使用 ads.sh 2019-02-11

查询导入结果 hive (gmall)> select * from ads_uv_count ;

需求二:用户新增主题

首次联网使用应用的用户。如果一个用户首次打开某APP,那这个用户定义为新增用户;卸载再安装的设备,不会被算作一次新增。新增用户包括日新增用户、周新增用户、月新增用户。

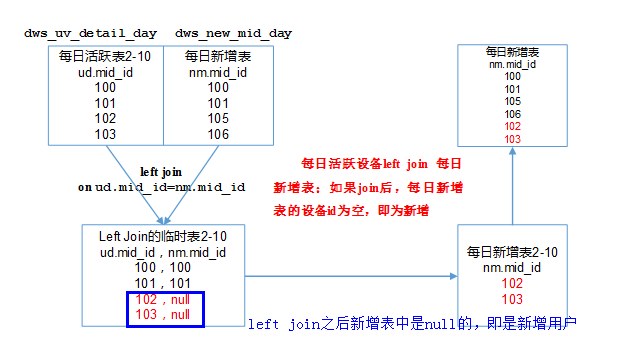

每日新增(老用户不算,之前没登陆过,今天是第一次登陆)设备--没有分区

-->以往的新增库里边没有他,但他今天活跃了即新增加的用户;

1 DWS层(每日新增设备明细表)

创建每日新增设备明细表:dws_new_mid_day

hive (gmall)> drop table if exists dws_new_mid_day; create table dws_new_mid_day ( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度', `create_date` string comment '创建时间' ) COMMENT '每日新增设备信息' stored as parquet location '/warehouse/gmall/dws/dws_new_mid_day/';

dws_uv_detail_day(每日活跃设备明细) left join dws_new_mid_day nm(以往的新增用户表, 新建字段create_time2019-02-10) nm.mid_id is null;

导入数据

用每日活跃用户表 left join 每日新增设备表,关联的条件是mid_id相等。如果是每日新增的设备,则在每日新增设备表中为null。

from dws_uv_detail_day ud left join dws_new_mid_day nm on ud.mid_id=nm.mid_id

where ud.dt='2019-02-10' and nm.mid_id is null;

hive (gmall)> insert into table dws_new_mid_day select ud.mid_id, ud.user_id , ud.version_code , ud.version_name , ud.lang , ud.source, ud.os, ud.area, ud.model, ud.brand, ud.sdk_version, ud.gmail, ud.height_width, ud.app_time, ud.network, ud.lng, ud.lat, '2019-02-10' from dws_uv_detail_day ud left join dws_new_mid_day nm on ud.mid_id=nm.mid_id where ud.dt='2019-02-10' and nm.mid_id is null;

查询导入数据

hive (gmall)> select count(*) from dws_new_mid_day ;

2 ADS层(每日新增设备表)

创建每日新增设备表ads_new_mid_count

hive (gmall)> drop table if exists `ads_new_mid_count`; create table `ads_new_mid_count` ( `create_date` string comment '创建时间' , `new_mid_count` BIGINT comment '新增设备数量' ) COMMENT '每日新增设备信息数量' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_new_mid_count/';

导入数据 count(*) dws_new_mid_day表即可

加了create_date就必须group by create_time,否则报错:not in GROUP BY key 'create_date'

hive (gmall)> insert into table ads_new_mid_count select create_date , count(*) from dws_new_mid_day where create_date='2019-02-10' group by create_date ;

查询导入数据

hive (gmall)> select * from ads_new_mid_count;

扩展每月新增:

--每月新增 drop table if exists dws_new_mid_mn; create table dws_new_mid_mn( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度' )comment "每月新增明细" partitioned by(mn string) stored as parquet location "/warehouse/gmall/dws/dws_new_mid_mn"; insert overwrite table dws_new_mid_mn partition(mn) select um.mid_id, um.user_id , um.version_code , um.version_name , um.lang , um.source, um.os, um.area, um.model, um.brand, um.sdk_version, um.gmail, um.height_width, um.app_time, um.network, um.lng, um.lat, date_format('2019-02-10', 'yyyy-MM') from dws_uv_detail_mn um left join dws_new_mid_mn nm on um.mid_id = nm.mid_id where um.mn =date_format('2019-02-10', 'yyyy-MM') and nm.mid_id = null; ----为什么加上它就是空的??查不到数据了呢 --##注意这里不能写出date_format(um.mn, 'yyyy-MM') =date_format('2019-02-10', 'yyyy-MM') |

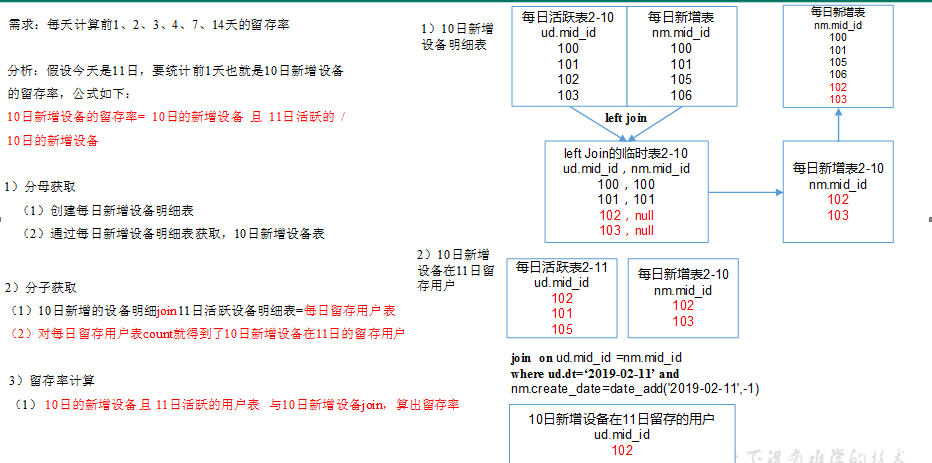

需求三:用户留存主题

如果不考虑2019-02-11和2019-02-12的新增用户:2019-02-10新增100人,一天后它的留存率是30%,2天12号它的留存率是25%,3天后留存率32%;

站在2019-02-12号看02-11的留存率:新增200人,12号的留存率是20%;

站在2019-02-13号看02-12的留存率:新增100人,13号即一天后留存率是25%;

用户留存率的分析: 昨日的新增且今天是活跃的 / 昨日的新增用户量

如今天11日,要统计10日的 用户留存率---->10日的新设备且是11日活跃的 / 10日新增设备

分母:10日的新增设备(每日活跃 left join 以往新增设备表(nm) nm.mid_id is null )

分子:每日活跃表(ud) join 每日新增表(nm) where ud.dt='今天' and nm.create_date = '昨天'

① DWS层(每日留存用户明细表dws_user_retention_day)

用户1天留存的分析: ===>>

留存用户=前一天新增 join 今天活跃

用户留存率=留存用户/前一天新增

创建表: dws_user_retention_day

hive (gmall)> drop table if exists `dws_user_retention_day`; create table `dws_user_retention_day` ( `mid_id` string COMMENT '设备唯一标识', `user_id` string COMMENT '用户标识', `version_code` string COMMENT '程序版本号', `version_name` string COMMENT '程序版本名', `lang` string COMMENT '系统语言', `source` string COMMENT '渠道号', `os` string COMMENT '安卓系统版本', `area` string COMMENT '区域', `model` string COMMENT '手机型号', `brand` string COMMENT '手机品牌', `sdk_version` string COMMENT 'sdkVersion', `gmail` string COMMENT 'gmail', `height_width` string COMMENT '屏幕宽高', `app_time` string COMMENT '客户端日志产生时的时间', `network` string COMMENT '网络模式', `lng` string COMMENT '经度', `lat` string COMMENT '纬度', `create_date` string comment '设备新增时间', `retention_day` int comment '截止当前日期留存天数' ) COMMENT '每日用户留存情况' PARTITIONED BY ( `dt` string) stored as parquet location '/warehouse/gmall/dws/dws_user_retention_day/' ;

导入数据(每天计算前1天的新用户访问留存明细)

from dws_uv_detail_day每日活跃设备 ud join dws_new_mid_day每日新增设备 nm on ud.mid_id =nm.mid_id

where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-1);

hive (gmall)> insert overwrite table dws_user_retention_day partition(dt="2019-02-11") select nm.mid_id, nm.user_id , nm.version_code , nm.version_name , nm.lang , nm.source, nm.os, nm.area, nm.model, nm.brand, nm.sdk_version, nm.gmail, nm.height_width, nm.app_time, nm.network, nm.lng, nm.lat, nm.create_date, 1 retention_day from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-1);

查询导入数据(每天计算前1天的新用户访问留存明细)

hive (gmall)> select count(*) from dws_user_retention_day;

② DWS层(1,2,3,n天留存用户明细表)直接插入数据: dws_user_retention_day 用union all连接起来,汇总到一个表中;

1)直接导入数据(每天计算前1,2,3,n天的新用户访问留存明细)

直接改变这个即可以,date_add('2019-02-11',-3); -1是一天的留存率; -2是两天的留存率、-3是三天的留存率

hive (gmall)> insert overwrite table dws_user_retention_day partition(dt="2019-02-11") select nm.mid_id, nm.user_id , nm.version_code , nm.version_name , nm.lang , nm.source, nm.os, nm.area, nm.model, nm.brand, nm.sdk_version, nm.gmail, nm.height_width, nm.app_time, nm.network, nm.lng, nm.lat, nm.create_date, 1 retention_day from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-1) union all select nm.mid_id, nm.user_id , nm.version_code , nm.version_name , nm.lang , nm.source, nm.os, nm.area, nm.model, nm.brand, nm.sdk_version, nm.gmail, nm.height_width, nm.app_time, nm.network, nm.lng, nm.lat, nm.create_date, 2 retention_day from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-2) union all select nm.mid_id, nm.user_id , nm.version_code , nm.version_name , nm.lang , nm.source, nm.os, nm.area, nm.model, nm.brand, nm.sdk_version, nm.gmail, nm.height_width, nm.app_time, nm.network, nm.lng, nm.lat, nm.create_date, 3 retention_day from dws_uv_detail_day ud join dws_new_mid_day nm on ud.mid_id =nm.mid_id where ud.dt='2019-02-11' and nm.create_date=date_add('2019-02-11',-3);

2)查询导入数据(每天计算前1,2,3天的新用户访问留存明细)

hive (gmall)> select retention_day , count(*) from dws_user_retention_day group by retention_day;

③ ADS层 留存用户数 ads_user_retention_day_count 直接count( * )即可

1)创建 ads_user_retention_day_count表:

hive (gmall)> drop table if exists `ads_user_retention_day_count`; create table `ads_user_retention_day_count` ( `create_date` string comment '设备新增日期', `retention_day` int comment '截止当前日期留存天数', `retention_count` bigint comment '留存数量' ) COMMENT '每日用户留存情况' stored as parquet location '/warehouse/gmall/ads/ads_user_retention_day_count/';

导入数据 按创建日期create_date 和 留存天数retention_day进行分组group by;

hive (gmall)> insert into table ads_user_retention_day_count select create_date, retention_day, count(*) retention_count from dws_user_retention_day where dt='2019-02-11' group by create_date,retention_day;

查询导入数据

hive (gmall)> select * from ads_user_retention_day_count;

---> 2019-02-10 1 112

④ 留存用户比率 retention_count / new_mid_count 即留存个数 / 新增个数

创建表 ads_user_retention_day_rate

hive (gmall)> drop table if exists `ads_user_retention_day_rate`; create table `ads_user_retention_day_rate` ( `stat_date` string comment '统计日期', `create_date` string comment '设备新增日期', `retention_day` int comment '截止当前日期留存天数', `retention_count` bigint comment '留存数量', `new_mid_count` string comment '当日设备新增数量', `retention_ratio` decimal(10,2) comment '留存率' ) COMMENT '每日用户留存情况' stored as parquet location '/warehouse/gmall/ads/ads_user_retention_day_rate/';

导入数据

join ads_new_mid_countt --->每日新增设备表

hive (gmall)> insert into table ads_user_retention_day_rate select '2019-02-11' , ur.create_date, ur.retention_day, ur.retention_count , nc.new_mid_count, ur.retention_count/nc.new_mid_count*100 from ( select create_date, retention_day, count(*) retention_count from `dws_user_retention_day` where dt='2019-02-11' group by create_date,retention_day ) ur join ads_new_mid_count nc on nc.create_date=ur.create_date;

查询导入数据

hive (gmall)>select * from ads_user_retention_day_rate;

2019-02-11 2019-02-10 1 112 442 25.34

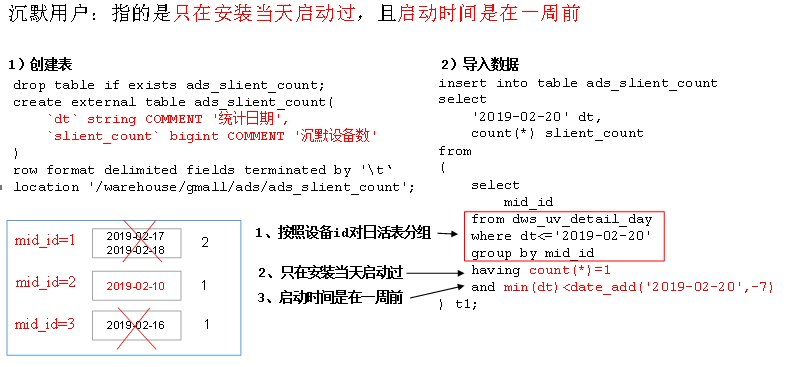

需求四:沉默用户数

沉默用户:指的是只在安装当天启动过,且启动时间是在一周前

使用日活明细表dws_uv_detail_day作为DWS层数据

建表语句

hive (gmall)> drop table if exists ads_slient_count; create external table ads_slient_count( `dt` string COMMENT '统计日期', `slient_count` bigint COMMENT '沉默设备数' ) row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_slient_count';

导入数据

hive (gmall)> insert into table ads_slient_count select '2019-02-20' dt, count(*) slient_count from ( select mid_id from dws_uv_detail_day where dt<='2019-02-20' group by mid_id having count(*)=1 and min(dt)<date_add('2019-02-20',-7) ) t1;

需求五:本周回流用户数

本周回流=本周活跃-本周新增-上周活跃

使用日活明细表dws_uv_detail_day作为DWS层数据

本周回流(上周以前活跃过,上周没活跃,本周活跃了)=本周活跃-本周新增-上周活跃

本周回流=本周活跃left join 本周新增 left join 上周活跃,且本周新增id为null,上周活跃id为null;

建表:

hive (gmall)> drop table if exists ads_back_count; create external table ads_back_count( `dt` string COMMENT '统计日期', `wk_dt` string COMMENT '统计日期所在周', `wastage_count` bigint COMMENT '回流设备数' ) row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_back_count';

导入数据

hive (gmall)> insert into table ads_back_count select '2019-02-20' dt, concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1)) wk_dt, count(*) from ( select t1.mid_id from ( select mid_id from dws_uv_detail_wk where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1)) )t1 left join ( select mid_id from dws_new_mid_day where create_date<=date_add(next_day('2019-02-20','MO'),-1) and create_date>=date_add(next_day('2019-02-20','MO'),-7) )t2 on t1.mid_id=t2.mid_id left join ( select mid_id from dws_uv_detail_wk where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7*2),'_',date_add(next_day('2019-02-20','MO'),-7-1)) )t3 on t1.mid_id=t3.mid_id where t2.mid_id is null and t3.mid_id is null )t4;

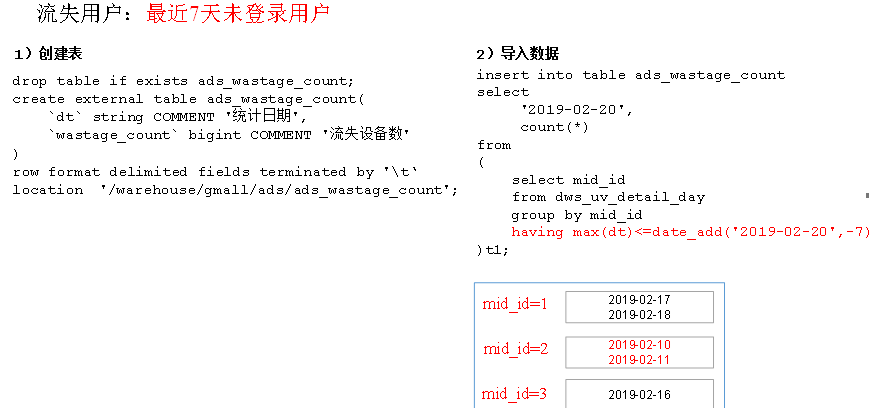

需求六:流失用户数

流失用户:最近7天未登录我们称之为流失用户

使用日活明细表dws_uv_detail_day作为DWS层数据

建表语句

hive (gmall)> drop table if exists ads_wastage_count; create external table ads_wastage_count( `dt` string COMMENT '统计日期', `wastage_count` bigint COMMENT '流失设备数' ) row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_wastage_count';

导入数据

hive (gmall)> insert into table ads_wastage_count select '2019-02-20', count(*) from ( select mid_id from dws_uv_detail_day group by mid_id having max(dt)<=date_add('2019-02-20',-7) )t1;

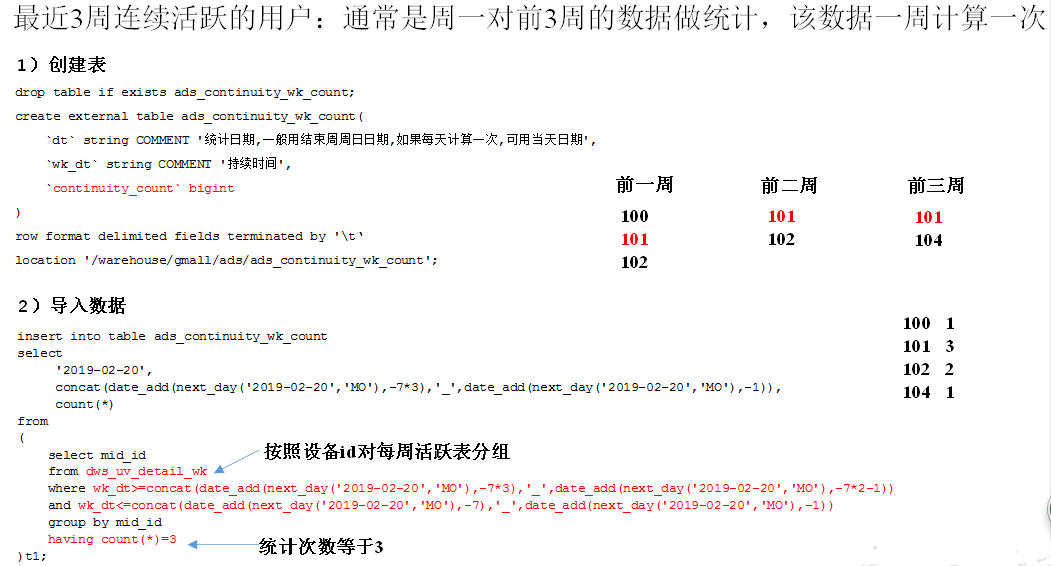

需求七:最近连续3周活跃用户数

最近3周连续活跃的用户:通常是周一对前3周的数据做统计,该数据一周计算一次。

使用周活明细表dws_uv_detail_wk作为DWS层数据

建表语句

hive (gmall)> drop table if exists ads_continuity_wk_count; create external table ads_continuity_wk_count( `dt` string COMMENT '统计日期,一般用结束周周日日期,如果每天计算一次,可用当天日期', `wk_dt` string COMMENT '持续时间', `continuity_count` bigint ) row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_continuity_wk_count';

导入数据

hive (gmall)> insert into table ads_continuity_wk_count select '2019-02-20', concat(date_add(next_day('2019-02-20','MO'),-7*3),'_',date_add(next_day('2019-02-20','MO'),-1)), count(*) from ( select mid_id from dws_uv_detail_wk where wk_dt>=concat(date_add(next_day('2019-02-20','MO'),-7*3),'_',date_add(next_day('2019-02-20','MO'),-7*2-1)) and wk_dt<=concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1)) group by mid_id having count(*)=3 )t1;

需求八:最近七天内连续三天活跃用户数

说明:最近7天内连续3天活跃用户数

使用日活明细表dws_uv_detail_day作为DWS层数据

建表

hive (gmall)> drop table if exists ads_continuity_uv_count; create external table ads_continuity_uv_count( `dt` string COMMENT '统计日期', `wk_dt` string COMMENT '最近7天日期', `continuity_count` bigint ) COMMENT '连续活跃设备数' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_continuity_uv_count';

导入数据

hive (gmall)> insert into table ads_continuity_uv_count select '2019-02-12', concat(date_add('2019-02-12',-6),'_','2019-02-12'), count(*) from ( select mid_id from ( select mid_id from ( select mid_id, date_sub(dt,rank) date_dif from ( select mid_id, dt, rank() over(partition by mid_id order by dt) rank from dws_uv_detail_day where dt>=date_add('2019-02-12',-6) and dt<='2019-02-12' )t1 )t2 group by mid_id,date_dif having count(*)>=3 )t3 group by mid_id )t4;

==================================================业务数据处理分析=================================================

ODS层跟原始字段要一模一样;

DWD层

dwd_order_info订单表

dwd_order_detail订单详情(订单和商品)

dwd_user_info用户表

dwd_payment_info支付流水

dwd_sku_info商品表(增加分类)

每日用户行为宽表 dws_user_action

字段: user_id、order_count、order_amount、payment_count、payment_amount 、comment_count

drop table if exists dws_user_action; create external table dws_user_action( user_id string comment '用户id', order_count bigint comment '用户下单数', order_amount decimal(16, 2) comment '下单金额', payment_count bigint comment '支付次数', payment_amount decimal(16, 2) comment '支付金额', comment_count bigint comment '评论次数' )comment '每日用户行为宽表' partitioned by(`dt` string) stored as parquet location '/warehouse/gmall/dws/dws_user_action/' tblproperties("parquet.compression"="snappy");

导入数据

0占位符,第一个字段要有别名

with tmp_order as( select user_id, count(*) order_count, sum(oi.total_amount) order_amount from dwd_order_info oi where date_format(oi.create_time, 'yyyy-MM-dd')='2019-02-10' group by user_id ), tmp_payment as( select user_id, count(*) payment_count, sum(pi.total_amount) payment_amount from dwd_payment_info pi where date_format(pi.payment_time, 'yyyy-MM-dd')='2019-02-10' group by user_id ), tmp_comment as( select user_id, count(*) comment_count from dwd_comment_log c where date_format(c.dt, 'yyyy-MM-dd')='2019-02-10' group by user_id ) insert overwrite table dws_user_action partition(dt='2019-02-10') select user_actions.user_id, sum(user_actions.order_count), sum(user_actions.order_amount), sum(user_actions.payment_count), sum(user_actions.payment_amount), sum(user_actions.comment_count) from( select user_id, order_count, order_amount, 0 payment_count, 0 payment_amount, 0 comment_count from tmp_order union all select user_id, 0, 0, payment_count, payment_amount, 0 from tmp_payment union all select user_id, 0, 0, 0, 0, comment_count from tmp_comment ) user_actions group by user_id;

需求四. GMV(Gross Merchandise Volume):一段时间内的成交总额

GMV拍下订单金额;包括付款和未付款;

建表ads_gmv_sum_day语句:

drop table if exists ads_gmv_sum_day; create table ads_gmv_sum_day( `dt` string comment '统计日期', `gmv_count` bigint comment '当日GMV订单个数', `gmv_amount` decimal(16, 2) comment '当日GMV订单总额', `gmv_payment` decimal(16, 2) comment '当日支付金额' ) comment 'GMV' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_gmv_sum_day';

导入数据: from用户行为宽表dws_user_action

sum(order_count) gmv_count 、 sum(order_amount) gmv_amount 、sum(payment_amount) payment_amount 过滤日期,以dt分组;

insert into table ads_gmv_sum_day select '2019-02-10' dt, sum(order_count) gmv_count, sum(order_amount) gmv_amount, sum(payment_amount) gmv_payment from dws_user_action where dt='2019-02-10' group by dt;

编写脚本:

#/bin/bash APP=gmall hive=/opt/module/hive/bin/hive if [ -n "$1" ]; then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" insert into table "$APP".ads_gmv_sum_day select '$do_date' dt, sum(order_count) gmv_count, sum(order_amount) gmv_amount, sum(payment_amount) gmv_payment from "$APP".dws_user_action where dt='$do_date' group by dt; " $hive -e "$sql";

需求五. 转化率=新增用户/日活用户

ads_user_convert_day

dt

uv_m_count 当日活跃设备

new_m_count 当日新增设备

new_m_ratio 新增占日活比率

ads_uv_count 用户活跃数(在行为数仓中;) day_count dt

ads_new_mid_count 用户新增表(行为数仓中) new_mid_count create_date

建表ads_user_convert_day

drop table if exists ads_user_convert_day; create table ads_user_convert_day( `dt` string comment '统计日期', `uv_m_count` bigint comment '当日活跃设备', `new_m_count` bigint comment '当日新增设备', `new_m_radio` decimal(10, 2) comment '当日新增占日活比率' )comment '转化率' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_user_convert_day/';

数据导入

cast(sum( uc.nmc)/sum( uc.dc)*100 as decimal(10,2)) new_m_ratio ; 使用union all

insert into table ads_user_convert_day select '2019-02-10', sum(uc.dc) sum_dc, sum(uc.nmc) sum_nmc, cast(sum(uc.nmc)/sum(uc.dc) * 100 as decimal(10, 2)) new_m_radio from(select day_count dc, 0 nmc from ads_uv_count where dt='2019-02-10' union all select 0 dc, new_mid_count from ads_new_mid_count where create_date='2019-02-10' )uc;

用户行为漏斗分析

访问到下单转化率| 下单到支付转化率

ads_user_action_convert_day

dt

total_visitor_m_count 总访问人数

order_u_count 下单人数

visitor2order_convert_ratio 访问到下单转化率

payment_u_count 支付人数

order2payment_convert_ratio 下单到支付转化率

dws_user_action (宽表中)

user_id

order_count

order_amount

payment_count

payment_amount

comment_count

ads_uv_count 用户活跃数(行为数仓中)

dt

day_count

wk_count

mn_count

is_weekend

is_monthend

建表

drop table if exists ads_user_action_convert_day; create table ads_user_action_convert_day( `dt` string comment '统计日期', `total_visitor_m_count` bigint comment '总访问人数', `order_u_count` bigint comment '下单人数', `visitor2order_convert_radio` decimal(10, 2) comment '访问到下单转化率', `payment_u_count` bigint comment '支付人数', `order2payment_convert_radio` decimal(10, 2) comment '下单到支付的转化率' )COMMENT '用户行为漏斗分析' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_user_convert_day/' ;

插入数据

insert into table ads_user_action_convert_day select '2019-02-10', uv.day_count, ua.order_count, cast(ua.order_count/uv.day_count * 100 as decimal(10, 2)) visitor2order_convert_radio, ua.payment_count, cast(ua.payment_count/ua.order_count * 100 as decimal(10, 2)) order2payment_convert_radio from( select sum(if(order_count>0, 1, 0)) order_count, sum(if(payment_count>0, 1, 0)) payment_count from dws_user_action where dt='2019-02-10' )ua, ads_uv_count uv where uv.dt='2019-02-10';

需求六. 品牌复购率

需求:以月为单位统计,购买2次以上商品的用户

用户购买商品明细表 dws_sale_detail_daycount:(宽表)

建表dws_sale_detail_daycount

drop table if exists dws_sale_detail_daycount; create external table dws_sale_detail_daycount( user_id string comment '用户 id', sku_id string comment '商品 Id', user_gender string comment '用户性别', user_age string comment '用户年龄', user_level string comment '用户等级', order_price decimal(10,2) comment '商品价格', sku_name string comment '商品名称', sku_tm_id string comment '品牌id', sku_category3_id string comment '商品三级品类id', sku_category2_id string comment '商品二级品类id', sku_category1_id string comment '商品一级品类id', sku_category3_name string comment '商品三级品类名称', sku_category2_name string comment '商品二级品类名称', sku_category1_name string comment '商品一级品类名称', spu_id string comment '商品 spu', sku_num int comment '购买个数', order_count string comment '当日下单单数', order_amount string comment '当日下单金额' ) comment '用户购买商品明细表' partitioned by(`dt` string) stored as parquet location '/warehouse/gmall/dws/dws_sale_detail_daycount' tblproperties("parquet.compression"="snappy");

数据导入

ods_order_detail订单详情表、dwd_user_info用户表、dwd_sku_info商品表

with tmp_detail as( select user_id, sku_id, sum(sku_num) sku_num, count(*) order_count, sum(od.order_price*sku_num) order_amount from ods_order_detail od where od.dt='2019-02-10' and user_id is not null group by user_id, sku_id ) insert overwrite table dws_sale_detail_daycount partition(dt='2019-02-10') select tmp_detail.user_id, tmp_detail.sku_id, u.gender, months_between('2019-02-10', u.birthday)/12 age, u.user_level, price, sku_name, tm_id, category3_id , category2_id , category1_id , category3_name , category2_name , category1_name , spu_id, tmp_detail.sku_num, tmp_detail.order_count, tmp_detail.order_amount from tmp_detail left join dwd_user_info u on u.id=tmp_detail.user_id and u.dt='2019-02-10' left join dwd_sku_info s on s.id=tmp_detail.sku_id and s.dt='2019-02-10';

ADS层 品牌复购率报表分析

建表ads_sale_tm_category1_stat_mn

buycount 购买人数、buy_twice_last两次以上购买人数、

buy_twice_last_ratio '单次复购率'、

buy_3times_last '三次以上购买人数',

buy_3times_last_ratio 多次复购率'

drop table ads_sale_tm_category1_stat_mn; create table ads_sale_tm_category1_stat_mn ( tm_id string comment '品牌id ' , category1_id string comment '1级品类id ', category1_name string comment '1级品类名称 ', buycount bigint comment '购买人数', buy_twice_last bigint comment '两次以上购买人数', buy_twice_last_ratio decimal(10,2) comment '单次复购率', buy_3times_last bigint comment '三次以上购买人数', buy_3times_last_ratio decimal(10,2) comment '多次复购率' , stat_mn string comment '统计月份', stat_date string comment '统计日期' ) COMMENT '复购率统计' row format delimited fields terminated by ' ' location '/warehouse/gmall/ads/ads_sale_tm_category1_stat_mn/' ;

插入数据

sum(if(mn.order_count>=1,1,0)) buycount,

sum(if(mn.order_count>=2,1,0)) buyTwiceLast,

sum(if(mn.order_count>=2,1,0))/sum( if(mn.order_count>=1,1,0)) buyTwiceLastRatio,

sum(if(mn.order_count>=3,1,0)) buy3timeLast ,

sum(if(mn.order_count>=3,1,0))/sum( if(mn.order_count>=1,1,0)) buy3timeLastRatio ,

date_format('2019-02-10' ,'yyyy-MM') stat_mn,

insert into table ads_sale_tm_category1_stat_mn select mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name, sum(if(mn.order_count >= 1, 1, 0)) buycount, sum(if(mn.order_count >= 2, 1, 0)) buyTwiceLast, sum(if(mn.order_count >= 2, 1, 0)) / sum(if(mn.order_count >= 1, 1, 0)) buyTwiceLastRatio, sum(if(mn.order_count >= 3, 1, 0)) buy3timeLast, sum(if(mn.order_count >= 3, 1, 0)) / sum(if(mn.order_count >= 1, 1, 0)) buy3timeLastRadio, date_format ('2019-02-10' ,'yyyy-MM') stat_mn, '2019-02-10' stat_date from ( select sd.sku_tm_id, sd.sku_category1_id, sd.sku_category1_name, user_id, sum(order_count) order_count from dws_sale_detail_daycount sd where date_format(dt, 'yyyy-MM') <= date_format('2019-02-10', 'yyyy-MM') group by sd.sku_tm_id, sd.sku_category1_id, user_id, sd.sku_category1_name ) mn group by mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name ;

数据导入脚本

1)在/home/kris/bin目录下创建脚本ads_sale.sh

[kris@hadoop101 bin]$ vim ads_sale.sh

#!/bin/bash # 定义变量方便修改 APP=gmall hive=/opt/module/hive/bin/hive # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert into table "$APP".ads_sale_tm_category1_stat_mn select mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name, sum(if(mn.order_count>=1,1,0)) buycount, sum(if(mn.order_count>=2,1,0)) buyTwiceLast, sum(if(mn.order_count>=2,1,0))/sum( if(mn.order_count>=1,1,0)) buyTwiceLastRatio, sum(if(mn.order_count>=3,1,0)) buy3timeLast , sum(if(mn.order_count>=3,1,0))/sum( if(mn.order_count>=1,1,0)) buy3timeLastRatio , date_format('$do_date' ,'yyyy-MM') stat_mn, '$do_date' stat_date from ( select od.sku_tm_id, od.sku_category1_id, od.sku_category1_name, user_id , sum(order_count) order_count from "$APP".dws_sale_detail_daycount od where date_format(dt,'yyyy-MM')<=date_format('$do_date' ,'yyyy-MM') group by od.sku_tm_id, od.sku_category1_id, user_id, od.sku_category1_name ) mn group by mn.sku_tm_id, mn.sku_category1_id, mn.sku_category1_name; " $hive -e "$sql" 增加脚本执行权限 [kris@hadoop101 bin]$ chmod 777 ads_sale.sh 执行脚本导入数据 [kris@hadoop101 bin]$ ads_sale.sh 2019-02-11 查看导入数据 hive (gmall)>select * from ads_sale_tm_category1_stat_mn limit 2;

品牌复购率结果输出到MySQL

1)在MySQL中创建ads_sale_tm_category1_stat_mn表

create table ads_sale_tm_category1_stat_mn ( tm_id varchar(200) comment '品牌id ' , category1_id varchar(200) comment '1级品类id ', category1_name varchar(200) comment '1级品类名称 ', buycount varchar(200) comment '购买人数', buy_twice_last varchar(200) comment '两次以上购买人数', buy_twice_last_ratio varchar(200) comment '单次复购率', buy_3times_last varchar(200) comment '三次以上购买人数', buy_3times_last_ratio varchar(200) comment '多次复购率' , stat_mn varchar(200) comment '统计月份', stat_date varchar(200) comment '统计日期' )

2)编写Sqoop导出脚本

在/home/kris/bin目录下创建脚本sqoop_export.sh

[kris@hadoop101 bin]$ vim sqoop_export.sh

#!/bin/bash db_name=gmall export_data() { /opt/module/sqoop/bin/sqoop export --connect "jdbc:mysql://hadoop101:3306/${db_name}?useUnicode=true&characterEncoding=utf-8" --username root --password 123456 --table $1 --num-mappers 1 --export-dir /warehouse/$db_name/ads/$1 --input-fields-terminated-by " " --update-key "tm_id,category1_id,stat_mn,stat_date" --update-mode allowinsert --input-null-string '\N' --input-null-non-string '\N' } case $1 in "ads_sale_tm_category1_stat_mn") export_data "ads_sale_tm_category1_stat_mn" ;; "all") export_data "ads_sale_tm_category1_stat_mn" ;; esac

3)执行Sqoop导出脚本

[kris@hadoop101 bin]$ chmod 777 sqoop_export.sh

[kris@hadoop101 bin]$ sqoop_export.sh all

4)在MySQL中查看结果

SELECT * FROM ads_sale_tm_category1_stat_mn;

求每个等级的用户对应的复购率前十的商品排行

1)每个等级,每种商品,买一次的用户数,买两次的用户数=》得出复购率

2)利用开窗函数,取每个等级的前十

3)形成脚本

用户购买明细宽表 dws_sale_detail_daycount

① t1--按user_leval, sku_id, user_id统计下单次数

select user_level, sku_id, user_id, sum(order_count) order_count_sum from dws_sale_detail_daycount where date_format(dt, 'yyyy-MM') = date_format('2019-02-13', 'yyyy-MM') group by user_level, sku_id, user_id limit 10;

② t2 --求出每个等级,每种商品,买一次的用户数,买两次的用户数 得出复购率

select t1.user_level, t1.sku_id, sum(if(t1.order_count_sum > 0, 1, 0)) buyOneCount, sum(if(t1.order_count_sum > 1, 1, 0)) buyTwiceCount, sum(if(t1.order_count_sum > 1, 1, 0)) / sum(if(t1.order_count_sum > 0, 1, 0)) * 100 buyTwiceCountRatio, '2019-02-13' stat_date from( select user_level, sku_id, user_id, sum(order_count) order_count_sum from dws_sale_detail_daycount where date_format(dt, 'yyyy-MM') = date_format('2019-02-13', 'yyyy-MM') group by user_level, sku_id, user_id ) t1 group by t1.user_level, t1.sku_id;

③ t3 --按用户等级分区,复购率排序

select t2.user_level, t2.sku_id, t2.buyOneCount, t2.buyTwiceCount, t2.buyTwiceCountRatio, t2.stat_date from( select t1.user_level, t1.sku_id, sum(if(t1.order_count_sum > 0, 1, 0)) buyOneCount, sum(if(t1.order_count_sum > 1, 1, 0)) buyTwiceCount, sum(if(t1.order_count_sum > 1, 1, 0)) / sum(if(t1.order_count_sum > 0, 1, 0)) * 100 buyTwiceCountRatio, '2019-02-13' stat_date from( select user_level, sku_id, user_id, sum(order_count) order_count_sum from dws_sale_detail_daycount where date_format(dt, 'yyyy-MM') = date_format('2019-02-13', 'yyyy-MM') group by user_level, sku_id, user_id ) t1 group by t1.user_level, t1.sku_id )t2

④ -分区排序 rank()

select t2.user_level, t2.sku_id, t2.buyOneCount, t2.buyTwiceCount, t2.buyTwiceCountRatio, rank() over(partition by t2.sku_id order by t2.buyTwiceCount) rankNo from( select t1.user_level, t1.sku_id, sum(if(t1.order_count_sum > 0, 1, 0)) buyOneCount, sum(if(t1.order_count_sum > 1, 1, 0)) buyTwiceCount, sum(if(t1.order_count_sum > 1, 1, 0)) / sum(if(t1.order_count_sum > 0, 1, 0)) * 100 buyTwiceCountRatio, '2019-02-13' stat_date from( select user_level, sku_id, user_id, sum(order_count) order_count_sum from dws_sale_detail_daycount where date_format(dt, 'yyyy-MM') = date_format('2019-02-13', 'yyyy-MM') group by user_level, sku_id, user_id ) t1 group by t1.user_level, t1.sku_id )t2

⑤ 作为子查询取前10

select t3.user_level, t3.sku_id, t3.buyOneCount, t3.buyTwiceCount, t3.buyTwiceCountRatio, t3.rankNo from( select t2.user_level, t2.sku_id, t2.buyOneCount, t2.buyTwiceCount, t2.buyTwiceCountRatio, rank() over(partition by t2.sku_id order by t2.buyTwiceCount) rankNo from( select t1.user_level, t1.sku_id, sum(if(t1.order_count_sum > 0, 1, 0)) buyOneCount, sum(if(t1.order_count_sum > 1, 1, 0)) buyTwiceCount, sum(if(t1.order_count_sum > 1, 1, 0)) / sum(if(t1.order_count_sum > 0, 1, 0)) * 100 buyTwiceCountRatio, '2019-02-13' stat_date from( select user_level, sku_id, user_id, sum(order_count) order_count_sum from dws_sale_detail_daycount where date_format(dt, 'yyyy-MM') = date_format('2019-02-13', 'yyyy-MM') group by user_level, sku_id, user_id ) t1 group by t1.user_level, t1.sku_id )t2 ) t3 where rankNo <= 10;