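Oozie functional modules

workflow

A workflow consists of multiple work units.

The work units depend on one another, for example:

MR1 -> MR2 -> MR3 -> result

hadoop jar: submits a single MR job.

oozie: monitors the state of the current work unit and automatically submits the next one once it completes.

scheduler

crontab: the simple script scheduler built into Linux.

Oozie likewise runs work units on a timed schedule.

Modules:

1) Workflow: defines the processing flow. Nodes run in sequence, with support for fork (branch into several nodes) and join (merge several nodes back into one).

2) Coordinator: schedules workflows. Each coordinator can define only one period and triggers a workflow on that timer.

3) Bundle: groups multiple scheduled jobs, that is, multiple Coordinators.

Common workflow nodes:

1) Action Nodes: each work unit maps to an action node.

2) Control Flow Nodes: determine which direction the workflow takes.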

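Deploying Hadoop (CDH release)

The Apache release of Oozie has to be compiled first (Maven pulls in the required JARs), so the CDH build is used here.

NameNode: core-site.xml

The HDFS port is changed from 9000 to 8020 to distinguish this cluster from the earlier Hadoop installation.

core-site.xml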

<!-- Address of the NameNode in HDFS -->
<property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop101:8020</value>
</property>
<!-- Directory for files Hadoop generates at runtime -->
<property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/module/cdh/hadoop-2.5.0-cdh5.3.6</value>
</property>
<!-- Hosts from which kris (the account running the Oozie server) may act as a proxy -->
<property>
    <name>hadoop.proxyuser.kris.hosts</name>
    <value>*</value>
</property>
<!-- Groups whose users may be proxied by Oozie -->
<property>
    <name>hadoop.proxyuser.kris.groups</name>
    <value>*</value>
</property>

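Hadoop proxy users

A user may not have permission to perform an operation directly; a proxy user can carry it out on that user's behalf.

Oozie relies on this Hadoop proxy mechanism. In the configuration above, kris is the proxy user (the account the Oozie server runs as), and it acts on behalf of the user who submits a job, for example user1.

hadoop.proxyuser.kris.hosts: the hosts from which kris may act as a proxy.

hadoop.proxyuser.kris.groups: the groups whose users kris may act for.

With both set to *, kris can act for any user from any host.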
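To double-check which proxy rules a core-site.xml actually contains, a small script can list every hadoop.proxyuser.* entry. This is only a sketch: the CORE_SITE string is a hypothetical sample that mirrors the two properties above, wrapped in the <configuration> root element that Hadoop site files use, and the helper name proxy_user_rules is made up for this illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample mirroring the proxy-user properties shown above.
CORE_SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://hadoop101:8020</value></property>
  <property><name>hadoop.proxyuser.kris.hosts</name><value>*</value></property>
  <property><name>hadoop.proxyuser.kris.groups</name><value>*</value></property>
</configuration>"""

def proxy_user_rules(xml_text):
    """Return {user: {"hosts": ..., "groups": ...}} for hadoop.proxyuser.* properties."""
    rules = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        parts = name.split(".")
        # Expected shape: hadoop.proxyuser.<user>.<hosts|groups>
        if (len(parts) == 4 and parts[:2] == ["hadoop", "proxyuser"]
                and parts[3] in ("hosts", "groups")):
            rules.setdefault(parts[2], {})[parts[3]] = value
    return rules

print(proxy_user_rules(CORE_SITE))
```

For the sample above this prints one entry for kris with both hosts and groups set to *, which matches the "any user from any host" reading.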

hdfs-site.xml

<!-- Number of data replicas -->
<property>
    <name>dfs.replication</name>
    <value>3</value>
</property>
<!-- Host and port of the Hadoop SecondaryNameNode -->
<property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>hadoop103:50090</value>
</property>

yarn-site.xml

<!-- How reducers fetch data -->
<property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
</property>
<!-- Address of the YARN ResourceManager -->
<property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop102</value>
</property>
<!-- Job history log service -->
<property>
    <name>yarn.log.server.url</name>
    <value>http://hadoop101:19888/jobhistory/logs/</value>
</property>

mapred-site.xml (rename the bundled template file to this name)

<property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
</property>
<!-- MapReduce JobHistory Server address; the default port is 10020 -->
<property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop101:10020</value>
</property>
<!-- MapReduce JobHistory Server web UI address; the default port is 19888 -->
<property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop101:19888</value>
</property>

Oozie monitors work units through the JobHistory Server.

The service listens on port 10020 and the web UI on port 19888.

slaves

hadoop101

hadoop102

hadoop103

Start the Hadoop cluster:

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ bin/hdfs namenode -format ## if prompted to re-format, answer N

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ sbin/start-dfs.sh

[kris@hadoop102 hadoop-2.5.0-cdh5.3.6]$ sbin/start-yarn.sh

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ sbin/mr-jobhistory-daemon.sh start historyserver

Deploying Oozie

1. Extract Oozie

[kris@hadoop101 software]$ tar -zxvf /opt/software/oozie-4.0.0-cdh5.3.6.tar.gz -C /opt/module

2. In the Oozie root directory, extract oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz. The archive's top-level directory has the same name as the Oozie root, so extracting into the parent directory places hadooplibs directly under the Oozie root:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ tar -zxvf oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz -C ../

Alternatively, extract in place and move hadooplibs up one level:

tar -zxf oozie-hadooplibs-4.0.0-cdh5.3.6.tar.gz

mv hadooplibs/ ../

Afterwards a hadooplibs directory appears under the Oozie directory.

3. Create a libext directory in the Oozie directory

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ mkdir libext/

4. Copy the dependencies (the Hadoop JARs, the MySQL driver, and the JS framework) into libext

1) Copy the JARs from hadooplibs into libext:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ cp -ra hadooplibs/hadooplib-2.5.0-cdh5.3.6.oozie-4.0.0-cdh5.3.6/* libext/

2) Copy the MySQL driver into libext:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ cp -a /opt/software/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar ./libext/

3) Copy ext-2.2.zip into libext/. Ext is a JavaScript framework used to render the Oozie web pages:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ cp -a /opt/software/ext-2.2.zip libext/

5. Edit the Oozie configuration file

oozie-site.xml

Property: oozie.service.JPAService.jdbc.driver

Value: com.mysql.jdbc.Driver

Description: the JDBC driver class

Property: oozie.service.JPAService.jdbc.url

Value: jdbc:mysql://hadoop101:3306/oozie

Description: address of the database Oozie uses

Property: oozie.service.JPAService.jdbc.username

Value: root

Description: database user name

Property: oozie.service.JPAService.jdbc.password

Value: 123456

Description: database password

Property: oozie.service.HadoopAccessorService.hadoop.configurations

Value: *=/opt/module/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop

Description: points Oozie at the Hadoop configuration files
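The settings above are listed as name/value pairs, while oozie-site.xml expects <property> elements. As a rough sketch, the following script renders the pairs in that shape so they can be pasted into the file; the dictionary simply copies the values listed above, and the helper name to_property_xml is made up for this illustration.

```python
import xml.etree.ElementTree as ET

# Name/value pairs copied from the list above.
SETTINGS = {
    "oozie.service.JPAService.jdbc.driver": "com.mysql.jdbc.Driver",
    "oozie.service.JPAService.jdbc.url": "jdbc:mysql://hadoop101:3306/oozie",
    "oozie.service.JPAService.jdbc.username": "root",
    "oozie.service.JPAService.jdbc.password": "123456",
    "oozie.service.HadoopAccessorService.hadoop.configurations":
        "*=/opt/module/cdh/hadoop-2.5.0-cdh5.3.6/etc/hadoop",
}

def to_property_xml(settings):
    """Render each name/value pair as a Hadoop-style <property> element."""
    blocks = []
    for name, value in settings.items():
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
        ET.indent(prop, space="    ")  # requires Python 3.9+
        blocks.append(ET.tostring(prop, encoding="unicode"))
    return "\n".join(blocks)

xml_text = to_property_xml(SETTINGS)
print(xml_text)
```

Going through an XML library rather than string concatenation means characters such as & or < in a value (for example in a longer JDBC URL) would be escaped correctly.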

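6. Create the Oozie database in MySQL

Log in to MySQL and create the oozie database:

$ mysql -uroot -p123456

mysql> create database oozie;

7. Initialize Oozie

1) Upload the yarn.tar.gz sharelib archive from the Oozie directory to HDFS. Oozie unpacks the archive and uploads its contents automatically:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie-setup.sh sharelib create -fs hdfs://hadoop101:8020 -locallib oozie-sharelib-4.0.0-cdh5.3.6-yarn.tar.gz

After the command succeeds, open the web UI on port 50070 and check that files were created in the corresponding directory.

2) Create the oozie.sql file. The command generates a .sql file; if no file name is given, it is created in the tmp directory: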

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/ooziedb.sh create -sqlfile oozie.sql -run

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

3) Package the project and build the WAR file

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie-setup.sh prepare-war

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

New Oozie WAR file with added 'ExtJS library, JARs' at /opt/module/oozie-4.0.0-cdh5.3.6/oozie-server/webapps/oozie.war

4) Starting and stopping Oozie

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozied.sh start

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozied.sh stop

Open the Oozie web page:

http://hadoop101:11000/oozie/

If bin/oozied.sh start does not bring Oozie up, delete the leftover oozie.pid file and start it again:

[kris@hadoop101 temp]$ pwd

/opt/module/oozie-4.0.0-cdh5.3.6/oozie-server/temp

[kris@hadoop101 temp]$ ll

总用量 8

drwxrwxr-x. 3 kris kris 4096 3月 2 10:52 oozie-kris4439005802986311795.dir

-rw-rw-r--. 1 kris kris 5 3月 2 10:52 oozie.pid

-rwxr-xr-x. 1 kris kris 0 11月 14 2014 safeToDelete.tmp
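Before deleting the PID file it can be worth confirming that the process it names is really gone. The following is a minimal sketch for Linux, assuming the PID file location shown in the listing above; the function name oozie_pid_is_stale is made up for this illustration and is not part of Oozie.

```python
import os
from pathlib import Path

def oozie_pid_is_stale(pid_file):
    """Return True if pid_file exists but names a process that is not running."""
    path = Path(pid_file)
    if not path.exists():
        return False                  # nothing to clean up
    try:
        pid = int(path.read_text().strip())
    except ValueError:
        return True                   # unreadable content: treat as stale
    try:
        os.kill(pid, 0)               # signal 0 only checks that the process exists
    except ProcessLookupError:
        return True                   # no such process
    except PermissionError:
        return False                  # process exists but belongs to another user
    return False                      # process is alive

if __name__ == "__main__":
    # Path taken from the pwd/ll output above.
    pid_file = "/opt/module/oozie-4.0.0-cdh5.3.6/oozie-server/temp/oozie.pid"
    if oozie_pid_is_stale(pid_file):
        print("stale PID file, safe to delete:", pid_file)
    else:
        print("no stale PID file found")
```

If the function reports a stale file, removing it and rerunning bin/oozied.sh start corresponds to the manual fix described above.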

Using Oozie

Scheduling a shell script with Oozie

1) Create the working directory

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ mkdir -p oozie-apps/shell

2) Create two files, job.properties and workflow.xml, in oozie-apps/shell

[kris@hadoop101 shell]$ touch workflow.xml

[kris@hadoop101 shell]$ touch job.properties

3) Edit job.properties and workflow.xml

job.properties

# HDFS address
nameNode=hdfs://hadoop101:8020
# ResourceManager address
jobTracker=hadoop102:8032
# Queue name
queueName=default
examplesRoot=oozie-apps
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/shell

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-wf">
    <!-- Start node -->
    <start to="shell-node"/>
    <!-- Action node -->
    <action name="shell-node">
        <!-- Shell action -->
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <!-- Command to execute -->
            <exec>mkdir</exec>
            <argument>/opt/module/d</argument>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <!-- Kill node -->
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <!-- End node -->
    <end name="end"/>
</workflow-app>

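job-tracker is the Hadoop 1.x name; on Hadoop 2.x it is set to the ResourceManager address.

oozie.wf.application.path (${nameNode}/user/${user.name}/${examplesRoot}/shell) is the HDFS path to which these two files must be uploaded. Here it resolves to:

hdfs://hadoop101:8020/user/kris/oozie-apps/shell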
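To see how the ${...} placeholders in job.properties turn into that final path, the substitution can be reproduced with a few lines of Python. This is only an illustration of the expansion, not Oozie's own resolver; user.name is assumed to be kris, the user shown in the shell prompts, and the helper names are made up.

```python
import re

JOB_PROPERTIES = """\
nameNode=hdfs://hadoop101:8020
jobTracker=hadoop102:8032
queueName=default
examplesRoot=oozie-apps
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/shell
"""

def load_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            props[key.strip()] = value.strip()
    return props

def expand(value, props):
    """Replace each ${name} with its value; unknown names are left untouched."""
    pattern = re.compile(r"\$\{([^}]+)\}")
    for _ in range(10):  # bounded, so a circular definition cannot loop forever
        expanded = pattern.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if expanded == value:
            break
        value = expanded
    return value

props = load_properties(JOB_PROPERTIES)
props["user.name"] = "kris"  # assumption: the OS user that submits the job
print(expand(props["oozie.wf.application.path"], props))
```

The printed path is the directory that the hadoop fs -put command has to populate before the job is submitted.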

[kris@hadoop101 shell]$ ll

总用量 8

-rw-rw-r--. 1 kris kris 226 3月 2 18:36 job.properties

-rw-rw-r--. 1 kris kris 900 3月 2 18:37 workflow.xml

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/hadoop fs -put oozie-apps/ /user/kris

19/03/02 18:41:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

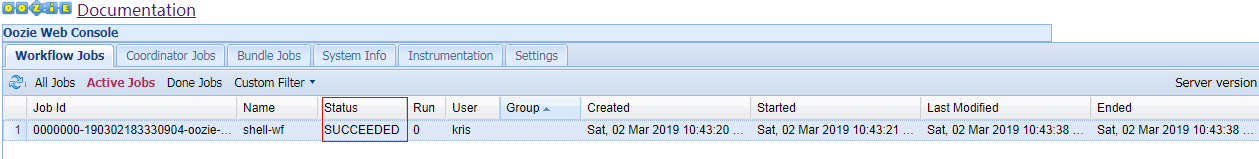

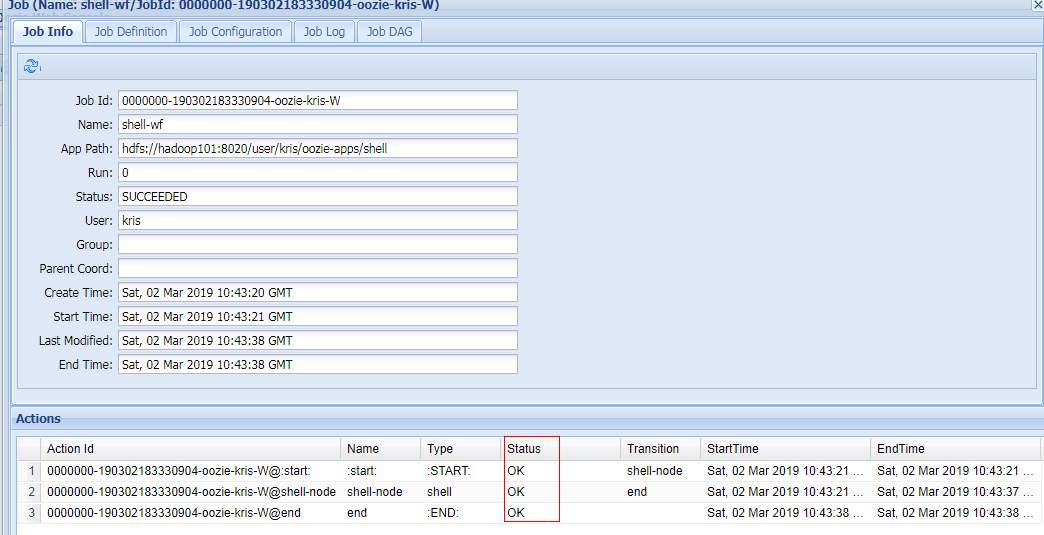

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -config oozie-apps/shell/job.properties -run

job: 0000000-190302183330904-oozie-kris-W
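The job ID printed after submission ends in a letter that tells what kind of job it is: W for a workflow here, and C for the coordinator job later in this post. The status of a submitted job can also be queried with bin/oozie job -oozie http://hadoop101:11000/oozie -info followed by the job ID, or over the Oozie Web Services API. The sketch below only classifies an ID and builds the REST URL; it makes no network call, the helper names are made up, and the B (bundle) suffix is included by analogy.

```python
JOB_KINDS = {"W": "workflow", "C": "coordinator", "B": "bundle"}

def job_kind(job_id):
    """Classify an Oozie job ID by its trailing letter (W, C or B)."""
    return JOB_KINDS.get(job_id.rsplit("-", 1)[-1], "unknown")

def job_info_url(oozie_url, job_id):
    """Build the v1 Web Services URL that returns the job's info as JSON."""
    return f"{oozie_url.rstrip('/')}/v1/job/{job_id}?show=info"

job_id = "0000000-190302183330904-oozie-kris-W"
print(job_kind(job_id))
print(job_info_url("http://hadoop101:11000/oozie", job_id))
```

Opening the printed URL in a browser (or fetching it with any HTTP client) should return a JSON document whose status field mirrors what the web console shows.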

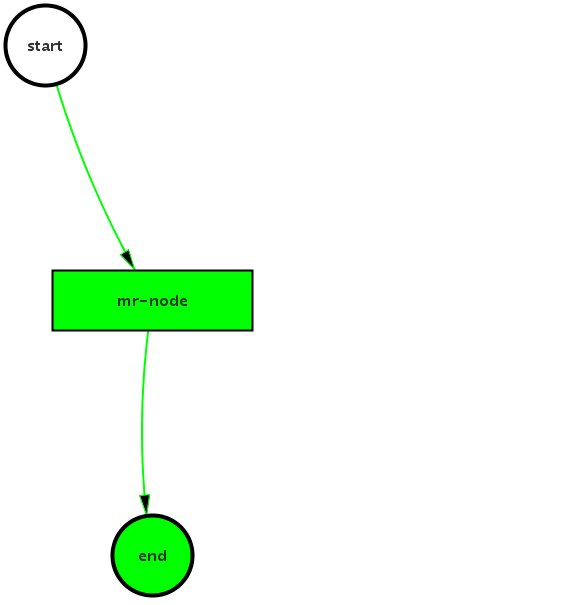

A workflow is a DAG (directed acyclic graph), so it can never form an endless loop:

start
  ↓
action → kill
  ↓
end

A node shown in green has succeeded; any other color means something went wrong.

To kill a job:

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -kill 0000000-190302183330904-oozie-kris-W

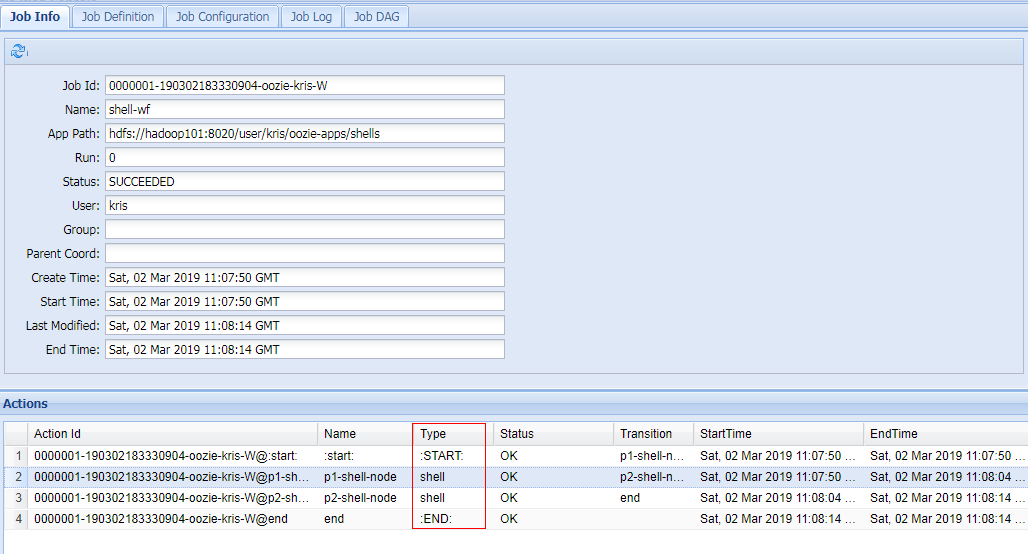

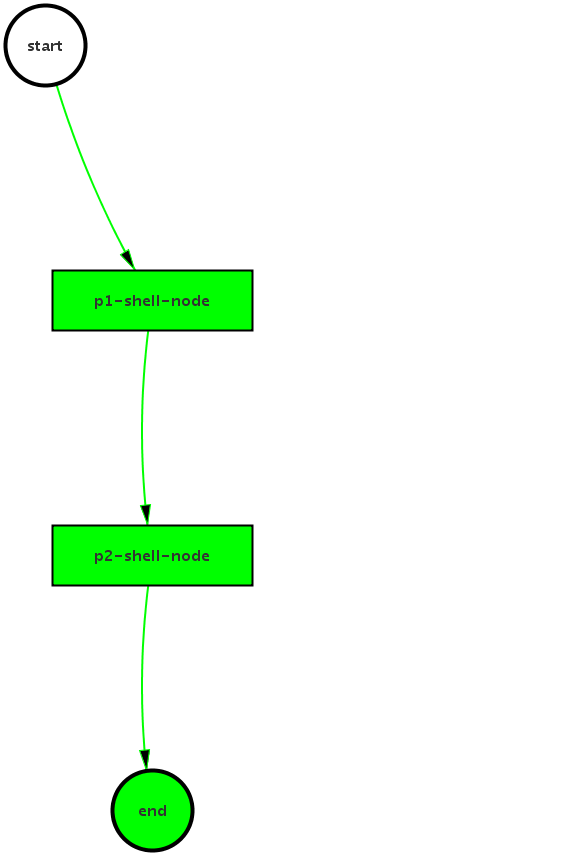

Chaining multiple jobs with Oozie | two work units

job.properties

nameNode=hdfs://hadoop101:8020
jobTracker=hadoop102:8032
queueName=default
examplesRoot=oozie-apps
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/shells

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-wf">
    <start to="p1-shell-node"/>
    <action name="p1-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d1</argument>
            <capture-output/>
        </shell>
        <ok to="p2-shell-node"/>
        <error to="fail"/>
    </action>
    <action name="p2-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d2</argument>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>

Upload the job configuration

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/hadoop fs -put oozie-apps/shells/ /user/kris/oozie-apps

19/03/02 19:06:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Run the job

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -config oozie-apps/shells/job.properties -run

job: 0000001-190302183330904-oozie-kris-W

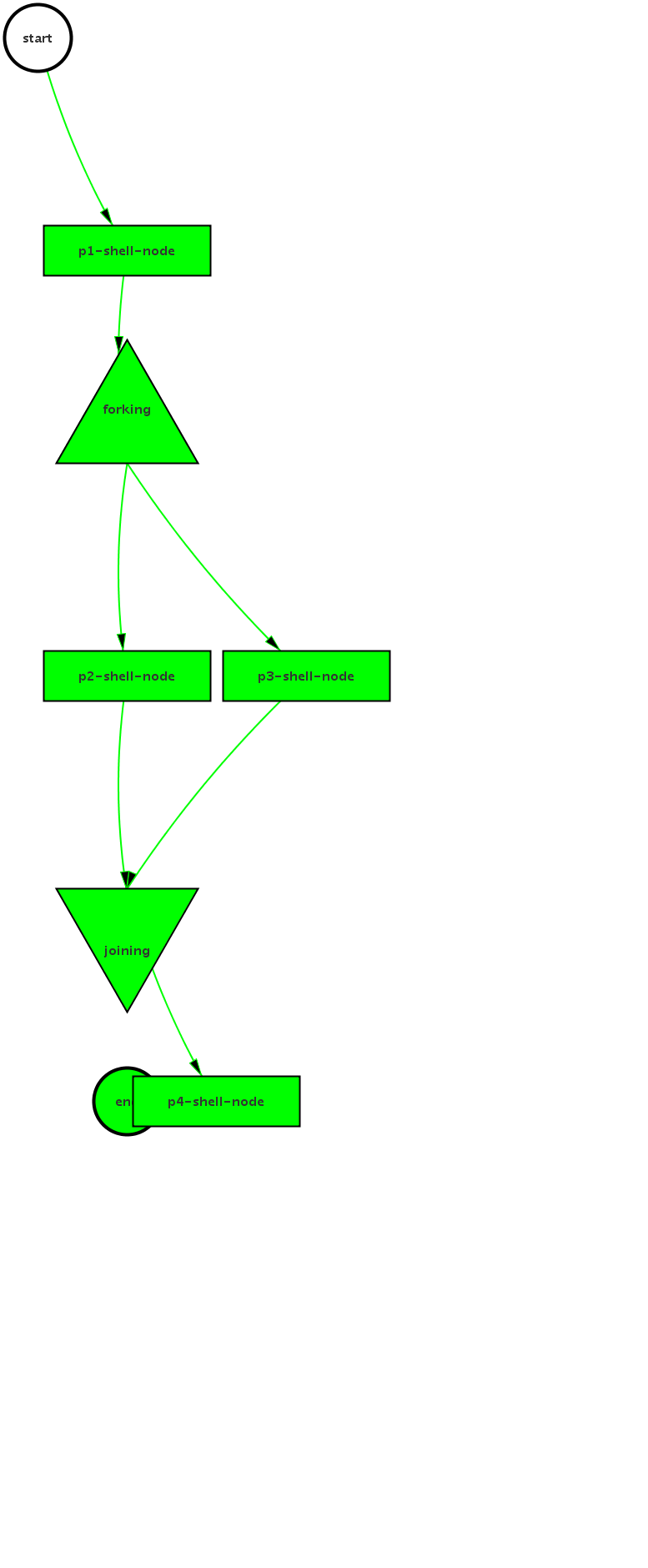

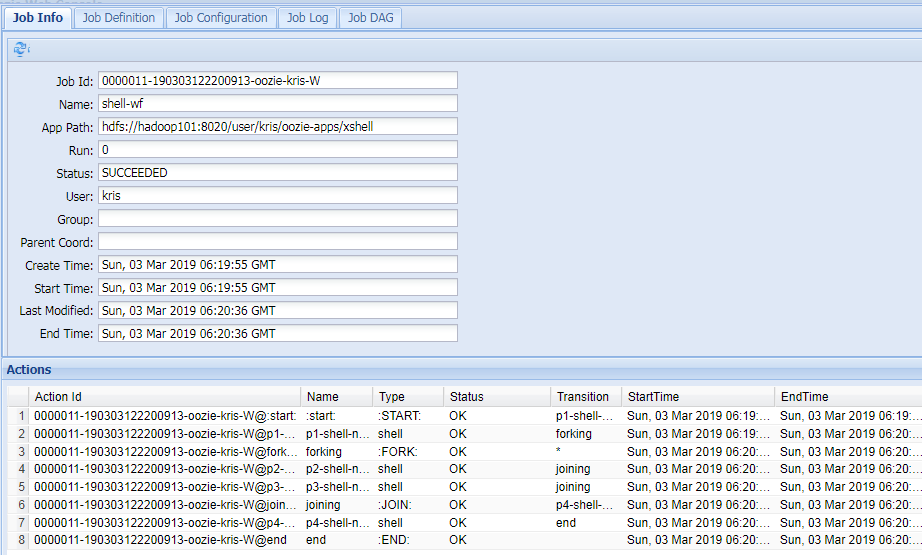

Running a workflow with branches | four work units

For fork and join usage, see the official documentation: http://oozie.apache.org/docs/4.0.0/WorkflowFunctionalSpec.html#a3.1.5_Fork_and_Join_Control_Nodes

                              +--> p2-shell-node --+
p1-shell-node --> forking ----+                    +--> joining --> p4-shell-node
                              +--> p3-shell-node --+
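A quick way to sanity-check a fork/join layout like the one above before uploading it is to read the transitions out of the XML and confirm that every target exists and that the graph has no cycle. The sketch below does this with the Python standard library. The WORKFLOW string is a trimmed, hypothetical skeleton (action bodies removed, so Oozie itself would not accept it), decision nodes are not handled, and the function names are made up for this illustration.

```python
import xml.etree.ElementTree as ET
from graphlib import TopologicalSorter  # Python 3.9+

# Trimmed skeleton of a fork/join workflow; action bodies are omitted.
WORKFLOW = """<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-wf">
  <start to="p1-shell-node"/>
  <action name="p1-shell-node"><ok to="forking"/><error to="fail"/></action>
  <fork name="forking">
    <path start="p2-shell-node"/>
    <path start="p3-shell-node"/>
  </fork>
  <action name="p2-shell-node"><ok to="joining"/><error to="fail"/></action>
  <action name="p3-shell-node"><ok to="joining"/><error to="fail"/></action>
  <join name="joining" to="p4-shell-node"/>
  <action name="p4-shell-node"><ok to="end"/><error to="fail"/></action>
  <kill name="fail"><message>failed</message></kill>
  <end name="end"/>
</workflow-app>"""

def local(tag):
    """Strip the XML namespace from a tag name."""
    return tag.rsplit("}", 1)[-1]

def transitions(workflow_xml):
    """Map each node name to the node names it can transition to."""
    edges = {}
    for node in ET.fromstring(workflow_xml):
        kind = local(node.tag)
        if kind == "start":
            edges["(start)"] = [node.get("to")]
        elif kind == "action":
            edges[node.get("name")] = [
                c.get("to") for c in node if local(c.tag) in ("ok", "error")]
        elif kind == "fork":
            edges[node.get("name")] = [
                p.get("start") for p in node if local(p.tag) == "path"]
        elif kind == "join":
            edges[node.get("name")] = [node.get("to")]
        elif kind in ("kill", "end"):
            edges[node.get("name")] = []
    return edges

def execution_order(edges):
    """Return one valid node order; raise ValueError on a bad target or a cycle."""
    missing = sorted({t for ts in edges.values() for t in ts if t not in edges})
    if missing:
        raise ValueError(f"undefined transition targets: {missing}")
    predecessors = {name: set() for name in edges}
    for name, targets in edges.items():
        for target in targets:
            predecessors[target].add(name)
    # static_order() raises graphlib.CycleError (a ValueError) if there is a loop.
    return list(TopologicalSorter(predecessors).static_order())

order = execution_order(transitions(WORKFLOW))
print(" -> ".join(order))
```

The printed order is one of several valid ones; the point is that p1-shell-node precedes forking, both branches sit between forking and joining, and p4-shell-node only comes after joining.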

job.properties

nameNode=hdfs://hadoop101:8020

jobTracker=hadoop102:8032

queueName=default

examplesRoot=oozie-apps

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/xshell

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-wf">
    <start to="p1-shell-node"/>
    <action name="p1-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d1</argument>
            <capture-output/>
        </shell>
        <ok to="forking"/>
        <error to="fail"/>
    </action>
    <action name="p2-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d2</argument>
            <capture-output/>
        </shell>
        <ok to="joining"/>
        <error to="fail"/>
    </action>
    <action name="p3-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d3</argument>
            <capture-output/>
        </shell>
        <ok to="joining"/>
        <error to="fail"/>
    </action>
    <action name="p4-shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>mkdir</exec>
            <argument>/opt/module/d4</argument>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <fork name="forking">
        <path start="p2-shell-node"/>
        <path start="p3-shell-node"/>
    </fork>
    <join name="joining" to="p4-shell-node"/>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>

Upload

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/hadoop fs -put oozie-apps/xshell/ /user/kris/oozie-apps

19/03/03 14:18:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Run the job

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -config oozie-apps/xshell/job.properties -run

job: 0000011-190303122200913-oozie-kris-W

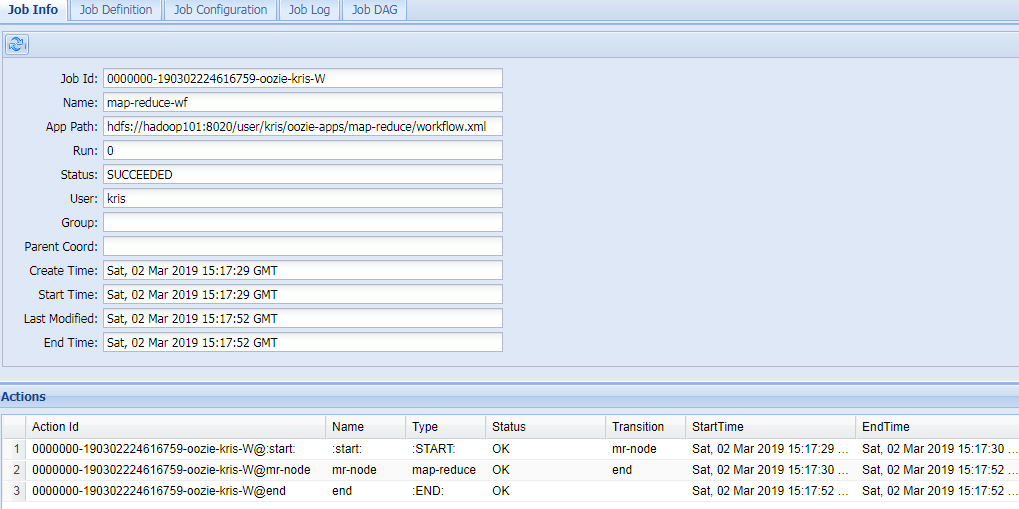

Scheduling a MapReduce job with Oozie

The oozie-examples.tar.gz archive contains all of the example applications:

[kris@hadoop101 apps]$ ll

总用量 88

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 aggregator

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 bundle

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 cron ## the newer one

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 cron-schedule ## timed scheduling, in the style of Linux cron

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 custom-main

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 datelist-java-main

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 demo

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 distcp

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 hadoop-el

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 hcatalog

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 hive

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 java-main # Java class

drwxr-xr-x. 3 kris kris 4096 3月 2 19:19 map-reduce #

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 no-op

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 pig

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 shell #

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 sla

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 sqoop #

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 sqoop-freeform

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 ssh

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 streaming

drwxr-xr-x. 2 kris kris 4096 3月 2 19:19 subwf

[kris@hadoop101 apps]$ pwd

/opt/module/oozie-4.0.0-cdh5.3.6/examples/apps

[kris@hadoop101 map-reduce]$ ll

总用量 20

-rw-r--r--. 1 kris kris 1012 7月 29 2015 job.properties

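-rw-r--r--. 1 kris kris 1028 7月 29 2015 job-with-config-class.properties # variant that uses a configuration class

In the three MapReduce classes written earlier, the Driver sets the input and output paths and the key/value types. When Oozie invokes MR, that configuration can be supplied in two ways:

One is to write a dedicated configuration class; Oozie provides an interface for this, and you implement its methods.

The other is to configure everything directly in workflow.xml. The second approach is used here.

drwxrwxr-x. 2 kris kris 4096 3月 2 19:19 lib # holds the JAR files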

-rw-r--r--. 1 kris kris 2274 7月 29 2015 workflow-with-config-class.xml

-rw-r--r--. 1 kris kris 2559 7月 29 2015 workflow.xml

1) Copy the official template into oozie-apps

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ cp -r /opt/module/oozie-4.0.0-cdh5.3.6/examples/apps/map-reduce/ oozie-apps/

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ bin/hadoop fs -mkdir /input

19/03/02 19:34:02 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2) Test that wordcount runs on YARN

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/yarn jar /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /input/ /output/

19/03/02 19:34:32 INFO mapreduce.Job: map 0% reduce 100%

19/03/02 19:34:33 INFO mapreduce.Job: Job job_1551522372459_0005 completed successfully

19/03/02 19:34:33 INFO mapreduce.Job: Counters: 38

Another way to run the same test:

Create the input file wordcount.txt

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ vim wordcount.txt

java java java kafka kafka oozie

Upload it to HDFS

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ bin/hadoop fs -put wordcount.txt /

Run wordcount from the official examples JAR

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount /wordcount.txt /output
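To know what the job should produce for this input, the same count can be computed locally. This is only a reference calculation in plain Python, not the MapReduce implementation; the tab-separated, key-sorted layout imitates the usual wordcount output and assumes the words in wordcount.txt are exactly the six shown above.

```python
from collections import Counter

# Contents of wordcount.txt as shown above.
text = "java java java kafka kafka oozie"

counts = Counter(text.split())
for word in sorted(counts):
    print(f"{word}\t{counts[word]}")
```

Comparing these lines with the part file under /output on HDFS is a quick check that the cluster run behaved as expected.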

Put the official wordcount examples JAR into the lib directory

[kris@hadoop101 lib]$ ll
总用量 28
-rw-r--r--. 1 kris kris 24707 3月 3 14:49 oozie-examples-4.0.0-cdh5.3.6.jar
[kris@hadoop101 lib]$ rm -rf oozie-examples-4.0.0-cdh5.3.6.jar
[kris@hadoop101 lib]$ cp /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar ./
[kris@hadoop101 lib]$ ll
总用量 272
-rw-r--r--. 1 kris kris 275958 3月 3 14:54 hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar
[kris@hadoop101 lib]$

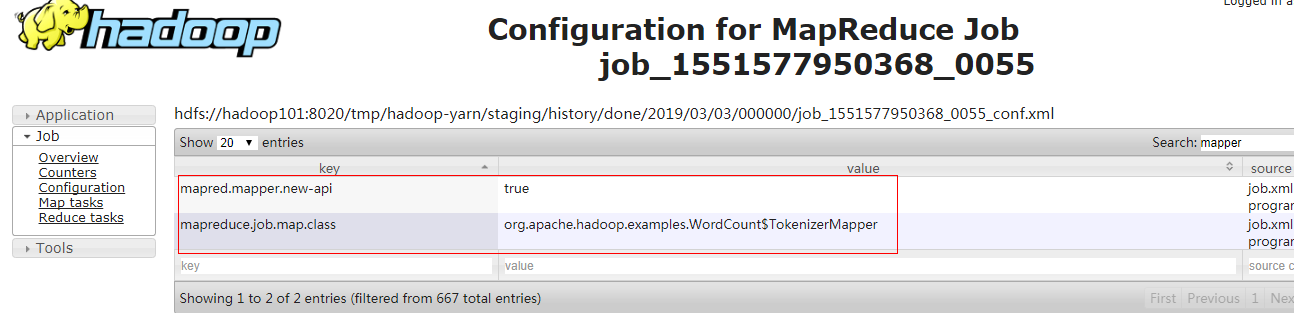

3) Configure job.properties and workflow.xml for the map-reduce job

job.properties

nameNode=hdfs://hadoop101:8020
jobTracker=hadoop102:8032
queueName=default
examplesRoot=oozie-apps
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/map-reduce/workflow.xml
outputDir=map-reduce

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.2" name="map-reduce-wf">
    <start to="mr-node"/>
    <action name="mr-node">
        <map-reduce>
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <prepare>
                <delete path="${nameNode}/output/"/>
            </prepare>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
                <!-- Use the new API when scheduling the MR job -->
                <property>
                    <name>mapred.mapper.new-api</name>
                    <value>true</value>
                </property>
                <property>
                    <name>mapred.reducer.new-api</name>
                    <value>true</value>
                </property>
                <!-- Job output key type -->
                <property>
                    <name>mapreduce.job.output.key.class</name>
                    <value>org.apache.hadoop.io.Text</value>
                </property>
                <!-- Job output value type -->
                <property>
                    <name>mapreduce.job.output.value.class</name>
                    <value>org.apache.hadoop.io.IntWritable</value>
                </property>
                <!-- Input path -->
                <property>
                    <name>mapred.input.dir</name>
                    <value>/wordcount.txt</value>
                </property>
                <!-- Output path -->
                <property>
                    <name>mapred.output.dir</name>
                    <value>/output/</value>
                </property>
                <!-- Map class -->
                <property>
                    <name>mapreduce.job.map.class</name>
                    <value>org.apache.hadoop.examples.WordCount$TokenizerMapper</value>
                </property>
                <!-- Reduce class -->
                <property>
                    <name>mapreduce.job.reduce.class</name>
                    <value>org.apache.hadoop.examples.WordCount$IntSumReducer</value>
                </property>
            </configuration>
        </map-reduce>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>

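<delete path="${nameNode}/output/"/> ## A MapReduce job fails if its output path already exists, so the /output directory is deleted before the job runs.

# The mapred.* property names are the old ones. The official documentation compares the old and new names, or you can read the wordcount source to see which properties it sets.

If the map output key/value types are the same as the final output key/value types, configuring them once is enough.

# The number of map and reduce tasks is left unspecified so that the framework decides. The property values can be found by reading the source or decompiling the JAR.

<property>
    <name>mapred.map.tasks</name> ## optional
    <value>1</value>
</property>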

4) Upload the configured directory to HDFS

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/hdfs dfs -put oozie-apps/map-reduce/ /user/kris/oozie-apps

5) Run the job

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -config oozie-apps/map-reduce/job.properties -run

job: 0000000-190302224616759-oozie-kris-W

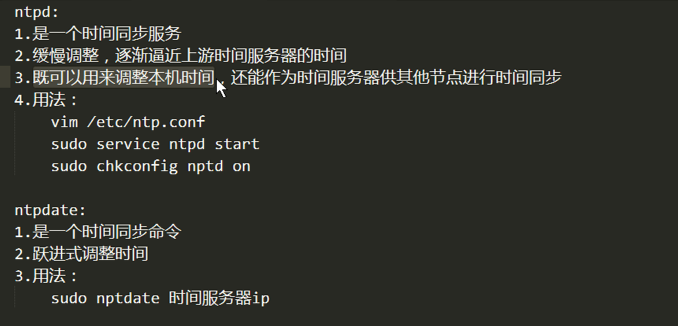

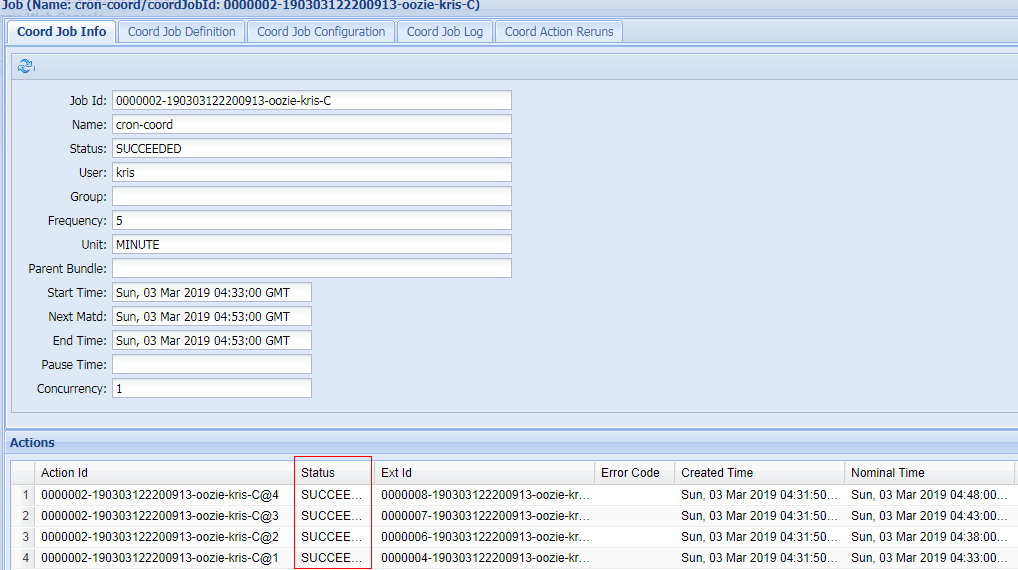

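Scheduled and recurring jobs with Oozie

Goal: use a Coordinator to run a job periodically

Steps:

1) Configure the Linux time zone and the time server

2) Check the current system time zone:

# date -R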

[kris@hadoop101 hadoop-2.5.0-cdh5.3.6]$ date -R

Sun, 03 Mar 2019 12:58:37 +0800

[kris@hadoop102 module]$ date -R

Sun, 03 Mar 2019 12:58:02 +0800

[kris@hadoop103 ~]$ date -R

Sun, 03 Mar 2019 12:58:56 +0800

Edit oozie-site.xml and change the default UTC to GMT+0800. Note: the property can be found in oozie-default.xml.

[kris@hadoop101 conf]$ vim oozie-site.xml

<property>

<name>oozie.processing.timezone</name>

<value>GMT+0800</value>

<description>

Oozie server timezone. Valid values are UTC and GMT(+/-)####, for example 'GMT+0530' would be India

timezone. All dates parsed and genered dates by Oozie Coordinator/Bundle will be done in the specified

timezone. The default value of 'UTC' should not be changed under normal circumtances. If for any reason

is changed, note that GMT(+/-)#### timezones do not observe DST changes.

</description>

</property>

Change the time zone setting in the JS framework code

function getTimeZone() {

Ext.state.Manager.setProvider(new Ext.state.CookieProvider());

return Ext.state.Manager.get("TimezoneId","GMT+0800");

}

Restart the Oozie service and the browser (be sure to clear the browser cache)

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozied.sh stop

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozied.sh start

Copy the official template for the scheduled job

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ cp -r examples/apps/cron oozie-apps/

Edit the template files job.properties, coordinator.xml, and workflow.xml

job.properties

nameNode=hdfs://hadoop101:8020

jobTracker=hadoop102:8032

queueName=default

examplesRoot=oozie-apps

oozie.coord.application.path=${nameNode}/user/${user.name}/${examplesRoot}/cron

#start: must be set to a time in the future, otherwise the job fails

start=2019-03-03T12:33+0800

end=2019-03-03T12:53+0800

workflowAppUri=${nameNode}/user/${user.name}/${examplesRoot}/cron

EXEC=p1.sh

coordinator.xml

<coordinator-app name="cron-coord" frequency="${coord:minutes(5)}" start="${start}" end="${end}" timezone="GMT+0800"
                 xmlns="uri:oozie:coordinator:0.2">
    <action>
        <workflow>
            <app-path>${workflowAppUri}</app-path>
            <configuration>
                <property>
                    <name>jobTracker</name>
                    <value>${jobTracker}</value>
                </property>
                <property>
                    <name>nameNode</name>
                    <value>${nameNode}</value>
                </property>
                <property>
                    <name>queueName</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
        </workflow>
    </action>
</coordinator-app>
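To get a feel for what a 5-minute frequency between start and end means, the trigger times can be enumerated by hand. The sketch below is a plain calculation rather than anything Oozie runs: it assumes the end time is exclusive, and the helper future_window, which suggests start and end values that lie in the future in GMT+0800, is a made-up convenience for filling in job.properties.

```python
from datetime import datetime, timedelta, timezone

OOZIE_FORMAT = "%Y-%m-%dT%H:%M%z"  # e.g. 2019-03-03T12:33+0800

def nominal_times(start, end, every_minutes):
    """List the times at which a coordinator with this frequency would trigger.

    Assumption: the end time is exclusive.
    """
    current = datetime.strptime(start, OOZIE_FORMAT)
    stop = datetime.strptime(end, OOZIE_FORMAT)
    step = timedelta(minutes=every_minutes)
    times = []
    while current < stop:
        times.append(current.strftime(OOZIE_FORMAT))
        current += step
    return times

def future_window(minutes_from_now=5, duration_minutes=20):
    """Suggest start/end values in GMT+0800 that lie in the future."""
    tz = timezone(timedelta(hours=8))
    start = (datetime.now(tz).replace(second=0, microsecond=0)
             + timedelta(minutes=minutes_from_now))
    end = start + timedelta(minutes=duration_minutes)
    return start.strftime(OOZIE_FORMAT), end.strftime(OOZIE_FORMAT)

print(nominal_times("2019-03-03T12:33+0800", "2019-03-03T12:53+0800", 5))
print(future_window())
```

With the start and end values from job.properties, the first call lists 12:33, 12:38, 12:43 and 12:48, so under the stated assumption the workflow would be triggered four times.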

workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.5" name="one-op-wf">
    <start to="shell-node"/>
    <action name="shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>${EXEC}</exec>
            <file>/user/kris/oozie-apps/cron/${EXEC}#${EXEC}</file>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>

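Here #${EXEC} gives the file an alias. The values you need to customize are frequency, start, end, and timezone, plus the HDFS path of the workflow to be scheduled.

workflowAppUri is the HDFS path that holds workflow.xml and job.properties.

<!-- How to specify the script to execute -->

<exec>p1.sh</exec>

<file>/opt/module/oozie-apps/cron/p1.sh</file> # path on HDFS

<capture-output/>

Alternatively, p1.sh can be placed in a lib directory that you create yourself, in which case the path does not need to be written out.

YARN collects logs through its containers, so the script's log output can be inspected there.

[kris@hadoop101 cron]$ vim p1.sh # use absolute paths inside the script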

#!/bin/bash

date >> /opt/module/p1.sh

Upload the configuration

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ /opt/module/cdh/hadoop-2.5.0-cdh5.3.6/bin/hadoop fs -put oozie-apps/cron/ /user/kris/oozie-apps

Start the job

[kris@hadoop101 oozie-4.0.0-cdh5.3.6]$ bin/oozie job -oozie http://hadoop101:11000/oozie -config oozie-apps/cron/job.properties -run

job: 0000002-190303122200913-oozie-kris-C

The output then shows up in the target file:

[kris@hadoop102 module]$ cat p1.sh

2019年 03月 03日 星期日 12:32:14 CST

2019年 03月 03日 星期日 12:33:09 CST

2019年 03月 03日 星期日 12:37:07 CST

Hive configuration

[kris@hadoop101 hive]$ ll
总用量 12
-rw-r--r--. 1 kris kris 1000 7月 29 2015 job.properties
-rw-r--r--. 1 kris kris 939 7月 29 2015 script.q
-rw-r--r--. 1 kris kris 2003 7月 29 2015 workflow.xml
[kris@hadoop101 hive]$ pwd
/opt/module/oozie-4.0.0-cdh5.3.6/examples/apps/hive

[kris@hadoop101 hive]$ vim script.q

CREATE EXTERNAL TABLE test (a INT) STORED AS TEXTFILE LOCATION '${INPUT}';

INSERT OVERWRITE DIRECTORY '${OUTPUT}' SELECT * FROM test;

[kris@hadoop101 hive]$ vim workflow.xml

<script>script.q</script>...