2.3 Using the Pre-Built Griffin Packages (Optional)

2.3.1 Modify the Configuration Files Inside the jar

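After Griffin is compiled, the target directories of the Service and Measure modules contain service-0.6.0.jar and measure-0.6.0.jar respectively. Because we are using the pre-built jars directly, the configuration files inside service-0.6.0.jar must be edited to match your environment.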
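
As an alternative to a GUI archive tool, the entries can also be replaced with a short script. The sketch below uses only Python's standard zipfile module and assumes a Spring Boot executable jar (entries under BOOT-INF/). The function name replace_jar_entries is hypothetical. The script rewrites the whole archive instead of patching it in place. It copies each entry's original compression method, because Spring Boot expects nested jars under BOOT-INF/lib/ to be stored uncompressed.

```python
import os
import tempfile
import zipfile
from pathlib import Path


def replace_jar_entries(jar_path, replacements):
    """Rewrite the jar so that each entry named in `replacements`
    (entry name -> new bytes) gets new content. All other entries are
    copied unchanged, each keeping its original compression method."""
    jar_path = Path(jar_path)
    fd, tmp_name = tempfile.mkstemp(suffix=".jar", dir=jar_path.parent)
    os.close(fd)  # zipfile reopens the temporary file by name
    try:
        with zipfile.ZipFile(jar_path) as src, zipfile.ZipFile(tmp_name, "w") as dst:
            missing = set(replacements) - set(src.namelist())
            if missing:
                raise KeyError(f"entries not found in jar: {sorted(missing)}")
            for info in src.infolist():
                data = replacements.get(info.filename)
                if data is None:
                    data = src.read(info.filename)
                # Copy name, timestamp, attributes and compression method so that
                # nested jars under BOOT-INF/lib/ stay uncompressed (STORED).
                new_info = zipfile.ZipInfo(info.filename, date_time=info.date_time)
                new_info.compress_type = info.compress_type
                new_info.external_attr = info.external_attr
                dst.writestr(new_info, data)
        os.replace(tmp_name, jar_path)  # swap the rewritten jar into place
    except BaseException:
        os.remove(tmp_name)  # never leave a half-written temporary jar behind
        raise
```

For example, after editing a local copy of application.properties: replace_jar_entries("service-0.6.0.jar", {"BOOT-INF/classes/application.properties": Path("application.properties").read_bytes()}). Keep a backup of the original jar before running it.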

1) Open service-0.6.0.jar with an archive tool such as WinRAR (note: open it in the tool, do not extract it)

2) Modify BOOT-INF/classes/application.properties

    # Apache Griffin application name
    spring.application.name=griffin_service
    # MySQL database configuration
    spring.datasource.url=jdbc:mysql://hadoop102:3306/quartz?autoReconnect=true&useSSL=false
    spring.datasource.username=root
    spring.datasource.password=123456
    spring.jpa.generate-ddl=true
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.jpa.show-sql=true
    # Hive metastore configuration
    hive.metastore.uris=thrift://hadoop102:9083
    hive.metastore.dbname=default
    hive.hmshandler.retry.attempts=15
    hive.hmshandler.retry.interval=2000ms
    # Hive cache time
    cache.evict.hive.fixedRate.in.milliseconds=900000
    # Kafka schema registry (configure as needed)
    kafka.schema.registry.url=http://hadoop102:8081
    # Update job instance state at regular intervals
    jobInstance.fixedDelay.in.milliseconds=60000
    # Expiry time of a job instance: 7 days, i.e. 604800000 milliseconds. Only milliseconds are supported.
    jobInstance.expired.milliseconds=604800000
    # Schedule the predicate job every 5 minutes and repeat it at most 12 times
    # Interval units: s (second), m (minute), h (hour), d (day); only these four are supported
    predicate.job.interval=5m
    predicate.job.repeat.count=12
    # External properties directory location
    external.config.location=
    # External BATCH or STREAMING env
    external.env.location=
    # Login strategy ("default" or "ldap")
    login.strategy=default
    # ldap
    ldap.url=ldap://hostname:port
    ldap.email=@example.com
    ldap.searchBase=DC=org,DC=example
    ldap.searchPattern=(sAMAccountName={0})
    # hdfs default name
    fs.defaultFS=
    # elasticsearch
    elasticsearch.host=hadoop102
    elasticsearch.port=9200
    elasticsearch.scheme=http
    # elasticsearch.user = user
    # elasticsearch.password = password
    # livy
    livy.uri=http://hadoop102:8998/batches
    # yarn url
    yarn.uri=http://hadoop103:8088
    # griffin event listener
    internal.event.listeners=GriffinJobEventHook
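
According to the comments in this file, predicate.job.interval accepts only the units s, m, h and d. With 5m and a repeat count of 12, a predicate job keeps checking for roughly an hour. The following is a minimal sketch for checking such values before they go back into the jar. It assumes a simple key=value layout with no line continuations or escapes. The helper names are hypothetical.

```python
import re

# Units accepted by predicate.job.interval, per the comment in application.properties.
_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def parse_interval(value):
    """Convert an interval such as '5m' to seconds; reject any other unit."""
    match = re.fullmatch(r"(\d+)([smhd])", value.strip())
    if match is None:
        raise ValueError(f"unsupported interval: {value!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def load_properties(text):
    """Minimal .properties reader: key=value lines; '#' and '!' comments skipped.
    Splits on the first '=' only, so values may themselves contain '='."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def predicate_window_seconds(props):
    """Approximate longest time a predicate job keeps checking: interval x repeat count."""
    interval = parse_interval(props["predicate.job.interval"])
    return interval * int(props["predicate.job.repeat.count"])
```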

3) Modify BOOT-INF/classes/sparkProperties.json

    {
      "file": "hdfs://hadoop102:9000/griffin/griffin-measure.jar",
      "className": "org.apache.griffin.measure.Application",
      "name": "griffin",
      "queue": "default",
      "numExecutors": 2,
      "executorCores": 1,
      "driverMemory": "1g",
      "executorMemory": "1g",
      "conf": {
        "spark.yarn.dist.files": "hdfs://hadoop102:9000/home/spark_conf/hive-site.xml"
      },
      "files": []
    }
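
Griffin's service submits measure jobs to Livy (livy.uri above points at the /batches endpoint). The keys in sparkProperties.json match the fields of Livy's batch request body. The following sketch assumes the field list from Livy's REST API documentation. It flags misspelled keys, a measure jar that is not on HDFS, and non-positive executor counts. The function name is hypothetical.

```python
import json

# Request-body fields accepted by Livy's POST /batches endpoint.
LIVY_BATCH_FIELDS = {
    "file", "proxyUser", "className", "args", "jars", "pyFiles", "files",
    "driverMemory", "driverCores", "executorMemory", "executorCores",
    "numExecutors", "archives", "queue", "name", "conf",
}


def check_spark_properties(text):
    """Return a list of problems found in a sparkProperties.json document."""
    props = json.loads(text)
    problems = [f"unknown Livy field: {key}"
                for key in sorted(set(props) - LIVY_BATCH_FIELDS)]
    # The measure jar must be readable by YARN containers, hence an HDFS path.
    if not props.get("file", "").startswith("hdfs://"):
        problems.append("'file' should point at the measure jar on HDFS")
    # Livy has defaults for these, so only validate them when present.
    for key in ("numExecutors", "executorCores"):
        if key in props and (not isinstance(props[key], int) or props[key] < 1):
            problems.append(f"{key} must be a positive integer")
    return problems
```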

4) Modify BOOT-INF/classes/hive-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
        <property>
            <name>javax.jdo.option.ConnectionURL</name>
            <value>jdbc:mysql://hadoop102:3306/metastore?createDatabaseIfNotExist=true</value>
            <description>JDBC connect string for a JDBC metastore</description>
        </property>
        <property>
            <name>javax.jdo.option.ConnectionDriverName</name>
            <value>com.mysql.jdbc.Driver</value>
            <description>Driver class name for a JDBC metastore</description>
        </property>
        <property>
            <name>javax.jdo.option.ConnectionUserName</name>
            <value>root</value>
            <description>username to use against metastore database</description>
        </property>
        <property>
            <name>javax.jdo.option.ConnectionPassword</name>
            <value>123456</value>
            <description>password to use against metastore database</description>
        </property>
        <property>
            <name>hive.metastore.warehouse.dir</name>
            <value>/user/hive/warehouse</value>
            <description>location of default database for the warehouse</description>
        </property>
        <property>
            <name>hive.cli.print.header</name>
            <value>true</value>
        </property>
        <property>
            <name>hive.cli.print.current.db</name>
            <value>true</value>
        </property>
        <property>
            <name>hive.metastore.schema.verification</name>
            <value>false</value>
        </property>
        <property>
            <name>datanucleus.schema.autoCreateAll</name>
            <value>true</value>
        </property>
        <!--
        <property>
            <name>hive.execution.engine</name>
            <value>tez</value>
        </property>
        -->
        <property>
            <name>hive.metastore.uris</name>
            <value>thrift://hadoop102:9083</value>
        </property>
    </configuration>
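
The hive.metastore.uris value here should match the one in application.properties. Otherwise the service and the Spark jobs talk to different metastores. The following is a minimal sketch using Python's built-in ElementTree. It reads a Hadoop-style configuration file into a dict and compares the two values. The helper names are hypothetical. Commented-out properties such as the tez entry are ignored by the parser.

```python
import xml.etree.ElementTree as ET


def hadoop_conf(xml_text):
    """Parse a Hadoop-style <configuration> file into a name -> value dict."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            conf[name.strip()] = (prop.findtext("value") or "").strip()
    return conf


def metastore_mismatch(hive_conf, service_props):
    """True if hive-site.xml and application.properties name different metastores."""
    return hive_conf.get("hive.metastore.uris") != service_props.get("hive.metastore.uris")
```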

5) Modify BOOT-INF/classes/application-mysql.properties

    #Data Access Properties
    spring.datasource.url=jdbc:mysql://192.168.1.102:3306/quartz?autoReconnect=true&useSSL=false
    spring.datasource.username=root
    spring.datasource.password=123456
    spring.jpa.generate-ddl=true
    spring.datasource.driver-class-name=com.mysql.jdbc.Driver
    spring.jpa.show-sql=true
    spring.jpa.hibernate.ddl-auto=update
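
This file addresses MySQL by IP (192.168.1.102), while application.properties uses the hostname hadoop102. Both should reach the same server and the same quartz database. The following sketch splits a MySQL JDBC URL into host, port, database and options so the two can be compared side by side. The function name is hypothetical. Resolving the hostname to an IP is left to the reader, for example with socket.gethostbyname.

```python
from urllib.parse import parse_qs, urlsplit


def parse_mysql_jdbc_url(url):
    """Split a jdbc:mysql:// URL into host, port, database and query options."""
    if not url.startswith("jdbc:mysql://"):
        raise ValueError(f"not a MySQL JDBC URL: {url!r}")
    # Dropping the "jdbc:" prefix leaves an ordinary URL that urlsplit understands.
    parts = urlsplit(url[len("jdbc:"):])
    options = {key: values[-1] for key, values in parse_qs(parts.query).items()}
    return {
        "host": parts.hostname,
        "port": parts.port or 3306,  # MySQL's default port
        "database": parts.path.lstrip("/"),
        "options": options,
    }
```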

6) Modify BOOT-INF/classes/env/env_batch.json

    {
      "spark": {
        "log.level": "INFO"
      },
      "sinks": [
        {
          "type": "CONSOLE",
          "config": {
            "max.log.lines": 10
          }
        },
        {
          "type": "HDFS",
          "config": {
            "path": "hdfs://hadoop102:9000/griffin/persist",
            "max.persist.lines": 10000,
            "max.lines.per.file": 10000
          }
        },
        {
          "type": "ELASTICSEARCH",
          "config": {
            "method": "post",
            "api": "http://hadoop102:9200/griffin/accuracy",
            "connection.timeout": "1m",
            "retry": 10
          }
        }
      ],
      "griffin.checkpoint": []
    }
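
The ELASTICSEARCH sink's api URL has to agree with elasticsearch.host and elasticsearch.port in application.properties (hadoop102:9200). Its path /griffin/accuracy reads as an Elasticsearch 5.x style /<index>/<type> endpoint. The following is a minimal sketch, with hypothetical helper names, that lists the configured sinks and splits the endpoint so these values can be checked.

```python
import json
from urllib.parse import urlsplit


def summarize_sinks(env_text):
    """Map each sink type in an env_*.json file to its config dict."""
    env = json.loads(env_text)
    return {sink["type"].upper(): sink.get("config", {}) for sink in env.get("sinks", [])}


def es_index_and_type(api_url):
    """Split an Elasticsearch 5.x style endpoint such as .../griffin/accuracy
    into (index, type)."""
    segments = urlsplit(api_url).path.strip("/").split("/")
    if len(segments) != 2:
        raise ValueError(f"expected /<index>/<type>, got {api_url!r}")
    return segments[0], segments[1]
```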

7) Modify BOOT-INF/classes/env/env_streaming.json

    {
      "spark": {
        "log.level": "WARN",
        "checkpoint.dir": "hdfs:///griffin/checkpoint/${JOB_NAME}",
        "init.clear": true,
        "batch.interval": "1m",
        "process.interval": "5m",
        "config": {
          "spark.default.parallelism": 4,
          "spark.task.maxFailures": 5,
          "spark.streaming.kafkaMaxRatePerPartition": 1000,
          "spark.streaming.concurrentJobs": 4,
          "spark.yarn.maxAppAttempts": 5,
          "spark.yarn.am.attemptFailuresValidityInterval": "1h",
          "spark.yarn.max.executor.failures": 120,
          "spark.yarn.executor.failuresValidityInterval": "1h",
          "spark.hadoop.fs.hdfs.impl.disable.cache": true
        }
      },
      "sinks": [
        {
          "type": "CONSOLE",
          "config": {
            "max.log.lines": 100
          }
        },
        {
          "type": "HDFS",
          "config": {
            "path": "hdfs://hadoop102:9000/griffin/persist",
            "max.persist.lines": 10000,
            "max.lines.per.file": 10000
          }
        },
        {
          "type": "ELASTICSEARCH",
          "config": {
            "method": "post",
            "api": "http://hadoop102:9200/griffin/accuracy"
          }
        }
      ],
      "griffin.checkpoint": [
        {
          "type": "zk",
          "config": {
            "hosts": "zk:2181",
            "namespace": "griffin/infocache",
            "lock.path": "lock",
            "mode": "persist",
            "init.clear": true,
            "close.clear": false
          }
        }
      ]
    }
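
As its name suggests, the ${JOB_NAME} placeholder in spark.checkpoint.dir is filled in with the job name, giving each streaming job its own checkpoint directory. The hosts value zk:2181 appears to be a placeholder hostname. If streaming mode is used, it has to point at a reachable ZooKeeper quorum. Python's string.Template uses the same ${...} syntax, so the following sketch (hypothetical helper names) can preview the resolved path and list the ZooKeeper hosts that will be contacted. It is not Griffin's own substitution code.

```python
import json
from string import Template


def resolve_checkpoint_dir(env_text, job_name):
    """Preview spark.checkpoint.dir with ${JOB_NAME} filled in."""
    env = json.loads(env_text)
    return Template(env["spark"]["checkpoint.dir"]).substitute(JOB_NAME=job_name)


def zk_hosts(env_text):
    """Return the host:port strings of every 'zk' checkpoint entry."""
    env = json.loads(env_text)
    hosts = []
    for checkpoint in env.get("griffin.checkpoint", []):
        if checkpoint.get("type") == "zk":
            # A quorum may be given as a comma-separated list.
            hosts.extend(h.strip() for h in checkpoint["config"]["hosts"].split(","))
    return hosts
```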

2.4 Upload and Run Griffin

2.4.1 Rename the jar and Upload It to HDFS

After the build command finishes, the target directories of the Service and Measure modules contain service-0.6.0.jar and measure-0.6.0.jar respectively.

1) Rename /opt/module/griffin-master/measure/target/measure-0.6.0-SNAPSHOT.jar

[atguigu@hadoop102 measure]$ mv measure-0.6.0-SNAPSHOT.jar griffin-measure.jar

2) Upload griffin-measure.jar to a directory on HDFS

[atguigu@hadoop102 measure]$ hadoop fs -mkdir /griffin/

[atguigu@hadoop102 measure]$ hadoop fs -put griffin-measure.jar /griffin/
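
Running `hadoop fs -ls /griffin/` is the simplest way to confirm the upload. To check from a script instead, the WebHDFS REST API can be used. The following sketch assumes WebHDFS is enabled and the NameNode web UI listens on the Hadoop 2.x default port 50070 (http://hadoop102:50070). Neither value comes from this guide. The helper names are hypothetical.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def webhdfs_url(namenode_http, path, op="GETFILESTATUS"):
    """Build a WebHDFS REST URL for an operation on an absolute HDFS path."""
    return f"{namenode_http.rstrip('/')}/webhdfs/v1{quote(path)}?op={op}"


def hdfs_file_length(namenode_http, path, timeout=10):
    """Return the size in bytes of an HDFS file (raises on HTTP errors)."""
    with urlopen(webhdfs_url(namenode_http, path), timeout=timeout) as resp:
        status = json.load(resp)["FileStatus"]
    if status["type"] != "FILE":
        raise ValueError(f"{path} is a {status['type']}, not a file")
    return status["length"]
```

Comparing hdfs_file_length("http://hadoop102:50070", "/griffin/griffin-measure.jar") with the local file size (os.path.getsize) is a quick way to catch a truncated upload.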

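Note: this step is needed because, when Spark runs the job on the YARN cluster, it loads griffin-measure.jar from the /griffin directory on HDFS. Without the jar there, the job fails because the class org.apache.griffin.measure.Application cannot be found.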

3) Upload hive-site.xml to /home/spark_conf/ on HDFS

[atguigu@hadoop102 ~]$ hadoop fs -mkdir -p /home/spark_conf/

[atguigu@hadoop102 ~]$ hadoop fs -put /opt/module/hive/conf/hive-site.xml /home/spark_conf/
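
The two uploads must land exactly where sparkProperties.json points: file refers to /griffin/griffin-measure.jar and spark.yarn.dist.files refers to /home/spark_conf/hive-site.xml. Both are on the NameNode at hadoop102:9000, which is assumed to match the cluster's fs.defaultFS. The following sketch (hypothetical helper name) collects every hdfs:// URI the file expects so it can be compared against the `hadoop fs -put` targets. spark.yarn.dist.files is treated as a comma-separated list, as Spark defines it.

```python
import json
from urllib.parse import urlsplit


def hdfs_paths_in_spark_properties(text):
    """Collect every hdfs:// URI that sparkProperties.json expects to exist,
    returned as (namenode authority, path) pairs."""
    props = json.loads(text)
    uris = [props.get("file", "")]
    uris += props.get("files", [])
    dist_files = props.get("conf", {}).get("spark.yarn.dist.files", "")
    uris += [u for u in dist_files.split(",") if u]
    result = []
    for uri in uris:
        parts = urlsplit(uri.strip())
        if parts.scheme == "hdfs":
            result.append((parts.netloc, parts.path))
    return result
```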

2.4.2 Run Griffin

1) Make sure the other services are running

① Start HDFS and YARN:

[atguigu@hadoop102 module]$ /opt/module/hadoop-2.7.2/sbin/start-dfs.sh

[atguigu@hadoop103 module]$ /opt/module/hadoop-2.7.2/sbin/start-yarn.sh

② Start Elasticsearch:

[atguigu@hadoop102 module]$ nohup /opt/module/elasticsearch-5.2.2/bin/elasticsearch &

③ Start the Hive services (metastore and HiveServer2):

[atguigu@hadoop102 hive]$ nohup /opt/module/hive/bin/hive --service metastore &

[atguigu@hadoop102 hive]$ nohup /opt/module/hive/bin/hive --service hiveserver2 &

④ Start Livy:

[atguigu@hadoop102 livy]$ /opt/module/livy/bin/livy-server start
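
Before starting the Griffin service, a quick reachability check can be useful. The following sketch tries a TCP connection to each service. Most ports come from the configuration above (9000, 8088, 9200, 9083, 8998). 10000 is HiveServer2's default and is an assumption. An open port only shows that a process is listening, not that it is healthy. The helper names are hypothetical.

```python
import socket

# Host/port pairs of the services started above; adjust to your cluster.
SERVICES = {
    "HDFS NameNode RPC": ("hadoop102", 9000),
    "YARN ResourceManager web UI": ("hadoop103", 8088),
    "Elasticsearch HTTP": ("hadoop102", 9200),
    "Hive metastore": ("hadoop102", 9083),
    "HiveServer2": ("hadoop102", 10000),
    "Livy": ("hadoop102", 8998),
}


def is_listening(host, port, timeout=3.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False


def check_services(services=SERVICES):
    """Return the names of services whose port is not reachable."""
    return [name for name, (host, port) in services.items()
            if not is_listening(host, port)]
```

Running print(check_services()) on hadoop102 should print an empty list once everything is up.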

2) Go to /opt/module/griffin-master/service/target/ and run service-0.6.0-SNAPSHOT.jar

Foreground start (log output is printed to the console):

[atguigu@hadoop102 target]$ java -jar /opt/module/griffin/service-0.6.0-SNAPSHOT.jar

Background start (runs in the background and redirects the log output to service.out):

[atguigu@hadoop102 ~]$ nohup java -jar service-0.6.0-SNAPSHOT.jar > service.out 2>&1 &

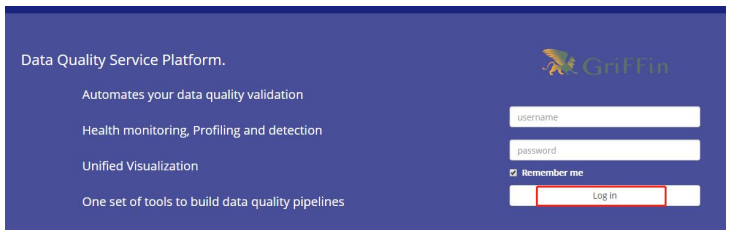
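
Because the background start hides the console, it can help to watch service.out for Spring Boot's startup message. Spring Boot normally logs a line of the form `Started <MainClass> in N seconds` once the application is up. On a startup error it prints an `APPLICATION FAILED TO START` banner. The following sketch polls the log for either message. The helper names and the main-class name in the test log line are illustrative assumptions.

```python
import re
import time

STARTED = re.compile(r"Started (\w+) in ([\d.]+) seconds")
FAILED = "APPLICATION FAILED TO START"


def startup_status(log_text):
    """Return ('started', app, secs), ('failed', None, None) or ('pending', None, None)."""
    if FAILED in log_text:
        return ("failed", None, None)
    match = STARTED.search(log_text)
    if match:
        return ("started", match.group(1), float(match.group(2)))
    return ("pending", None, None)


def wait_for_startup(log_path, timeout=180, poll=2.0):
    """Poll a log file such as service.out until Spring Boot reports success or
    failure, or until the timeout expires (then 'pending' is returned)."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with open(log_path, encoding="utf-8", errors="replace") as f:
                status = startup_status(f.read())
        except FileNotFoundError:  # nohup may not have created the file yet
            status = ("pending", None, None)
        if status[0] != "pending" or time.monotonic() >= deadline:
            return status
        time.sleep(poll)
```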

2.4.3 Access Griffin from a Browser

Open http://hadoop102:8080 in a browser. By default, both the username and the password are empty.