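Honestly, I've been writing quite a few crawlers lately, and scraping sites that have no anti-scraping measures, or that only check cookies, UA and referer, just isn't much fun anymore. A while ago I came across a column on Zhihu, an anti-anti-crawler series, and fell down the rabbit hole. So far I have reproduced the code for every article except the second one by following the author's reasoning, and I've learned a lot. Python crawling is a very active topic on Zhihu, by the way: experienced developers and experts regularly share their know-how and tutorials there. Reading, studying, discussing and reproducing their code on Zhihu is convenient and very rewarding!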

All right, enough chatter, on to today's topic: a crawler for the car specification and configuration pages on Autohome (汽车之家).

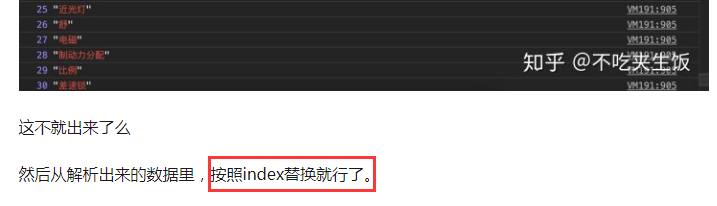

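To be honest, I was baffled when I first finished that article. What confused me was not parsing the JS to get the real data, but this one sentence:

"Just replace by index"? I carefully compared the data I got from the JS with the web page and could not find anything the two had in common. In the end I searched all over the web and finally found an article by another blogger. I ran the code, got the data, and was delighted, and I finally understood what "just replace by index" means: each empty `<span>` in the page data carries a class name, and the `::before` rule that the JS generates for that class holds the text that belongs in its place.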
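To make "replace by index" concrete, here is a minimal sketch on made-up data. The class names (`hs_kw0_configxx`, `hs_kw1_configxx`), the sample rules, the sample data and the helper name `restore_text` are all illustrative assumptions, not values captured from the real site. The only idea it tries to show is the lookup: the indexed class on each empty span selects a `::before` rule, and that rule's `content` is the hidden text.

```python
import re

# Hypothetical CSS rules, in the shape the executed JS is assumed to produce
# (rules joined by '#').
SAMPLE_RULES = (
    '.hs_kw0_configxx::before { content:"车型" }'
    '#.hs_kw1_configxx::before { content:"名称" }'
)

# Hypothetical fragment of the obfuscated page data.
SAMPLE_DATA = (
    "{\"name\": \"<span class='hs_kw0_configxx'></span>"
    "<span class='hs_kw1_configxx'></span>\"}"
)


def restore_text(data, rules):
    """Replace every empty <span class='X'></span> with the content of rule X."""
    # Build a class -> hidden text lookup from the ::before rules.
    lookup = dict(re.findall(r'\.(\w+)::before \{ content:"(.*?)" \}', rules))

    def substitute(match):
        # Leave the span untouched if no rule is known for its class.
        return lookup.get(match.group(1), match.group(0))

    return re.sub(r"<span class='(\w+)'></span>", substitute, data)


print(restore_text(SAMPLE_DATA, SAMPLE_RULES))
```

Building the lookup once and substituting in a single `re.sub` pass avoids searching the rule text again for every span, but it assumes the rules follow exactly the spacing shown in the sample.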

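Below is the code I wrote. Note that it exists only to get things running and fetch the data; it has not been organized into any proper structure.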

    import json
    import os
    import re

    import requests
    import xlwt
    from selenium import webdriver

    url = "https://car.autohome.com.cn/config/series/2357.html#pvareaid=3454437"
    headers = {
        "User-agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                      "Chrome/71.0.3578.98 Safari/537.36"
    }
    # Minimal fake DOM for running the page's JS: every rule passed to
    # insertRule is appended to `rules`, separated by '#'.
    DOM = ("var rules = '2';"
           "var document = {};"
           "function getRules(){return rules}"
           "document.createElement = function() {"
           "      return {"
           "              sheet: {"
           "                      insertRule: function(rule, i) {"
           "                              if (rules.length == 0) {"
           "                                      rules = rule;"
           "                              } else {"
           "                                      rules = rules + '#' + rule;"
           "                              }"
           "                      }"
           "              }"
           "      }"
           "};"
           "document.querySelectorAll = function() {"
           "      return {};"
           "};"
           "document.head = {};"
           "document.head.appendChild = function() {};"

           "var window = {};"
           "window.decodeURIComponent = decodeURIComponent;")

    response = requests.get(url=url, headers=headers, timeout=10)
    html = response.content.decode("utf-8")

    # Match the car data embedded in the page.
    car_info = ""
    config = re.search("var config = (.*?)};", html, re.S)  # car parameters
    option = re.search("var option = (.*?)};", html, re.S)  # active/passive safety equipment
    bag = re.search("var bag = (.*?)};", html, re.S)        # optional packages
    # The parentheses must be escaped so they match the literal JS text.
    js_list = re.findall(r"(\(function\([a-zA-Z]{2}.*?_\).*?\(document\);)", html)
    # Concatenate all the data for this series into car_info.
    if config and option and bag:
        car_info = car_info + config.group(0) + option.group(0) + bag.group(0)

    # Wrap the JS in a local HTML file and run it with selenium to get true_text.
    for item in js_list:
        DOM = DOM + item
    html_type = ("<html><meta http-equiv='Content-Type' content='text/html; charset=utf-8' />"
                 "<head></head><body> <script type='text/javascript'>")
    js = html_type + DOM + " document.write(rules)</script></body></html>"  # JS to execute
    work_dir = r"D:\test11"  # raw string, otherwise "\t" is read as a tab
    os.makedirs(work_dir, exist_ok=True)
    with open(os.path.join(work_dir, "asd.html"), "w", encoding="utf-8") as f:
        f.write(js)
    # Selenium 3 API, as in the original script. In Selenium 4 use
    # webdriver.Chrome(service=Service(...)) and find_element(By.TAG_NAME, "body").
    # Adjust the chromedriver path to your own machine.
    browser = webdriver.Chrome(executable_path=r"D:\chromedrive\chromedriver.exe")
    browser.get("file:///D:/test11/asd.html")
    true_text = browser.find_element_by_tag_name('body').text
    browser.quit()

    span_list = re.findall("<span(.*?)></span>", car_info)  # all span tags in the car data

    # Replace each span with the text found under its class name in true_text.
    for span in span_list:
        info = re.search("'(.*?)'", span)
        if info:
            class_info = str(info.group(1)) + "::before { content:(.*?)}"
            content = re.search(class_info, true_text).group(1)  # matched rule content
            car_info = car_info.replace("<span class='" + info.group(1) + "'></span>",
                                        re.search('"(.*?)"', content).group(1))

    # Persist the data.
    car_item = {}
    config = re.search("var config = (.*?);", car_info).group(1)
    option = re.search("var option = (.*?);var", car_info).group(1)
    bag = re.search("var bag = (.*?);", car_info).group(1)

    config_re = json.loads(config)
    option_re = json.loads(option)
    bag_re = json.loads(bag)

    config_item = config_re['result']['paramtypeitems'][0]['paramitems']
    option_item = option_re['result']['configtypeitems'][0]['configitems']
    bag_item = bag_re['result']['bagtypeitems'][0]['bagitems']

    for car in config_item:
        car_item[car['name']] = []
        for value in car['valueitems']:
            car_item[car['name']].append(value['value'])

    for car in option_item:
        car_item[car['name']] = []
        for value in car['valueitems']:
            car_item[car['name']].append(value['value'])

    for car in bag_item[0]['valueitems']:
        car_item[car['name']] = []
        car_item[car['name']].append(car['bagid'])
        car_item[car['name']].append(car['pricedesc'])
        car_item[car['name']].append(car['description'])

    # Build the spreadsheet.
    workbook = xlwt.Workbook(encoding='utf-8')   # create a workbook
    worksheet = workbook.add_sheet('汽车之家')    # create a sheet
    cols = 0
    start_row = 0

    for co in car_item:
        cols = cols + 1
        worksheet.write(start_row, cols, co)  # row 0 holds the configuration names

    end_row_num = start_row + len(car_item['车型名称'])  # number of models ('车型名称' = model name)
    for row in range(start_row, end_row_num):
        col_num = 0  # column index
        row += 1
        for col in car_item:
            col_num = col_num + 1
            worksheet.write(row, col_num, str(car_item[col][row - 1]))

    workbook.save(r'd:\test.xls')  # raw string, otherwise "\t" is read as a tab

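OK, to stress it once more: this code exists only to get things running and fetch the data; it has not been organized into any proper structure.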
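As one possible first step toward that missing structure, the three nearly identical `var config` / `var option` / `var bag` searches could be folded into a single helper. This is only a sketch: the function name `extract_js_object` and the sample HTML are hypothetical, and it assumes each variable is assigned one object literal that ends at the first `};` and is valid JSON.

```python
import json
import re


def extract_js_object(html, name):
    """Return the object literal assigned to `var <name>` as parsed JSON, or None."""
    # Non-greedy match up to the first "};" after the assignment.
    match = re.search(r"var %s = (\{.*?\});" % re.escape(name), html, re.S)
    return json.loads(match.group(1)) if match else None


# Made-up page fragment for illustration only.
sample_html = (
    '<script>var config = {"result": {"paramtypeitems": []}};'
    'var option = {"result": {"configtypeitems": []}};</script>'
)

for var_name in ("config", "option", "bag"):
    print(var_name, extract_js_object(sample_html, var_name))
```

Returning `None` for a missing variable lets the caller decide whether a page without, say, `bag` is an error or simply a series with no optional packages.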

Finally, thanks to this blogger: https://www.cnblogs.com/kangz/p/10011348.html

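Following the Zhihu column over this period has made my own shortcomings very clear. The lesson is to analyze and think things through calmly: if a site has encrypted its API, there is surely a way to decrypt it!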