The problem statement is too long to reproduce here! Download link: [link]

Problem 1

Summary: recognize the handwritten digits shown in images, using one-vs-all logistic regression.

Step 1: Load the data file:

%% Setup the parameters you will use for this part of the exercise
input_layer_size = 400;  % 20x20 Input Images of Digits
num_labels = 10;         % 10 labels, from 1 to 10
                         % (note that we have mapped "0" to label 10)

% Load Training Data
fprintf('Loading and Visualizing Data ...\n')
load('ex3data1.mat'); % training data stored in arrays X, y
m = size(X, 1);

% Randomly select 100 data points to display
rand_indices = randperm(m);
sel = X(rand_indices(1:100), :);
displayData(sel);

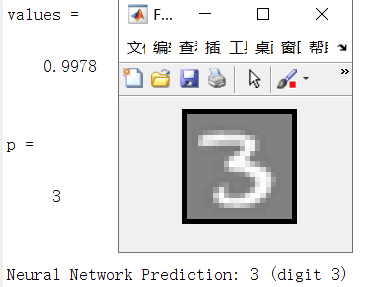

Step 2: Implement the displayData function:

function [h, display_array] = displayData(X, example_width)
% Set example_width automatically if not passed in
if ~exist('example_width', 'var') || isempty(example_width)
  example_width = round(sqrt(size(X, 2)));
end

% Gray Image
colormap(gray);

% Compute rows, cols
[m n] = size(X);
example_height = (n / example_width);

% Compute number of items to display
display_rows = floor(sqrt(m));
display_cols = ceil(m / display_rows);

% Between images padding
pad = 1;

% Setup blank display
display_array = - ones(pad + display_rows * (example_height + pad), ...
                       pad + display_cols * (example_width + pad));

% Copy each example into a patch on the display array
curr_ex = 1;
for j = 1:display_rows
  for i = 1:display_cols
    if curr_ex > m,
      break;
    end
    % Copy the patch

    % Get the max value of the patch
    max_val = max(abs(X(curr_ex, :)));
    display_array(pad + (j - 1) * (example_height + pad) + (1:example_height), ...
                  pad + (i - 1) * (example_width + pad) + (1:example_width)) = ...
        reshape(X(curr_ex, :), example_height, example_width) / max_val;
    curr_ex = curr_ex + 1;
  end
  if curr_ex > m,
    break;
  end
end

% Display Image
h = imagesc(display_array, [-1 1]);

% Do not show axis
axis image off

drawnow;

end
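The size of the canvas that displayData draws on follows from a few lines of arithmetic. The sketch below reproduces only those lines for the 100 examples of 400 pixels selected in Step 1; it is a standalone illustration whose variable names mirror displayData, and the canvas_rows/canvas_cols names are introduced here for clarity.

```octave
% Layout arithmetic from displayData for 100 examples of 20x20 pixels
m = 100;                                  % number of examples to tile
n = 400;                                  % pixels per example
example_width  = round(sqrt(n));          % 20
example_height = n / example_width;       % 20
display_rows = floor(sqrt(m));            % 10 rows of tiles
display_cols = ceil(m / display_rows);    % 10 columns of tiles
pad = 1;                                  % 1-pixel border between tiles

% Each tile takes (size + pad) pixels, plus one leading border
canvas_rows = pad + display_rows * (example_height + pad);
canvas_cols = pad + display_cols * (example_width + pad);
fprintf('canvas: %d x %d\n', canvas_rows, canvas_cols);   % canvas: 211 x 211
```

The border pixels keep the value -1 from `-ones(...)`, which `imagesc(display_array, [-1 1])` renders as black, so each digit appears in its own framed tile.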

Output:

Step 3: Compute θ:

lambda = 0.1; [all_theta] = oneVsAll(X, y, num_labels, lambda);

where the oneVsAll function is:

function [all_theta] = oneVsAll(X, y, num_labels, lambda)
% Some useful variables
m = size(X, 1);
n = size(X, 2);

% You need to return the following variables correctly
all_theta = zeros(num_labels, n + 1);

% Add ones to the X data matrix
X = [ones(m, 1) X];

for c = 1:num_labels
  initial_theta = zeros(n + 1, 1);
  options = optimset('GradObj', 'on', 'MaxIter', 50);
  % Train a binary classifier: label 1 for class c, 0 for every other class.
  % fmincg is the minimizer shipped with the exercise files.
  [theta] = ...
      fmincg(@(t)(lrCostFunction(t, X, (y == c), lambda)), initial_theta, options);
  all_theta(c, :) = theta;   % row c holds the parameters of classifier c
end

end
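Inside the loop, `(y == c)` turns the multiclass labels into the 0/1 labels of the c-th binary problem. The sketch below builds all ten label vectors at once for a few hypothetical labels, only to show the mapping; oneVsAll itself passes one such vector at a time to fmincg.

```octave
% How (y == c) relabels a multiclass problem into binary ones
y = [10; 3; 1; 3; 10];          % hypothetical labels (10 stands for digit 0)
num_labels = 10;
Y = zeros(numel(y), num_labels);
for c = 1:num_labels
  Y(:, c) = (y == c);           % 1 where the example belongs to class c, else 0
end
disp(Y(:, 3)');                 % values: 0 1 0 1 0
disp(Y(:, 10)');                % values: 1 0 0 0 1
```

Every row of `Y` contains exactly one 1, so each example is a positive example for exactly one of the ten classifiers.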

Step 4: Implement the lrCostFunction function (regularized logistic-regression cost and gradient):
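For reference, these are the quantities lrCostFunction computes. Here $h_\theta(x) = g(\theta^\top x)$ is the sigmoid hypothesis, $m$ is the number of examples, $n$ is the number of features, and the bias parameter $\theta_0$ (`theta(1)` in Octave's 1-based indexing) is not regularized:

```latex
\begin{aligned}
J(\theta) &= \frac{1}{m}\sum_{i=1}^{m}\Big[-y^{(i)}\log h_\theta(x^{(i)}) - \big(1-y^{(i)}\big)\log\big(1-h_\theta(x^{(i)})\big)\Big] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2 \\
\frac{\partial J}{\partial \theta_0} &= \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_0^{(i)} \\
\frac{\partial J}{\partial \theta_j} &= \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)} + \frac{\lambda}{m}\theta_j, \qquad j = 1,\dots,n \\
\nabla_\theta J &= \frac{1}{m}X^\top(h - y) + \frac{\lambda}{m}\,[0,\ \theta_1,\ \dots,\ \theta_n]^\top
\end{aligned}
```

The last line is the vectorized form, and it is why the code sets `theta(1, 1) = 0` before adding the regularization term to the gradient.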

function [J, grad] = lrCostFunction(theta, X, y, lambda)
% Initialize some useful values
m = length(y); % number of training examples

% You need to return the following variables correctly
J = 0;
grad = zeros(size(theta));

theta2 = theta(2:end, 1);         % parameters excluding the bias term
h = sigmoid(X * theta);           % hypothesis for every example
J = 1/m * (-y' * log(h) - (1 - y') * log(1 - h)) + lambda/(2*m) * sum(theta2.^2);

theta(1, 1) = 0;                  % the bias term is not regularized
grad = 1/m * (X' * (h - y)) + lambda/m * theta;
grad = grad(:);
end
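A common way to confirm that a vectorized gradient matches its cost function is a finite-difference check. The sketch below is not part of the exercise code: it re-expresses the cost and gradient of lrCostFunction as anonymous functions on a small hypothetical dataset (`X`, `y`, `theta`, `lambda` are made up), so it runs as a standalone script.

```octave
% Finite-difference check of the regularized logistic-regression gradient.
% The toy data is hypothetical; the formulas follow lrCostFunction.
sigmoid = @(z) 1 ./ (1 + exp(-z));
m = 5;
X = [ones(m, 1), sin(1:m)', cos(1:m)'];   % 5x3 design matrix with a bias column
y = [1; 0; 1; 0; 1];
theta = [0.1; -0.2; 0.3];
lambda = 3;

% Regularized cost (bias term theta(1) excluded from the penalty)
cost = @(t) (1/m) * (-y' * log(sigmoid(X * t)) - (1 - y)' * log(1 - sigmoid(X * t))) ...
            + lambda / (2*m) * sum(t(2:end).^2);

% Analytical gradient, same formula as lrCostFunction
grad = (1/m) * X' * (sigmoid(X * theta) - y) + (lambda/m) * [0; theta(2:end)];

% Central differences: (J(theta + delta) - J(theta - delta)) / (2 * epsilon)
epsilon = 1e-4;
numgrad = zeros(size(theta));
for k = 1:numel(theta)
  delta = zeros(size(theta));
  delta(k) = epsilon;
  numgrad(k) = (cost(theta + delta) - cost(theta - delta)) / (2 * epsilon);
end

% A correct gradient gives a relative difference far below 1e-7
rel_diff = norm(numgrad - grad) / norm(numgrad + grad);
fprintf('relative difference: %g\n', rel_diff);
```

If the bias term were accidentally regularized in `grad` but not in `cost` (or vice versa), the relative difference would jump by several orders of magnitude, which makes this check a quick way to catch that mistake.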

Step 5: Implement the sigmoid function:

function g = sigmoid(z)
g = 1.0 ./ (1.0 + exp(-z));
end
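Because sigmoid uses the element-wise operators `./` and `exp`, it accepts scalars, vectors and matrices alike. A quick sanity check, written with an anonymous function so it runs as a standalone script:

```octave
% Same formula as sigmoid.m, as an anonymous function for a standalone check
sigmoid = @(z) 1.0 ./ (1.0 + exp(-z));
g = sigmoid([-10 0 10; -1 1 2]);   % applied element-wise to a 2x3 matrix
disp(size(g));                     % values: 2 3
fprintf('%.4f\n', sigmoid(0));     % 0.5000
```

Every output lies strictly between 0 and 1, and the function is symmetric: `sigmoid(-z)` equals `1 - sigmoid(z)`.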

Step 6: Compute the prediction accuracy:

pred = predictOneVsAll(all_theta, X);
fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);
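The accuracy expression compares each prediction with its label element-wise, converts the logical result to 0/1, and averages it. With made-up predictions for five examples (hypothetical values):

```octave
% Accuracy = percentage of predictions equal to the true label
pred = [1; 10; 3; 3; 7];              % hypothetical predictions
y    = [1; 10; 2; 3; 7];              % hypothetical labels (one mismatch)
acc = mean(double(pred == y)) * 100;  % 4 of 5 correct
fprintf('Training Set Accuracy: %f\n', acc);   % Training Set Accuracy: 80.000000
```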

where the predictOneVsAll function is:

function p = predictOneVsAll(all_theta, X)
m = size(X, 1);
num_labels = size(all_theta, 1);

% You need to return the following variables correctly
p = zeros(size(X, 1), 1);

% Add ones to the X data matrix
X = [ones(m, 1) X];

% Column c holds each example's probability of belonging to class c
g = zeros(size(X, 1), num_labels);
for c = 1:num_labels
  theta = all_theta(c, :);
  g(:, c) = sigmoid(X * theta');
end

% Predict the class whose classifier is most confident
[value, p] = max(g, [], 2);
end
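The last line of predictOneVsAll relies on the two-output form of `max`: with an empty second argument and dimension 2, it returns each row's maximum and the column where that maximum occurs, and that column index is the predicted label. A toy matrix of hypothetical probabilities:

```octave
% max(g, [], 2): row-wise maximum and the column index where it occurs
g = [0.10 0.70 0.20;    % example 1: class 2 is most likely
     0.05 0.15 0.90];   % example 2: class 3 is most likely
[value, p] = max(g, [], 2);
disp(p');               % values: 2 3
```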

Output:


Problem 2

Overview: recognize digits with a neural network (the weights Θ are provided).

Step 1: Load the data:

%% Setup the parameters you will use for this exercise
input_layer_size = 400;   % 20x20 Input Images of Digits
hidden_layer_size = 25;   % 25 hidden units
num_labels = 10;          % 10 labels, from 1 to 10
                          % (note that we have mapped "0" to label 10)

% Load Training Data
fprintf('Loading and Visualizing Data ...\n')
load('ex3data1.mat');
m = size(X, 1);

% Randomly select 100 data points to display
sel = randperm(size(X, 1));
sel = sel(1:100);
displayData(X(sel, :));

% Load the weights into variables Theta1 and Theta2
load('ex3weights.mat');

Step 2: Implement the neural network (forward propagation):

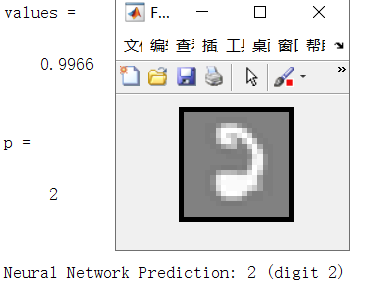

pred = predict(Theta1, Theta2, X);
fprintf('\nTraining Set Accuracy: %f\n', mean(double(pred == y)) * 100);

where the predict function is:

function p = predict(Theta1, Theta2, X)
% Useful values
m = size(X, 1);
num_labels = size(Theta2, 1);

% You need to return the following variables correctly
p = zeros(size(X, 1), 1);

% Input layer: add the bias unit
X = [ones(m, 1) X];
% Hidden layer
z2 = X * Theta1';
a2 = sigmoid(z2);
a2 = [ones(size(a2, 1), 1) a2];   % add the bias unit
% Output layer
z3 = a2 * Theta2';
a3 = sigmoid(z3);
% Predicted label = index of the most active output unit
[values, p] = max(a3, [], 2);
end
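To see how the matrix sizes line up in predict, here is a shape-only sketch of the same forward pass with random placeholder weights, so it runs without ex3weights.mat. The sizes 25x401 and 10x26 follow from input_layer_size = 400, hidden_layer_size = 25 and num_labels = 10, plus one bias column per layer; because the inputs and weights are random, the predicted labels are meaningless.

```octave
% Forward-propagation shapes with placeholder random weights (not ex3weights.mat)
sigmoid = @(z) 1 ./ (1 + exp(-z));
m = 7;                                    % hypothetical number of examples
X = rand(m, 400);                         % 400 = 20x20 pixels per example
Theta1 = rand(25, 401) - 0.5;             % 25 hidden units x (400 inputs + bias)
Theta2 = rand(10, 26) - 0.5;              % 10 output units x (25 hidden + bias)

a1 = [ones(m, 1) X];                      % m x 401
a2 = [ones(m, 1) sigmoid(a1 * Theta1')];  % m x 26
a3 = sigmoid(a2 * Theta2');               % m x 10, one column per label
[~, p] = max(a3, [], 2);                  % m x 1, labels in 1..10
fprintf('a3 is %d x %d\n', size(a3, 1), size(a3, 2));   % a3 is 7 x 10
```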

Output:


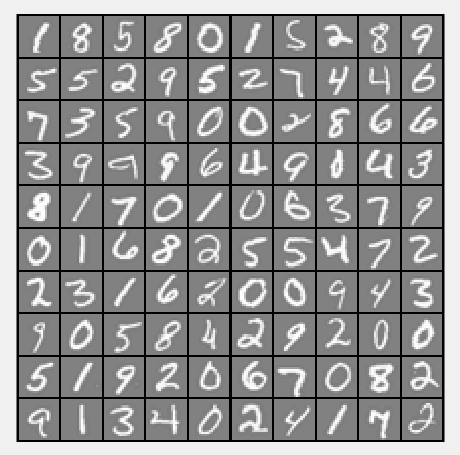

Step 3: Recognize individual digits one at a time:

rp = randperm(m);

for i = 1:m
  % Display
  fprintf('\nDisplaying Example Image\n');
  displayData(X(rp(i), :));

  pred = predict(Theta1, Theta2, X(rp(i), :));
  fprintf('\nNeural Network Prediction: %d (digit %d)\n', pred, mod(pred, 10));

  % Pause with quit option
  s = input('Paused - press enter to continue, q to exit:', 's');
  if s == 'q'
    break
  end
end
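The digit in the printed message comes from `mod(pred, 10)`, which maps label 10 back to digit 0 and leaves labels 1 to 9 unchanged:

```octave
% Label 10 encodes digit 0; mod(., 10) recovers the actual digit
labels = [10 1 5 9];
digits = mod(labels, 10);
disp(digits);   % values: 0 1 5 9
```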

Output: