0x01 基于chrome+selenium爬取京东商城8G内存条

from selenium import webdriver

from selenium.webdriver import ActionChains #获取属性

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.common.by import By

import pymongo

import csv

import time

from pyecharts.charts import Bar

from pyecharts import options as opts

import pandas as pd

#连接mongdb数据库

client=pymongo.MongoClient(host='localhost',port=27017)

db=client.JD

datalist=[]

def spider_data():

browser=webdriver.Chrome()

url='https://www.jd.com/'

browser.get(url)

browser.find_element_by_id('key').send_keys('8G内存条')

browser.find_element_by_id('key').send_keys(Keys.ENTER)

#显示等待下一页元素加载完成

#WebDriverWait(browser,1000).until(EC.presence_of_element_located((By.CLASS_NAME,'pn-next')))

count=0

while True:

try:

count+=1

# 显示等待,直到所有商品信息加载完成

WebDriverWait(browser,1000).until(EC.presence_of_element_located((By.CLASS_NAME,'gl-item')))

#滚动条下拉到最下面

browser.execute_script('document.documentElement.scrollTop=10000')

time.sleep(3)

browser.execute_script('document.documentElement.scrollTop=0')

lists = browser.find_elements_by_class_name('gl-item')

for li in lists:

name=li.find_element_by_xpath('.//div[@class="p-name p-name-type-2"]//em').text

price=li.find_element_by_xpath('.//div[@class="p-price"]//i').text

commit=li.find_element_by_xpath('.//div[@class="p-commit"]//a').text

shop_name=li.find_element_by_xpath('.//div[@class="p-shop"]//a').text

datas={}

datas['name']=name

datas['price']=price

datas['commit']=commit

datas['shop_name']=shop_name

datalist.append(datas)

#连接mongodb数据库

collection=db.datas

collection.insert(datas)

print(datas)

except :

print('ERROR')

if count==1:

break

#爬取下一页

next=browser.find_element_by_css_selector('a.pn-next')

next.click()

print("数据爬取完成")

#写入数据

def write_data():

with open('E:/data_csv.csv','w',encoding='utf-8',newline='') as f:

try:

title=datalist[0].keys()

writer=csv.DictWriter(f,title)

writer.writeheader()

writer.writerows(datalist)

except:

print('Error')

print('文件写入完成')

# 数据清洗

def clear_data():

data = pd.read_csv('E:data_csv.csv')

# 删除_id列

data.drop('_id', axis=1, inplace=True)

# 删除'去看二手'行

data.drop(data[data['commit'].str.contains('去看二手')].index, inplace=True)

def convert_data(var):

# 将+,万去除

new_value = var.replace('+', '').replace('万', '')

return float(new_value)

# 写入cvs文件中

data['commit'] = data['commit'].apply(convert_data)

# 清除commit数大于100的行

data.drop(data[data['commit'] >= 100].index, inplace=True)

# 保存为csv文件

data.to_csv('E:clear_data.csv')

def group_data():

# 数据清洗

data1 = pd.read_csv('E:clear_data.csv')

# 删除其它品牌,保留金士顿

data1.drop(data1[data1['name'].str.contains('十铨|宇瞻|光威|美商海盗船|威刚|芝奇|三星|金百达|英睿达|联想|佰微')].index, inplace=True)

# 保存为csv文件

data1.to_csv('E:Kingston.csv')

data2 = pd.read_csv('E:clear_data.csv')

# 筛选出威刚

data2.drop(data2[data2['name'].str.contains('金士顿|十铨|宇瞻|光威|美商海盗船|芝奇|三星|金百达|英睿达|联想|佰微')].index, inplace=True)

# 保存为csv文件

data2.to_csv('E:weigang.csv')

print('数据清洗完成')

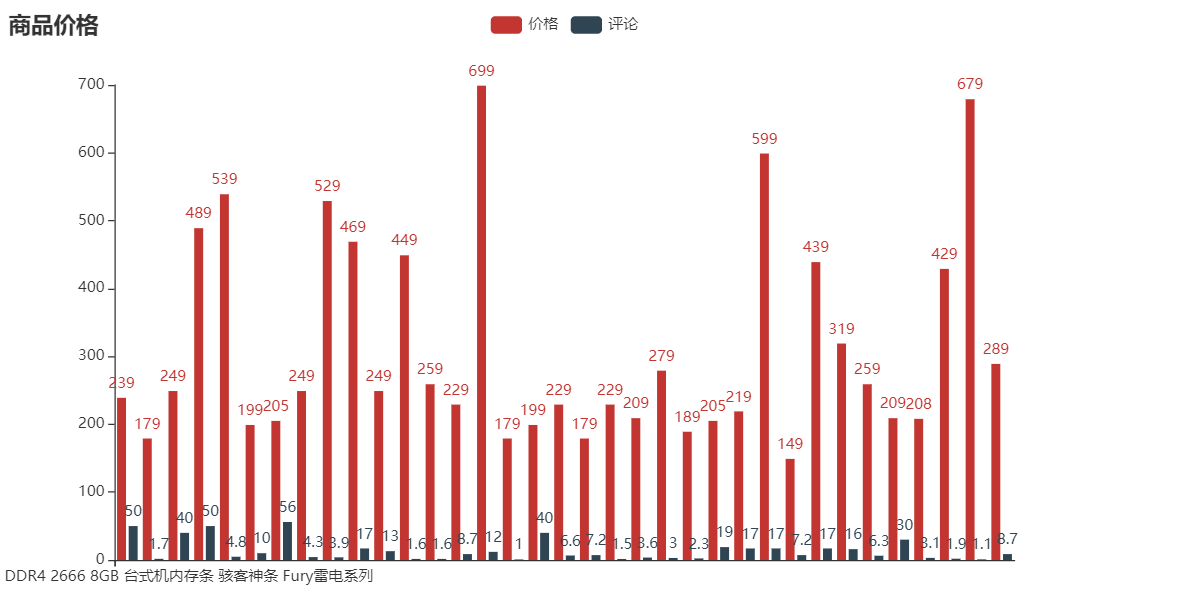

#数据可视化

def show_data():

data_path=pd.read_csv('E:clear_data.csv')

bar=Bar()

bar.add_xaxis(data_path['name'].tolist())

bar.add_yaxis('价格',data_path['price'].tolist())

bar.add_yaxis('评论',data_path['commit'].tolist())

bar.set_global_opts(title_opts=opts.TitleOpts(title="商品价格"))

bar.render('all_data.html')

data_path1 = pd.read_csv('E:Kingston.csv')

bar1 = Bar()

bar1.add_xaxis(data_path1['name'].tolist())

bar1.add_yaxis('价格', data_path1['price'].tolist())

bar1.add_yaxis('评论', data_path1['commit'].tolist())

bar1.set_global_opts(title_opts=opts.TitleOpts(title="商品价格"))

bar1.render('Kingston.html')

data_path2 = pd.read_csv('E:weigang.csv')

bar2 = Bar()

bar2.add_xaxis(data_path2['name'].tolist())

bar2.add_yaxis('价格', data_path2['price'].tolist())

bar2.add_yaxis('评论', data_path2['commit'].tolist())

bar2.set_global_opts(title_opts=opts.TitleOpts(title="商品价格"))

bar2.render('weigang.html')

if __name__=='__main__':

spider_data()

write_data()

clear_data()

group_data()

show_data()

0x02 结果展示

参考链接:

https://blog.csdn.net/weixin_44024393/article/details/89289694