9. Deploy kube-controller-manager

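kube-controller-manager is one of the three Kubernetes master services, alongside kube-apiserver and kube-scheduler. It is a stateful service: it modifies the cluster's state.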

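If the service were active on several master nodes at the same time, the instances would run into synchronization and consistency problems. On a multi-master cluster, the kube-controller-manager instances must therefore run as active/standby. Kubernetes elects the leader with a lease lock. For kube-controller-manager, leader election is enabled with the startup flag "--leader-elect=true".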
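The core rule of the lease lock can be illustrated with a simplified bash sketch. A standby instance may try to take over only after the current holder has failed to renew the lease within the lease duration. The function name `lease_expired` is hypothetical. kube-controller-manager's related flags default to `--leader-elect-lease-duration=15s`, `--leader-elect-renew-deadline=10s` and `--leader-elect-retry-period=2s`. The real client-go implementation measures expiry against the local time at which it last observed the lock record change, which tolerates clock skew. This sketch instead uses the holder's timestamp directly.

```bash
#!/usr/bin/env bash
# Simplified model of the lease-lock takeover rule (hypothetical helper,
# not the client-go code).
# usage: lease_expired <last_renew_epoch_s> <lease_duration_s> [now_epoch_s]
# Returns 0 (true) when the holder has not renewed within the lease duration,
# i.e. a standby candidate would be allowed to try to acquire the lock.
lease_expired() {
  local renew=$1 duration=$2
  local now=${3:-$(date +%s)}
  (( now > renew + duration ))
}

# Example: lease last renewed at t=100 with the default 15 s lease duration.
lease_expired 100 15 110 && echo "t=110: expired" || echo "t=110: still held"
lease_expired 100 15 116 && echo "t=116: expired" || echo "t=116: still held"
```

As long as the active instance keeps renewing within the renew deadline, the lease never expires and the standby instances keep waiting.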

1. Create the kube-controller-manager certificate

1) Create the kube-controller-manager certificate signing request

# kube-controller-manager and kube-apiserver communicate over mutual (two-way) TLS;
# kube-apiserver takes the certificate's CN as the client's user name, i.e. system:kube-controller-manager.
# kube-apiserver's predefined RBAC ClusterRoleBinding system:kube-controller-manager binds the user system:kube-controller-manager to the ClusterRole system:kube-controller-manager
[root@kubenode1 ~]# mkdir -p /etc/kubernetes/controller-manager
[root@kubenode1 ~]# cd /etc/kubernetes/controller-manager/
[root@kubenode1 controller-manager]# touch controller-manager-csr.json
[root@kubenode1 controller-manager]# vim controller-manager-csr.json
{
    "CN": "system:kube-controller-manager",
    "hosts": [
        "172.30.200.21",
        "172.30.200.22",
        "172.30.200.23"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "ChengDu",
            "L": "ChengDu",
            "O": "system:kube-controller-manager",
            "OU": "cloudteam"
        }
    ]
}

2) Generate the kube-controller-manager certificate and private key

[root@kubenode1 controller-manager]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes controller-manager-csr.json | cfssljson -bare controller-manager
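kube-apiserver uses the certificate's CN as the user name. It is therefore worth confirming that the generated certificate carries the expected subject before distributing it. Below is a minimal sketch that assumes openssl is installed. The helper name `subject_cn` is hypothetical. Its sed expression assumes openssl's usual one-line `-subject` output, in either the older `/CN=...` style or the newer `CN = ...` style.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the CN from an "openssl x509 -noout -subject"
# line read on stdin. Handles both "subject= /O=.../CN=name" (OpenSSL 1.0)
# and "subject=O = ..., CN = name" (OpenSSL 1.1+) output styles.
subject_cn() {
  sed -n 's/.*CN *= *\([^,/]*\).*/\1/p'
}

# usage, in /etc/kubernetes/controller-manager:
#   openssl x509 -in controller-manager.pem -noout -subject | subject_cn
# The printed CN should be system:kube-controller-manager.
```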

# Distribute controller-manager.pem and controller-manager-key.pem to the other master nodes (create /etc/kubernetes/controller-manager on them first)
[root@kubenode1 controller-manager]# scp controller-manager*.pem root@172.30.200.22:/etc/kubernetes/controller-manager/
[root@kubenode1 controller-manager]# scp controller-manager*.pem root@172.30.200.23:/etc/kubernetes/controller-manager/
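The per-host scp commands can be wrapped in a small loop, so that adding a master means editing one list. This is a sketch only. `distribute`, `MASTERS` and the `DRY_RUN` switch are hypothetical names, and the host list is the two other masters from this article.

```bash
#!/usr/bin/env bash
# Hypothetical list of the other master nodes (from this article).
MASTERS=(172.30.200.22 172.30.200.23)

# Hypothetical helper: copy files to the same directory on every other master.
# usage: distribute <remote_dir> <file>...
# Set DRY_RUN=1 to print the scp commands instead of running them.
distribute() {
  local dest=$1
  shift
  local host
  for host in "${MASTERS[@]}"; do
    if [[ -n ${DRY_RUN:-} ]]; then
      echo scp "$@" "root@${host}:${dest}"
    else
      scp "$@" "root@${host}:${dest}"
    fi
  done
}

# usage, in /etc/kubernetes/controller-manager:
#   distribute /etc/kubernetes/controller-manager/ controller-manager*.pem
```

The same helper also covers copying controller-manager.conf to the other masters: `distribute /etc/kubernetes/controller-manager/ controller-manager.conf`.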

2. Create the kube-controller-manager kubeconfig file

The kube-controller-manager kubeconfig file contains the master address and the credentials needed to authenticate to it.

# Set the cluster parameters:
# --server: the kube-apiserver address; use the VIP of the HA setup;
# the cluster name is arbitrary, but must be used consistently once chosen;
# --kubeconfig: path and file name of the kubeconfig file; if omitted, the settings are written to ~/.kube/config
[root@kubenode1 controller-manager]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://172.30.200.10:6443 --kubeconfig=controller-manager.conf
# Set the client credentials:
# the user is "system:kube-controller-manager", the CN from the signing request above;
# point to the matching certificate and private key
[root@kubenode1 controller-manager]# kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/controller-manager/controller-manager.pem --embed-certs=true --client-key=/etc/kubernetes/controller-manager/controller-manager-key.pem --kubeconfig=controller-manager.conf
# Set the context
[root@kubenode1 controller-manager]# kubectl config set-context system:kube-controller-manager@kubernetes --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=controller-manager.conf
# Make it the default context
[root@kubenode1 controller-manager]# kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=controller-manager.conf
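Before copying the kubeconfig to the other masters, a quick check can confirm that the certificates were embedded and that the server and context are the intended ones. This is a sketch. `check_kubeconfig` is a hypothetical helper, and the expected server (the VIP 172.30.200.10:6443) and context name are this article's values. It only looks for the field names kubectl writes when `--embed-certs=true` is used.

```bash
#!/usr/bin/env bash
# Hypothetical sanity check for the kubeconfig generated above.
# Looks for the *-data fields written with --embed-certs=true, plus the
# server address and current context used in this article.
check_kubeconfig() {
  local f=${1:-controller-manager.conf}
  local rc=0 pattern
  for pattern in \
    'certificate-authority-data:' \
    'client-certificate-data:' \
    'client-key-data:' \
    'server: https://172.30.200.10:6443' \
    'current-context: system:kube-controller-manager@kubernetes'; do
    if ! grep -qF "$pattern" "$f"; then
      echo "missing '${pattern}' in ${f}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# usage, in /etc/kubernetes/controller-manager:
#   check_kubeconfig controller-manager.conf && echo "kubeconfig looks complete"
```

`kubectl config view --kubeconfig=controller-manager.conf` prints the same file for a visual check, with the embedded certificate data omitted.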

# Distribute controller-manager.conf to all master nodes
[root@kubenode1 controller-manager]# scp controller-manager.conf root@172.30.200.22:/etc/kubernetes/controller-manager/
[root@kubenode1 controller-manager]# scp controller-manager.conf root@172.30.200.23:/etc/kubernetes/controller-manager/

3. Configure the kube-controller-manager systemd unit file

The required binaries were already installed when kubectl was deployed.

# kube-controller-manager starts after kube-apiserver
[root@kubenode1 ~]# touch /usr/lib/systemd/system/kube-controller-manager.service
[root@kubenode1 ~]# vim /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=kube-apiserver.service

[Service]
EnvironmentFile=/usr/local/kubernetes/kube-controller-manager.conf
ExecStart=/usr/local/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

# Startup parameter file
# --kubeconfig: path to the kubeconfig file, which holds the master address and the credentials;
# --allocate-node-cidrs: when true, a Pod CIDR is allocated to each node from --cluster-cidr (and, with a cloud provider, also set on the provider);
# --cluster-name: cluster name, default kubernetes;
# --cluster-signing-cert-file / --cluster-signing-key-file: CA certificate and key used to sign cluster-scoped certificates, e.g. approved kubelet certificate requests;
# --service-account-private-key-file: path to the private key used to sign service account tokens;
# --root-ca-file: path to the root CA certificate, which is included in service account token secrets;
# --insecure-experimental-approve-all-kubelet-csrs-for-group: the controller-manager automatically approves kubelet client certificate CSRs from this group;
# --use-service-account-credentials: when true, each controller runs with its own service account credentials;
# --controllers: controllers to start; the default "*" enables all controllers that are on by default, which excludes "bootstrapsigner" and "tokencleaner";
# --leader-elect: when true, run leader election; required for a highly available controller-manager deployment, default true.
# systemd does not run ExecStart through a shell, so shell redirection such as "1>>file 2>&1" must not appear in the arguments (it would reach the binary as literal arguments); with --logtostderr=false the logs go to --log-dir.
[root@kubenode1 ~]# touch /usr/local/kubernetes/kube-controller-manager.conf
[root@kubenode1 ~]# vim /usr/local/kubernetes/kube-controller-manager.conf
KUBE_CONTROLLER_MANAGER_ARGS="--master=https://172.30.200.10:6443 --kubeconfig=/etc/kubernetes/controller-manager/controller-manager.conf --allocate-node-cidrs=true --service-cluster-ip-range=169.169.0.0/16 --cluster-cidr=10.254.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --insecure-experimental-approve-all-kubelet-csrs-for-group=system:bootstrappers --use-service-account-credentials=true --controllers=*,bootstrapsigner,tokencleaner --leader-elect=true --logtostderr=false --log-dir=/var/log/kubernetes/controller-manager --v=2"

# Create the log directory
[root@kubenode1 ~]# mkdir -p /var/log/kubernetes/controller-manager
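The unit's ExecStart is not run through a shell. A quick lint of the EnvironmentFile can therefore catch redirection left in the arguments, as well as missing key flags. This is a sketch. `check_cm_env` is a hypothetical helper. It reads the file with bash's `.` builtin, which works for a simple `KEY="value"` line like this one but is not an exact model of systemd's EnvironmentFile parser. The list of required flags is only this article's choice.

```bash
#!/usr/bin/env bash
# Hypothetical lint for the controller-manager EnvironmentFile.
# Reads KUBE_CONTROLLER_MANAGER_ARGS in a subshell (bash parsing, an
# approximation of systemd's EnvironmentFile handling) and reports problems.
check_cm_env() {
  local f=${1:-/usr/local/kubernetes/kube-controller-manager.conf}
  local args flag rc=0
  args=$(. "$f" && printf '%s' "${KUBE_CONTROLLER_MANAGER_ARGS:-}") || return 1
  if [[ -z $args ]]; then
    echo "KUBE_CONTROLLER_MANAGER_ARGS is empty or unset in ${f}" >&2
    return 1
  fi
  # systemd passes '>' tokens to the binary as literal arguments.
  if [[ $args == *'>'* ]]; then
    echo "shell redirection found in ${f}; systemd will not interpret it" >&2
    rc=1
  fi
  # Flags this article relies on (an arbitrary, illustrative list).
  for flag in --kubeconfig= --leader-elect=true --service-account-private-key-file= --root-ca-file=; do
    if [[ $args != *"$flag"* ]]; then
      echo "missing ${flag} in ${f}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# usage:
#   check_cm_env /usr/local/kubernetes/kube-controller-manager.conf && systemctl daemon-reload
```

`systemd-analyze verify /usr/lib/systemd/system/kube-controller-manager.service` complements this by checking the unit file itself.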

4. Start and verify

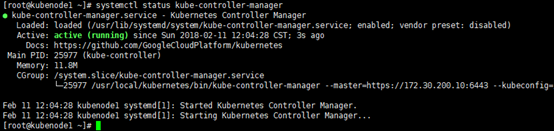

1) Check the kube-controller-manager status

[root@kubenode1 ~]# systemctl daemon-reload
[root@kubenode1 ~]# systemctl enable kube-controller-manager
[root@kubenode1 ~]# systemctl start kube-controller-manager
[root@kubenode1 ~]# systemctl status kube-controller-manager
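With the simple service type used here, `systemctl start` returns as soon as the process is launched. A short retry loop is a convenient way to wait until the service reports active before looking at its status and logs. This is a sketch. `wait_for` is a hypothetical helper, and the retry count and interval in the usage line are arbitrary.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <tries> times, sleeping
# <interval> seconds between attempts; succeeds as soon as the command does.
# usage: wait_for <tries> <interval_seconds> <command> [args...]
wait_for() {
  local tries=$1 interval=$2 i
  shift 2
  for (( i = 1; i <= tries; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < tries )); then
      sleep "$interval"
    fi
  done
  echo "gave up after ${tries} attempts: $*" >&2
  return 1
}

# usage:
#   wait_for 10 3 systemctl is-active --quiet kube-controller-manager
```

Older releases serve an insecure health endpoint on port 10252. On those, `wait_for 10 3 curl -fsS http://127.0.0.1:10252/healthz` can additionally check that the component reports healthy.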

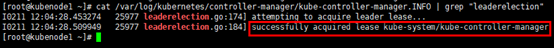

2) Check the kube-controller-manager leader election

# kubenode1 was the first node to start kube-controller-manager, so it tried to acquire leadership and succeeded
[root@kubenode1 ~]# grep "leaderelection" /var/log/kubernetes/controller-manager/kube-controller-manager.INFO

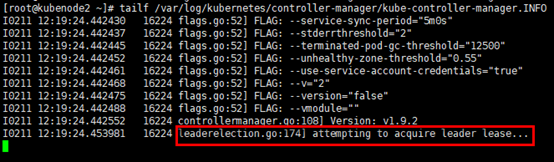

# Watch on kubenode2: it keeps trying to acquire leadership without success, and its controllers stay on standby until it becomes leader
[root@kubenode2 ~]# tail -f /var/log/kubernetes/controller-manager/kube-controller-manager.INFO
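Besides the logs, the current leader can be read from the lock object itself. On older releases, the lock is stored as the `control-plane.alpha.kubernetes.io/leader` annotation, a JSON leader-election record, on the `kube-controller-manager` Endpoints object in `kube-system`. Newer releases use a Lease object of the same name instead. This is a sketch. `leader_holder` is a hypothetical helper that extracts `holderIdentity` from the compact JSON record with sed rather than a real JSON parser.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print holderIdentity from a leader-election record
# (compact JSON on stdin). Uses sed, not a JSON parser, so it assumes the
# value contains no escaped quotes.
leader_holder() {
  sed -n 's/.*"holderIdentity": *"\([^"]*\)".*/\1/p'
}

# Older releases (Endpoints-based lock):
#   kubectl -n kube-system get endpoints kube-controller-manager \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' | leader_holder
# Newer releases (Lease-based lock) expose the holder directly:
#   kubectl -n kube-system get lease kube-controller-manager -o jsonpath='{.spec.holderIdentity}'
```

The holder identity starts with the leader's host name. After stopping kube-controller-manager on the leader, it should change to another master once the lease expires.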