V. Deploying a Highly Available etcd Cluster

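etcd is a key-value store (similar to ZooKeeper) and serves as the central database of the entire Kubernetes cluster. Running it as a cluster avoids a single point of failure. A three-member cluster keeps quorum (2 of 3 members) and stays available if any one member fails.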

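Here the cluster is deployed with static configuration, meaning all members are listed up front. Alternatively, members can be added, updated or removed at runtime through the REST API that etcd provides.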

All commands below are shown for kubenode1. On kubenode2 and kubenode3, adjust them slightly, mainly the node name and IP address.

1. Download

[root@kubenode1 ~]# cd /usr/local/src/
[root@kubenode1 src]# wget https://github.com/coreos/etcd/releases/download/v3.3.0/etcd-v3.3.0-linux-amd64.tar.gz
[root@kubenode1 src]# tar -zxvf etcd-v3.3.0-linux-amd64.tar.gz
# Move the whole extracted directory to /usr/local/etcd; the etcd and etcdctl executables are inside it
[root@kubenode1 src]# mv etcd-v3.3.0-linux-amd64/ /usr/local/etcd
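The verification commands in step 6 call `etcdctl` by its bare name, but the binaries live in /usr/local/etcd. The following is a minimal sketch. It assumes that directory is not already on PATH, and it prepends the directory for the current shell only.

```shell
# Assumption: etcd and etcdctl were unpacked to /usr/local/etcd as above.
ETCD_HOME=/usr/local/etcd

# Prepend ETCD_HOME to PATH unless it is already listed, so re-running is harmless.
case ":${PATH}:" in
  *":${ETCD_HOME}:"*) ;;
  *) export PATH="${ETCD_HOME}:${PATH}" ;;
esac
```

After that, `etcd --version` and `ETCDCTL_API=3 etcdctl version` should print the installed version. To keep the setting across logins, the `export` line could go into a file under /etc/profile.d/ (any file name you choose).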

2. Create the etcd TLS certificate and private key

TLS encryption is used both between the client (etcdctl) and the etcd cluster and among the etcd cluster members themselves.

1) Create the etcd certificate signing request (CSR)

[root@kubenode1 ~]# mkdir -p /etc/kubernetes/etcdssl
[root@kubenode1 ~]# cd /etc/kubernetes/etcdssl/
[root@kubenode1 etcdssl]# touch etcd-csr.json
# The hosts field lists the IPs or domain names allowed to use this certificate. All node IPs are listed here,
# so the resulting certificate can be used by every etcd member. kubenode2 and kubenode3 do not need to
# generate their own certificates; kubenode1 simply distributes this one to them
[root@kubenode1 etcdssl]# vim etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "172.30.200.21",
    "172.30.200.22",
    "172.30.200.23"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "ChengDu",
      "L": "ChengDu",
      "O": "k8s",
      "OU": "cloudteam"
    }
  ]
}

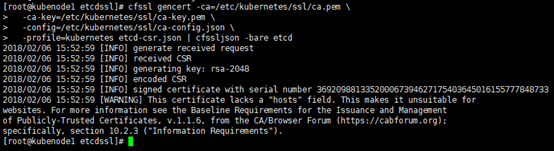
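You can also write the CSR non-interactively instead of editing it in vim, which is handy when the member list changes. The sketch below assumes it runs in /etc/kubernetes/etcdssl. `NODE_IPS` is a variable introduced here that lists every etcd member, and all other fields are copied from the CSR above.

```shell
# Assumption: every etcd member's IP; the certificate must list all of them.
NODE_IPS="172.30.200.21 172.30.200.22 172.30.200.23"

# Build the "hosts" entries: loopback first, then each node IP, comma-separated.
hosts='"127.0.0.1"'
for ip in ${NODE_IPS}; do
  hosts="${hosts}, \"${ip}\""
done

# Write the CSR; fields other than "hosts" match the hand-written file above.
cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [ ${hosts} ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "ChengDu", "L": "ChengDu", "O": "k8s", "OU": "cloudteam" }
  ]
}
EOF
```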

2) Generate the etcd certificate and private key

# Specify the CA certificate, CA private key, signing config and profile; this produces etcd.pem, etcd-key.pem and etcd.csr
[root@kubenode1 etcdssl]# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

# Distribute etcd.pem and etcd-key.pem to the other nodes (ca.pem was already distributed in an earlier step)
[root@kubenode1 etcdssl]# scp etcd.pem etcd-key.pem root@172.30.200.22:/etc/kubernetes/etcdssl/
[root@kubenode1 etcdssl]# scp etcd.pem etcd-key.pem root@172.30.200.23:/etc/kubernetes/etcdssl/
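The same copy can be written as a loop over the remaining members. This sketch assumes passwordless root SSH to each node. `distribute_etcd_certs` is a hypothetical helper name, and setting `DRY_RUN=1` only prints the commands. The helper also creates /etc/kubernetes/etcdssl on the target first, because scp of several files into a directory fails if that directory does not exist yet.

```shell
# Hypothetical helper: copy the etcd certificate pair to each peer IP given as an argument.
# With DRY_RUN set, the commands are echoed instead of executed.
distribute_etcd_certs() {
  local dir=/etc/kubernetes/etcdssl
  local ip
  for ip in "$@"; do
    # Ensure the target directory exists before copying into it.
    ${DRY_RUN:+echo} ssh "root@${ip}" mkdir -p "${dir}"
    ${DRY_RUN:+echo} scp "${dir}/etcd.pem" "${dir}/etcd-key.pem" "root@${ip}:${dir}/"
  done
}
```

Usage on kubenode1: `distribute_etcd_certs 172.30.200.22 172.30.200.23`.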

3. Configure iptables

# Open TCP 2379 (client traffic) and 2380 (peer traffic)
[root@kubenode1 ~]# vim /etc/sysconfig/iptables
-A INPUT -p tcp -m state --state NEW -m tcp --dport 2379 -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 2380 -j ACCEPT
[root@kubenode1 ~]# service iptables restart
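iptables evaluates rules in order, so the two ACCEPT lines only take effect if they sit above any catch-all REJECT rule. Below is a sketch of the relevant part of /etc/sysconfig/iptables. It assumes the default file shipped with the iptables-services package on CentOS 7; the earlier sections do not show this file.

```
*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
-A INPUT -p icmp -j ACCEPT
-A INPUT -i lo -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 22 -j ACCEPT
# etcd client and peer ports, placed before the REJECT rules below
-A INPUT -p tcp -m state --state NEW -m tcp --dport 2379 -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 2380 -j ACCEPT
-A INPUT -j REJECT --reject-with icmp-host-prohibited
-A FORWARD -j REJECT --reject-with icmp-host-prohibited
COMMIT
```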

4. Configure the etcd systemd unit file

# Create the working directory
[root@kubenode1 ~]# mkdir -p /var/lib/etcd
# WorkingDirectory: the working/data directory, i.e. the directory created above;
# EnvironmentFile: etcd's startup arguments are kept outside the unit file, so editing them needs only a restart, not a daemon-reload;
# ExecStart: path to the etcd binary, followed by the variable that holds its arguments
[root@kubenode1 ~]# touch /usr/lib/systemd/system/etcd.service
[root@kubenode1 ~]# vim /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
Documentation=https://github.com/coreos
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=/usr/local/etcd/etcd.conf
ExecStart=/usr/local/etcd/etcd $ETCD_ARGS
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# ETCD_ARGS: the variable referenced after ExecStart in the unit file;
# --cert-file, --key-file: etcd's certificate and private key for client connections;
# --peer-cert-file, --peer-key-file: certificate and private key for peer communication;
# --trusted-ca-file: CA certificate used to verify clients;
# --peer-trusted-ca-file: CA certificate used to verify peers;
# --initial-cluster-state=new: this is a newly initialized cluster; the value of --name must appear in --initial-cluster
[root@kubenode1 ~]# touch /usr/local/etcd/etcd.conf
[root@kubenode1 ~]# vim /usr/local/etcd/etcd.conf
ETCD_ARGS="--name=kubenode1 --cert-file=/etc/kubernetes/etcdssl/etcd.pem --key-file=/etc/kubernetes/etcdssl/etcd-key.pem --peer-cert-file=/etc/kubernetes/etcdssl/etcd.pem --peer-key-file=/etc/kubernetes/etcdssl/etcd-key.pem --trusted-ca-file=/etc/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem --initial-advertise-peer-urls=https://172.30.200.21:2380 --listen-peer-urls=https://172.30.200.21:2380 --listen-client-urls=https://172.30.200.21:2379,http://127.0.0.1:2379 --advertise-client-urls=https://172.30.200.21:2379 --initial-cluster-token=etcd-cluster-1 --initial-cluster=kubenode1=https://172.30.200.21:2380,kubenode2=https://172.30.200.22:2380,kubenode3=https://172.30.200.23:2380 --initial-cluster-state=new --data-dir=/var/lib/etcd"
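Only `--name` and the node's own IP address differ between members. `--initial-cluster`, the token and the certificate paths are identical on all three. The sketch below renders the file for any member and assumes the paths and IPs used above. `gen_etcd_conf` and `INITIAL_CLUSTER` are hypothetical names introduced here.

```shell
# Shared by every member: the full static member list.
INITIAL_CLUSTER="kubenode1=https://172.30.200.21:2380,kubenode2=https://172.30.200.22:2380,kubenode3=https://172.30.200.23:2380"

# Hypothetical helper: print the ETCD_ARGS line for member NAME listening on IP.
gen_etcd_conf() {
  local name="$1" ip="$2"
  local ssl=/etc/kubernetes/etcdssl
  local ca=/etc/kubernetes/ssl/ca.pem
  local args=(
    "--name=${name}"
    "--cert-file=${ssl}/etcd.pem"
    "--key-file=${ssl}/etcd-key.pem"
    "--peer-cert-file=${ssl}/etcd.pem"
    "--peer-key-file=${ssl}/etcd-key.pem"
    "--trusted-ca-file=${ca}"
    "--peer-trusted-ca-file=${ca}"
    "--initial-advertise-peer-urls=https://${ip}:2380"
    "--listen-peer-urls=https://${ip}:2380"
    "--listen-client-urls=https://${ip}:2379,http://127.0.0.1:2379"
    "--advertise-client-urls=https://${ip}:2379"
    "--initial-cluster-token=etcd-cluster-1"
    "--initial-cluster=${INITIAL_CLUSTER}"
    "--initial-cluster-state=new"
    "--data-dir=/var/lib/etcd"
  )
  # Join the flags with single spaces, keeping ETCD_ARGS on one line.
  printf 'ETCD_ARGS="%s"\n' "${args[*]}"
}
```

On kubenode2, for example: `gen_etcd_conf kubenode2 172.30.200.22 > /usr/local/etcd/etcd.conf`. For kubenode1, the output is identical to the hand-written line above.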

5. Start etcd

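# The first etcd process to start appears to hang while it waits for the other members to start and join the cluster;
# if that wait times out, the first node fails to start, so start etcd on all three nodes at about the same time
[root@kubenode1 ~]# systemctl daemon-reload
[root@kubenode1 ~]# systemctl enable etcd.service
[root@kubenode1 ~]# systemctl restart etcd.service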
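The unit uses `Type=notify`, so a plain `systemctl restart` blocks until etcd reports ready, and on the first member that means waiting for the others. The sketch below starts every member from one node without blocking on any of them. It assumes root SSH access to each node. `start_etcd_all` is a hypothetical helper name, and `DRY_RUN=1` only prints the commands.

```shell
# Hypothetical helper: reload, enable and restart etcd on each IP given as an argument.
start_etcd_all() {
  local ip
  for ip in "$@"; do
    # --no-block queues the restart job and returns immediately instead of
    # waiting for etcd to signal readiness, so the loop never stalls on one node.
    ${DRY_RUN:+echo} ssh "root@${ip}" \
      "systemctl daemon-reload && systemctl enable etcd.service && systemctl --no-block restart etcd.service"
  done
}
```

Usage: `start_etcd_all 172.30.200.21 172.30.200.22 172.30.200.23`. Then follow progress on each node with `systemctl status etcd` or `journalctl -u etcd`.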

6. Verify

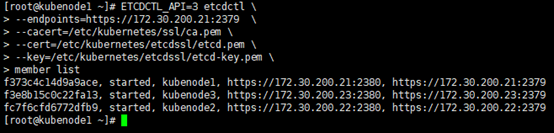

# List the cluster members
# ETCDCTL_API=3: use the v3 API
# Communication is TLS-encrypted, so the client must pass the CA certificate plus its own certificate and private key
[root@kubenode1 ~]# ETCDCTL_API=3 etcdctl --endpoints=https://172.30.200.21:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/kubernetes/etcdssl/etcd.pem \
  --key=/etc/kubernetes/etcdssl/etcd-key.pem \
  member list

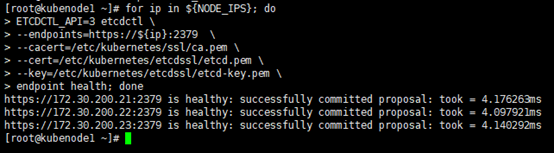
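Every etcdctl call here repeats the same API version and three TLS flags. Below is a small wrapper that bundles them. It assumes etcdctl is on PATH and the certificate paths used above. `etcdctl_tls` is a hypothetical name, not part of etcd.

```shell
# Hypothetical wrapper: run etcdctl against the v3 API with this cluster's TLS material.
# Any further arguments (endpoints, subcommand) are passed through unchanged.
etcdctl_tls() {
  ETCDCTL_API=3 etcdctl \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/etcdssl/etcd.pem \
    --key=/etc/kubernetes/etcdssl/etcd-key.pem \
    "$@"
}
```

Usage: `etcdctl_tls --endpoints=https://172.30.200.21:2379 member list`.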

# Check the health of every member
[root@kubenode1 ~]# export NODE_IPS="172.30.200.21 172.30.200.22 172.30.200.23"
[root@kubenode1 ~]# for ip in ${NODE_IPS}; do
  ETCDCTL_API=3 etcdctl --endpoints=https://${ip}:2379 \
  --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/kubernetes/etcdssl/etcd.pem \
  --key=/etc/kubernetes/etcdssl/etcd-key.pem \
  endpoint health; done
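etcdctl's `--endpoints` flag also accepts a comma-separated list, and `endpoint health` then reports on each endpoint in it. That lets a single call replace the loop. The sketch below builds that list from `NODE_IPS` and assumes client port 2379 on every node. `build_endpoints` and `ENDPOINTS` are hypothetical names.

```shell
# Hypothetical helper: turn a space-separated IP list into https://IP:2379 endpoints joined by commas.
build_endpoints() {
  local ip list=""
  for ip in $1; do
    # Add a comma separator only once the list is non-empty.
    list="${list:+${list},}https://${ip}:2379"
  done
  printf '%s\n' "${list}"
}

NODE_IPS="172.30.200.21 172.30.200.22 172.30.200.23"
ENDPOINTS="$(build_endpoints "${NODE_IPS}")"
```

Then: `ETCDCTL_API=3 etcdctl --endpoints="${ENDPOINTS}" --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/etcdssl/etcd.pem --key=/etc/kubernetes/etcdssl/etcd-key.pem endpoint health`.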