Tip: if you run into problems along the way, double-check which user is running the command and which directory you are in.

1. Environment preparation

1.1 System configuration

| IP | Installed services | Hostname | OS release | Notes |
| --- | --- | --- | --- | --- |
| 192.168.5.181 | ceph, ceph-deploy | admin-node | CentOS Linux release 7.9.2009 (Core) | Admin node |
| 192.168.5.182 | ceph, mon, osd, rgw | node1 | CentOS Linux release 7.9.2009 (Core) | OSD and RGW node |
| 192.168.5.183 | ceph, mon, osd | node2 | CentOS Linux release 7.9.2009 (Core) | OSD node |
| 192.168.5.184 | ceph, mon, osd | node3 | CentOS Linux release 7.9.2009 (Core) | OSD node |

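1.2 Disable SELinux and the firewall (all nodes)

    # Stop and disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld
    # Disable SELinux now, and persistently after reboot
    setenforce 0
    sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config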

1.2 Set up time synchronization (all nodes)

    # Install ntp with yum
    yum install ntp ntpdate ntp-doc
    # Sync the system clock
    ntpdate 0.cn.pool.ntp.org

1.3修改主机名和hosts文件(所有节点)

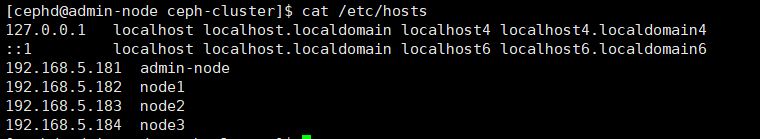

#192.168.5.181上操作 hostnamectl set-hostname admin-node #192.168.5.182上操作 hostnamectl set-hostname node1 #192.168.5.183上操作 hostnamectl set-hostname node2 #192.168.5.184上操作 hostnamectl set-hostname node3 #所有节点上操作 vi /etc/hosts #将以下内容增加到/etc/hosts中 192.168.5.181 admin-node 192.168.5.182 node1 192.168.5.183 node2 192.168.5.184 node3
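Editing /etc/hosts by hand on four machines is easy to get slightly wrong, so here is a minimal sketch of doing the same step with a script that can be re-run safely. The function name `add_cluster_hosts` is made up for this example; the addresses and hostnames are the ones from the table in 1.1.

```shell
# Append the cluster's host entries to a hosts file, skipping entries that
# are already there. add_cluster_hosts is a hypothetical helper name.
add_cluster_hosts() {
    local hosts_file="$1"
    local entry
    while IFS= read -r entry; do
        # -x matches the whole line, -F treats the entry as a literal string
        if ! grep -qxF "$entry" "$hosts_file"; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done <<'EOF'
192.168.5.181 admin-node
192.168.5.182 node1
192.168.5.183 node2
192.168.5.184 node3
EOF
}

# Usage (as root, on every node): add_cluster_hosts /etc/hosts
```

Because each entry is only appended when an identical line is missing, running the function twice leaves the file unchanged the second time.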

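1.4 Create the Ceph deployment user and set its remote login password (all nodes)

    # Create a dedicated user for Ceph
    useradd -d /home/cephd -m cephd
    echo cephd | passwd cephd --stdin
    # Grant passwordless sudo
    echo "cephd ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephd
    chmod 0440 /etc/sudoers.d/cephd

On node1, node2, and node3, set the remote login password for the cephd user. Remember this password; you will need it when setting up passwordless login below.

    passwd cephd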

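1.5 Set up passwordless SSH login (admin-node)

    # Switch to the cephd user
    su cephd
    # Generate an SSH key pair; just press Enter at every prompt
    ssh-keygen
    # Copy the public key to node1; enter cephd's password when asked
    ssh-copy-id node1
    # Copy the public key to node2; enter cephd's password when asked
    ssh-copy-id node2
    # Copy the public key to node3; enter cephd's password when asked
    ssh-copy-id node3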
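Before moving on, it can help to confirm that key-based login really works for every node, since ceph-deploy will fail later if it has to prompt for a password. The following is a rough sketch; `check_passwordless_ssh` is a hypothetical helper name, and it assumes you run it as cephd on admin-node.

```shell
# Try a non-interactive SSH login to each node and report the result.
# check_passwordless_ssh is a hypothetical helper name.
check_passwordless_ssh() {
    local node failed=0
    for node in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "$node: OK"
        else
            echo "$node: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Usage: check_passwordless_ssh node1 node2 node3
```

A non-zero return value means at least one node still asks for a password; repeat `ssh-copy-id` for that node.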

2. Building the Ceph cluster (ceph version 10.2.11)

2.1 Install ceph-deploy (admin-node)

Configure the Ceph package repositories

    # Set up EPEL, which provides the other dependencies
    sudo yum install -y yum-utils \
      && sudo yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/ \
      && sudo yum install --nogpgcheck -y epel-release \
      && sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 \
      && sudo rm /etc/yum.repos.d/dl.fedoraproject.org*
    # Add the Ceph repository
    sudo vi /etc/yum.repos.d/ceph.repo

Put the following into ceph.repo:

    [ceph]
    name=ceph
    baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
    gpgcheck=0

    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
    gpgcheck=0

Install ceph-deploy

    sudo yum update && sudo yum install ceph-deploy

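2.2 Edit ~/.ssh/config on the ceph-deploy admin node so that you do not have to pass --username cephd on every ceph-deploy run (admin-node)

Edit the file as the cephd user, without sudo. A config file created with sudo belongs to root, and once its mode is set to 600 the cephd user can no longer read it.

    vi ~/.ssh/config

Put the following into ~/.ssh/config:

    Host admin-node
        Hostname admin-node
        User cephd
    Host node1
        Hostname node1
        User cephd
    Host node2
        Hostname node2
        User cephd
    Host node3
        Hostname node3
        User cephd

Then restrict the file's permissions:

    chmod 600 ~/.ssh/config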

2.3 Clean up any previous installation (admin-node)

    # Remove the Ceph packages
    sudo ceph-deploy purge admin-node node1 node2 node3
    # Remove the Ceph data and configuration files
    sudo ceph-deploy purgedata admin-node node1 node2 node3
    sudo ceph-deploy forgetkeys

2.4 Create the cluster's mon nodes (admin-node)

    # Create the working directory
    mkdir /home/cephd/ceph-cluster && cd /home/cephd/ceph-cluster
    # Create the cluster with node1, node2, and node3 as mon nodes
    sudo ceph-deploy new node1 node2 node3

2.5 Edit the ceph.conf configuration file (admin-node)

    vi ceph.conf

Add the following lines:

    # Set the default number of replicas to 2
    osd pool default size = 2
    osd max object name len = 256
    osd max object namespace len = 64

2.6 Install Ceph (admin-node)

    sudo ceph-deploy install admin-node node1 node2 node3

2.7 Initialize the monitor nodes and gather all keys (admin-node)

    sudo ceph-deploy --overwrite-conf mon create-initial

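2.8 Fix the ownership of the disk mount directory (node1, node2, node3)

Prerequisite: the storage disk is already mounted (see my other blog post for how to do that). This guide assumes the disk is mounted at /data.

    chown -R ceph:ceph /data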

2.9 Prepare the OSDs (admin-node)

    sudo ceph-deploy --overwrite-conf osd prepare node1:/data node2:/data node3:/data

2.10 Activate the OSDs (admin-node)

    # Activate the OSDs
    sudo ceph-deploy osd activate node1:/data node2:/data node3:/data
    # Use ceph-deploy admin to push the configuration file and admin keyring to each node
    sudo ceph-deploy admin node1 node2 node3

2.11 Make ceph.client.admin.keyring readable (node1, node2, node3)

    sudo chmod +r /etc/ceph/ceph.client.admin.keyring

2.12查看ceph集群状态(node1、node2、node3)

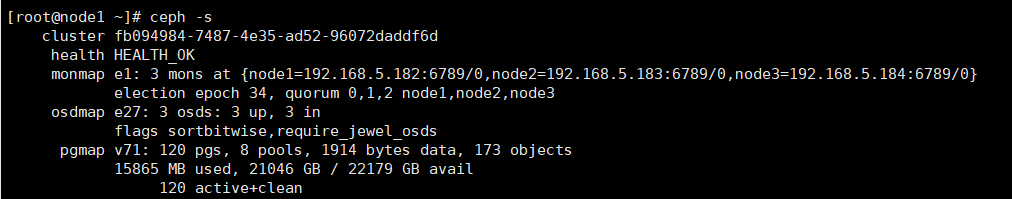

ceph -s
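Right after activation the cluster may take a little while to settle, so a single `ceph -s` can show a warning state that clears on its own. As a sketch, the loop below polls `ceph health` until it reports HEALTH_OK. The function name `wait_for_health_ok` and the default of 30 checks, 10 seconds apart, are arbitrary choices for this example.

```shell
# Poll "ceph health" until it reports HEALTH_OK or the attempts run out.
# wait_for_health_ok is a hypothetical helper; defaults are 30 checks, 10 s apart.
wait_for_health_ok() {
    local attempts="${1:-30}" delay="${2:-10}" i=1 status
    while [ "$i" -le "$attempts" ]; do
        status="$(ceph health 2>/dev/null)"
        case "$status" in
            HEALTH_OK*)
                echo "cluster healthy after $i check(s)"
                return 0
                ;;
        esac
        # Show whatever ceph reported (for example a HEALTH_WARN line) and retry
        echo "check $i/$attempts: ${status:-no response}"
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}

# Usage: wait_for_health_ok        (or: wait_for_health_ok 60 5)
```

If the function returns non-zero, look at the last status line it printed and at the full `ceph -s` output to see what is still wrong.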

Congratulations, the cluster is now deployed. To see how to use it, read on.

3.Ceph RGW网关部署及使用说明(ceph version 10.2.11)

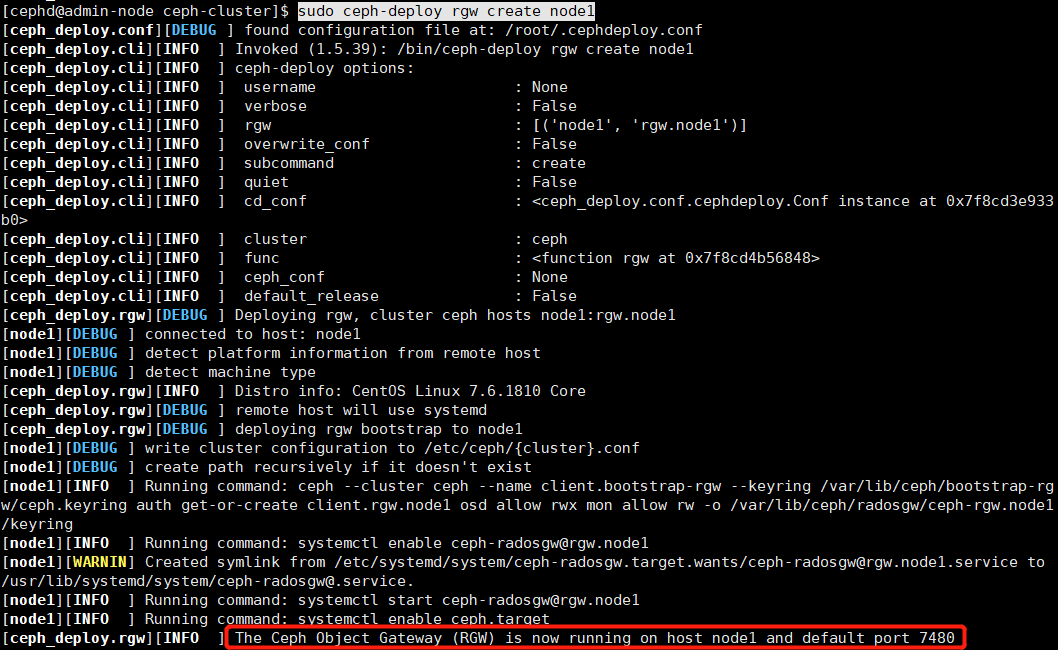

3.1RGW网关部署(admin-node)

sudo ceph-deploy rgw create node1

网关创建后默认会监听7480端口,请保证7480端口未被占用,否则可能会创建失败。
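One way to check the port on node1 before creating the gateway is to look at the listening TCP sockets. This is only a sketch: `port_in_use` is a hypothetical helper name, and it assumes the `ss` tool (from the iproute package) and `awk` are available on the node.

```shell
# Succeed if some process is already listening on the given TCP port.
# port_in_use is a hypothetical helper; it relies on "ss -lnt" output,
# where the 4th column is the local address and port.
port_in_use() {
    local port="$1"
    ss -lnt | awk -v p=":$port" '
        NR > 1 && $4 ~ (p "$") { found = 1 }
        END { exit !found }
    '
}

# Usage:
#   if port_in_use 7480; then echo "port 7480 is taken"; else echo "port 7480 is free"; fi
```

After the gateway has been created, the same check should report the port as taken, this time by the radosgw process.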

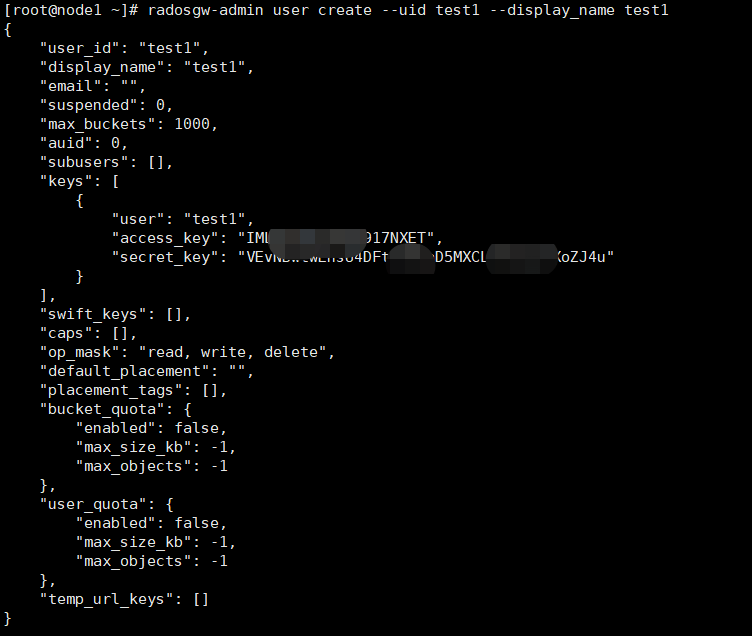

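3.2 Create a test1 user in RGW (node1)

    radosgw-admin user create --uid=test1 --display-name=test1

The blurred-out parts of the output are the access key and secret key that the test1 user needs to connect to the Ceph cluster. With these, test1 can already connect to the storage cluster through the S3 API on port 7480 and upload data.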
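If you need the keys again later, `radosgw-admin user info --uid=test1` prints the same JSON. The sketch below pulls a single field out of that JSON with `sed`, so it does not depend on any JSON tool being installed. `rgw_key` is a hypothetical helper name, and the slash unescaping is there on the assumption that the JSON output writes `/` inside keys as `\/`.

```shell
# Print the first value of a string field such as "access_key" from JSON on stdin.
# rgw_key is a hypothetical helper; it is a line-based sketch, not a JSON parser.
rgw_key() {
    local field="$1"
    sed -n "s/.*\"$field\": *\"\([^\"]*\)\".*/\1/p" \
        | sed 's#\\/#/#g' \
        | head -n 1
}

# Usage:
#   radosgw-admin user info --uid=test1 | rgw_key access_key
#   radosgw-admin user info --uid=test1 | rgw_key secret_key
```

This relies on each field sitting on its own line, which is how the pretty-printed output is laid out; for anything more involved, a real JSON parser is the safer choice.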

3.3 Set a user quota in RGW (node1)

    # Limit test1 to at most 1024 objects and at most 1024 B (1 KB) of space
    radosgw-admin quota set --quota-scope=user --uid=test1 --max-objects=1024 --max-size=1024B
    # Enable the user quota
    radosgw-admin quota enable --quota-scope=user --uid=test1

3.4 Set a bucket quota in RGW (node1)

    # Limit each bucket owned by test1 to at most 1024 objects and at most 1024 B of space
    radosgw-admin quota set --uid=test1 --quota-scope=bucket --max-objects=1024 --max-size=1024B
    # Enable the bucket quota
    radosgw-admin quota enable --quota-scope=bucket --uid=test1
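The 1024 B limits above are only useful for testing that quotas trigger. For a realistic limit you would pass a much larger size, and whether unit suffixes are accepted may differ between releases, so one option is to compute a plain byte count yourself. The helper below is a small sketch of that arithmetic; `quota_bytes` is a hypothetical name, and the 10 GiB figure in the usage line is just an example.

```shell
# Convert a size with a binary unit into a plain byte count.
# quota_bytes is a hypothetical helper name.
quota_bytes() {
    local value="$1" unit="$2"
    case "$unit" in
        B)   echo "$value" ;;
        KiB) echo $((value * 1024)) ;;
        MiB) echo $((value * 1024 * 1024)) ;;
        GiB) echo $((value * 1024 * 1024 * 1024)) ;;
        *)   echo "unknown unit: $unit" >&2; return 1 ;;
    esac
}

# Usage (example values):
#   radosgw-admin quota set --quota-scope=user --uid=test1 --max-size="$(quota_bytes 10 GiB)"
```

For instance, `quota_bytes 10 GiB` prints 10737418240, which is 10 × 1024 × 1024 × 1024.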

4. Connecting to the Ceph RGW gateway from Java for storage operations (ceph version 10.2.11)

4.1 Create a Maven project and add the following dependency

    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-java-sdk</artifactId>
        <version>1.9.6</version>
    </dependency>

4.2 Write the S3Utils utility class

    package com.fh.util;

    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.util.ArrayList;
    import java.util.Date;
    import java.util.List;

    import com.amazonaws.ClientConfiguration;
    import com.amazonaws.Protocol;
    import com.amazonaws.auth.AWSCredentials;
    import com.amazonaws.auth.BasicAWSCredentials;
    import com.amazonaws.services.s3.AmazonS3;
    import com.amazonaws.services.s3.AmazonS3Client;
    import com.amazonaws.services.s3.S3ClientOptions;
    import com.amazonaws.services.s3.model.GeneratePresignedUrlRequest;
    import com.amazonaws.services.s3.model.GetObjectRequest;
    import com.amazonaws.services.s3.model.ListObjectsRequest;
    import com.amazonaws.services.s3.model.ListVersionsRequest;
    import com.amazonaws.services.s3.model.ObjectListing;
    import com.amazonaws.services.s3.model.ObjectMetadata;
    import com.amazonaws.services.s3.model.S3Object;
    import com.amazonaws.services.s3.model.S3ObjectSummary;
    import com.amazonaws.services.s3.model.S3VersionSummary;
    import com.amazonaws.services.s3.model.VersionListing;

    public class S3Utils {

        /**
         * Deletes a bucket after removing every object and object version in it.
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @return true if the bucket was deleted, false on any error
         */
        public static boolean deleteBucket(AmazonS3 s3, String bucketName) {
            try {
                // Remove all objects in the bucket
                System.out.println(" - removing objects from bucket");
                ObjectListing objectListing = s3.listObjects(bucketName);
                while (true) {
                    for (S3ObjectSummary summary : objectListing.getObjectSummaries()) {
                        s3.deleteObject(bucketName, summary.getKey());
                    }
                    // Listings are paged; fetch the next batch if there is one
                    if (objectListing.isTruncated()) {
                        objectListing = s3.listNextBatchOfObjects(objectListing);
                    } else {
                        break;
                    }
                }
                // Remove all object versions in the bucket
                System.out.println(" - removing versions from bucket");
                VersionListing versionListing = s3.listVersions(
                        new ListVersionsRequest().withBucketName(bucketName));
                while (true) {
                    for (S3VersionSummary vs : versionListing.getVersionSummaries()) {
                        s3.deleteVersion(bucketName, vs.getKey(), vs.getVersionId());
                    }
                    if (versionListing.isTruncated()) {
                        versionListing = s3.listNextBatchOfVersions(versionListing);
                    } else {
                        break;
                    }
                }
                s3.deleteBucket(bucketName);
                return true;
            } catch (Exception e) {
                e.printStackTrace();
            }
            return false;
        }

        /**
         * Deletes a single object from a bucket.
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @param key object key
         * @return true if the delete call succeeded, false on any error
         */
        public static boolean deleteObject(AmazonS3 s3, String bucketName, String key) {
            try {
                s3.deleteObject(bucketName, key);
                return true;
            } catch (Exception e) {
                e.printStackTrace();
            }
            return false;
        }

        /**
         * Returns the keys of all objects in a bucket.
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @return list of object keys
         */
        public static List<String> getBucketObjects(AmazonS3 s3, String bucketName) {
            List<String> objectList = new ArrayList<>();
            ObjectListing objectListing = s3.listObjects(
                    new ListObjectsRequest().withBucketName(bucketName));
            while (true) {
                for (S3ObjectSummary summary : objectListing.getObjectSummaries()) {
                    objectList.add(summary.getKey());
                }
                // Listings are paged; fetch the next batch if there is one
                if (objectListing.isTruncated()) {
                    objectListing = s3.listNextBatchOfObjects(objectListing);
                } else {
                    break;
                }
            }
            return objectList;
        }

        /**
         * Downloads an object to a local file.
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @param key object key
         * @param targetFilePath local path prefix; the file is written to targetFilePath + key
         */
        public static void downloadObject(AmazonS3 s3, String bucketName, String key, String targetFilePath) {
            S3Object object = s3.getObject(new GetObjectRequest(bucketName, key));
            if (object == null) {
                return;
            }
            System.out.println("Content-Type: " + object.getObjectMetadata().getContentType());
            // try-with-resources closes both streams even if the copy fails
            try (InputStream input = object.getObjectContent();
                 FileOutputStream output = new FileOutputStream(targetFilePath + key)) {
                // Fixed-size buffer: available() may return 0 and is not the object's length
                byte[] buffer = new byte[8192];
                int len;
                while ((len = input.read(buffer)) != -1) {
                    output.write(buffer, 0, len);
                }
                System.out.println("Download succeeded");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        /**
         * Returns the size in bytes of the object with the given key, or -1 if it cannot be read
         * (for example because the key does not exist).
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @param key object key
         * @return object size in bytes, or -1
         */
        public static long getObjectSize(AmazonS3 s3, String bucketName, String key) {
            long size = -1L;
            try {
                ObjectMetadata objectMetadata = s3.getObjectMetadata(bucketName, key);
                size = objectMetadata.getContentLength();
            } catch (Exception e) {
                // Deliberately ignored: any failure is reported as -1
            }
            return size;
        }

        /**
         * Generates a pre-signed download URL for an object, valid for five minutes.
         *
         * @param s3 S3 client
         * @param bucketName bucket name
         * @param objectName object key
         * @return the URL as a string, or null if either name is missing
         */
        public static String getDownloadUrl(AmazonS3 s3, String bucketName, String objectName) {
            if (bucketName == null || objectName == null || bucketName.isEmpty() || objectName.isEmpty()) {
                return null;
            }
            // The URL expires five minutes from now
            Date expiration = new Date(System.currentTimeMillis() + 1000L * 60 * 5);
            GeneratePresignedUrlRequest request =
                    new GeneratePresignedUrlRequest(bucketName, objectName).withExpiration(expiration);
            String url = s3.generatePresignedUrl(request).toString();
            System.out.println(url);
            return url;
        }

        /**
         * Creates an S3 client that talks to the gateway over plain HTTP.
         *
         * @return S3 client
         */
        public static AmazonS3 getS3Client(String endPoint, String accessKey, String secretKey) {
            AWSCredentials credentials = new BasicAWSCredentials(accessKey, secretKey);
            ClientConfiguration clientConfig = new ClientConfiguration();
            clientConfig.setProtocol(Protocol.HTTP);
            clientConfig.setConnectionTimeout(6000); // connection timeout in ms
            clientConfig.setSocketTimeout(30000);    // socket timeout in ms
            clientConfig.setMaxErrorRetry(1);        // maximum number of retries on error
            AmazonS3 conn = new AmazonS3Client(credentials, clientConfig);
            conn.setEndpoint(endPoint);
            // Use path-style URLs (endpoint/bucket) rather than bucket subdomains
            conn.setS3ClientOptions(new S3ClientOptions().withPathStyleAccess(true));
            return conn;
        }

        /**
         * Creates an S3 client that talks to the gateway over HTTPS.
         * Certificate checking is switched off here, which is only suitable for testing.
         *
         * @return S3 client
         */
        public static AmazonS3 getS3SSLClient(String endPoint, String accessKey, String secretKey) {
            System.setProperty("com.amazonaws.sdk.disableCertChecking", "true");
            AWSCredentials credentials = new BasicAWSCredentials(accessKey, secretKey);
            ClientConfiguration clientConfig = new ClientConfiguration();
            clientConfig.setProtocol(Protocol.HTTPS);
            clientConfig.setConnectionTimeout(3000); // connection timeout in ms
            clientConfig.setSocketTimeout(3000);     // socket timeout in ms
            clientConfig.setMaxErrorRetry(2);        // maximum number of retries on error
            AmazonS3 conn = new AmazonS3Client(credentials, clientConfig);
            conn.setEndpoint(endPoint);
            // Use path-style URLs (endpoint/bucket) rather than bucket subdomains
            conn.setS3ClientOptions(new S3ClientOptions().withPathStyleAccess(true));
            return conn;
        }
    }

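4.3 Use the S3Utils utility class for your storage operations

The endPoint to pass when creating the S3 client is http://192.168.5.182:7480

The access key and secret key are the ones generated for the test1 user when it was created on the gateway above.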