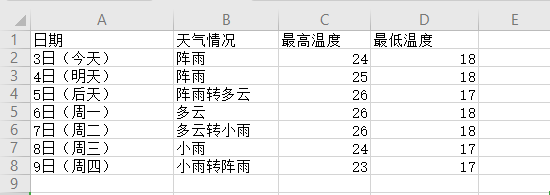

"""python爬取昆明天气信息""" import requests import time import csv import random import bs4 from bs4 import BeautifulSoup def get_content(url,data=None): headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36' } timeout=random.choice(range(10,60)) rep=requests.get(url=url,headers=headers,timeout=timeout) rep.encoding='utf-8' return rep.text def get_data(html_text): final=[] bs=BeautifulSoup(html_text,"html.parser") body=bs.body data=body.find('div',attrs={'id':'7d'}) #data=body.find('div',{'id':'7d'}) print(type(data)) ul=data.find('ul') li=ul.find_all('li') for day in li: temp=[] date=day.find('h1').string temp.append(date) info=day.find_all('p') title=day.find('p',attrs={'class':'wea'}) temp.append(title.text) if info[1].find('span') is None: temperature_hightest=None else: temperature_hightest=info[1].find('span').string temperature_hightest=temperature_hightest.replace("℃","") temperature_lowest=info[1].find('i').string temperature_lowest=temperature_lowest.replace("℃","") temp.append(temperature_hightest) temp.append(temperature_lowest) final.append(temp) return final def write_date(data,name): file_name=name with open(file_name,'w',errors='ignore',newline='') as f: f_csv=csv.writer(f) f_csv.writerow(['日期','天气情况','最高温度','最低温度']) f_csv.writerows(data) if __name__=='__main__': url='http://www.weather.com.cn/weather/101290101.shtml' html=get_content(url) result=get_data(html) write_date(result,'weather.csv')