单节点

1.拉取镜像:docker pull zookeeper

2.运行容器

a.我的容器同一放在/root/docker下面,然后创建相应的目录和文件,

其中zoo.cfg(这里是默认的主要延时怪哉文件)如下:

tickTime=2000 initLimit=10 syncLimit=5 dataDir=/data dataLogDir=/datalog clientPort=2181 maxClientCnxns=60

这里也设置了zookeeper默认的环境变量

b.运行实例,切换到/root/docker/zookeeper下载执行(不知道为什么这里zoo.cfg一定要用相对路径,用绝对路径提示docker-entrypoint.sh: line 15: /conf/zoo.cfg: Is a directory)

docker run --name zookeeper --restart always -d -v$(pwd)/data:/data -v$(pwd)/datalog:/datalog -v $(pwd)/conf/zoo.cfg:/conf/zoo.cfg -p 2181:2181 -p 2888:2888 -p 3888:3888 zookeeperp 2181:2181 -p 2888:2888 -p 3888:3888 zookeeper # 2181端口号是zookeeper client端口 # 2888端口号是zookeeper服务之间通信的端口 # 3888端口是zookeeper与其他应用程序通信的端口 #用绝对路径 docker run --name zookeeper --restart always -d -v/root/docker/zookeep/data:/data -v/root/docker/zookeep/datalog:/datalog -v /root/docker/zookeep/conf/zoo.cfg:/conf/zoo.cfg -p 2181:2181 -p 2888:2888 -p 3888:3888 zookeeper #提示/docker-entrypoint.sh: line 15: /conf/zoo.cfg: Is a directory docker run --name zookeeper --restart always -d -v/root/docker/zookeep/data:/data -v/root/docker/zookeep/datalog:/datalog -v /root/docker/zookeep/conf/:/conf/ -p 2181:2181 -p 2888:2888 -p 3888:388 zookeeper #正确的用法是不指定文件

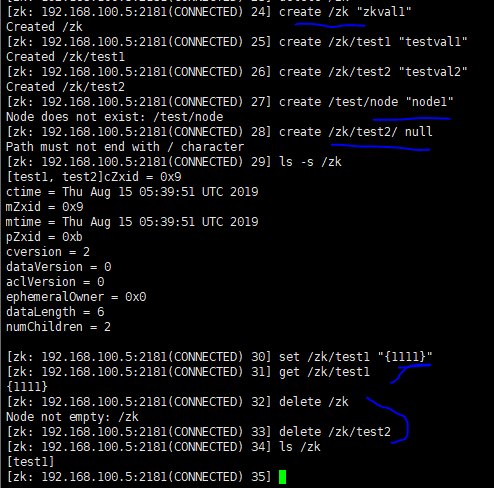

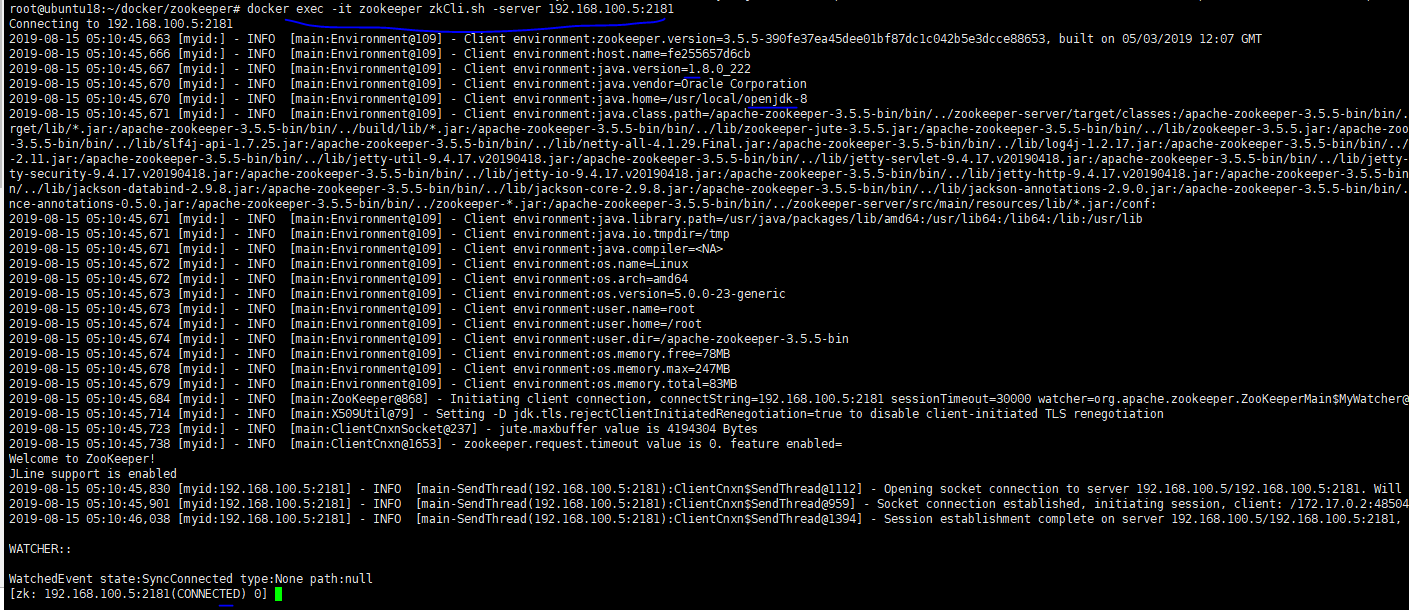

c.zookeeper常规操作,首先执行以下指令进入zookeeper客服端:

docker exec -it zookeeper zkCli.sh -server 192.168.100.5:2181 #如果是集群server用逗号分割 -server 192.168.100.5:2181,192.168.100.6:2182 create /zk "zkval1" #创建zk节点 create /zk/test1 "testval1" #创建zk/test1节点 create /zk/test2 "testval2" #创建zk/test2节点 #create /test/node "node1" 失败,不支持递归创建,多级时,必须一级一级创建 #create /zk/test2/ null 节点不能以 / 结尾,会直接报错 ls -s /zk #查看zk节点信息 set /zk/test1 "{1111}" #修改节点数据 get /zk/test1 #查看节点数据 delete /zk #删除时,须先清空节点下的内容,才能删除节点 delete /zk/test2

集群搭建

我这里搞了很久,最后还是用官网的配置 创建docker-compose.yml文件如下:

version: '3.1' services: zoo1: image: zookeeper restart: always hostname: zoo1 ports: - 2181:2181 environment: ZOO_MY_ID: 1 ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181 zoo2: image: zookeeper restart: always hostname: zoo2 ports: - 2182:2181 environment: ZOO_MY_ID: 2 ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181 zoo3: image: zookeeper restart: always hostname: zoo3 ports: - 2183:2181 environment: ZOO_MY_ID: 3 ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181

最后运行docker-compose up指令,最后验证,

docker exec -it zookeeper_zoo1_1 zkCli.sh -server 192.168.100.5:2181 create /zk "test" quit #退出容器1 docker exec -it zookeeper_zoo2_1 zkCli.sh -server 192.168.100.5:2182 get /zk #在容器2获取值 quit docker exec -it zookeeper_zoo3_1 zkCli.sh -server 192.168.100.5:2183 get /zk #在容器3获取值 quit

分布式锁

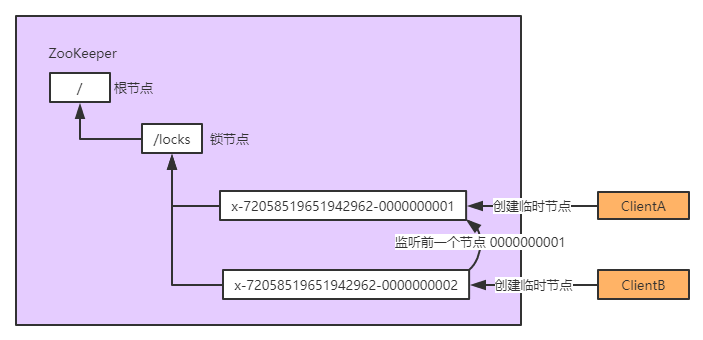

ZooKeeper 分布式锁是基于 临时顺序节点 来实现的,锁可理解为 ZooKeeper 上的一个节点,当需要获取锁时,就在这个锁节点下创建一个临时顺序节点。当存在多个客户端同时来获取锁,就按顺序依次创建多个临时顺序节点,但只有排列序号是第一的那个节点能获取锁成功,其他节点则按顺序分别监听前一个节点的变化,当被监听者释放锁时,监听者就可以马上获得锁。而且用临时顺序节点的另外一个用意是如果某个客户端创建临时顺序节点后,自己意外宕机了也没关系,ZooKeeper 感知到某个客户端宕机后会自动删除对应的临时顺序节点,相当于自动释放锁。

如上图:ClientA 和 ClientB 同时想获取锁,所以都在 locks 节点下创建了一个临时节点 1 和 2,而 1 是当前 locks 节点下排列序号第一的节点,所以 ClientA 获取锁成功,而 ClientB 处于等待状态,这时 ZooKeeper 中的 2 节点会监听 1 节点,当 1节点锁释放(节点被删除)时,2 就变成了 locks 节点下排列序号第一的节点,这样 ClientB 就获取锁成功了。如下是c#代码:

创建 .NET Core 控制台程序

Nuget 安装 ZooKeeperNetEx.Recipes

创建 ZooKeeper Client, ZooKeeprLock代码如下:

namespace ZookeeperDemo { using org.apache.zookeeper; using org.apache.zookeeper.recipes.@lock; using System; using System.Diagnostics; using System.Threading.Tasks; public class ZooKeeprLock { private const int CONNECTION_TIMEOUT = 50000; private const string CONNECTION_STRING = "192.168.100.5:2181,192.168.100.5:2182,192.168.100.5:2183"; /// <summary> /// 加锁 /// </summary> /// <param name="key">加锁的节点名</param> /// <param name="lockAcquiredAction">加锁成功后需要执行的逻辑</param> /// <param name="lockReleasedAction">锁释放后需要执行的逻辑,可为空</param> /// <returns></returns> public async Task Lock(string key, Action lockAcquiredAction, Action lockReleasedAction = null) { // 获取 ZooKeeper Client ZooKeeper keeper = CreateClient(); // 指定锁节点 WriteLock writeLock = new WriteLock(keeper, $"/{key}", null); var lockCallback = new LockCallback(() => { lockAcquiredAction.Invoke(); writeLock.unlock(); }, lockReleasedAction); // 绑定锁获取和释放的监听对象 writeLock.setLockListener(lockCallback); // 获取锁(获取失败时会监听上一个临时节点) await writeLock.Lock(); } private ZooKeeper CreateClient() { var zooKeeper = new ZooKeeper(CONNECTION_STRING, CONNECTION_TIMEOUT, NullWatcher.Instance); Stopwatch sw = new Stopwatch(); sw.Start(); while (sw.ElapsedMilliseconds < CONNECTION_TIMEOUT) { var state = zooKeeper.getState(); if (state == ZooKeeper.States.CONNECTED || state == ZooKeeper.States.CONNECTING) { break; } } sw.Stop(); return zooKeeper; } class NullWatcher : Watcher { public static readonly NullWatcher Instance = new NullWatcher(); private NullWatcher() { } public override Task process(WatchedEvent @event) { return Task.CompletedTask; } } class LockCallback : LockListener { private readonly Action _lockAcquiredAction; private readonly Action _lockReleasedAction; public LockCallback(Action lockAcquiredAction, Action lockReleasedAction) { _lockAcquiredAction = lockAcquiredAction; _lockReleasedAction = lockReleasedAction; } /// <summary> /// 获取锁成功回调 /// </summary> /// <returns></returns> public Task lockAcquired() { _lockAcquiredAction?.Invoke(); return Task.FromResult(0); } /// <summary> /// 释放锁成功回调 /// </summary> /// <returns></returns> public Task lockReleased() { _lockReleasedAction?.Invoke(); return Task.FromResult(0); } } } }

测试代码:

namespace ZookeeperDemo { using System; using System.Threading; using System.Threading.Tasks; class Program { static void Main(string[] args) { Parallel.For(1, 10, async (i) => { await new ZooKeeprLock().Lock("locks", () => { Console.WriteLine($"第{i}个请求,获取锁成功:{DateTime.Now},线程Id:{Thread.CurrentThread.ManagedThreadId}"); Thread.Sleep(1000); // 业务逻辑... }, () => { Console.WriteLine($"第{i}个请求,释放锁成功:{DateTime.Now},线程Id:{Thread.CurrentThread.ManagedThreadId}"); Console.WriteLine("-------------------------------"); }); }); Console.ReadKey(); } } }

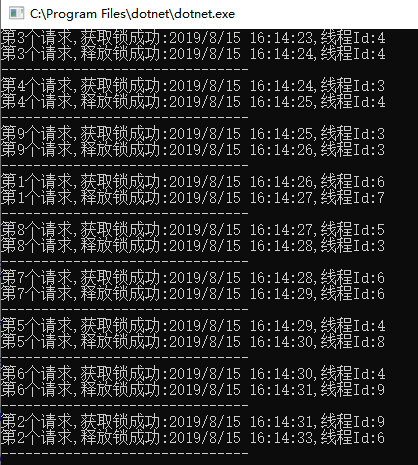

运行结果:

关于分布式锁, 我们也可以采用数据库和redis来实现, 各有优缺点。

参考:

How To Install and Configure an Apache ZooKeeper Cluster on Ubuntu 18.04