win7 + spark + hive + python集成

通过win7使用spark的pyspark访问hive

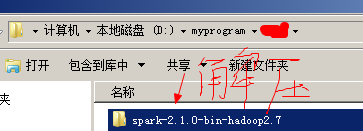

1、安装spark软件包

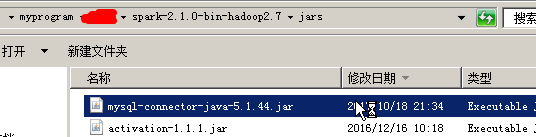

2、复制mysql驱动

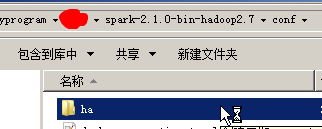

3、复制hadoop配置目录到spark的conf下

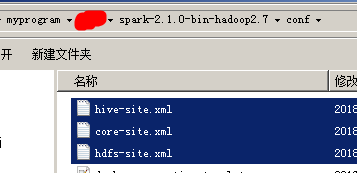

4、复制hadoop和hive的配置文件到conf下

5.1、在pyspark脚本中添加HADOOP_CONF_DIR环境变量,指向hadoop配置目录

set HADOOP_CONF_DIR=D:myprogramspark-2.1.0-bin-hadoop2.7confha

5.2、以下也要配置

set HADOOP_CONF_DIR=D:myprogramspark-2.1.0-bin-hadoop2.7confha

6、修改hdfs目录权限

[centos@s101 ~]$ hdfs dfs -chmod -R 777 /user

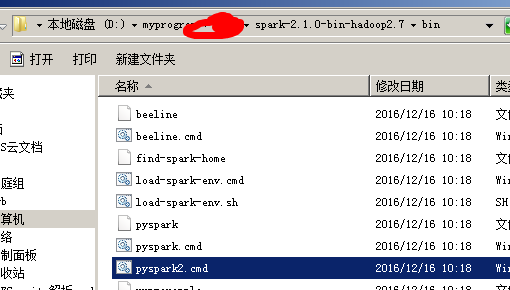

7、在win7启动pyspark shell,连接到yarn,在bin下

pyspark --master yarn

8、测试

>>> rdd1 = sc.textFile("/user/centos/myspark/wc") >>> rdd1.flatMap(lambda e:e.split(" ")).map(lambda e:(e,1)).reduceByKey(lambda a,b:a+b).collect() [(u'9', 3), (u'1', 2), (u'3', 3), (u'5', 4), (u'7', 3), (u'0', 2), (u'8', 3), (u'2', 3), (u'4', 3), (u'6', 4)] >>> for i in rdd1.flatMap(lambda e:e.split(" ")).map(lambda e:(e,1)).reduceByKey(lambda a,b:a+b).collect():print i ... (u'1', 2) (u'9', 3) (u'3', 3) (u'5', 4) (u'7', 3) (u'0', 2) (u'8', 3) (u'2', 3) (u'4', 3) (u'6', 4) >>> spark.sql("show databases").show() +------------+ |databaseName| +------------+ | default| | lx| | udtf| +------------+

IDEA中开发pyspark程序:前提是以上步骤完成

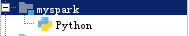

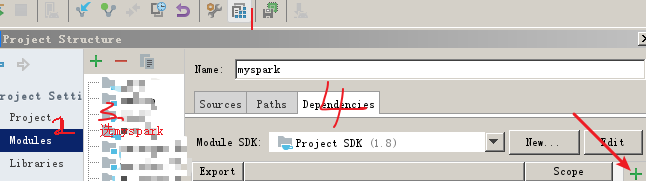

1、创建java或scala模块

2、进入项目结构(设置右侧)--左侧点modules--选myspark--右键add,python支持

点击python,指定解释器

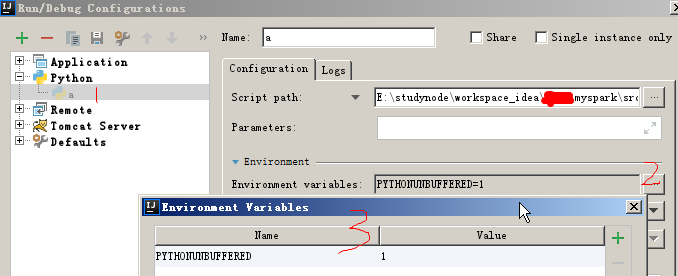

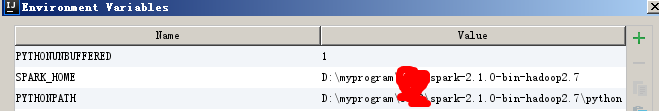

3、在配置中指定环境变量

1、进入设置界面

2、如下配置

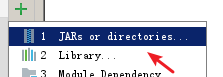

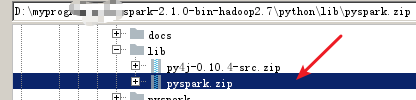

4、导入spark的python核心库

5、测试

安装:pip install py4j

#coding:utf-8 #wordcount from pyspark.context import SparkContext from pyspark import SparkConf conf = SparkConf().setMaster("local[*]").setAppName("") sc = SparkContext(conf=conf) rdd1 = sc.textFile("/user/centos/myspark/wc") rdd2 = rdd1.flatMap(lambda s:s.split(" ")).map(lambda s:(s,1)).reduceByKey(lambda a,b:a+b) lst = rdd2.collect() for i in lst: print(i) #sparksql from pyspark.sql import * spark = SparkSession.builder.enableHiveSupport().getOrCreate() arr = spark.sql("show databases").show() if __name__ == "__main__": pass