Scraping all second-hand housing listings:

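The site lists 27,650 second-hand homes in total, but some filter parameters cause problems. The site only returns 100 pages of results for any single query (100 pages × 30 listings = at most 3,000 listings). To reach the rest, you have to split the request URL into finer filter parameters and request each slice separately.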
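Here is a quick sketch of the arithmetic behind the 100-page limit. It assumes 30 listings per page, which is the page size the spider's `parse_detail` divides by. The sketch shows the per-query ceiling and a lower bound on how many filter slices 27,650 listings need. That bound assumes perfectly even slices, which real filters never produce.

```python
import math

# Assumptions: 100 pages per query (stated above) and 30 listings per page
# (the page size used in the spider's parse_detail).
MAX_PAGES = 100
PER_PAGE = 30
MAX_PER_QUERY = MAX_PAGES * PER_PAGE  # listings reachable through one query


def is_truncated(count):
    """True if a query matching `count` listings cannot be fully paged through."""
    return count > MAX_PER_QUERY


def min_slices(total):
    """Lower bound on the number of filter slices needed to reach every listing."""
    return math.ceil(total / MAX_PER_QUERY)


print(MAX_PER_QUERY)        # 3000
print(min_slices(27650))    # 10
print(is_truncated(27650))  # True
```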

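I only roughly collected the filter-condition parameters and did not refine the problematic ones. The crawl issued 21,096 requests and produced 19,794 actual records.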

Here are the crawl stats after the run finished:

```
{'downloader/exception_count': 199,
 'downloader/exception_type_count/twisted.internet.error.NoRouteError': 192,
 'downloader/exception_type_count/twisted.web._newclient.ResponseNeverReceived': 7,
 'downloader/request_bytes': 9878800,
 'downloader/request_count': 21096,
 'downloader/request_method_count/GET': 21096,
 'downloader/response_bytes': 677177525,
 'downloader/response_count': 20897,
 'downloader/response_status_count/200': 20832,
 'downloader/response_status_count/301': 49,
 'downloader/response_status_count/302': 11,
 'downloader/response_status_count/404': 5,
 'dupefilter/filtered': 53,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2018, 11, 12, 8, 49, 42, 371235),
 'httperror/response_ignored_count': 5,
 'httperror/response_ignored_status_count/404': 5,
 'log_count/DEBUG': 21098,
 'log_count/ERROR': 298,
 'log_count/INFO': 61,
 'request_depth_max': 3,
 'response_received_count': 20837,
 'retry/count': 199,
 'retry/reason_count/twisted.internet.error.NoRouteError': 192,
 'retry/reason_count/twisted.web._newclient.ResponseNeverReceived': 7,
 'scheduler/dequeued': 21096,
 'scheduler/dequeued/memory': 21096,
 'scheduler/enqueued': 21096,
 'scheduler/enqueued/memory': 21096,
 'spider_exceptions/TypeError': 298,
 'start_time': datetime.datetime(2018, 11, 12, 7, 59, 52, 608383)}
2018-11-12 16:49:42 [scrapy.core.engine] INFO: Spider closed (finished)
```
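The counters above can be cross-checked with plain Python. This sketch copies a subset of the values from the log and verifies that they add up. The checks describe only this run's numbers and are not general Scrapy guarantees.

```python
from datetime import datetime

# A subset of the Scrapy stats printed above (values copied from the log).
stats = {
    'downloader/request_count': 21096,
    'downloader/response_count': 20897,
    'downloader/exception_count': 199,
    'downloader/response_status_count/200': 20832,
    'downloader/response_status_count/301': 49,
    'downloader/response_status_count/302': 11,
    'downloader/response_status_count/404': 5,
    'log_count/ERROR': 298,
    'spider_exceptions/TypeError': 298,
    'start_time': datetime(2018, 11, 12, 7, 59, 52, 608383),
    'finish_time': datetime(2018, 11, 12, 8, 49, 42, 371235),
}

# In this run, requests = responses + downloader exceptions.
assert stats['downloader/request_count'] == (
    stats['downloader/response_count'] + stats['downloader/exception_count'])

# The per-status counters add up to the total response count.
status_total = sum(v for k, v in stats.items()
                   if k.startswith('downloader/response_status_count/'))
assert status_total == stats['downloader/response_count']

# The ERROR log count matches the number of TypeErrors raised in callbacks.
assert stats['log_count/ERROR'] == stats['spider_exceptions/TypeError']

# Wall-clock duration and average request rate.
elapsed = (stats['finish_time'] - stats['start_time']).total_seconds()
rate = stats['downloader/request_count'] / elapsed
print(round(elapsed), round(rate, 2))  # 2990 7.06
```

The 298 errors all come from spider TypeErrors, not from the network. The 199 network failures (`NoRouteError`, `ResponseNeverReceived`) were retried.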

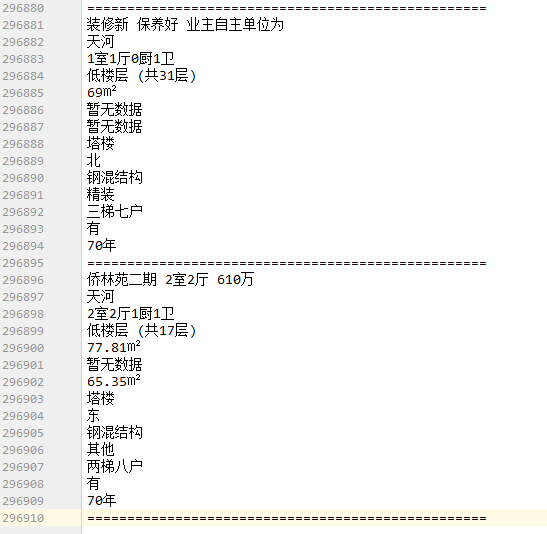

The collected data is shown in the figure:

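num = 296910 / 15 = 19,794 records. Here 296,910 is presumably the line count of the output file, and each record takes 15 lines: 14 fields plus one separator line.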
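Here is a minimal sketch for recounting the records. It assumes the output file `text2` stores one field per line, followed by a line of 50 `=` characters, which is the layout that makes 296910 / 15 come out even. `count_records` counts separator lines and `count_lines` counts all lines. Both are hypothetical helpers, not part of the original project.

```python
SEPARATOR = '=' * 50  # separator line written after each record


def count_records(path):
    """Count records by counting separator lines in the output file."""
    with open(path, encoding='utf-8') as f:
        return sum(1 for line in f if line.rstrip('\n') == SEPARATOR)


def count_lines(path):
    """Count all lines in the output file (like `wc -l`)."""
    with open(path, encoding='utf-8') as f:
        return sum(1 for _ in f)
```

On the real file, `count_lines('text2') / 15` and `count_records('text2')` should agree. If they differ, some field value probably contained a line break.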

2. lianjia.py

```python
# -*- coding: utf-8 -*-
import scrapy


class LianjiaSpider(scrapy.Spider):
    name = 'lianjia'
    allowed_domains = ['gz.lianjia.com']
    start_urls = ['https://gz.lianjia.com/ershoufang/pg1/']

    def parse(self, response):
        # Split the listings into 7 x 7 filter combinations (p1..p7 x a1..a7)
        # so that each combination stays under the 100-page cap
        for i in range(1, 8):
            for j in range(1, 8):
                url = 'https://gz.lianjia.com/ershoufang/p{}a{}pg1'.format(i, j)
                yield scrapy.Request(url=url, callback=self.parse_detail)

    def parse_detail(self, response):
        # Number of listings matching the current filter combination
        counts = response.xpath("//h2[@class='total fl']/span/text()").extract_first(default='0').strip()
        # 30 listings per page, rounded up. The original `if counts % 30 > 0`
        # left p_num undefined when counts was an exact multiple of 30.
        # The site serves at most 100 pages, so never request more than that.
        p_num = min((int(counts) + 29) // 30, 100)
        # Build the URL of every result page for this filter combination
        base_url = response.url.split('pg')[0]
        for k in range(1, p_num + 1):
            link_url = base_url + 'pg{}/'.format(k)
            yield scrapy.Request(url=link_url, callback=self.parse_detail2)

    def parse_detail2(self, response):
        # Detail-page links of all listings on the current result page
        link_urls = response.xpath("//div[@class='info clear']/div[@class='title']/a/@href").extract()
        for link_url in link_urls:
            yield scrapy.Request(url=link_url, callback=self.parse_detail3)

    def parse_detail3(self, response):
        # extract_first(default='') prevents the TypeError ('str' + None) that
        # the original raised whenever a field was missing from the page
        title = response.xpath("//div[@class='title']/h1[@class='main']/text()").extract_first(default='')
        print('Title: ' + title)
        dist = response.xpath("//div[@class='areaName']/span[@class='info']/a/text()").extract_first(default='')
        print('District: ' + dist)
        contents = response.xpath("//div[@class='introContent']/div[@class='base']")
        # Labels of li[1]..li[12] in the "basic attributes" list, in page order
        labels = ['House type', 'Floor', 'Built area', 'Layout structure',
                  'Inner area', 'Building type', 'Orientation', 'Building structure',
                  'Decoration', 'Elevator-to-unit ratio', 'Elevator', 'Property-rights term']
        fields = []
        for n, label in enumerate(labels, start=1):
            value = contents.xpath("./div[@class='content']/ul/li[{}]/text()".format(n)).extract_first(default='')
            print(label + ': ' + value)
            fields.append(value)
        # House labels (disabled in the original):
        # house_label = response.xpath("//div[@class='content']/a/text()").extract_first(default='')
        # print('House labels: ' + house_label)
        # Append the record: one field per line, then a separator line
        with open('text2', 'a', encoding='utf-8') as f:
            f.write('\n'.join([title, dist] + fields))
            f.write('\n' + '=' * 50 + '\n')
        print('-' * 100)
```
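The pagination step in `parse_detail` can be pulled out into a pure function and tested without crawling. `page_urls` below is a hypothetical helper that mirrors the spider's URL scheme: it cuts the URL at `pg` and appends `pg{k}/`. It also applies ceiling division and the 100-page cap.

```python
def page_urls(filter_url, count, per_page=30, max_pages=100):
    """Build the result-page URLs for one filter combination.

    Ceiling division gives an exact multiple of per_page its full page
    count; the result is capped at the site's 100-page limit.
    """
    pages = min((count + per_page - 1) // per_page, max_pages)
    base = filter_url.split('pg')[0]
    return [base + 'pg{}/'.format(k) for k in range(1, pages + 1)]


urls = page_urls('https://gz.lianjia.com/ershoufang/p1a1pg1', 60)
print(urls[-1])  # https://gz.lianjia.com/ershoufang/p1a1pg2/
```

A count of 60 (an exact multiple of 30) now yields two pages. With the original `if counts % 30 > 0` branch, the same count left `p_num` undefined.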

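3. To subdivide further, add more URL filter parameters to narrow each query. The result pages then become more precise, and you avoid filter combinations with more than 3,000 listings (the 100-page cap). Any combination that still exceeds the cap can be split again.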
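Here is a sketch of one round of that subdivision. It assumes one extra filter dimension whose values are written here as `l1`, `l2`, ... These values are hypothetical, so check the site's actual filter URLs before relying on them. `count_for` stands in for fetching a filter page and reading the total from `//h2[@class='total fl']/span`. Combinations that are still too large are returned separately so that another dimension can be applied to them.

```python
MAX_PER_QUERY = 100 * 30  # 100 pages x 30 listings per page


def subdivide(filters, extra_values, count_for):
    """Split every filter combination whose listing count exceeds MAX_PER_QUERY.

    filters: filter path segments such as 'p1a1'
    extra_values: values of one more (hypothetical) filter dimension
    count_for: callable returning the listing count for a filter segment
    Returns (segments small enough to crawl, segments still over the limit).
    """
    ok, still_too_big = [], []
    for f in filters:
        if count_for(f) <= MAX_PER_QUERY:
            ok.append(f)
            continue
        # Too many listings: narrow by the extra dimension
        for extra in extra_values:
            narrower = f + extra
            if count_for(narrower) <= MAX_PER_QUERY:
                ok.append(narrower)
            else:
                still_too_big.append(narrower)
    return ok, still_too_big
```

Each segment in the first list can then be fed into the same pagination logic as the spider's `parse_detail`.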