After setting up the HBase cluster, all the background daemons were running normally. For the setup guide, see:

HBase 2.1.3 Cluster Setup Guide

https://www.cndba.cn/dave/article/3322

However, opening the web UI failed with the following error:

```
2019-03-05 23:13:49,508 WARN [qtp1911600942-82] servlet.ServletHandler: /master-status
java.lang.IllegalArgumentException: org.apache.hbase.thirdparty.com.google.protobuf.InvalidProtocolBufferException: CodedInputStream encountered an embedded string or message which claimed to have negative size.
    at org.apache.hbase.thirdparty.com.google.protobuf.CodedInputStream.newInstance(CodedInputStream.java:155)
    at org.apache.hbase.thirdparty.com.google.protobuf.CodedInputStream.newInstance(CodedInputStream.java:133)
    at org.apache.hbase.thirdparty.com.google.protobuf.AbstractParser.parsePartialFrom(AbstractParser.java:162)
    at org.apache.hbase.thirdparty.com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:197)
    at org.apache.hbase.thirdparty.com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:203)
    at org.apache.hbase.thirdparty.com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:49)
    at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.parse(MasterAddressTracker.java:251)
    at org.apache.hadoop.hbase.zookeeper.MasterAddressTracker.getMasterInfoPort(MasterAddressTracker.java:87)
    at org.apache.hadoop.hbase.tmpl.master.BackupMasterStatusTmplImpl.renderNoFlush(BackupMasterStatusTmplImpl.java:50)
    at org.apache.hadoop.hbase.tmpl.master.BackupMasterStatusTmpl.renderNoFlush(BackupMasterStatusTmpl.java:119)
    at org.apache.hadoop.hbase.tmpl.master.MasterStatusTmplImpl.renderNoFlush(MasterStatusTmplImpl.java:423)
    at org.apache.hadoop.hbase.tmpl.master.MasterStatusTmpl.renderNoFlush(MasterStatusTmpl.java:397)
    at org.apache.hadoop.hbase.tmpl.master.MasterStatusTmpl.render(MasterStatusTmpl.java:388)
    at org.apache.hadoop.hbase.master.MasterStatusServlet.doGet(MasterStatusServlet.java:81)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)
    at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1780)
    at org.apache.hadoop.hbase.http.lib.StaticUserWebFilter$StaticUserFilter.doFilter(StaticUserWebFilter.java:112)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1767)
    at org.apache.hadoop.hbase.http.ClickjackingPreventionFilter.doFilter(ClickjackingPreventionFilter.java:48)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1767)
    at org.apache.hadoop.hbase.http.HttpServer$QuotingInputFilter.doFilter(HttpServer.java:1374)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1767)
    at org.apache.hadoop.hbase.http.NoCacheFilter.doFilter(NoCacheFilter.java:49)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1767)
    at org.apache.hadoop.hbase.http.NoCacheFilter.doFilter(NoCacheFilter.java:49)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1767)
    at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:583)
    at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:143)
    at org.eclipse.jetty.security.SecurityHandler.handle(SecurityHandler.java:548)
    at org.eclipse.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:226)
    at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1180)
    at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:513)
    at org.eclipse.jetty.server.session.SessionHandler.doScope(SessionHandler.java:185)
    at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1112)
    at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)
    at org.eclipse.jetty.server.handler.HandlerCollection.handle(HandlerCollection.java:119)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:134)
    at org.eclipse.jetty.server.Server.handle(Server.java:539)
    at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:333)
    at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:251)
    at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:283)
    at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:108)
    at org.eclipse.jetty.io.SelectChannelEndPoint$2.run(SelectChannelEndPoint.java:93)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.executeProduceConsume(ExecuteProduceConsume.java:303)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.produceConsume(ExecuteProduceConsume.java:148)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.run(ExecuteProduceConsume.java:136)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:671)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:589)
    at java.lang.Thread.run(Thread.java:748)
Caused by: org.apache.hbase.thirdparty.com.google.protobuf.InvalidProtocolBufferException: CodedInputStream encountered an embedded string or message which claimed to have negative size.
    at org.apache.hbase.thirdparty.com.google.protobuf.InvalidProtocolBufferException.negativeSize(InvalidProtocolBufferException.java:94)
    at org.apache.hbase.thirdparty.com.google.protobuf.CodedInputStream$ArrayDecoder.pushLimit(CodedInputStream.java:1212)
    at org.apache.hbase.thirdparty.com.google.protobuf.CodedInputStream.newInstance(CodedInputStream.java:146)
    ... 50 more
```
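The root `Caused by` line says the protobuf decoder was asked to read a message whose length is negative. A minimal sketch of how that can happen, under an assumption the log does not confirm: that `MasterAddressTracker.parse` skips a 4-byte `PBUF` magic prefix and passes `data.length - prefixLength` as the length to parse. If the znode it reads holds fewer than 4 bytes, that length goes negative. The class and method names below are hypothetical; the code only mimics the arithmetic and uses no HBase or protobuf classes.

```java
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical sketch: mimics the length arithmetic assumed for
 * MasterAddressTracker.parse (skip a magic prefix, parse the rest).
 * No HBase or protobuf classes are used.
 */
public class NegativeSizeSketch {
    // Assumption: protobuf-encoded znode data starts with the 4-byte magic "PBUF".
    static final byte[] PB_MAGIC = "PBUF".getBytes(StandardCharsets.US_ASCII);

    /** Length that would be handed to the protobuf parser for this znode payload. */
    static int payloadLength(byte[] znodeData) {
        return znodeData.length - PB_MAGIC.length;
    }

    /** Stand-in for the decoder check behind the "negative size" message. */
    static void checkLength(int len) {
        if (len < 0) {
            throw new IllegalArgumentException(
                "message which claimed to have negative size: " + len);
        }
    }

    public static void main(String[] args) {
        byte[] empty = new byte[0]; // e.g. a znode that holds no data
        int len = payloadLength(empty);
        System.out.println("payload length = " + len); // prints "payload length = -4"
        try {
            checkLength(len);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

In other words, the exception is a symptom of bad or missing master address data in ZooKeeper rather than a bug in the web page itself, which is why the Master log is the next place to look.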

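The stack trace shows a Java exception thrown from `MasterAddressTracker.getMasterInfoPort` while it parses the master address data read from ZooKeeper. Next, check the Master log: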

```
2019-03-05 23:02:31,284 INFO [main] server.AbstractConnector: Started ServerConnector@9b21bd3{HTTP/1.1,[http/1.1]}{0.0.0.0:16010}
2019-03-05 23:02:31,284 INFO [main] server.Server: Started @8427ms
2019-03-05 23:02:31,290 INFO [main] master.HMaster: hbase.rootdir=hdfs://192.168.56.100:9000/hbase, hbase.cluster.distributed=true
2019-03-05 23:02:31,340 INFO [master/hadoopMaster:16000:becomeActiveMaster] master.HMaster: Adding backup master ZNode /hbase/backup-masters/hadoopmaster,16000,1551798143799
2019-03-05 23:02:31,555 INFO [master/hadoopMaster:16000:becomeActiveMaster] master.ActiveMasterManager: Another master is the active master, null; waiting to become the next active master
```

Note the last line:

master.ActiveMasterManager: Another master is the active master, null; waiting to become the next active master

The active master is reported as `null`, and this `null` value is the main cause of the exception.
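To spot this symptom quickly in a Master log, the check can be reduced to pulling the server name out of the `ActiveMasterManager` line and testing whether it is the literal `null`. A minimal sketch follows; the class name and the regular expression are my own assumptions, derived only from the two log lines quoted in this post, not from HBase.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper (not part of HBase): reads the server name out of an
 * ActiveMasterManager "Another master is the active master" log line.
 */
public class ActiveMasterLogCheck {
    // Assumed pattern, based only on the log lines quoted in this post.
    private static final Pattern ACTIVE_MASTER =
        Pattern.compile("Another master is the active master, (.+?); waiting");

    /** Returns the reported active master name, or null if the line does not match. */
    static String activeMaster(String logLine) {
        Matcher m = ACTIVE_MASTER.matcher(logLine);
        return m.find() ? m.group(1).trim() : null;
    }

    /** True when the line matches but the reported active master is the literal "null". */
    static boolean reportsNullMaster(String logLine) {
        return "null".equals(activeMaster(logLine));
    }

    public static void main(String[] args) {
        String broken = "master.ActiveMasterManager: Another master is the active master, null;"
            + " waiting to become the next active master";
        String healthy = "master.ActiveMasterManager: Another master is the active master,"
            + " slave2,16000,1551799615662; waiting to become the next active master";
        System.out.println(reportsNullMaster(broken)); // true
        System.out.println(activeMaster(healthy));     // slave2,16000,1551799615662
    }
}
```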

Because HBase keeps this information in ZooKeeper, data left over from earlier tests may have interfered here. So I connected to ZooKeeper and deleted the /hbase znode.

For ZooKeeper commands, see the following post:

ZooKeeper Client zkCli Commands Explained

https://www.cndba.cn/dave/article/3300

```
[zk: 192.168.56.100:2181(CONNECTED) 0] ls /
[newacl, zookeeper, oracle0000000002, oracle0000000001, acl, ustc, cndba, hbase]
[zk: 192.168.56.100:2181(CONNECTED) 1] rmr /hbase
Node not empty: /hbase
[zk: 192.168.56.100:2181(CONNECTED) 2] get /hbase
cZxid = 0x200000009
ctime = Mon Mar 04 00:46:33 CST 2019
mZxid = 0x200000009
mtime = Mon Mar 04 00:46:33 CST 2019
pZxid = 0xe00000075
cversion = 21
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 1
[zk: 192.168.56.100:2181(CONNECTED) 3] ls /
[newacl, zookeeper, oracle0000000002, oracle0000000001, acl, ustc, cndba, hbase]
[zk: 192.168.56.100:2181(CONNECTED) 4] rmr /hbase
[zk: 192.168.56.100:2181(CONNECTED) 5]
```
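A single ZooKeeper delete only removes a node that has no children (otherwise it fails with "Node not empty"), so `rmr` has to work bottom up: delete every child subtree, then the node itself. (In ZooKeeper 3.5 and later the zkCli command is `deleteall`; `rmr` is deprecated.) The sketch below illustrates that post-order deletion on a hypothetical in-memory model of the znode tree; it does not talk to a real ZooKeeper server, and the class and method names are my own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

/**
 * Hypothetical in-memory model of a znode tree, used only to illustrate
 * why a plain delete of a non-empty node fails and how a recursive delete
 * removes children before their parent.
 */
public class RecursiveDeleteSketch {
    private final TreeSet<String> nodes = new TreeSet<>();

    void create(String path) { nodes.add(path); }

    boolean exists(String path) { return nodes.contains(path); }

    /** Direct children only: "path/" followed by a name with no further '/'. */
    List<String> children(String path) {
        List<String> result = new ArrayList<>();
        String prefix = path + "/";
        for (String n : nodes) {
            if (n.startsWith(prefix) && n.indexOf('/', prefix.length()) < 0) {
                result.add(n);
            }
        }
        return result;
    }

    /** Like a single ZooKeeper delete: refuses to remove a node that still has children. */
    void delete(String path) {
        if (!children(path).isEmpty()) {
            throw new IllegalStateException("Node not empty: " + path);
        }
        nodes.remove(path);
    }

    /** Post-order traversal: delete every child subtree first, then the node itself. */
    void deleteRecursive(String path) {
        for (String child : children(path)) {
            deleteRecursive(child);
        }
        delete(path);
    }

    public static void main(String[] args) {
        RecursiveDeleteSketch zk = new RecursiveDeleteSketch();
        zk.create("/hbase");
        zk.create("/hbase/backup-masters");
        zk.create("/hbase/backup-masters/hadoopmaster,16000,1551798143799");
        zk.create("/zookeeper");
        try {
            zk.delete("/hbase");
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // Node not empty: /hbase
        }
        zk.deleteRecursive("/hbase");
        System.out.println(zk.exists("/hbase") + " " + zk.exists("/zookeeper")); // false true
    }
}
```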

Start HMaster again:

```
[hadoop@hadoopMaster ~]$ hbase-daemon.sh start master
running master, logging to /home/hadoop/hbase/logs/hbase-hadoop-master-hadoopMaster.out
[hadoop@hadoopMaster ~]$
```

Check the log:

```
2019-03-05 23:27:53,834 INFO [main] server.AbstractConnector: Started ServerConnector@14ef2482{HTTP/1.1,[http/1.1]}{0.0.0.0:16010}
2019-03-05 23:27:53,834 INFO [main] server.Server: Started @3884ms
2019-03-05 23:27:53,839 INFO [main] master.HMaster: hbase.rootdir=hdfs://192.168.56.100:9000/hbase, hbase.cluster.distributed=true
2019-03-05 23:27:53,894 INFO [master/hadoopMaster:16000:becomeActiveMaster] master.HMaster: Adding backup master ZNode /hbase/backup-masters/hadoopmaster,16000,1551799670771
2019-03-05 23:27:53,934 INFO [master/hadoopMaster:16000] regionserver.HRegionServer: ClusterId : c64d0ebc-85ed-4e44-aba4-e8986351bb2c
2019-03-05 23:27:54,099 INFO [master/hadoopMaster:16000:becomeActiveMaster] master.ActiveMasterManager: Another master is the active master, slave2,16000,1551799615662; waiting to become the next active master
```

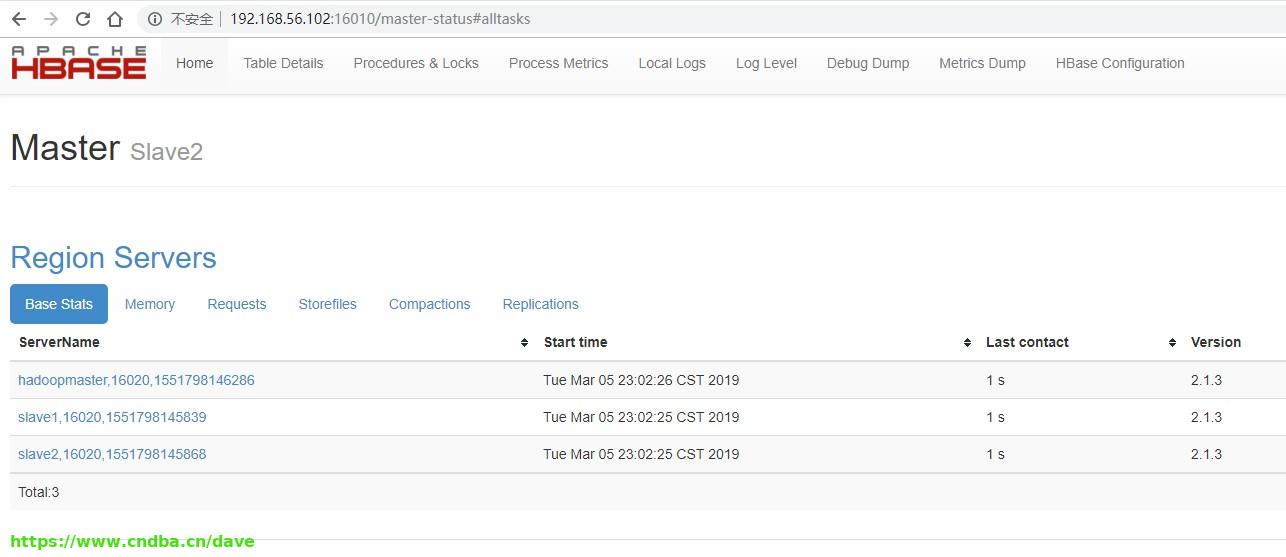

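This time the active master has a real value (slave2,16000,1551799615662). Checking the web page again, it is back to normal.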
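The value now reported is an HBase server name in the form `host,port,startcode`, where the start code is the server's start time in epoch milliseconds. A minimal sketch that splits such a string and converts the start code to a local time; the class is hypothetical and is not HBase's own `ServerName` class.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZonedDateTime;

/**
 * Hypothetical helper (not HBase's ServerName): splits a server name of the
 * form host,port,startcode as printed in the Master log.
 */
public class ServerNameSketch {
    final String host;
    final int port;
    final long startCode;

    ServerNameSketch(String host, int port, long startCode) {
        this.host = host;
        this.port = port;
        this.startCode = startCode;
    }

    static ServerNameSketch parse(String serverName) {
        String[] parts = serverName.split(",");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host,port,startcode: " + serverName);
        }
        return new ServerNameSketch(parts[0], Integer.parseInt(parts[1]), Long.parseLong(parts[2]));
    }

    /** Start code interpreted as epoch milliseconds in the given time zone. */
    ZonedDateTime startTime(ZoneId zone) {
        return Instant.ofEpochMilli(startCode).atZone(zone);
    }

    public static void main(String[] args) {
        ServerNameSketch sn = parse("slave2,16000,1551799615662");
        System.out.println(sn.host + " " + sn.port); // slave2 16000
        // prints 2019-03-05T23:26:55.662+08:00[Asia/Shanghai]
        System.out.println(sn.startTime(ZoneId.of("Asia/Shanghai")));
    }
}
```

The decoded start time (23:26:55 CST) is about a minute before the restarted HMaster on hadoopMaster logged its own startup, which fits slave2 having already become the active master while hadoopMaster waits as a backup.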