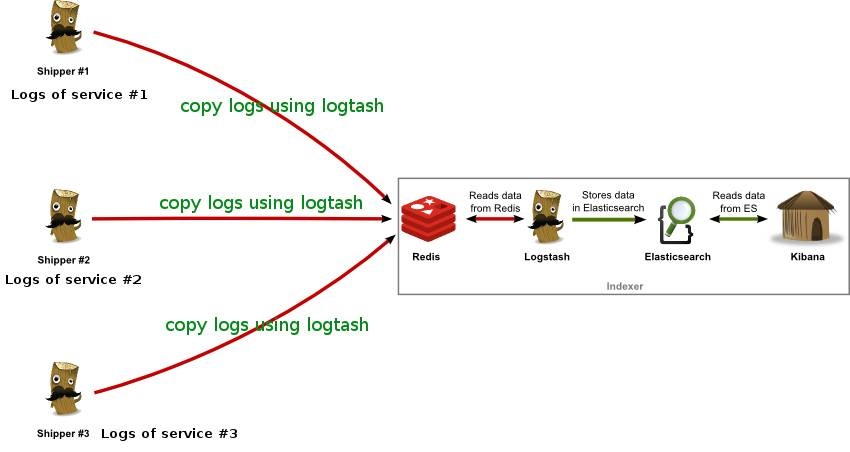

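Let's skip the small talk and get straight to the practical part, starting with the rough overall structure of the application. (I used two virtual machines throughout: the application and the Shipper are deployed on 192.168.25.128, while Redis and ELK are deployed on 192.168.25.129.)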

Controller:

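    import java.util.Random;

    import com.alibaba.fastjson.JSON;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestMethod;
    import org.springframework.web.bind.annotation.RestController;

    // User and ResponseData are the project's own classes (not shown here).
    @RestController
    @RequestMapping("/user")
    public class UserController {

        private Logger logger = LoggerFactory.getLogger(UserController.class);

        // Writes 1000 random users to the log as JSON, one log line per user.
        @RequestMapping(value = "/addUser", method = RequestMethod.GET)
        public Object addUser() {
            String[] names = {"Jack", "Tom", "John", "Arnold", "Jim", "James", "Rock"};
            String[] introduce = {"I like to collect rock albums", "I like to build cabinets",
                    "I like playing computer games", "I like climbing mountains",
                    "I love running", "I love eating", "I love drinking"};
            // One Random instance is enough; no need to create one per iteration.
            Random random = new Random();
            for (int i = 0; i < 1000; i++) {
                int tempNum = random.nextInt(7);
                User user = new User();
                user.setId(i);
                user.setAge(random.nextInt(20) + 20); // age in [20, 39]
                user.setIntroduce(introduce[tempNum]);
                user.setName(names[tempNum] + i);
                logger.info(JSON.toJSONString(user));
            }
            return new ResponseData(200, "Success");
        }
    }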
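The controller depends on a `User` class that the post does not show. Below is a minimal sketch of what it might look like, assuming only the four properties implied by the setters called above (`id`, `age`, `name`, `introduce`). The field types and the wrapper class `UserSketch` are assumptions for illustration, not the original project's code.

```java
// Hypothetical sketch: the real User class is not shown in the post.
// Field names and types are inferred from the setters used in UserController.
class UserSketch {

    static class User {
        private int id;
        private int age;
        private String name;
        private String introduce;

        public int getId() { return id; }
        public void setId(int id) { this.id = id; }

        public int getAge() { return age; }
        public void setAge(int age) { this.age = age; }

        public String getName() { return name; }
        public void setName(String name) { this.name = name; }

        public String getIntroduce() { return introduce; }
        public void setIntroduce(String introduce) { this.introduce = introduce; }
    }

    public static void main(String[] args) {
        // Build one user the same way the controller does inside its loop.
        User user = new User();
        user.setId(0);
        user.setAge(25);
        user.setName("Jack0");
        user.setIntroduce("I like to collect rock albums");
        System.out.println(user.getName() + ", " + user.getAge() + ": " + user.getIntroduce());
    }
}
```

Getters matter here because JSON serializers such as fastjson typically read bean properties through them when producing the logged JSON string.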

Start the Spring Boot application with nohup:

    nohup java -jar -Dserver.port=8080 -Dlog.root=/var/log/springboot-elk/ /opt/springboot-elk/springboot-elk.jar >/dev/null 2>&1 &

Logstash shipper configuration:

    input {
      file {
        path => "/var/log/springboot-elk/*"
        type => "springboot-elk"
      }
    }

    output {
      redis {
        data_type => "list"
        key => "springboot-elk-list"
        host => "192.168.25.129"
        port => 6379
      }
    }

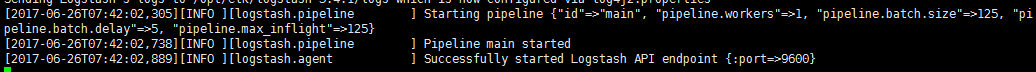

Make sure the Redis instance specified above is running, then start Logstash:

    bin/logstash -f config/logback-logstash-redis.conf

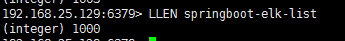

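Next, we access the application to generate logs.

The logs are generated successfully. Checking Redis, we can see that all of the data has entered the queue.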
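To make the buffering role of Redis concrete, here is a small in-memory model of a Redis list used as a queue. It is only a sketch: `ListQueue` and its methods are hypothetical stand-ins named after the Redis commands `RPUSH`, `LPOP` and `LLEN`, not a Redis client. It relies on the documented behavior that Logstash's redis output with `data_type => "list"` appends events with `RPUSH`, and the redis input pops them from the head of the list.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// In-memory model of a Redis list used as a FIFO buffer between the shipper
// and the indexer. This is an illustration only; it does not talk to Redis.
class RedisListSketch {

    static class ListQueue {
        private final Deque<String> items = new ArrayDeque<>();

        // Models RPUSH: append an event to the tail of the list.
        void rpush(String value) { items.addLast(value); }

        // Models LPOP: remove and return the head, or null if the list is empty.
        String lpop() { return items.pollFirst(); }

        // Models LLEN: number of events currently waiting in the list.
        int llen() { return items.size(); }
    }

    public static void main(String[] args) {
        ListQueue queue = new ListQueue();

        // Shipper side: one event per log line written by the application.
        for (int i = 0; i < 1000; i++) {
            queue.rpush("{\"id\":" + i + "}");
        }
        System.out.println("events waiting: " + queue.llen());

        // Indexer side: events come back out in the order they were pushed.
        String first = queue.lpop();
        System.out.println("first event read: " + first);
        System.out.println("events remaining: " + queue.llen());
    }
}
```

On a real instance, running `LLEN springboot-elk-list` in `redis-cli` should report the number of events still waiting, assuming nothing has consumed the list yet.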

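Next, we configure Logstash to read the data from Redis and write it to ES:

    input {
      redis {
        data_type => "list"
        key => "springboot-elk-list"
        host => "192.168.25.129"
        port => 6379
      }
    }

    output {
      elasticsearch {
        # Since 1.5.0, host can be set to an array; Logstash picks different nodes
        # from the list when sending data, which gives round-robin load balancing.
        # A single node can also be written in array form.
        hosts => ["192.168.25.129:9200","192.168.25.129:9201"]
        index => "springboot-elk"
        # user and password are not needed if X-Pack is not installed
        user => "elastic"
        password => "changeme"
      }
    }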
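The round-robin behavior mentioned for the `hosts` array can be sketched as follows. This is a generic illustration of round-robin selection over the two nodes from the configuration, assuming a simple counter; `HostSelector` is a hypothetical helper and is not how Logstash implements it internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Generic round-robin selection over a fixed list of hosts.
// Illustration only; this is not Logstash's internal implementation.
class RoundRobinSketch {

    static class HostSelector {
        private final List<String> hosts;
        private final AtomicInteger counter = new AtomicInteger();

        HostSelector(List<String> hosts) {
            if (hosts.isEmpty()) {
                throw new IllegalArgumentException("at least one host is required");
            }
            this.hosts = new ArrayList<>(hosts);
        }

        // Returns the hosts in turn, wrapping around at the end of the list.
        // floorMod keeps the index non-negative even if the counter overflows.
        String nextHost() {
            int index = Math.floorMod(counter.getAndIncrement(), hosts.size());
            return hosts.get(index);
        }
    }

    public static void main(String[] args) {
        HostSelector selector = new HostSelector(
                Arrays.asList("192.168.25.129:9200", "192.168.25.129:9201"));
        // Four consecutive requests alternate between the two nodes.
        for (int i = 0; i < 4; i++) {
            System.out.println("request " + i + " -> " + selector.nextHost());
        }
    }
}
```

With a single-element list the selector always returns the same host, which matches the note that a single node can also be written in array form.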

Once Logstash is running, it automatically reads data from the specified Redis queue and writes it to ES.

    bin/logstash -f config/redis-logstash-es.conf

Start Kibana, create the index, and check whether the data has reached ES:

As you can see, all 1000 records have made it into ES (^o^)/~ .