一、案例运行MapReduce Workflow

1、准备examples

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# pwd

/opt/cdh-5.3.6/oozie-4.0.0-cdh5.3.6

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# tar zxf oozie-examples.tar.gz //此压缩包默认存在

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# cd examples/

[root@hadoop-senior examples]# ls

apps input-data src

2、将examples目录上传到hdfs

##上传

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# /opt/cdh-5.3.6/hadoop-2.5.0-cdh5.3.6/bin/hdfs dfs -put examples examples

##查看

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -ls /user/root |grep examples

drwxr-xr-x - root supergroup 0 2019-05-10 14:01 /user/root/examples

3、修改配置

##先启动yarn、historyserver

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# sbin/yarn-daemon.sh start resourcemanager

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# sbin/yarn-daemon.sh start nodemanager

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# sbin/mr-jobhistory-daemon.sh start historyserver

##看一下hdfs上examples里的目录结构

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -ls /user/root/examples/apps/map-reduce

Found 5 items

-rw-r--r-- 1 root supergroup 1028 2019-05-10 14:01 /user/root/examples/apps/map-reduce/job-with-config-class.properties

-rw-r--r-- 1 root supergroup 1012 2019-05-10 14:01 /user/root/examples/apps/map-reduce/job.properties

drwxr-xr-x - root supergroup 0 2019-05-10 14:01 /user/root/examples/apps/map-reduce/lib

-rw-r--r-- 1 root supergroup 2274 2019-05-10 14:01 /user/root/examples/apps/map-reduce/workflow-with-config-class.xml

-rw-r--r-- 1 root supergroup 2559 2019-05-10 14:01 /user/root/examples/apps/map-reduce/workflow.xml

说明:workflow.xml文件必须在hdfs上; job.properties文件在本地有也可以

####修改 job.properties

nameNode=hdfs://hadoop-senior.ibeifeng.com:8020

jobTracker=hadoop-senior.ibeifeng.com:8032

queueName=default

examplesRoot=examples

oozie.coord.application.path=${nameNode}/user/${user.name}/${examplesRoot}/apps/map-reduce/workflow.xml

outputDir=map-reduce

##更新一下hdfs的文件内容,不更新应该也可以

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# /opt/cdh-5.3.6/hadoop-2.5.0-cdh5.3.6/bin/hdfs dfs -rm examples/apps/map-reduce/job.properties

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# /opt/cdh-5.3.6/hadoop-2.5.0-cdh5.3.6/bin/hdfs dfs -put examples/apps/map-reduce/job.properties examples/apps/map-reduce/

4、

##

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# bin/oozie help

##运行一个MapReduce job

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# bin/oozie job -oozie http://localhost:11000/oozie -config examples/apps/map-reduce/job.properties -run

job: 0000000-190510134749297-oozie-root-W

##

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -ls /user/root/examples/output-data/map-reduce

Found 2 items

-rw-r--r-- 1 root supergroup 0 2019-05-10 16:27 /user/root/examples/output-data/map-reduce/_SUCCESS

-rw-r--r-- 1 root supergroup 1547 2019-05-10 16:27 /user/root/examples/output-data/map-reduce/part-00000

oozie其实就是一个MapReduce,可以在yarn的web页面中看见,在oozie的页面中也可以看见;

##用命令行查看命令运行结果

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# bin/oozie job -oozie http://localhost:11000/oozie -info 0000000-190510134749297-oozie-root-W

二、自定义Workflow

1、关于workflow

工作流引擎Oozie(驭象者),用于管理Hadoop任务(支持MapReduce、Spark、Pig、Hive),把这些任务以DAG(有向无环图)方式串接起来。

Oozie任务流包括:coordinator、workflow;workflow描述任务执行顺序的DAG,而coordinator则用于定时任务触发,相当于workflow的定时管理器,其触发条件包括两类:

1. 数据文件生成

2. 时间条件

workflow定义语言是基于XML的,它被称为hPDL(Hadoop过程定义语言)。

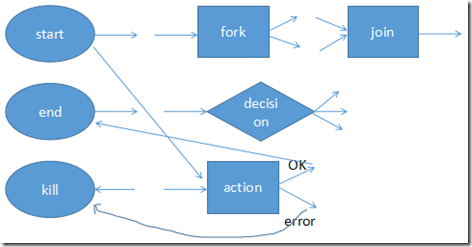

workflow节点:

控制流节点(Control Flow Nodes)

动作节点(Action Nodes)

其中,控制流节点定义了流程的开始和结束(start、end),以及控制流程的执行路径(Execution Path),如decision、fork、join等;

而动作节点包括Hadoop任务、SSH、HTTP、eMail和Oozie子流程等。

节点名称和转换必须符合以下模式=[a-zA-Z][-_a-zA-Z0-0]*=,最多20个字符。

start—>action—(ok)-->end

start—>action—(error)-->end

2、Workflow Action Nodes

Action Computation/Processing Is Always Remote

Actions Are Asynchronous

Actions Have 2 Transitions, ok and error

Action Recovery

三、MapReduce action

1、workflow

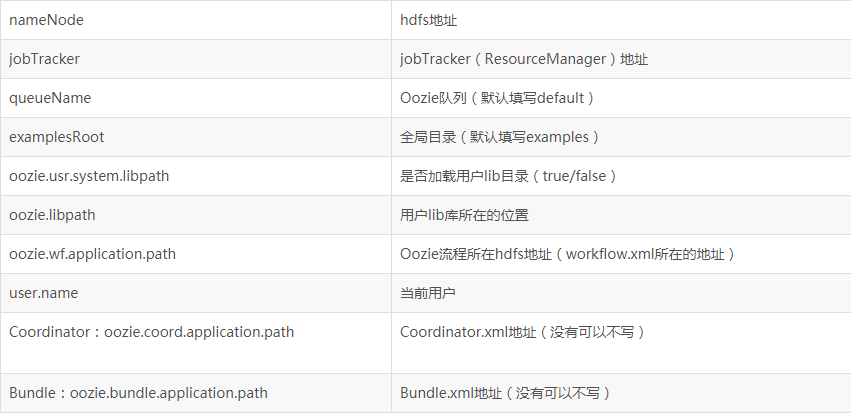

Oozie中WorkFlow包括job.properties、workflow.xml 、lib 目录(依赖jar包)三部分组成。

job.properties配置文件中包括nameNode、jobTracker、queueName、oozieAppsRoot、oozieDataRoot、oozie.wf.application.path、inputDir、outputDir,

其关键点是指向workflow.xml文件所在的HDFS位置。

##############

job.properties

关键点:指向workflow.xml文件所在的HDFS位置

workflow.xml (该文件需存放在HDFS上)

包含几点:

*start

*action

*MapReduce、Hive、Sqoop、Shell

ok

error

*kill

*end

lib 目录 (该目录需存放在HDFS上)

依赖jar包

2、MapReduce action

可以将map-reduce操作配置为在启动map reduce作业之前执行文件系统清理和目录创建,MapReduce的输入目录不能存在;

工作流作业将等待Hadoop map/reduce作业完成,然后继续工作流执行路径中的下一个操作。

Hadoop作业的计数器和作业退出状态(=FAILED=、kill或succeed)必须在Hadoop作业结束后对工作流作业可用。

map-reduce操作必须配置所有必要的Hadoop JobConf属性来运行Hadoop map/reduce作业。

四、新API中MapReduce Action

1、准备目录

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# mkdir -p oozie-apps/mr-wordcount-wf/lib

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# ls oozie-apps/mr-wordcount-wf/

job.properties lib workflow.xml //job.properties workflow.xml这两个文件可以从其他地方copy过来再修改

2、job.properties

nameNode=hdfs://hadoop-senior.ibeifeng.com:8020

jobTracker=hadoop-senior.ibeifeng.com:8032

queueName=default

oozieAppsRoot=user/root/oozie-apps

oozieDataRoot=user/root/oozie/datas

oozie.wf.application.path=${nameNode}/${oozieAppsRoot}/mr-wordcount-wf/workflow.xml

inputDir=mr-wordcount-wf/input

outputDir=mr-wordcount-wf/output

3、workflow.xml

<workflow-app xmlns="uri:oozie:workflow:0.5" name="mr-wordcount-wf"> <start to="mr-node-wordcount"/> <action name="mr-node-wordcount"> <map-reduce> <job-tracker>${jobTracker}</job-tracker> <name-node>${nameNode}</name-node> <prepare> <delete path="${nameNode}/${oozieDataRoot}/${outputDir}"/> </prepare> <configuration> <property> <name>mapred.mapper.new-api</name> <value>true</value> </property> <property> <name>mapred.reducer.new-api</name> <value>true</value> </property> <property> <name>mapreduce.job.queuename</name> <value>${queueName}</value> </property> <property> <name>mapreduce.job.map.class</name> <value>com.ibeifeng.hadoop.senior.mapreduce.WordCount$WordCountMapper</value> </property> <property> <name>mapreduce.job.reduce.class</name> <value>com.ibeifeng.hadoop.senior.mapreduce.WordCount$WordCountReducer</value> </property> <property> <name>mapreduce.map.output.key.class</name> <value>org.apache.hadoop.io.Text</value> </property> <property> <name>mapreduce.map.output.value.class</name> <value>org.apache.hadoop.io.IntWritable</value> </property> <property> <name>mapreduce.job.output.key.class</name> <value>org.apache.hadoop.io.Text</value> </property> <property> <name>mapreduce.job.output.value.class</name> <value>org.apache.hadoop.io.IntWritable</value> </property> <property> <name>mapreduce.input.fileinputformat.inputdir</name> <value>${nameNode}/${oozieDataRoot}/${inputDir}</value> </property> <property> <name>mapreduce.output.fileoutputformat.outputdir</name> <value>${nameNode}/${oozieDataRoot}/${outputDir}</value> </property> </configuration> </map-reduce> <ok to="end"/> <error to="fail"/> </action> <kill name="fail"> <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message> </kill> <end name="end"/> </workflow-app>

4、创建hdfs目录和数据,并运行

##

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -mkdir -p /user/root/oozie/datas/mr-wordcount-wf/input

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -put /opt/datas/wc.input /user/root/oozie/datas/mr-wordcount-wf/input

##把oozie-apps目录上传到hdfs上

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# /opt/cdh-5.3.6/hadoop-2.5.0-cdh5.3.6/bin/hdfs dfs -put oozie-apps/ oozie-apps

##执行oozie job

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# export OOZIE_URL=http://hadoop-senior.ibeifeng.com:11000/oozie/

[root@hadoop-senior oozie-4.0.0-cdh5.3.6]# bin/oozie job -config oozie-apps/mr-wordcount-wf/job.properties -run

此时可以在oozie 和yarn的web上看到job

##运行成功,查看运行结果

[root@hadoop-senior hadoop-2.5.0-cdh5.3.6]# bin/hdfs dfs -text /user/root/oozie/datas/mr-wordcount-wf/output/part-r-00000

hadoop 4

hdfs 1

hive 1

hue 1

mapreduce 1

五、workflow编程要点

如何定义一个WorkFlow:

*job.properties

关键点:指向workflow.xml文件所在的HDFS位置

*workflow.xml

定义文件

XML文件

包含几点

*start

*action

MapReduce、Hive、Sqoop、Shelll

*ok

*fail

*kil1

*end

*1ib目录

依赖的jar包

workflow.xml编写:

*流程控制节点

*Action节点

MapReduce Action:

如何使用ooize调度MapReduce程序

关键点:

将以前Java MapReduce程序中的【Driver】部分

||

configuration

##使用新API的配置

<property> <name>mapred.mapper.new-api</name> <value>true</value> </property> <property> <name>mapred.reducer.new-api</name> <value>true</value> </property>