    # Naive: the probability of a feature (word) occurring is assumed to be
    # independent of the words next to it
    # Every feature carries equal weight
    from numpy import *  # array, ones, log, sum (and random, used later) come from numpy


    def loadDataSet():
        postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                       ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                       ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                       ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                       ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                       ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
        classVec = [0, 1, 0, 1, 0, 1]  # 1 = abusive post, 0 = normal post
        return postingList, classVec


    # Build the vocabulary: a list of the unique words across all documents
    def createVocabList(dataSet):
        vocabSet = set()  # start from an empty set
        for document in dataSet:
            vocabSet |= set(document)
        return list(vocabSet)


    # Set-of-words vector: mark which vocabulary words occur in the document
    def setOfWordsVec(vocabSet, inputSet):
        returnVec = [0] * len(vocabSet)
        for word in inputSet:
            if word in vocabSet:
                returnVec[vocabSet.index(word)] = 1
            else:
                print("the word: %s is not in the Vocabulary!" % word)
        return returnVec


    def trainNB0(trainMatrix, trainCategory):
        numTrainDocs = len(trainMatrix)   # number of documents (rows)
        numWords = len(trainMatrix[0])    # vocabulary size (columns)
        pAbusive = sum(trainCategory) / float(numTrainDocs)  # p(c1)
        # Laplace smoothing: every word count starts at 1
        p0Num = ones(numWords)
        p1Num = ones(numWords)
        # The book uses p0Denom = 2.0; p1Denom = 2.0, and I don't know why.
        # From what I read online, p(x1|c1) = (n1 + 1) / (n + N);
        # for the probabilities to sum to 1, N should be numWords.
        p0Denom = 1.0 * numWords
        p1Denom = 1.0 * numWords
        for i in range(numTrainDocs):
            if trainCategory[i] == 1:            # this document is abusive
                p1Num += trainMatrix[i]          # element-wise vector addition
                p1Denom += sum(trainMatrix[i])
            else:
                p0Num += trainMatrix[i]
                p0Denom += sum(trainMatrix[i])
        p1Vect = log(p1Num / p1Denom)
        p0Vect = log(p0Num / p0Denom)
        return p0Vect, p1Vect, pAbusive


    def classifyNB(vecOClassify, p0Vec, p1Vec, p1Class):
        # Compare log-posteriors: sum of the log-likelihoods plus the log-prior
        p1 = sum(vecOClassify * p1Vec) + log(p1Class)
        p0 = sum(vecOClassify * p0Vec) + log(1 - p1Class)
        if p1 > p0:
            return 1
        else:
            return 0


    def main():
        listOPosts, listClasses = loadDataSet()
        myVocabList = createVocabList(listOPosts)  # list of unique words
        trainMat = []
        for postinDoc in listOPosts:
            trainMat.append(setOfWordsVec(myVocabList, postinDoc))
        p0V, p1V, pC1 = trainNB0(trainMat, listClasses)

        testEntry = ['love', 'my', 'dalmation']
        thisDoc = array(setOfWordsVec(myVocabList, testEntry))
        print(testEntry, "classified as: ", classifyNB(thisDoc, p0V, p1V, pC1))

        testEntry = ['stupid', 'garbage']
        thisDoc = array(setOfWordsVec(myVocabList, testEntry))
        print(testEntry, "classified as: ", classifyNB(thisDoc, p0V, p1V, pC1))

        testEntry = ['stupid', 'cute', 'love', 'help']
        thisDoc = array(setOfWordsVec(myVocabList, testEntry))
        print(testEntry, "classified as: ", classifyNB(thisDoc, p0V, p1V, pC1))


    main()

Output:

    ['love', 'my', 'dalmation'] classified as: 0
    ['stupid', 'garbage'] classified as: 1
    ['stupid', 'cute', 'love', 'help'] classified as: 0
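The classifier above adds log-probabilities instead of multiplying raw probabilities. A minimal sketch of why that matters, using made-up per-word probabilities (the values and the names `word_probs`, `product` and `log_sum` are illustrative assumptions, not taken from the book):

```python
import math

# Hypothetical conditional probabilities for a 200-word document
word_probs = [0.01] * 200

# Multiplying many small numbers underflows to 0.0 in floating point
product = 1.0
for p in word_probs:
    product *= p

# Summing logarithms keeps the value finite and still comparable
log_sum = sum(math.log(p) for p in word_probs)

print(product)   # the product has underflowed
print(log_sum)   # a large negative but finite number
```

Because the logarithm is monotonic, comparing the two log-sums picks the same class as comparing the two products would, without the underflow.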

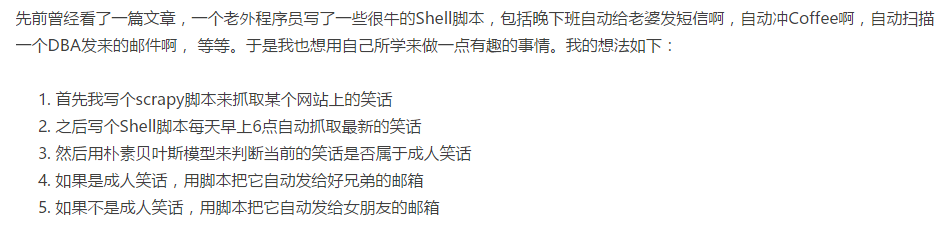

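After studying for a while, I've found that ML in Action really does focus on the code. It contains very little mathematical derivation, so I keep having to look things up elsewhere.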

Link: Bayesian networks, Laplace smoothing

Link: Naive Bayes

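After reading about Laplace smoothing, I think the book has a problem. To avoid zero probabilities, it adds one to every count in the numerator, but it sets the denominators to p0Denom = 2.0; p1Denom = 2.0, and I don't understand why.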

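According to the articles linked above:

p(x1|c1) = (n1 + 1) / (n + N)

Here n1 is the count of word x1 in class c1, n is the total word count for class c1, and N is the vocabulary size (the number of distinct words). Adding N to the denominator makes the smoothed probabilities sum to 1.

So I use numWords here.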
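The normalization argument can be checked numerically. The following is only a sketch on hypothetical toy data: `smoothed_probs`, `vocab` and `abusive_tokens` are illustrative names that appear neither in the book nor in the code above.

```python
from collections import Counter


def smoothed_probs(class_tokens, vocab, denom_offset):
    """Return {word: (count + 1) / (total + denom_offset)} for each vocab word."""
    counts = Counter(class_tokens)
    total = len(class_tokens)
    return {w: (counts[w] + 1) / (total + denom_offset) for w in vocab}


# Hypothetical toy data: a 5-word vocabulary and the words seen in one class
vocab = ['dog', 'stupid', 'cute', 'garbage', 'love']
abusive_tokens = ['stupid', 'dog', 'stupid', 'garbage']

# Denominator offset N = vocabulary size, as in the formula above
with_vocab_size = smoothed_probs(abusive_tokens, vocab, len(vocab))
# Denominator offset 2.0, as in the book's listing
with_two = smoothed_probs(abusive_tokens, vocab, 2.0)

print(sum(with_vocab_size.values()))  # sums to 1 (up to rounding)
print(sum(with_two.values()))         # does not sum to 1
```

With N equal to the vocabulary size, the extra 1 added to each of the N numerators is exactly balanced by the N added to the denominator, which is why the total stays at 1.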

Putting what I learn into practice feels great, haha.

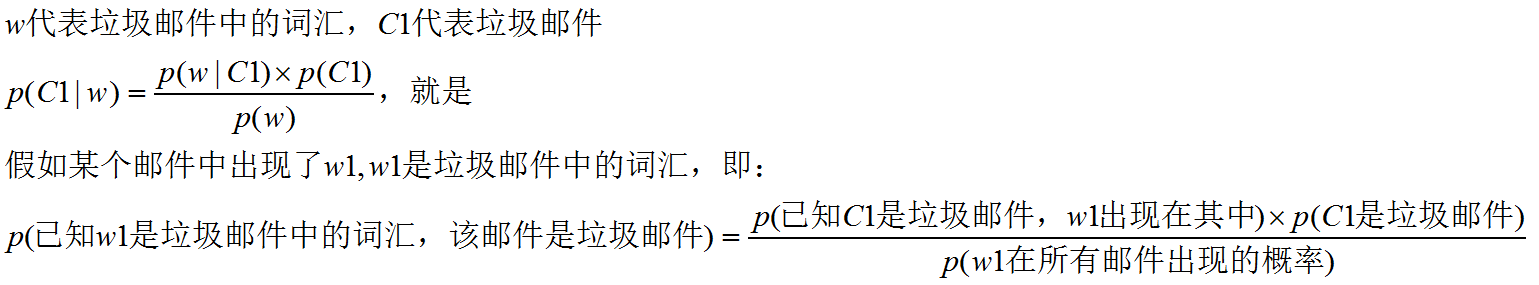

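Today's example: filtering spam email with naive Bayes.

(Writing the formulas in PowerPoint is still the most convenient.)

I noticed that the code printed in the book differs in places from the code in its accompanying repository. I suspect something went wrong during translation.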

    import re


    def textParse(bigString):
        # \W matches any non-word character, i.e. anything outside [a-zA-Z0-9_];
        # split on runs of such characters
        listOfTokens = re.split(r'\W+', bigString)
        return [tok.lower() for tok in listOfTokens if len(tok) > 2]


    def spamTest():  # spam filtering
        docList = []; classList = []; fullText = []
        for i in range(1, 26):
            # email/spam holds the spam messages
            wordList = textParse(open('email/spam/%d.txt' % i).read())
            docList.append(wordList)
            fullText.extend(wordList)
            classList.append(1)
            wordList = textParse(open('email/ham/%d.txt' % i).read())
            docList.append(wordList)
            fullText.extend(wordList)
            classList.append(0)
        vocabList = createVocabList(docList)

        # 40 documents for training, 10 for testing
        trainingSet = list(range(50)); testSet = []
        for i in range(10):
            randIndex = int(random.uniform(0, len(trainingSet)))
            testSet.append(trainingSet[randIndex])
            # Deleting the chosen entry means no document is picked twice;
            # only the list position randIndex can repeat
            del trainingSet[randIndex]

        # bagOfWordsVec is not defined in this post; it is assumed to be the
        # counting (bag-of-words) version of setOfWordsVec
        trainMat = []; trainClasses = []
        for docIndex in trainingSet:
            trainMat.append(bagOfWordsVec(vocabList, docList[docIndex]))
            trainClasses.append(classList[docIndex])
        p0V, p1V, pSpam = trainNB0(array(trainMat), array(trainClasses))

        errorCount = 0
        for docIndex in testSet:
            wordVector = bagOfWordsVec(vocabList, docList[docIndex])
            if classifyNB(array(wordVector), p0V, p1V, pSpam) != classList[docIndex]:
                errorCount += 1
                print("the error text %s" % docList[docIndex])
        print("error rate: %f " % (float(errorCount) / len(testSet)))


    spamTest()
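The spam test calls `bagOfWordsVec`, which does not appear anywhere in this post. A minimal sketch of what it presumably does, assuming it mirrors `setOfWordsVec` but counts occurrences instead of only marking presence (the body and the toy `vocab` and `doc` lists are assumptions):

```python
def bagOfWordsVec(vocabList, inputSet):
    """Count how many times each vocabulary word occurs in inputSet."""
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            # += 1 rather than = 1: repeated words raise the count
            returnVec[vocabList.index(word)] += 1
    return returnVec


# Hypothetical toy input, not taken from the email data set
vocab = ['cheap', 'pills', 'hello']
doc = ['cheap', 'cheap', 'pills', 'now']
counts = bagOfWordsVec(vocab, doc)
print(counts)  # [2, 1, 0]
```

Words outside the vocabulary, such as `'now'` here, are silently ignored in this sketch rather than reported.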