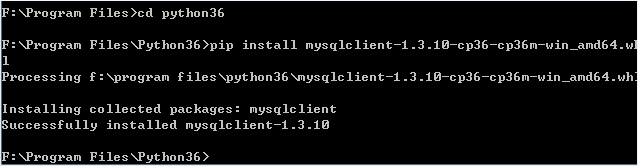

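1. Download the Python MySQL driver wheel that matches your Python version and platform. Mine was `pymssql-2.2.0.dev0-cp36-cp36m-win_amd64.whl` (Python 3.6, 64-bit Windows). Note that `pymssql` is actually a Microsoft SQL Server driver. The code below imports `MySQLdb`, which is provided by the `mysqlclient` package (`pip install mysqlclient`).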
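Before running the scraper, it helps to confirm that the driver is installed in the interpreter you will actually use. The minimal sketch below uses the standard library's `importlib.util.find_spec`, which locates a module without importing it. The helper name `has_module` is illustrative only.

```python
import importlib.util


def has_module(name):
    """Return True if top-level module `name` can be found, without importing it."""
    return importlib.util.find_spec(name) is not None


if __name__ == '__main__':
    # (module to import, pip package that provides it)
    for module, package in [('MySQLdb', 'mysqlclient'), ('requests', 'requests')]:
        if has_module(module):
            print(module, 'OK')
        else:
            print(module, 'missing, try: pip install %s' % package)
```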

2. Create the table manually

```sql
create table grilsbase (
    id int primary key auto_increment,
    name varchar(50),
    height varchar(50),
    bwh varchar(50),
    title varchar(100),
    img_upload varchar(100),
    pc_img_upload varchar(100),
    resource_id varchar(50),
    totals varchar(50),
    recommend_id varchar(50),
    date varchar(50),
    headimg_upload varchar(50),
    show_datetime varchar(50),
    client_show_datetime varchar(50),
    video_duration varchar(50),
    free_select varchar(50),
    trial_time varchar(50),
    viewtimes varchar(50),
    coop_customselect_654 varchar(50),
    coop_id varchar(50),
    tag_class varchar(50),
    tag_name varchar(50),
    playerid varchar(50),
    block_detailid varchar(50),
    type varchar(50),
    istop varchar(50)
);
```
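To check the column list and a parameterized insert without a MySQL server, the sketch below recreates the same 25 data columns in an in-memory SQLite database using Python's built-in `sqlite3`. This is a stand-in for illustration, not the MySQL setup: SQLite writes `auto_increment` as `AUTOINCREMENT` and uses `?` placeholders where MySQLdb uses `%s`. The sample row is made up.

```python
import sqlite3

# The 25 data columns of grilsbase, in the same order as the CREATE TABLE above
COLUMNS = [
    'name', 'height', 'bwh', 'title', 'img_upload', 'pc_img_upload', 'resource_id',
    'totals', 'recommend_id', 'date', 'headimg_upload', 'show_datetime',
    'client_show_datetime', 'video_duration', 'free_select', 'trial_time', 'viewtimes',
    'coop_customselect_654', 'coop_id', 'tag_class', 'tag_name', 'playerid',
    'block_detailid', 'type', 'istop',
]


def create_table(conn):
    # SQLite dialect: every data column as TEXT, auto-incrementing integer id
    cols = ', '.join('%s TEXT' % c for c in COLUMNS)
    conn.execute('CREATE TABLE grilsbase (id INTEGER PRIMARY KEY AUTOINCREMENT, %s)' % cols)


def insert_record(conn, record):
    # One placeholder per column; the driver quotes the values, so a title
    # containing an apostrophe cannot break the statement
    sql = 'INSERT INTO grilsbase (%s) VALUES (%s)' % (
        ', '.join(COLUMNS), ', '.join('?' * len(COLUMNS)))
    # Missing keys are stored as '' (mirroring data.get(key, '') in the scraper)
    conn.execute(sql, [str(record.get(c, '')) for c in COLUMNS])
    conn.commit()


if __name__ == '__main__':
    conn = sqlite3.connect(':memory:')
    create_table(conn)
    insert_record(conn, {'name': 'demo', 'title': "It's a title", 'totals': 3})
    print(conn.execute('SELECT id, name, title, totals FROM grilsbase').fetchall())
    # [(1, 'demo', "It's a title", '3')]
```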

3. Implement the scraper

Modules used: requests, os, json, re, MySQLdb (plus threading)

Workflow: fetch the data => analyze it => parse it => persist it
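The fetch, analyze and parse stages can be exercised offline. The sketch below assumes the JS file has the shape `var ugirlsData=[...]`, which is what the scraper's own regex expects; like that regex, it also assumes the array sits on a single line, because `.` does not match newlines by default. It pulls out the JSON array, loads it, and derives each gallery's image URLs from `resource_id` and `totals` the same way `DownImg` does. The sample payload and the function names are hypothetical.

```python
import json
import re

# Same URL template as gilsImgUrl in the scraper
IMG_URL = 'http://data.meitu.xunlei.com/data/image/%s/%s'
PAYLOAD_RE = re.compile(r'var ugirlsData=(.+)')


def extract_records(js_text):
    """Take the JSON after 'var ugirlsData=' and parse it into a list of dicts."""
    match = PAYLOAD_RE.search(js_text)
    if match is None:
        return []
    # Drop a trailing semicolon, if present, so json.loads accepts the text
    return json.loads(match.group(1).strip().rstrip(';'))


def gallery_urls(record):
    """Image URLs 1.jpg .. <totals>.jpg for one record."""
    return [IMG_URL % (record['resource_id'], '%d.jpg' % i)
            for i in range(1, int(record['totals']) + 1)]


if __name__ == '__main__':
    # Hypothetical sample; real records carry many more fields
    sample = 'var ugirlsData=[{"name": "demo", "resource_id": "123", "totals": "2"}];'
    for rec in extract_records(sample):
        print(rec['name'], gallery_urls(rec))
```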

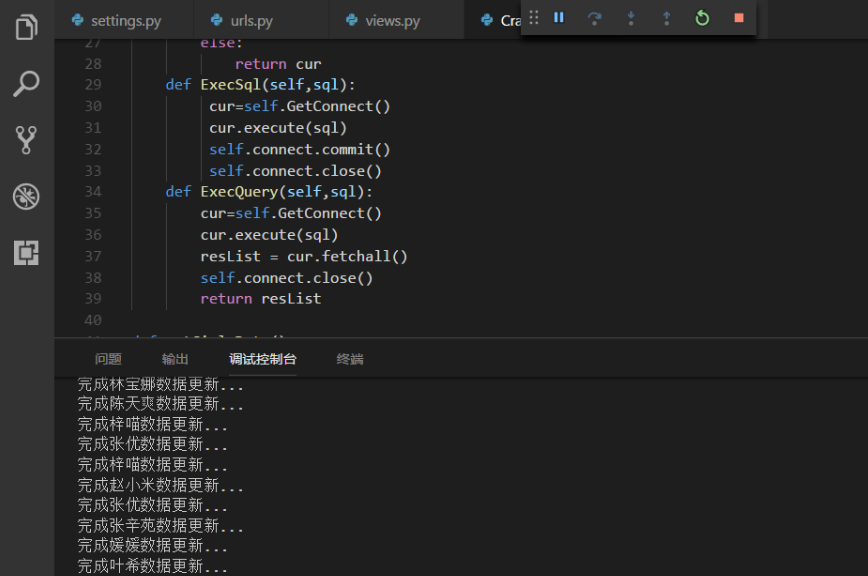

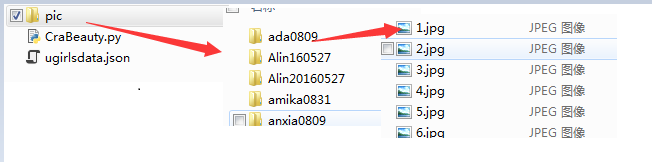

```python
# coding: utf-8
import json
import os
import re
import threading

import MySQLdb  # provided by the mysqlclient package
import requests

# Data source URLs
gilsUrl = 'http://act.vip.xunlei.com/ugirls/js/ugirlsdata.js'
gilsDetailUrl = 'http://meitu.xunlei.com/detail.html'
gilsImgUrl = 'http://data.meitu.xunlei.com/data/image/%s/%s'
# Intended cap of 10 concurrent downloads; unused while downloads run sequentially
executor = threading.BoundedSemaphore(10)
# Last path segment including ".jpg", e.g. ".../abc.jpg" -> "abc.jpg"
regex = re.compile(r'/([^/]*?\.jpg)$')
# Last path segment without ".jpg", e.g. ".../abc.jpg" -> "abc"
regexhead = re.compile(r'/([^/]*?)\.jpg$')


class MySQL:
    def __init__(self, host, user, pwd, db):
        self.host = host
        self.user = user
        self.db = db
        self.pwd = pwd

    def GetConnect(self):
        if not self.db:
            raise NameError('No target database')
        self.connect = MySQLdb.connect(host=self.host, user=self.user, password=self.pwd,
                                       database=self.db, port=3306, charset='utf8')
        cur = self.connect.cursor()
        if not cur:
            raise NameError('Database access failed')
        return cur

    def ExecSql(self, sql, params=None):
        # The driver escapes params, so quotes in the data cannot break the SQL
        cur = self.GetConnect()
        cur.execute(sql, params)
        self.connect.commit()
        self.connect.close()

    def ExecQuery(self, sql, params=None):
        cur = self.GetConnect()
        cur.execute(sql, params)
        resList = cur.fetchall()
        self.connect.close()
        return resList


def getGirlsData():
    # The JS file assigns the data as: var ugirlsData=[...]
    dataregex = re.compile(r"var ugirlsData=(.+)")
    r = requests.get(gilsUrl, timeout=10)
    jsond = dataregex.findall(r.text)
    # Drop a trailing semicolon, if any, so json.loads accepts the text
    payload = jsond[0].strip().rstrip(';')
    with open('ugirlsdata.json', 'w', encoding='utf-8') as f:
        f.write(payload)
    return json.loads(payload)


def getImgName(imgurl):
    # ".../abc.jpg" -> "abc.jpg"; '' if empty or no match
    m = regex.findall(imgurl or '')
    return m[0] if m else ''


def getImgNameHead(imgurl):
    # ".../abc.jpg" -> "abc"; '' if empty or no match
    m = regexhead.findall(imgurl or '')
    return m[0] if m else ''


def WriteDB(jsdata):
    ms = MySQL(host="192.168.0.108", user="lin", pwd="12345678", db="grils")
    # 25 columns -> 25 %s placeholders, filled and quoted by MySQLdb
    sql = ("insert into grilsbase("
           "name,height,bwh,title,img_upload,pc_img_upload,resource_id,totals,recommend_id,"
           "date,headimg_upload,show_datetime,client_show_datetime,video_duration,free_select,trial_time,"
           "viewtimes,coop_customselect_654,coop_id,tag_class,tag_name,playerid,block_detailid,type,istop) "
           "values(" + ",".join(["%s"] * 25) + ")")
    for data in jsdata:
        params = (data['name'], data['height'], data['bwh'], data['title'],
                  getImgName(data.get('img_upload', '')), data['pc_img_upload'],
                  data['resource_id'], data['totals'], data['recommend_id'],
                  data['date'], getImgName(data.get('headimg_upload', '')),
                  data['show_datetime'], data['client_show_datetime'], data['video_duration'],
                  data['free_select'], data['trial_time'],
                  data['viewtimes'], data['coop_customselect_654'], data['coop_id'],
                  data.get('tag_class', ''), data.get('tag_name', ''), data.get('playerid', ''),
                  data['block_detailid'], data['type'], data['istop'])
        ms.ExecSql(sql, params)
        print('Finished updating data for ' + data['name'] + '...')
        DownImg(data['name'], data['totals'], data['resource_id'],
                data.get('headimg_upload') or '', data.get('img_upload') or '')


def DownImg(name, totals, resource_id, headimg_upload, img_upload):
    path = creatFile(resource_id)
    if headimg_upload.strip() != '':
        DownImgRun(headimg_upload, path, getImgNameHead(headimg_upload))
    if img_upload.strip() != '':
        DownImgRun(img_upload, path, getImgNameHead(img_upload))
    # Gallery images are numbered 1.jpg .. <totals>.jpg
    for i in range(1, int(totals) + 1):
        url = gilsImgUrl % (resource_id, str(i) + '.jpg')
        DownImgRun(url, path, i)
        # Threaded alternative: args must be a tuple (a set has no order)
        # t = threading.Thread(target=DownImgRun, args=(url, path, i))
        # t.start()


def DownImgRun(url, path, i):
    # Save the image as <path>/<i>.jpg; skip non-200 responses
    r = requests.get(url, timeout=10)
    if r.status_code == 200:
        with open(os.path.join(path, str(i) + '.jpg'), 'wb') as fimg:
            fimg.write(r.content)


def creatFile(dirname):
    # ./pic/<resource_id>, created if it does not exist yet
    path = os.path.join('./pic', str(dirname))
    os.makedirs(path, exist_ok=True)
    return path


if __name__ == '__main__':
    gri = getGirlsData()
    WriteDB(gri)
```
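The scraper declares `executor = threading.BoundedSemaphore(10)` but downloads images one at a time. If you want the intended cap of 10 concurrent downloads, one option is `concurrent.futures.ThreadPoolExecutor` with `max_workers=10`. This is a swapped-in technique, not the author's code. In the sketch below the `fetch` parameter is a hypothetical hook: in the scraper it would wrap `requests.get(url, timeout=10)` and return the body on HTTP 200, or `None` otherwise. Passing it in keeps the download logic testable without network access.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def save_image(fetch, url, path, name):
    """Download one image via fetch(url) and save it as <path>/<name>.jpg.

    fetch returns the image bytes, or None to skip (e.g. a non-200 response).
    Returns the path written, or None if skipped.
    """
    content = fetch(url)
    if content is None:
        return None
    target = os.path.join(path, '%s.jpg' % name)
    with open(target, 'wb') as f:
        f.write(content)
    return target


def download_gallery(fetch, url_template, resource_id, totals, path, max_workers=10):
    """Download images 1..totals with at most max_workers running at once."""
    os.makedirs(path, exist_ok=True)
    jobs = [(url_template % (resource_id, '%d.jpg' % i), i)
            for i in range(1, int(totals) + 1)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(save_image, fetch, url, path, i) for url, i in jobs]
        # result() waits for each job and re-raises any exception from its thread
        return [f.result() for f in futures]

# Possible wiring into the scraper (assumes requests and the names defined there):
# def fetch(url):
#     r = requests.get(url, timeout=10)
#     return r.content if r.status_code == 200 else None
# download_gallery(fetch, gilsImgUrl, resource_id, totals, creatFile(resource_id))
```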

4. Run output and results