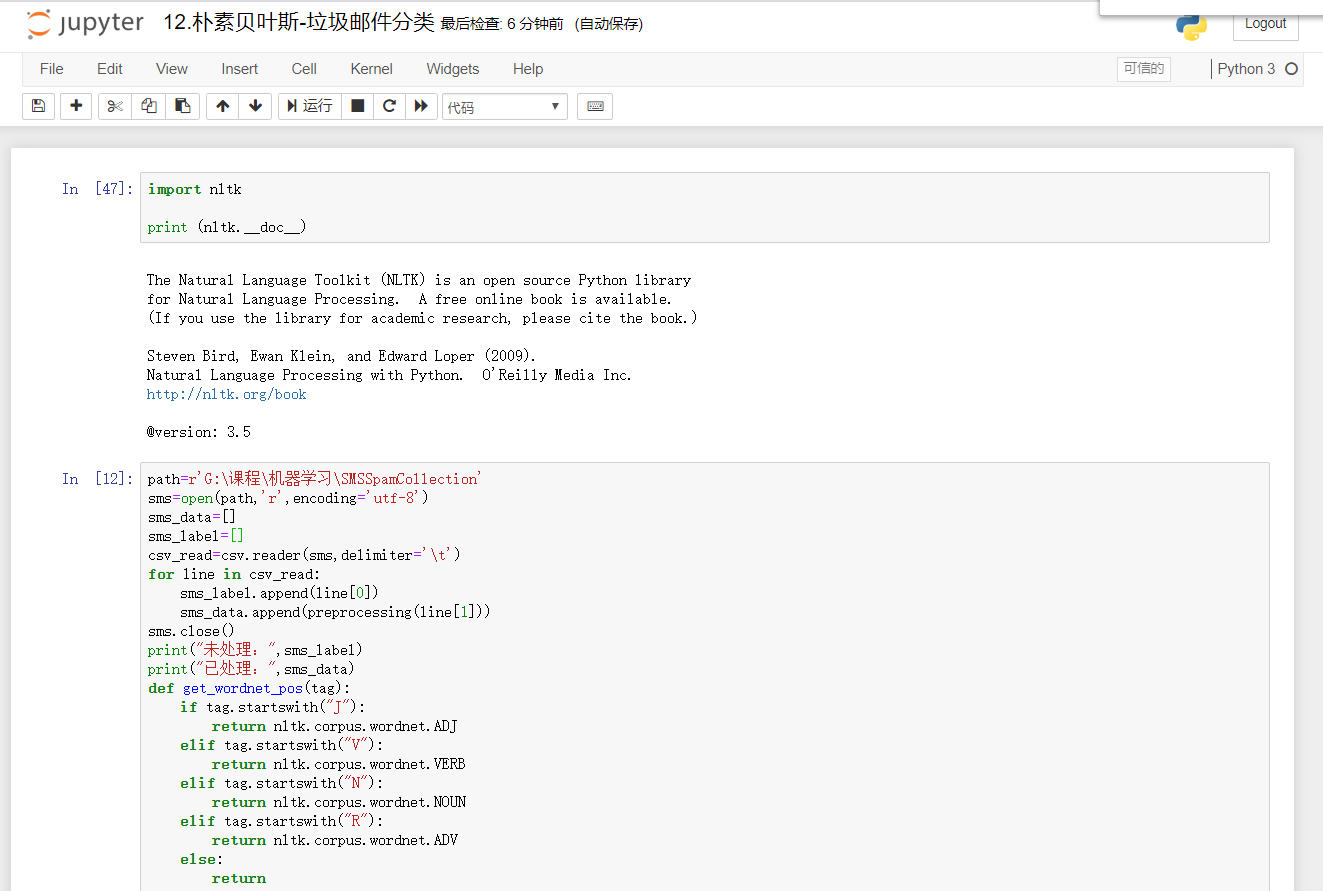

1. Load the data

2. Preprocess the data

3. Split the data into training and test sets

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=target)
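To illustrate what `stratify` does, here is a minimal sketch on made-up data. The `data` and `target` values are hypothetical placeholders, not the email dataset. It checks that the spam-to-ham ratio of the full label array is preserved in both subsets.

```python
from collections import Counter

from sklearn.model_selection import train_test_split

# Hypothetical toy data: 20 messages, 25% of them labelled "spam".
data = [f"message {i}" for i in range(20)]
target = ["spam"] * 5 + ["ham"] * 15

# stratify receives the full label array, so both subsets keep the 1:3 ratio.
x_train, x_test, y_train, y_test = train_test_split(
    data, target, test_size=0.2, random_state=0, stratify=target
)

train_counts = Counter(y_train)
test_counts = Counter(y_test)
print("train:", train_counts)
print("test: ", test_counts)
```

Note that `stratify` must be given labels that exist before the split (here `target`); `y_train` is only defined once `train_test_split` has returned.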

4. Text feature extraction

sklearn.feature_extraction.text.CountVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

Observe how each email relates to its vector

Map a vector back to the words of its email

4. Model selection

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

Explain why this model was chosen.
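One common line of reasoning, offered here as a sketch and not as the expected answer: `MultinomialNB` models non-negative, count-like features such as word counts or TF-IDF weights and accepts the sparse matrix directly, whereas `GaussianNB` assumes each feature is normally distributed within a class and requires a dense array. The toy `texts` and `labels` below are hypothetical.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import GaussianNB, MultinomialNB

# Hypothetical toy data, not the real email corpus.
texts = [
    "win free cash prize",
    "free prize claim now",
    "lunch meeting tomorrow",
    "project report attached",
]
labels = [1, 1, 0, 0]  # 1 = spam, 0 = ham

X = TfidfVectorizer().fit_transform(texts)  # sparse, non-negative features

# MultinomialNB can be trained on the sparse matrix as-is.
m_model = MultinomialNB().fit(X, labels)

# GaussianNB needs a dense array, which can be costly for large vocabularies.
g_model = GaussianNB().fit(X.toarray(), labels)

m_pred = m_model.predict(X)
g_pred = g_model.predict(X.toarray())
print("MultinomialNB:", m_pred)
print("GaussianNB:   ", g_pred)
```

Predictions on four training messages say nothing about real accuracy; the point is only the difference in input assumptions between the two models.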

5. Model evaluation: confusion matrix and classification report

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_predict)  # use a separate name so the imported function is not overwritten

Explain what the confusion matrix means.
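As a small worked illustration, the sketch below builds a binary confusion matrix from hypothetical labels (1 = spam, 0 = ham). In scikit-learn, rows are the true classes and columns are the predicted classes, so for labels 0 and 1 the layout is `[[TN, FP], [FN, TP]]`.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels: 1 = spam (positive), 0 = ham (negative).
y_true = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred = [0, 1, 0, 1, 1, 0, 1, 0]

cm = confusion_matrix(y_true, y_pred)

# Rows are true classes, columns are predicted classes:
# [[TN, FP],
#  [FN, TP]]
tn, fp, fn, tp = cm.ravel()
print(cm)
print("TN:", tn, "FP:", fp, "FN:", fn, "TP:", tp)
```

Here one ham message is wrongly flagged as spam (FP) and one spam message slips through as ham (FN); the diagonal holds the correctly classified messages.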

from sklearn.metrics import classification_report

Explain what accuracy, precision, recall, and the F-score each represent.
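The four metrics can be computed by hand from the counts of true and false positives and negatives. The sketch below does so on hypothetical labels (1 = spam as the positive class) and cross-checks the results against the scikit-learn functions.

```python
from sklearn.metrics import (
    accuracy_score,
    f1_score,
    precision_score,
    recall_score,
)

# Hypothetical labels: 1 = spam (positive), 0 = ham (negative).
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]

pairs = list(zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in pairs)  # spam predicted as spam
fp = sum(t == 0 and p == 1 for t, p in pairs)  # ham predicted as spam
fn = sum(t == 1 and p == 0 for t, p in pairs)  # spam predicted as ham
tn = sum(t == 0 and p == 0 for t, p in pairs)  # ham predicted as ham

accuracy = (tp + tn) / len(y_true)   # share of all predictions that are correct
precision = tp / (tp + fp)           # share of predicted spam that really is spam
recall = tp / (tp + fn)              # share of real spam that was caught
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two

print(accuracy, precision, recall, f1)
print(
    accuracy_score(y_true, y_pred),
    precision_score(y_true, y_pred),
    recall_score(y_true, y_pred),
    f1_score(y_true, y_pred),
)
```

`classification_report` prints precision, recall, and F1 for each class, so the values above correspond to the row for class 1.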

6. Comparison and summary

If CountVectorizer is used to generate the text features instead of TfidfVectorizer, how do the results compare?
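One way to set up this comparison is to train the same classifier with each vectorizer on an identical split. The helper `compare_vectorizers` and the toy `texts` and `labels` below are hypothetical names introduced for illustration; the real emails and labels should be substituted before drawing any conclusion.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline


def compare_vectorizers(texts, labels, test_size=0.2, random_state=0):
    """Train MultinomialNB with each vectorizer on the same split.

    Returns a dict mapping the vectorizer name to its test accuracy.
    """
    x_train, x_test, y_train, y_test = train_test_split(
        texts, labels, test_size=test_size,
        random_state=random_state, stratify=labels,
    )
    candidates = [("count", CountVectorizer()), ("tfidf", TfidfVectorizer())]
    scores = {}
    for name, vectorizer in candidates:
        # The pipeline fits the vectorizer on the training texts only.
        model = make_pipeline(vectorizer, MultinomialNB())
        model.fit(x_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(x_test))
    return scores


# Hypothetical toy data; replace with the real emails and labels.
texts = [
    "win free cash now", "free prize waiting", "claim your free cash",
    "cheap loans win big", "urgent prize claim",
    "meeting moved to noon", "see you at lunch", "report is attached",
    "call me tomorrow", "notes from the meeting",
]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

scores = compare_vectorizers(texts, labels)
print(scores)
```

With only ten messages the scores are not meaningful. On the full dataset, comparing the two confusion matrices and classification reports alongside accuracy gives a fuller picture.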

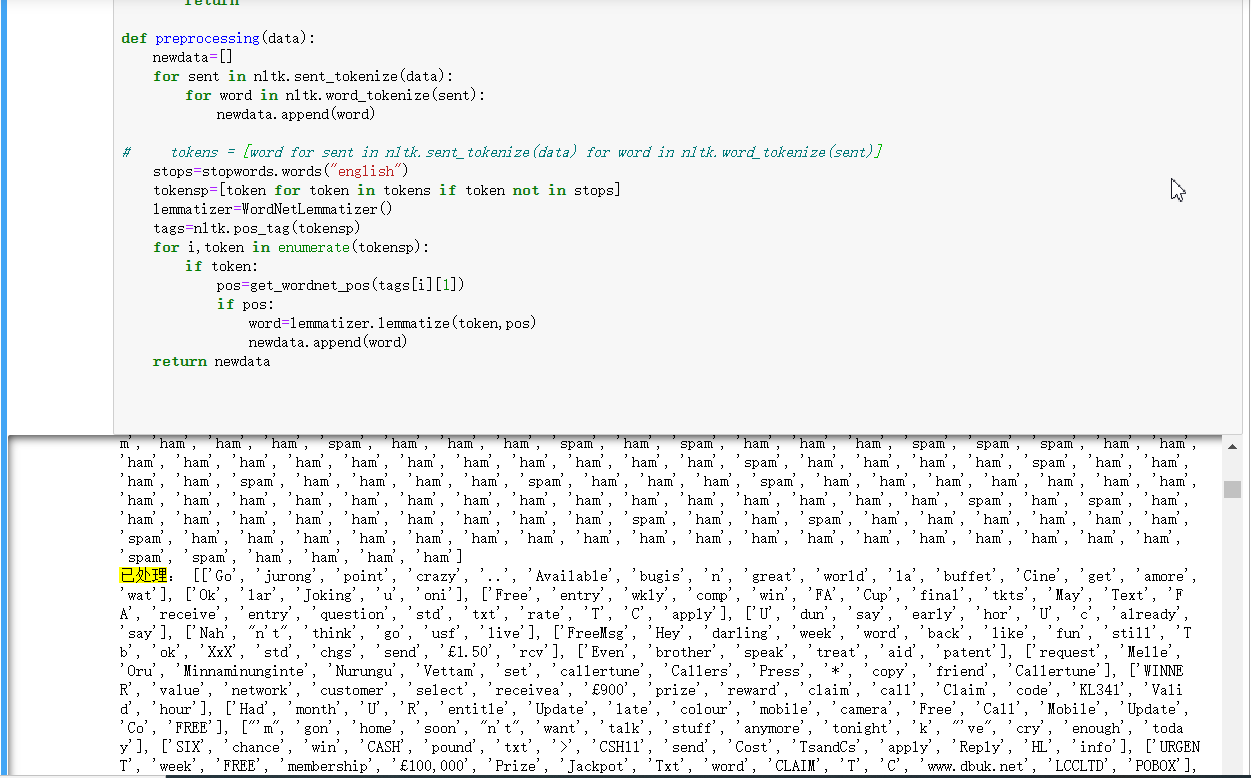

# Text feature extraction (vectorization)

tfidf = TfidfVectorizer()

X_train = tfidf.fit_transform(x_train).toarray()

X_test = tfidf.transform(x_test).toarray()

# Map a vector back to the words of its email

import numpy as np

a = np.flatnonzero(X_train[0])  # takes an array and returns the indices of its nonzero elements

# Words corresponding to the nonzero elements

b = tfidf.vocabulary_

key_list = []

for key, value in b.items():
    if value in a:
        key_list.append(key)

print("Words corresponding to the nonzero elements:", key_list)

# 5. Build, train, and predict with the model

m_model = MultinomialNB()

m_model.fit(X_train, y_train)

y_m_pre = m_model.predict(X_test)

# Model evaluation

# Naive Bayes

print("Naive Bayes")

m_cm = confusion_matrix(y_test, y_m_pre)

print("Confusion matrix:\n", m_cm)

m_cr = classification_report(y_test, y_m_pre)

print("Classification report:\n", m_cr)

print("Model accuracy:", (m_cm[0][0] + m_cm[1][1]) / np.sum(m_cm))