Ugh, this site blocks crawlers very aggressively, which made this hard to finish.

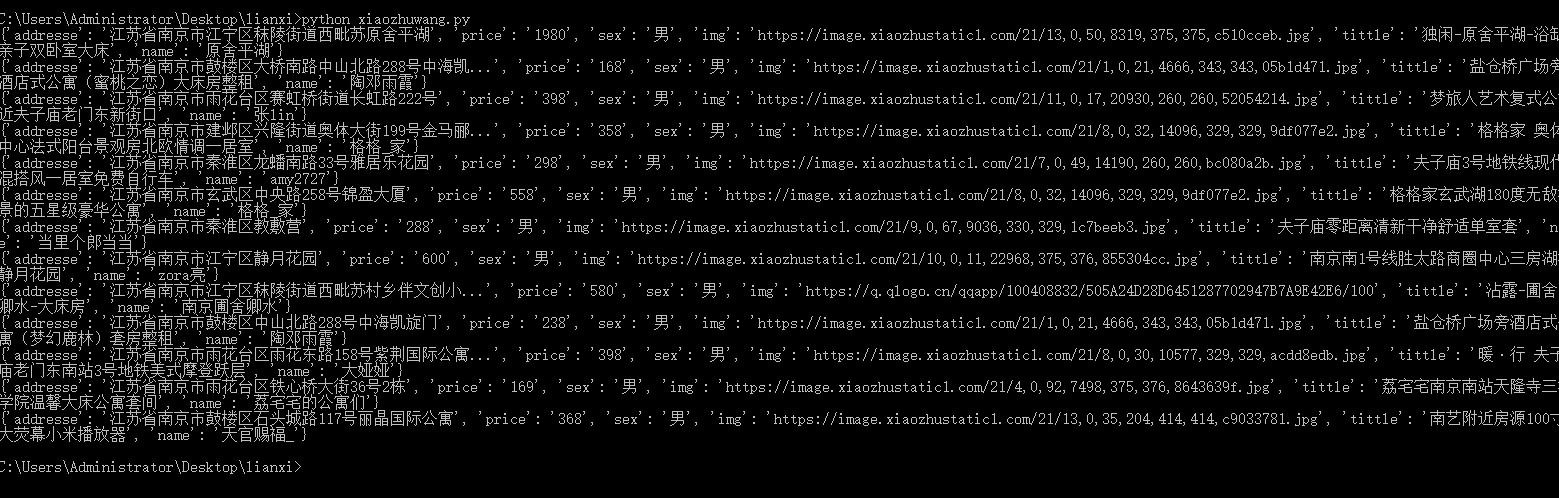

```python
# Crawl 13 pages of short-term rental listings for the Nanjing area on xiaozhu.com
# First attempt: only print the detail-page links found on one search page
# Import the BeautifulSoup, requests, and time libraries
from bs4 import BeautifulSoup
import requests
import time

# Add a request header: a User-Agent that poses as a desktop browser
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
}


def judgment_sex(class_name):
    # The host's gender is inferred from the CSS class of the icon element
    if class_name == ['member_icol']:
        return 'female'
    else:
        return 'male'


def get_links(url):
    # Fetch one search-result page and print the link of every listing on it
    web_data = requests.get(url, headers=headers)
    soup = BeautifulSoup(web_data.text, 'lxml')
    links = soup.select('#page_list > ul > li > a')
    for link in links:
        href = link.get('href')
        print(href)


if __name__ == '__main__':
    urls = 'http://nj.xiaozhu.com/search-duanzufang-p2-0/'
    get_links(urls)
```

```python
# Crawl 13 pages of short-term rental listings for the Nanjing area on xiaozhu.com
# Import the BeautifulSoup, requests, and time libraries
from bs4 import BeautifulSoup
import requests
import time

# Add a request header: a User-Agent that poses as a browser
headers = {
    'User-Agent': 'Nokia6600/1.0 (3.42.1) SymbianOS/7.0s Series60/2.0 Profile/MIDP-2.0 Configuration/CLDC-1.0'
    # 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
}


def judgment_sex(class_name):
    # The host's gender is inferred from the CSS class of the icon element
    if class_name == ['member_icol']:
        return 'female'
    else:
        return 'male'


def get_links(url):
    # Fetch one search-result page and visit every listing linked from it
    web_data = requests.get(url, headers=headers)
    soup = BeautifulSoup(web_data.text, 'lxml')
    links = soup.select('#page_list > ul > li > a')
    # href = links[0].get('href')
    # time.sleep(6)
    # get_info(href)
    for link in links:
        href = link.get('href')
        time.sleep(10)  # wait between requests to reduce the chance of being blocked
        get_info(href)


def get_info(url):
    # Fetch one listing page and print its details
    wb_data = requests.get(url, headers=headers)
    print(wb_data)  # shows the HTTP status, e.g. <Response [200]>
    print('666666666666666666666666666666')  # visual separator in the output
    soup = BeautifulSoup(wb_data.text, 'lxml')
    # tittles = soup.select('div.pho_info > h4')
    # print(tittles)

    tittles = soup.select(' div.pho_info > h4')
    addresses = soup.select('span.pr5')
    prices = soup.select('#pricePart > div.day_l > span')
    imgs = soup.select('#floatRightBox > div.js_box.clearfix > div.member_pic > a > img')
    names = soup.select('#floatRightBox > div.js_box.clearfix > div.w_240 > h6 > a')
    sexs = soup.select('#floatRightBox > div.js_box.clearfix > div.member_pic > div')
    for tittle, addresse, price, img, name, sex in zip(tittles, addresses, prices, imgs, names, sexs):
        data = {
            'tittle': tittle.get_text().strip(),
            'addresse': addresse.get_text().strip(),
            'price': price.get_text(),
            'img': img.get("src"),
            'name': name.get_text(),
            'sex': judgment_sex(sex.get("class"))
        }
        print(data)


if __name__ == '__main__':
    # range(1, 14) yields pages 1 through 13
    urls = ['http://nj.xiaozhu.com/search-duanzufang-p{}-0/'.format(number) for number in range(1, 14)]
    for single_url in urls:
        get_links(single_url)
        # print(single_url)
```
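Since the blocking is the main obstacle, one thing that might help is retrying a blocked request after progressively longer pauses instead of sleeping a fixed 10 seconds. The sketch below is only an illustration: `backoff_delays` and `fetch_with_retry` are hypothetical helper names that are not part of the script above, and treating any status other than 200 as "blocked" is an assumption about how the site responds. I have not verified that this actually gets past the blocking.

```python
import random
import time


def backoff_delays(base=10.0, factor=2.0, retries=3):
    """Return the wait in seconds before each retry: base, base*factor, ..."""
    return [base * factor ** attempt for attempt in range(retries)]


def fetch_with_retry(fetch, url, delays, sleep=time.sleep):
    """Call fetch(url) until it reports HTTP 200 or the delays run out.

    `fetch` is any callable that returns an object with a `status_code`
    attribute (hypothetical interface, chosen so the helper can be tried
    without touching the network).
    """
    response = fetch(url)
    for delay in delays:
        if response.status_code == 200:
            break
        # Add up to one second of jitter so requests are not perfectly periodic.
        sleep(delay + random.uniform(0, 1))
        response = fetch(url)
    return response
```

With the script above it could be called as `fetch_with_retry(lambda u: requests.get(u, headers=headers), url, backoff_delays())`. The last response is returned even if every attempt failed, so the caller should still check `status_code` before parsing.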