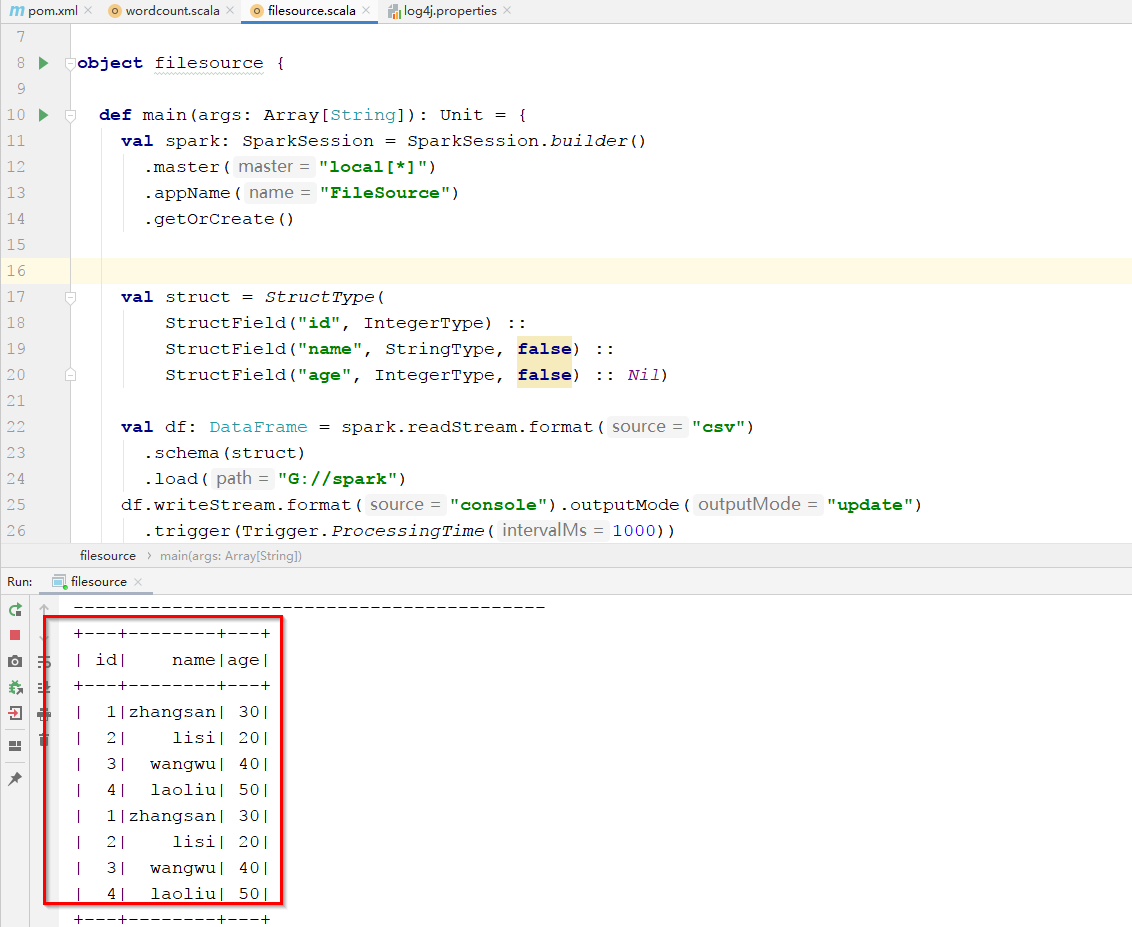

```scala
package com.kpwong.structure.streaming

import org.apache.spark.sql.streaming.Trigger
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

object filesource {
  def main(args: Array[String]): Unit = {
    val spark: SparkSession = SparkSession.builder()
      .master("local[*]")
      .appName("FileSource")
      .getOrCreate()

    import spark.implicits._

    // File-based streaming sources do not infer the schema by default,
    // so the CSV columns are declared explicitly
    val struct = StructType(
      StructField("id", IntegerType) ::
        StructField("name", StringType, false) ::
        StructField("age", IntegerType, false) :: Nil)

    // Watch the directory: each new CSV file that appears in it becomes new input
    val df: DataFrame = spark.readStream.format("csv")
      .schema(struct)
      .load("G://spark")

    // Print each micro-batch to the console, with a trigger every 1000 ms
    df.writeStream.format("console").outputMode("update")
      .trigger(Trigger.ProcessingTime(1000))
      .start()
      .awaitTermination()

    spark.stop()
  }
}
```
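To feed the query while it runs, new CSV files have to appear in the watched directory. The Structured Streaming programming guide notes that files must be placed there atomically, which on most file systems means writing them somewhere else first and then moving them in. The following is a minimal sketch of such a helper using plain `java.nio`. The object name `CsvDropper`, the staging directory and the sample rows are all hypothetical. It assumes the staging directory is on the same volume as the watched directory, because `ATOMIC_MOVE` fails across file systems. The reader above does not set the `header` option (default `false`), so the files hold data rows only, in `id,name,age` order.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path, StandardCopyOption}

// Hypothetical helper: not part of the original post or of Spark's API
object CsvDropper {

  /** Writes `rows` to a temp file in `stagingDir`, then moves it into
    * `watchedDir` in one atomic step so the file source never reads a
    * half-written file. Returns the final path inside `watchedDir`. */
  def dropCsv(stagingDir: Path, watchedDir: Path, fileName: String, rows: Seq[String]): Path = {
    Files.createDirectories(stagingDir)
    Files.createDirectories(watchedDir)

    // One CSV record per line, no header row (matches header=false on the reader)
    val tmp = stagingDir.resolve(fileName + ".tmp")
    Files.write(tmp, rows.mkString("", "\n", "\n").getBytes(StandardCharsets.UTF_8))

    // ATOMIC_MOVE requires both directories to be on the same file system
    Files.move(tmp, watchedDir.resolve(fileName), StandardCopyOption.ATOMIC_MOVE)
  }

  def main(args: Array[String]): Unit = {
    // Demo directories under a temp folder; in practice `watched` would be
    // the directory passed to .load(...) in the streaming job
    val root = Files.createTempDirectory("filesource-demo")
    val watched = root.resolve("spark")
    val staging = root.resolve("staging")

    // Hypothetical sample rows in id,name,age order
    val out = dropCsv(staging, watched, "part-0001.csv", Seq("1,zhangsan,20", "2,lisi,21"))
    println(s"dropped $out")
  }
}
```

With the streaming job running, calling `dropCsv` with `watchedDir` pointing at the directory given to `.load(...)` should produce a new micro-batch in the console on a following trigger.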

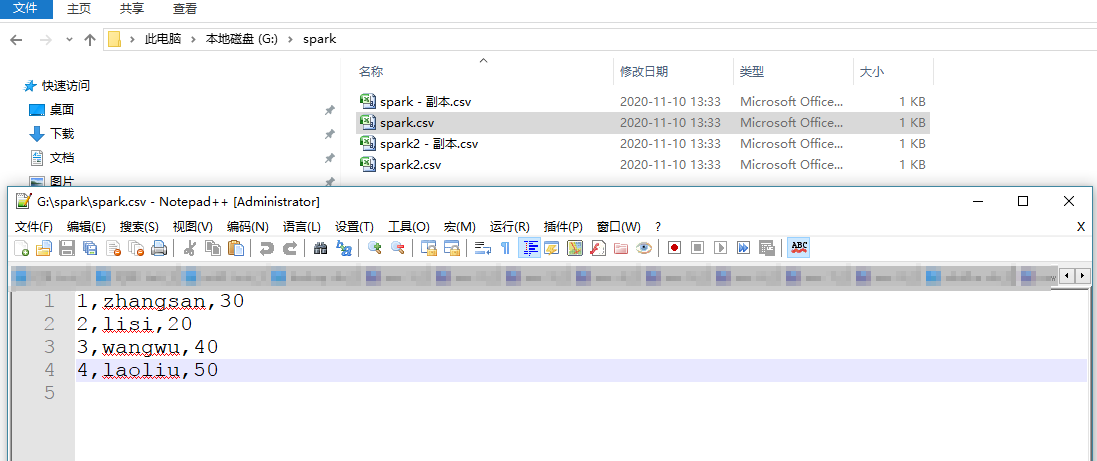

Format of the data files in the `spark` directory:

Output: